Integrating Vision-Language Models into Agentic RAG Systems with ColPali

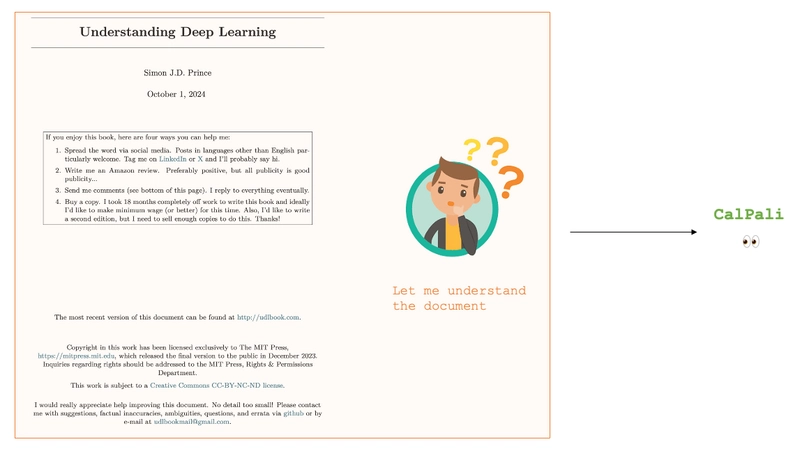


In this tutorial, we will walk through a relatively new technique for building a RAG pipeline with a vision-based model, based on a paper called ColPali (published in June 2024). In the rapidly evolving world of AI, we're constantly seeking more natural ways for machines to understand and process information. Traditional RAG systems have been transformative, but they often struggle with multimodal content (i.e., documents that contain a mix of text, images, tables, and more).

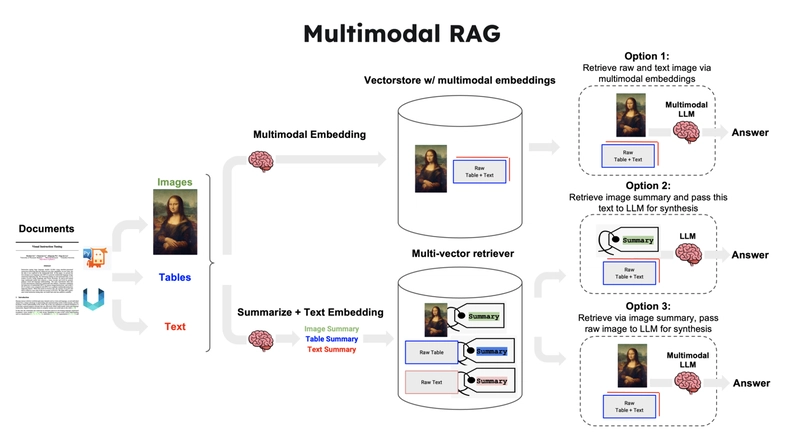

Before we dive into this new vision-based retrieval technique, it's worth briefly revisiting the challenges faced by traditional RAG systems when dealing with multimodal data. This context will help us better appreciate the value that a vision-based retrieval model like ColPali offers.

The Challenge with Traditional RAG

Imagine you're trying to find information in a textbook that contains diagrams, charts, equations, and text. The traditional RAG approach would typically require:

- Extracting text from the document

- Processing images separately

- Processing tables separately

- Trying to understand tables in isolation

- Somehow stitching all this information together

This fragmented approach loses vital context. A diagram often explains concepts that would take paragraphs of text, and the layout of information itself can convey meaning. When working with educational content, research papers, or technical documentation, this limitation becomes particularly problematic.

Traditional RAG systems struggle with this. Extracting text from visuals and then feeding it into an LLM strips away structural nuances. But what if we could process raw visual documents directly and retrieve information based on visual relevance?

That’s exactly what ColPali enables.

ColPali: Efficient Document Retrieval with Vision Language Models

Vision-driven RAG systems tackle this challenge differently. Instead of breaking a document into separate components, they process pages as they appear visually, just like humans do when reading. This approach preserves spatial relationships and visual context, both of which are often essential for deep understanding.

ColPali uses vision-language models (VLMs) to enhance document processing, bypassing traditional text extraction steps and directly analyzing documents as they are.

In this tutorial, we'll explore how to build such a system using:

- ColPali - A multimodal document retrieval model that processes documents visually [for retrieval]

- Amazon Nova - A powerful vision language model for analyzing retrieved content [for generation]

- CrewAI - An agent framework for orchestrating complex AI workflows [for building the Agentic RAG based system]

Let's break down how this system works.

How ColPali Enhances Document Understanding

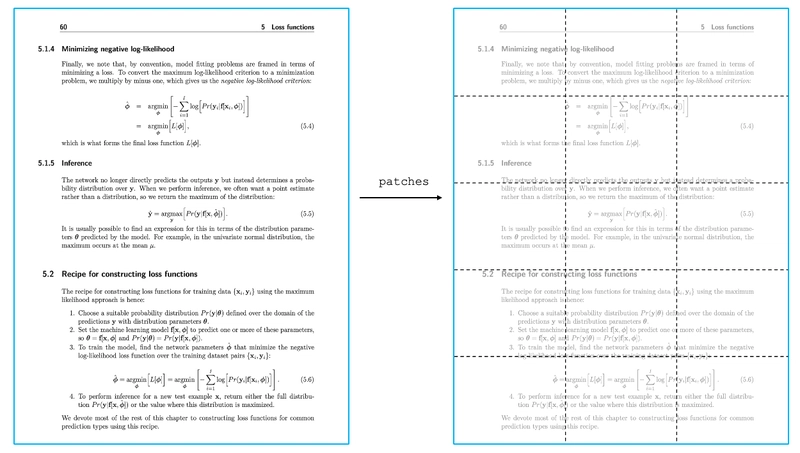

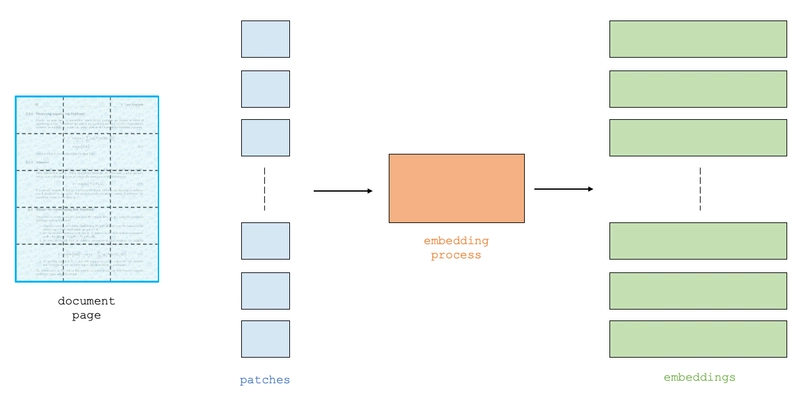

At its core, ColPali transforms each page of a document into a set of embeddings (one per image patch), similar in spirit to how CLIP embeds images, but optimized for documents. It views each page holistically through a vision-language model. Here's how it works:

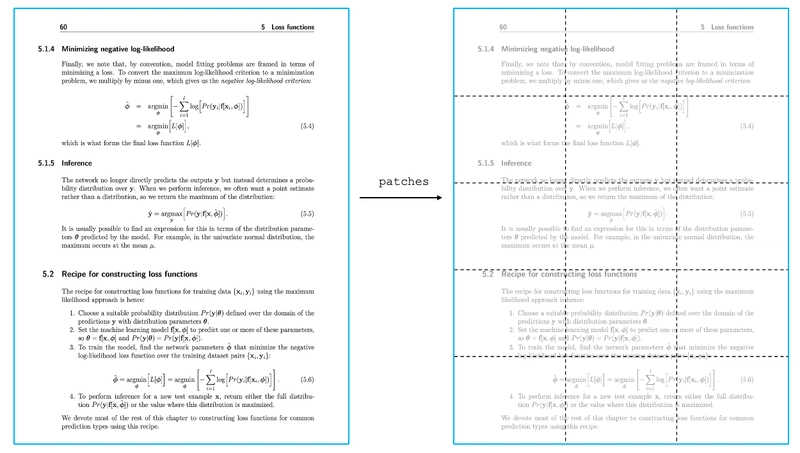

- Patch Creation: Documents are divided into manageable image patches, simplifying complex page layouts into smaller, processable units.

- Generating Brain Food: Each patch is converted into embeddings, rich numerical representations that capture both visual and contextual data. These embeddings serve as the foundation for understanding and retrieving relevant content.

Detour: Vision Language Models (VLMs)

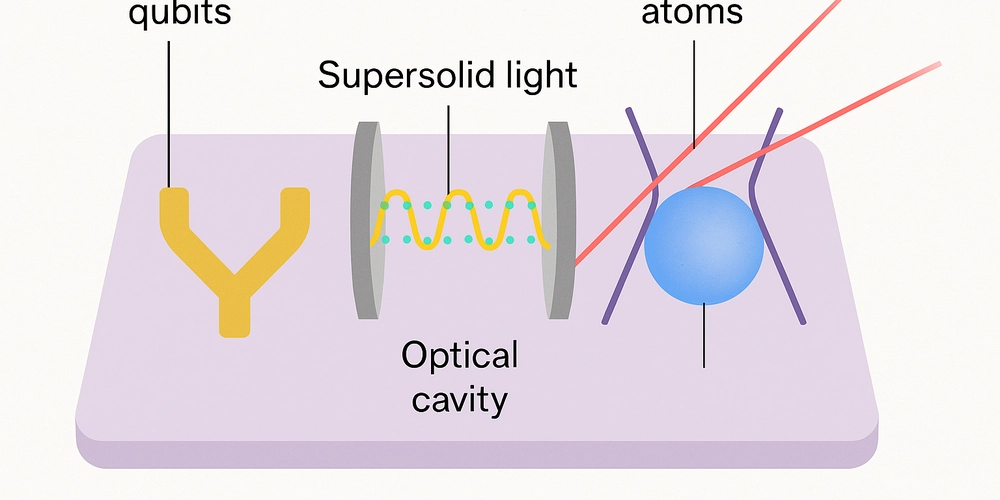

To fully grasp how ColPali handles embedding generation, it’s essential to understand Vision-Language Models (VLMs), models that excel at integrating visual data with text. For a deep dive into VLMs, refer to this tutorial.

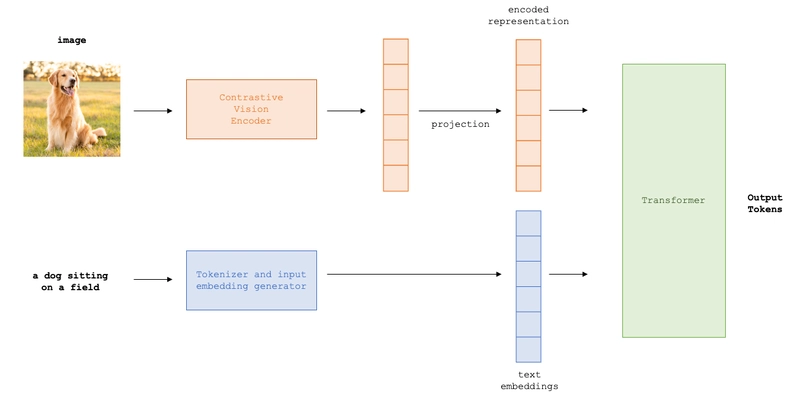

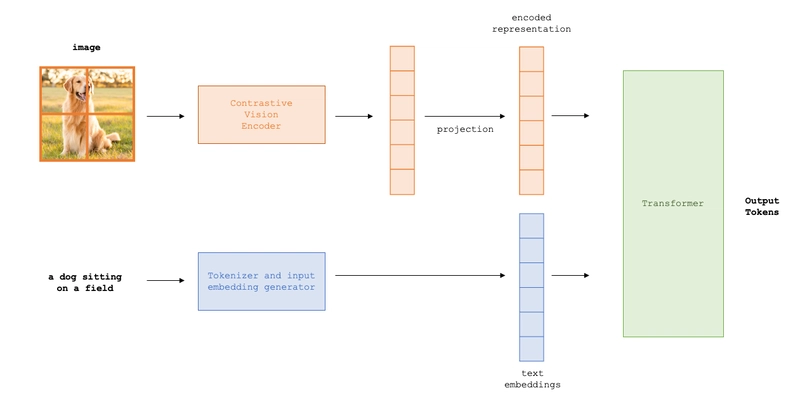

At a high level, a VLM consists of the following key components:

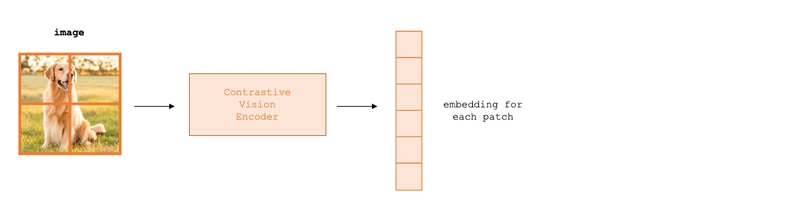

- Image Encoder: Breaks down images into patches and encodes each one into embeddings.

- Text Encoder: Simultaneously encodes any accompanying text into its own set of embeddings, preserving language-specific context.

Image Encoder

The Image Encoder component of a VLM breaks down images into smaller patches and processes each patch individually to generate embeddings. These embeddings represent the visual content in a format that the model can interpret and reason over.

- Patch Processing: Images are divided into patches, which are then individually fed into the encoder. This modular approach allows the model to focus on detailed aspects of each image segment, facilitating a deeper understanding of the overall visual content.

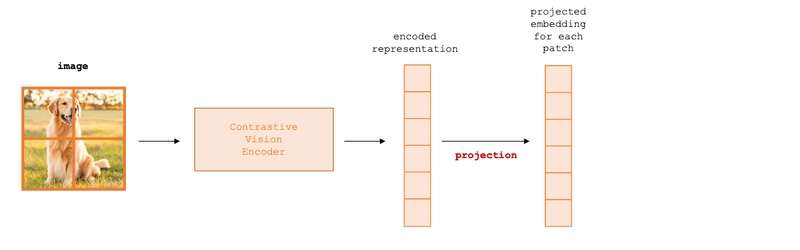

- Adapter Layer Transformation: After encoding, the output from the image encoder passes through an adapter (projection) layer. This layer maps the visual embeddings into the same embedding space, and the same dimensionality, as the language model's token embeddings, so the LLM can process image tokens alongside text tokens.
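To make the encoder-then-adapter flow concrete, here is a toy NumPy sketch. Everything in it is an assumption for illustration: random weights, made-up sizes (VISION_DIM, LLM_DIM), and a single linear layer plus tanh standing in for the image encoder. A real vision transformer also lets patches attend to one another, which this sketch omits; the point is only the shape flow from flattened patches to LLM-sized image tokens.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy sizes: all hypothetical, chosen only to make the shapes easy to follow
NUM_PATCHES = 16          # e.g. a 4x4 grid of patches
PATCH_DIM = 14 * 14 * 3   # one flattened 14x14 RGB patch
VISION_DIM = 64           # hypothetical image-encoder width
LLM_DIM = 96              # hypothetical language-model hidden size

def image_encoder(patches, w_enc):
    """Stand-in encoder: a linear layer + tanh applied to each patch independently."""
    return np.tanh(patches @ w_enc)

def adapter(vision_embeddings, w_adapter):
    """Adapter layer: linearly project vision features into the LLM's embedding space."""
    return vision_embeddings @ w_adapter

patches = rng.standard_normal((NUM_PATCHES, PATCH_DIM))
w_enc = rng.standard_normal((PATCH_DIM, VISION_DIM)) / np.sqrt(PATCH_DIM)
w_adapter = rng.standard_normal((VISION_DIM, LLM_DIM)) / np.sqrt(VISION_DIM)

vision_embeddings = image_encoder(patches, w_enc)     # one vector per patch
image_tokens = adapter(vision_embeddings, w_adapter)  # now at the LLM's width
print(vision_embeddings.shape, image_tokens.shape)    # (16, 64) (16, 96)
```

The adapter is the only piece that has to know about both models: it is what lets an off-the-shelf vision encoder and an off-the-shelf LLM be connected.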

Text Encoder

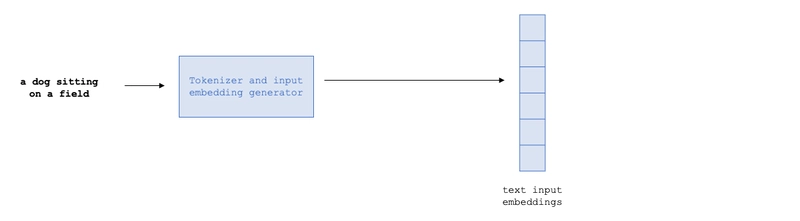

Parallel to the image encoding, the Text Encoder processes textual data. It converts text into a set of embeddings that encapsulate the semantic and syntactic nuances of the language.

- Text Processing: Text is input into the encoder, which then produces embeddings. These embeddings capture the textual context and are crucial for the model to understand and generate language-based responses.

Integration and Output Generation

The final stage in the VLM involves integrating the outputs from both the image and text encoders. This integration occurs within an LLM, where both sets of embeddings interact through the Transformer's attention mechanism.

- Contextual Interaction: The image and text token embeddings are combined and processed through the Transformer model. This interaction allows the model to contextualize the information from both modalities, enhancing its ability to generate accurate and relevant responses based on both text and visual inputs.
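A toy sketch of this integration step, again in NumPy with random weights and hypothetical sizes: a vocabulary lookup stands in for the text encoder, the image tokens are random stand-ins for adapter outputs, and the combined sequence goes through one single-head self-attention layer. No attention mask is applied here, so every token can attend to every other token; real VLMs differ in how they mask attention, so treat this as an illustration of the mechanism rather than any particular model.

```python
import numpy as np

rng = np.random.default_rng(1)
D = 32  # hypothetical shared hidden size

# Stand-in for adapter outputs: 6 image tokens already in the LLM's space
image_tokens = rng.standard_normal((6, D))

# Stand-in text encoder: a vocabulary lookup into an embedding table
vocab = {"what": 0, "is": 1, "shown": 2, "here": 3}
embedding_table = rng.standard_normal((len(vocab), D))
text_tokens = embedding_table[[vocab[w] for w in "what is shown here".split()]]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention without a mask."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(x.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

# Integration: one sequence of image tokens followed by text tokens
sequence = np.concatenate([image_tokens, text_tokens], axis=0)  # (10, D)
w_q, w_k, w_v = (rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(3))
contextualized, attn = self_attention(sequence, w_q, w_k, w_v)

# How much attention the last text token ("here") pays to the image tokens
print(contextualized.shape, round(float(attn[-1, :6].sum()), 3))
```

Each row of attn is a probability distribution over the whole mixed sequence, which is exactly how a text token can "look at" image regions.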

This comprehensive approach enables VLMs to perform complex tasks that require an understanding of both visual elements and textual information, making them ideal for tasks like multimodal RAG where nuanced document understanding is critical.

So, now that we've learned a bit about vision-language models, let's go back to ColPali and see how it processes the data and generates embeddings using a VLM.

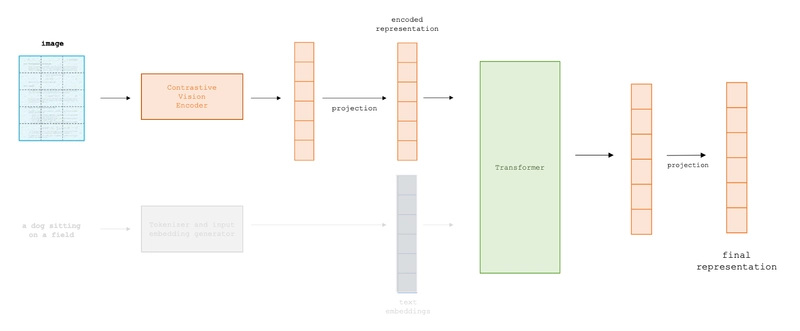

ColPali Embeddings Process

Remember how we started by dividing a document into patches? ColPali treats each page of a document as an image and divides it into a grid of patches, typically 32×32, resulting in 1024 patches per page.

Think of it like how your eyes scan a document, section by section. These patches are processed individually to capture local visual details, while also maintaining their spatial relationship to the entire page.
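The patch grid can be sketched in a few lines of NumPy. The 448×448 input resolution and 14×14-pixel patch size are assumptions based on the PaliGemma backbone ColPali builds on (448 / 14 = 32 patches per side); in practice ColPaliProcessor does this preprocessing for you. Note how each patch keeps its place on the page: patch k comes from grid row k // 32 and column k % 32.

```python
import numpy as np

IMAGE_SIZE = 448  # assumed input resolution of the vision encoder
PATCH_SIZE = 14   # assumed patch size in pixels

def patchify(image, patch_size):
    """Split an (H, W, C) image into (num_patches, patch_size*patch_size*C),
    ordered row by row from the top-left corner."""
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0
    grid_h, grid_w = h // patch_size, w // patch_size
    patches = image.reshape(grid_h, patch_size, grid_w, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)  # (grid_h, grid_w, p, p, c)
    return patches.reshape(grid_h * grid_w, patch_size * patch_size * c), (grid_h, grid_w)

page = np.zeros((IMAGE_SIZE, IMAGE_SIZE, 3), dtype=np.uint8)  # placeholder page image
patches, grid = patchify(page, PATCH_SIZE)
print(grid, patches.shape)  # (32, 32) (1024, 588)
```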

Each of these patches is converted into a rich embedding - a numerical representation that captures both visual and textual information. This is achieved through a vision encoder and a language model:

- The vision encoder breaks down image patches into initial embeddings

- A transformer-based LLM refines these embeddings to capture semantic information

- A final linear layer projects each refined embedding down to a compact 128-dimensional vector
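ColPali ends this stage by projecting each token's final hidden state to a 128-dimensional vector and L2-normalizing it. The NumPy sketch below mimics that step with random weights; HIDDEN_DIM = 2048 (the width of a Gemma-2B language model) is an assumption, and in the real pipeline this happens inside colpali_model(...). One useful consequence: after normalization, the dot product of two embeddings equals their cosine similarity.

```python
import numpy as np

rng = np.random.default_rng(0)
HIDDEN_DIM = 2048  # assumed hidden size of the language model
EMBED_DIM = 128    # size of each per-patch (or per-token) embedding

def project_and_normalize(hidden_states, w_proj):
    """Project every token's hidden state to EMBED_DIM and L2-normalize it."""
    projected = hidden_states @ w_proj
    return projected / np.linalg.norm(projected, axis=-1, keepdims=True)

# Toy stand-ins: 1024 refined patch states for one page and a random projection
hidden_states = rng.standard_normal((1024, HIDDEN_DIM)).astype(np.float32)
w_proj = (rng.standard_normal((HIDDEN_DIM, EMBED_DIM)) / np.sqrt(HIDDEN_DIM)).astype(np.float32)

page_embeddings = project_and_normalize(hidden_states, w_proj)
print(page_embeddings.shape)  # (1024, 128)
```

So a page is stored not as one vector but as a matrix of about a thousand small vectors, which is why the vector database later needs multivector support.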

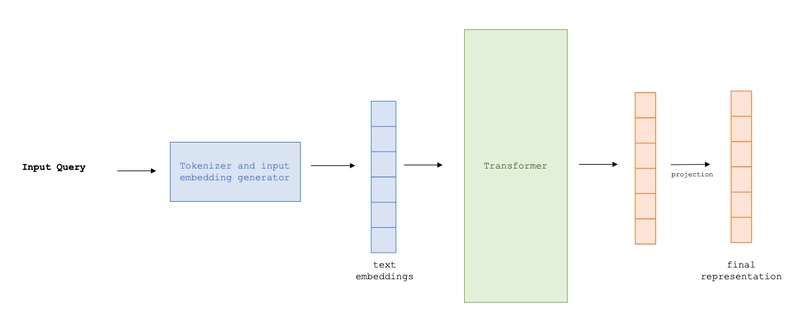

Query Time: Similarity Scoring

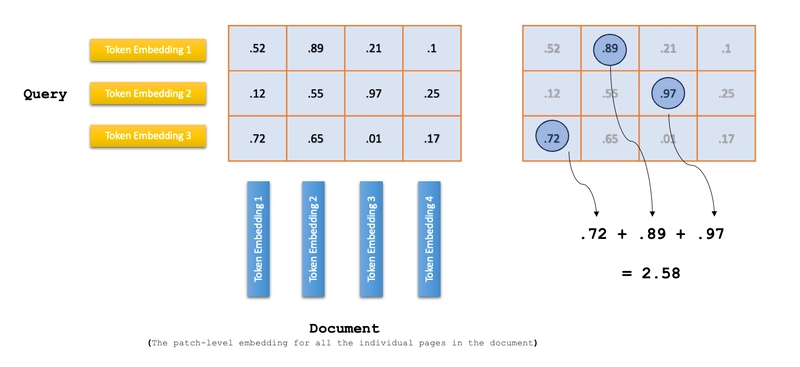

When a user query comes in, ColPali calculates similarity scores between the query and document patches of each page through a scoring matrix.

Step 1: Generating and Projecting Tokens

1) Token Generation: Initially, tokens and their embeddings are generated for the query. This involves transforming the text of the query into a format that the system can process and match against document embeddings.

2) Projection: These tokens are then passed through the same transformer model used during the embedding process. This step involves projecting the tokens into the same embedding space as the document patches, ensuring that the subsequent comparisons are meaningful and accurate.

Step 2: Computing the ColBERT Scoring Matrix

At this point, we have two things:

- Query embeddings

- Embeddings of all pages (at patch level granularity)

The next critical step involves computing the ColBERT scoring matrix. Here's how it works:

1) Embedding Matchup: The scoring matrix is essentially a grid where each row corresponds to a query token and each column to a document patch. The entries in the matrix represent the similarity scores, typically calculated as the dot product between the query token embeddings and the document patch embeddings.

2) Score Maximization: For each query token, the system keeps only the maximum similarity score across all of the page's patches. This way, each query token is credited with its single best-matching region of the page, and unrelated patches don't dilute the score.

3) Summation for Final Score: The maximum scores for each query token are then summed up to produce a final score for each document page. This cumulative score represents the overall relevance of the page to the query.
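The three steps above are ColBERT's late-interaction (MaxSim) scoring, and they fit in a few lines of NumPy. This is a sketch of the scoring rule, not ColPali's internal code; later in this tutorial Qdrant computes the same quantity for us through its MAX_SIM multivector comparator. The tiny hand-made vectors in the example are assumptions chosen so the arithmetic can be checked by hand.

```python
import numpy as np

def maxsim_score(query_emb, page_emb):
    """Late-interaction (MaxSim) score between one query and one page.

    query_emb: (num_query_tokens, dim); page_emb: (num_patches, dim).
    """
    scoring_matrix = query_emb @ page_emb.T      # step 1: rows = query tokens, cols = patches
    best_per_token = scoring_matrix.max(axis=1)  # step 2: best patch for each query token
    return best_per_token.sum()                  # step 3: sum over query tokens

def score_pages(query_emb, pages):
    """Score every page (a list of patch-embedding arrays) against the query."""
    return np.array([maxsim_score(query_emb, page) for page in pages])

# Two query tokens and two pages with two patches each (unit vectors, so dot = cosine)
query = np.array([[1.0, 0.0], [0.0, 1.0]])
page_a = np.array([[1.0, 0.0], [0.6, 0.8]])
page_b = np.array([[0.8, 0.6], [0.6, 0.8]])

scores = score_pages(query, [page_a, page_b])
print(np.round(scores, 3))  # [1.8 1.6]
```

Page A wins because it has one patch that matches the first query token perfectly, even though page B's patches are "moderately good" for both tokens.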

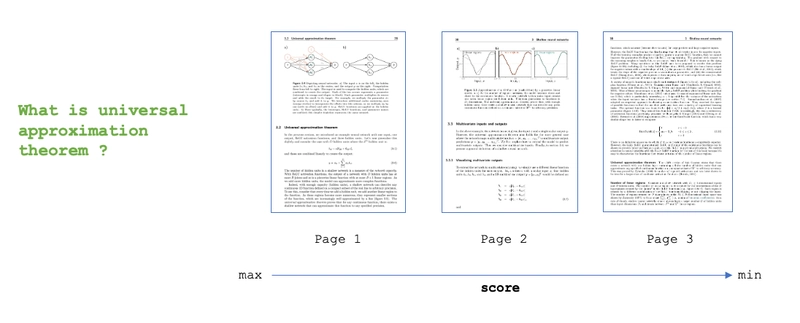

Step 3: Selecting Top-K Pages

Based on the scores computed:

1) Ranking and Retrieval: The pages are ranked according to their scores, and the top-scoring pages are selected. This top-K selection narrows the collection down to the few pages most likely to contain the information the query is looking for.

2) Response Generation: These top pages are then fed, along with the query, into a multimodal language model like Amazon Nova. The model uses both the textual and the visual cues from these pages to generate detailed and contextually accurate responses.
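Ranking and top-K selection is a plain sort over the page scores. A minimal NumPy sketch, assuming the scores have already been computed (for example with a MaxSim function): np.argpartition finds the k best in linear time, and only those k are then sorted. In the pipeline later in this tutorial, Qdrant handles this through the limit parameter of query_points.

```python
import numpy as np

def top_k_pages(scores, k):
    """Return the indices of the k highest-scoring pages, best first."""
    scores = np.asarray(scores)
    k = min(k, len(scores))
    if k == 0:
        return np.array([], dtype=int)
    candidates = np.argpartition(-scores, k - 1)[:k]    # the k best, in arbitrary order
    return candidates[np.argsort(-scores[candidates])]  # sort just those k, descending

page_scores = np.array([0.2, 0.9, 0.5, 0.7])
print(top_k_pages(page_scores, k=2))  # [1 3]
```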

If you want to learn more about ColPali, you can refer to the official documentation. I also recommend reading the 9-part blog series on RAG on DailyDoseofDS by Avi Chawla and Akshay Pachaar.

Ok, enough of theory. Let's see it in action :)

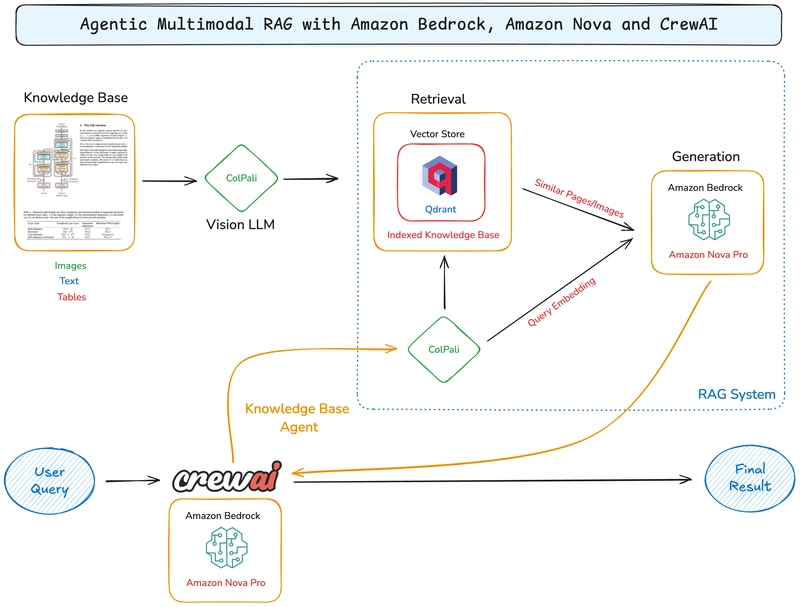

Building an Agentic RAG System with CrewAI

Having a powerful retrieval model is just one piece of the puzzle. To create a truly intelligent system, we need to orchestrate the workflow, and this is where CrewAI comes in.

With CrewAI, we’ll define specialized AI agents that work collaboratively to execute complex tasks. Each agent is responsible for a specific role, and together, they handle the retrieval, reasoning, and response generation, all under the hood.

Here's how our architecture will look:

Setup

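To follow along with this tutorial, I recommend cloning this repo from GitHub and installing the dependencies:

```bash
$ pip install colpali-engine torch boto3 tqdm pymupdf numpy matplotlib einops seaborn pdf2image qdrant-client -q
$ pip install boto3==1.34.162 botocore==1.34.162 crewai==0.70.1 crewai_tools==0.12.1 PyPDF2==3.0.1 -q
```

pdf2image also relies on the Poppler utilities, which must be installed separately (for example, brew install poppler on macOS or apt-get install poppler-utils on Debian/Ubuntu).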

Let’s import the libraries we need:

```python
import os
import time
import shutil

import boto3
from huggingface_hub import login
from colpali_engine.models import ColPali, ColPaliProcessor
from pdf2image import convert_from_path
from qdrant_client.http import models
from tqdm import tqdm
from matplotlib import pyplot as plt
from PIL import Image
from crewai import Agent, Task, Crew, LLM, Process
from IPython.display import Markdown
```

Download the dataset

First, let’s create a directory in your current working directory to store the dataset.

```python
pdf_dir = "pdf_data"
os.makedirs(pdf_dir, exist_ok=True)
```

For this demo, we'll be using the Class X Science book from NCERT, which is publicly available on its official website. Once you've downloaded the PDF, save it in the pdf_data folder.

Load the ColPali Multimodal Document Retrieval Model

We will now load the ColPali model from Hugging Face. If you don’t have a Hugging Face account yet, now is the time to sign up and create an access token (stored below as HUGGING_FACE_TOKEN). You’ll need this token to authenticate and access gated models.

```python
# Loading the token
os.environ['HUGGING_FACE_TOKEN'] = 'YOUR_HF_API_KEY'

# Login using token from environment variable
login(token=os.getenv('HUGGING_FACE_TOKEN'))
```

If you're running this code on a machine with an NVIDIA GPU (CUDA) or an Apple Silicon Mac (MPS), it's highly recommended to use it for faster processing.

```python
import torch

# Pick the best available device: CUDA first, then MPS (Apple Silicon), then CPU
device = "cuda" if torch.cuda.is_available() else "mps" if torch.backends.mps.is_available() else "cpu"
print(f"{device = }")
```

Now let’s load the ColPali model and its processor from Hugging Face.

```python
model_name = "vidore/colpali-v1.3"

colpali_model = ColPali.from_pretrained(
    pretrained_model_name_or_path=model_name,
    torch_dtype=torch.bfloat16,
    device_map=device,
    cache_dir="./model_cache"
)

colpali_processor = ColPaliProcessor.from_pretrained(
    pretrained_model_name_or_path=model_name,
    cache_dir="./model_cache"
)
```

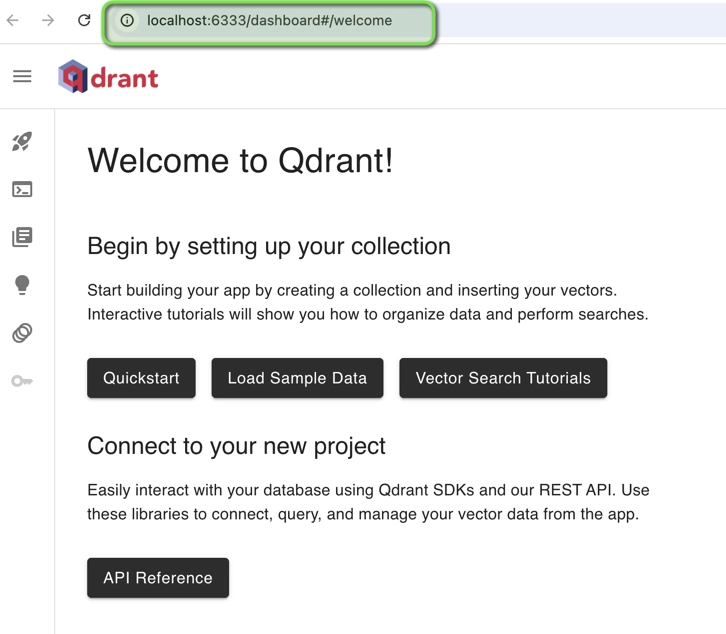

Setting up the vector database

Before we generate embeddings using the ColPali model, we need a place to store and query them. That’s where a vector database comes in.

For this tutorial, we’re using Qdrant, an open-source, high-performance vector database designed specifically for handling high-dimensional vector data.

One of the great things about Qdrant is that it’s easy to self-host using Docker, making local development and experimentation super convenient.

Like other vector stores, Qdrant supports fast similarity search across millions (or even billions) of vectors. But it also offers rich filtering capabilities, which makes it a solid choice for powering RAG pipelines where precision and flexibility matter.

Start it from your shell (port 6333 serves the REST API and dashboard, port 6334 the gRPC API):

```bash
docker run -p 6333:6333 -p 6334:6334 \
    -v $(pwd)/qdrant_storage:/qdrant/storage:z \
    qdrant/qdrant
```

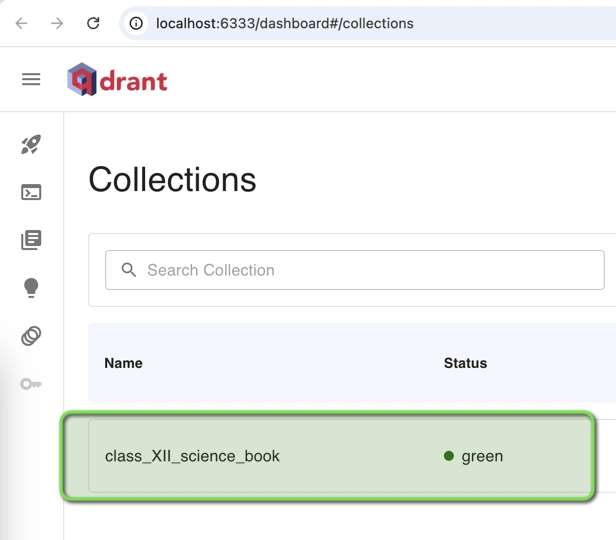

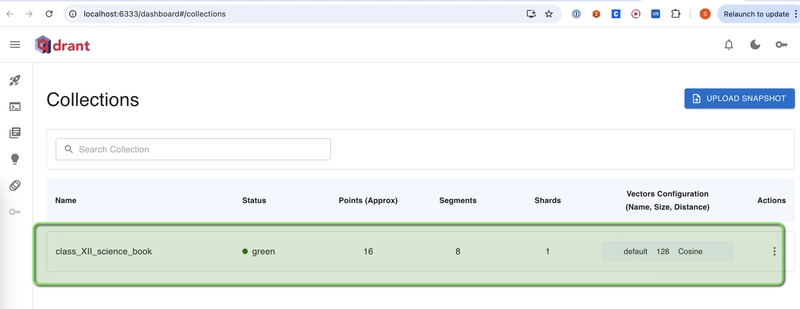

Once Qdrant is up and running, you can access the Qdrant Dashboard locally at http://localhost:6333/dashboard.

Now that we have the Qdrant vector database running locally, let’s create a client object and define a collection called class_XII_science_book to store our embeddings.

We’ll use the official Qdrant Python client to interact with the database.

```python
import qdrant_client

# Step 1: Creating a qdrant client object
client = qdrant_client.QdrantClient(
    host="localhost",
    port=6333
)

# Step 2: Create a collection
COLLECTION_NAME = "class_XII_science_book"
VECTOR_SIZE = 128  # ColPali emits one 128-dimensional vector per patch/token

client.create_collection(
    collection_name=COLLECTION_NAME,
    on_disk_payload=True,
    vectors_config=models.VectorParams(
        size=VECTOR_SIZE,
        distance=models.Distance.COSINE,
        on_disk=True,
        # Each point stores a list of vectors (one per patch); MAX_SIM scores a
        # query against it with ColBERT-style late interaction.
        multivector_config=models.MultiVectorConfig(
            comparator=models.MultiVectorComparator.MAX_SIM
        ),
    ),
)
```

You can also check the newly created collection in the dashboard.

Store embeddings in vector database

Now that our Qdrant vector database is set up and our collection is ready, it's time to generate embeddings using the ColPali model and store them in the collection.

Let’s walk through the steps:

```python
os.environ["TOKENIZERS_PARALLELISM"] = "false"

# Step 1: Convert PDFs into a list of page records ({doc_id, page_num, image})
# which will be used to create embeddings.
# "/opt/homebrew/bin" is where Homebrew installs Poppler on Apple Silicon Macs;
# pass poppler_path=None if Poppler is already on your PATH.
def convert_pdfs_to_images(pdf_folder, poppler_path="/opt/homebrew/bin"):
    """Convert every PDF in pdf_folder into a list of {doc_id, page_num, image} dicts."""
    pdf_files = sorted(f for f in os.listdir(pdf_folder) if f.endswith(".pdf"))
    all_images = []
    for doc_id, pdf_file in enumerate(pdf_files):
        pdf_path = os.path.join(pdf_folder, pdf_file)
        images = convert_from_path(pdf_path, poppler_path=poppler_path)
        for page_num, image in enumerate(images):
            all_images.append({"doc_id": doc_id, "page_num": page_num, "image": image.convert("RGB")})
    return all_images

# Step 2: Load the pages and choose a batch size
PDF_DIR = pdf_dir  # Change this to your actual folder path
dataset = convert_pdfs_to_images(PDF_DIR)
BATCH_SIZE = 4

# Step 3: Generate embeddings batch by batch and store them in Qdrant
print("Generating embeddings and storing in Qdrant...")
with tqdm(total=len(dataset), desc="Indexing Progress") as pbar:
    for i in range(0, len(dataset), BATCH_SIZE):
        batch = dataset[i : i + BATCH_SIZE]
        images = [item["image"] for item in batch]

        # Process and encode images: one multi-vector (patches x 128) per page
        with torch.no_grad():
            batch_images = colpali_processor.process_images(images).to(colpali_model.device)
            image_embeddings = colpali_model(**batch_images)

        # Prepare points for Qdrant
        points = []
        for j, embedding in enumerate(image_embeddings):
            points.append(
                models.PointStruct(
                    id=i + j,  # position of the page in `dataset`
                    vector=embedding.cpu().float().numpy().tolist(),  # bfloat16 tensor -> list of float lists
                    payload={
                        "doc_id": batch[j]["doc_id"],
                        "page_num": batch[j]["page_num"],
                        "source": "pdf archive",
                    },
                )
            )

        # Upload points to Qdrant
        try:
            client.upsert(collection_name=COLLECTION_NAME, points=points)
        except Exception as e:
            print(f"Error during upsert: {e}")

        # Update the progress bar (the last batch may be smaller than BATCH_SIZE)
        pbar.update(len(batch))

print("Indexing complete!")
```

Once this is done, we can check the stored embeddings in the dashboard.

With the embeddings stored in Qdrant, we can now send a query and retrieve the most similar pages using vector similarity.

```python
# Step 1: Our query
query_text = "What are the effects of oxidation reactions in everyday life ?"

# Step 2: Generate embeddings for the query
with torch.no_grad():
    text_embedding = colpali_processor.process_queries([query_text]).to(colpali_model.device)
    text_embedding = colpali_model(**text_embedding)
token_query = text_embedding[0].cpu().float().numpy().tolist()

start_time = time.time()

# Step 3: Query the vector database
query_result = client.query_points(
    collection_name=COLLECTION_NAME,
    query=token_query,
    limit=5,
    search_params=models.SearchParams(
        quantization=models.QuantizationSearchParams(
            ignore=True,
            rescore=True,
            oversampling=2.0
        )
    )
)

print(f"Time taken = {(time.time()-start_time):.3f} s")
print(query_result.points)
```

This will return the Top-K most similar pages (limit=5) based on the vector similarity between your query and the stored page embeddings.

```text
# output of print(query_result.points)
[ScoredPoint(id=12, version=3, score=21.797455, payload={'doc_id': 0, 'page_num': 12, 'source': 'pdf archive'}, vector=None, shard_key=None, order_value=None),
 ScoredPoint(id=11, version=2, score=19.110117, payload={'doc_id': 0, 'page_num': 11, 'source': 'pdf archive'}, vector=None, shard_key=None, order_value=None),
 ScoredPoint(id=15, version=3, score=19.051605, payload={'doc_id': 0, 'page_num': 15, 'source': 'pdf archive'}, vector=None, shard_key=None, order_value=None),
 ScoredPoint(id=13, version=3, score=18.964575, payload={'doc_id': 0, 'page_num': 13, 'source': 'pdf archive'}, vector=None, shard_key=None, order_value=None),
 ScoredPoint(id=8, version=2, score=16.669119, payload={'doc_id': 0, 'page_num': 8, 'source': 'pdf archive'}, vector=None, shard_key=None, order_value=None)]
```
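Scores like 21.8 are not probabilities. Because the collection uses cosine distance with the MAX_SIM comparator, each query vector contributes its best cosine similarity (at most 1.0), so a page score is bounded above by the number of query vectors. Dividing by that count gives a rough number that is easier to compare across queries. The helper below is a hypothetical convenience function, not part of the Qdrant client; it relies only on the score and payload attributes of the returned points, and the example uses made-up stand-in points.

```python
from types import SimpleNamespace

def normalized_scores(points, num_query_vectors):
    """Hypothetical helper: divide each MaxSim score by the number of query vectors.

    With cosine similarity, each query vector contributes at most 1.0 to a
    MAX_SIM score, so the normalized value is at most 1.0.
    """
    return [(p.payload["page_num"], round(p.score / num_query_vectors, 3)) for p in points]

# Stand-in points with the same attributes used above (score, payload)
fake_points = [
    SimpleNamespace(score=18.0, payload={"page_num": 12}),
    SimpleNamespace(score=9.0, payload={"page_num": 8}),
]
print(normalized_scores(fake_points, num_query_vectors=20))  # [(12, 0.9), (8, 0.45)]
```

With real results, the call would be normalized_scores(query_result.points, len(token_query)).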

Building an Agentic RAG System with CrewAI

Now, let’s level up. Retrieval alone isn’t enough: to build a system that can reason and respond intelligently, we need to orchestrate the entire workflow, from query to retrieval to generation.

That’s where CrewAI steps in.

In this final section, we’ll build an agentic RAG pipeline using CrewAI, where each part of the process will be handled by a dedicated agent, and they will work together to generate accurate, context-rich answers automatically.

We’ll define two agents:

- The Knowledge Retriever Agent: Uses ColPali + Qdrant to fetch the most relevant pages.

- The Multimodal Knowledge Expert Agent: Uses Amazon Nova to analyze the images and generate an answer.

Let’s start by creating these agents:

```python
# Define the Knowledge Retriever Agent
retrieval_agent = Agent(
    role="Knowledge Retriever",
    goal="Retrieve the most relevant textbook pages from the knowledge base based on the student’s question.",
    backstory="An intelligent academic assistant trained with NCERT Class X Science. It specializes in pinpointing the most relevant content based on the student’s question and the subject it pertains to.",
    tools=[retrieve_from_qdrant],
    allow_delegation=False,
    verbose=True,
    llm=llm
)

# Define the Multimodal Knowledge Expert Agent
answering_agent = Agent(
    role="Multimodal Knowledge Expert",
    goal="Accurately interpret the provided images and extract relevant information to answer the question: {query_text}.",
    backstory="An advanced AI specialized in multimodal reasoning, capable of analyzing both text and images to provide the most precise and insightful answers.",
    multimodal=True,
    allow_delegation=False,
    verbose=True,
    llm=llm
)
```

Now, if you take a closer look at the retrieval_agent definition, you’ll notice we’ve included a tool called retrieve_from_qdrant.

We haven’t defined it yet, but this tool is the most important component of this agent: it is what lets the agent perform semantic search under the hood. Using it, the agent queries the Qdrant vector database, runs a vector similarity search, and retrieves the document pages most relevant to the user’s query.

Let’s go ahead and define it next. The same snippet also defines llm, which both agents use, so run it before the agent definitions if you're executing the code top to bottom.

```python
from crewai_tools import tool

# Initialize CrewAI LLM (Amazon Nova Pro)
model_id = "us.amazon.nova-pro-v1:0"
llm = LLM(model=model_id)

# Create a Retrieval Tool
@tool
def retrieve_from_qdrant(query: str):
    """
    Retrieve the most relevant documents from Qdrant vector database
    based on the given text query.

    Args:
        query (str): The user query to search in the knowledge base.

    Returns:
        list: List of paths to the matched images.
    """
    # Reads the module-level client, COLLECTION_NAME, colpali_processor,
    # colpali_model and dataset defined earlier in this tutorial.
    print(f"Retrieving documents for query: {query}")

    with torch.no_grad():
        text_embedding = colpali_processor.process_queries([query]).to(colpali_model.device)
        text_embedding = colpali_model(**text_embedding)
    token_query = text_embedding[0].cpu().float().numpy().tolist()

    start_time = time.time()

    # Perform search in Qdrant
    query_result = client.query_points(
        collection_name=COLLECTION_NAME,
        query=token_query,
        limit=5,
        search_params=models.SearchParams(
            quantization=models.QuantizationSearchParams(
                ignore=True,
                rescore=True,
                oversampling=2.0
            )
        )
    )
    print(f"Query Time: {(time.time()-start_time):.3f} s")

    matched_images_path = []

    # Define a folder to save matched images, recreated on every call
    # so it only holds the matches for the current query
    MATCHED_IMAGES_DIR = "matched_images"
    if os.path.exists(MATCHED_IMAGES_DIR):
        shutil.rmtree(MATCHED_IMAGES_DIR)
    os.makedirs(MATCHED_IMAGES_DIR)

    for result in query_result.points:
        doc_id = result.payload["doc_id"]
        page_num = result.payload["page_num"]
        # Find the page image that corresponds to this search hit
        for item in dataset:
            if item["doc_id"] == doc_id and item["page_num"] == page_num:
                image_filename = os.path.join(MATCHED_IMAGES_DIR, f"match_doc_{doc_id}_page_{page_num}.png")
                item["image"].save(image_filename, "PNG")
                matched_images_path.append(image_filename)
                print(f"Saved: {image_filename}")
                break

    print(f"\nAll matched images are saved in the '{MATCHED_IMAGES_DIR}' folder.")
    return matched_images_path
```
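One design note on this tool: for each of the five results it scans the entire dataset list to find the matching page. That is fine for a single textbook, but a dictionary keyed by (doc_id, page_num) makes each lookup constant-time. A minimal sketch; build_page_index is a hypothetical helper, not part of CrewAI or Qdrant, and it assumes the record format produced by convert_pdfs_to_images.

```python
def build_page_index(dataset):
    """Hypothetical helper: map (doc_id, page_num) -> page image for O(1) lookups."""
    return {(item["doc_id"], item["page_num"]): item["image"] for item in dataset}

# Usage with stand-in data (real records hold PIL images instead of strings)
toy_dataset = [
    {"doc_id": 0, "page_num": 0, "image": "page-0"},
    {"doc_id": 0, "page_num": 1, "image": "page-1"},
    {"doc_id": 1, "page_num": 0, "image": "other-doc-page-0"},
]
page_index = build_page_index(toy_dataset)
print(page_index[(0, 1)])      # page-1
print(page_index.get((2, 5)))  # None
```

Built once after indexing, the index would replace the inner loop with a single page_index.get((doc_id, page_num)) call.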

Now, we can define the tasks for each of these agents and create the Crew.

```python
# Define the task for the Knowledge Retriever Agent.
# {query_text} is filled in from crew.kickoff(inputs={...}).
retrieval_task = Task(
    description="Retrieve the most relevant images from the knowledge base for the query: {query_text}.",
    agent=retrieval_agent,
    expected_output="A list of image file paths related to the query."
)

# Define the task for the Multimodal Knowledge Expert Agent.
# The retrieved image paths reach this task through the retrieval task's output (context).
answering_task = Task(
    description="Using the retrieved images from the previous task, generate a precise answer to the query: {query_text}.",
    agent=answering_agent,  # Assign answering agent
    context=[retrieval_task],
    expected_output="A clear and well-structured explanation based on the extracted information from the images. No need to include reference to the images in the answer.",
)

# Assemble the Crew
crew = Crew(
    agents=[retrieval_agent, answering_agent],
    tasks=[retrieval_task, answering_task],
    process=Process.sequential,
    verbose=False
)
```

Query Time

Let's see how this system works with a real example. Imagine a student asks about “the proper way to heat a boiling tube containing ferrous sulphate crystals and how to safely smell the odor”:

```python
# Run the Query
query_text = "What is the correct way of heating the boiling tube containing crystals of ferrous sulphate and of smelling the odour"
result = crew.kickoff(inputs={"query_text": query_text})

# Render the final answer in a Jupyter notebook
Markdown(result.raw)
```

The final output is a detailed explanation drawn from the textbook pages, using both textual and visual cues. The system identifies diagrams, interprets scientific procedures, and provides safe lab instructions, just like a teacher would :)

Conclusion

This approach represents an exciting step toward more human-like document understanding. By combining vision-language models with specialized agents, we can create systems that process information more holistically - considering layout, visual elements, and text as an integrated whole.

As vision language models continue to improve, and frameworks like CrewAI become more sophisticated, we can expect even more powerful multimodal RAG systems that further close the gap between how humans and machines process information.

What Next

If you’re exploring multimodal retrieval and generation, I’d highly recommend checking out my free course on Multimodal RAG and Embeddings in collaboration with Analytics Vidhya. It covers foundational concepts like embeddings, Byte-Pair Encoding, and vision-language reasoning using Amazon Nova and Bedrock.
