Deploy Kata Containers on Ubuntu 24.04

In the previous post, we explored the core differences between traditional containers and Kata Containers. In this post, we will install Kata Containers. Installing Kata Containers isn't overly complex, and once it is set up, it integrates with container runtimes such as containerd or CRI-O and can even run inside Kubernetes clusters (we will cover that in a later post). In this section, I'll walk you through installing Kata Containers on an Ubuntu system step by step, so you can try it out yourself and see the isolation in action.

Kata Containers can be installed using three methods:

- Official distro packages

- Automatic

- Using kata-deploy (for running Kubernetes)

The recommended way to install Kata Containers is with the official distro packages. Unfortunately, Kata Containers doesn't provide Debian-based packages, so we will use the automatic method instead, which relies on the kata-manager script to automate the installation.

Prerequisites

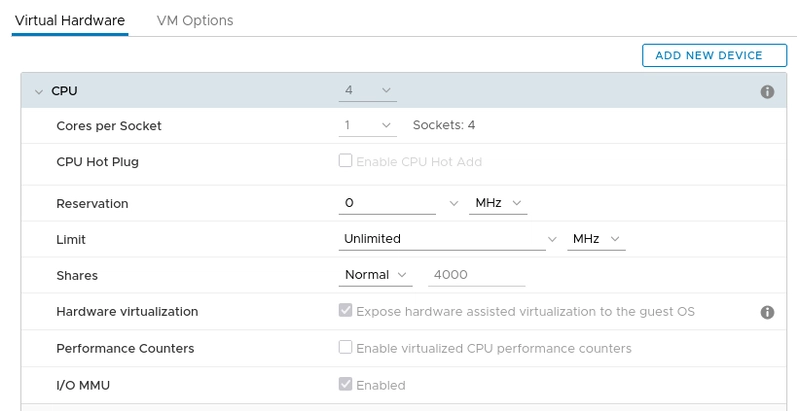

Before we begin, we need Ubuntu 24.04 baremetal host or VM with nested virtualization. Kata Containers rely on hardware virtualization to provide the strong isolation that sets them apart from traditional containers. This means each Kata container runs inside its own lightweight virtual machine. So, if you're running Kata Containers inside a virtual machine (like on a public cloud or a development VM), you'll need to enable nested virtualization—a feature that allows a VM to create and manage other VMs. Without it, the underlying hypervisor (like QEMU or Cloud Hypervisor) used by Kata won't be able to launch the isolated guest kernel, and the container runtime will fail to start Kata-based workloads. For example, if you use VMware vSphere you can enable Hardware Assisted Virtualization and IOMMU like this picture below:
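Before installing anything, it can be worth confirming that the host actually exposes hardware virtualization. The sketch below is not part of Kata; it assumes an x86 host, where Intel VT-x and AMD-V appear as the vmx/svm CPU flags in /proc/cpuinfo, and that a working KVM setup provides the /dev/kvm device node. The functions has_hw_virt, has_kvm_device, and preflight are hypothetical helper names.

```shell
# Pre-flight sketch (not part of Kata): run on the Ubuntu 24.04 host before
# installing. Paths are optional parameters so the checks can be tested.

# Succeeds if the CPU flags include Intel VT-x (vmx) or AMD-V (svm).
# Inside a VM, these flags only appear when nested virtualization is exposed.
has_hw_virt() {
  grep -Eqw 'vmx|svm' "${1:-/proc/cpuinfo}"
}

# Succeeds if the KVM character device exists, i.e. the kvm module is
# loaded and hypervisors such as QEMU can use hardware acceleration.
has_kvm_device() {
  [ -c "${1:-/dev/kvm}" ]
}

# Runs both checks against the real host paths unless overridden.
preflight() {
  if has_hw_virt "$1" && has_kvm_device "$2"; then
    echo "ok: hardware virtualization is available"
  else
    echo "missing: enable VT-x/AMD-V (or nested virtualization on a VM)" >&2
    return 1
  fi
}
```

Calling preflight with no arguments checks the real host. On a VM where nested virtualization is not exposed, the vmx/svm flags are typically absent, so the first check already fails. Once Kata is installed, its kata-runtime binary also offers a check subcommand that performs a more thorough host check.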

Installation

- Log in to your Ubuntu host over SSH

- Update the package repositories and upgrade the Ubuntu system

sudo apt-get update && sudo apt-get upgrade -y
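The next step reboots the host unconditionally, which is the simplest option. If you would rather reboot only when the upgrade needs it, Ubuntu creates the file /var/run/reboot-required when an installed update (typically a new kernel) requires a reboot. A minimal sketch, with reboot_required as a hypothetical helper:

```shell
# Sketch: report whether Ubuntu flagged that the upgrade needs a reboot.
# The path is a parameter only so the check can be tested.
reboot_required() {
  [ -f "${1:-/var/run/reboot-required}" ]
}

if reboot_required; then
  # The .pkgs file, when present, lists the packages that asked for a reboot.
  cat /var/run/reboot-required.pkgs 2>/dev/null || true
  echo "Reboot required: run 'sudo reboot'"
else
  echo "No reboot required"
fi
```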

- Reboot the host, then wait until it is back up and you can connect over SSH again

- Download the kata-manager script

wget https://raw.githubusercontent.com/kata-containers/kata-containers/main/utils/kata-manager.sh
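Before making the script executable, a quick sanity check can catch an interrupted download or a non-script response (for example, an HTML page returned by a proxy). The sketch below uses a hypothetical helper, check_script; it only verifies that the file is non-empty, starts with a shebang, and parses with bash -n. It is not a security review, so it is still worth reading the script before running it.

```shell
# Sketch: sanity-check a downloaded shell script before running it.
# Defaults to kata-manager.sh in the current directory.
check_script() {
  f=${1:-kata-manager.sh}
  # Missing or zero-length file: the download failed.
  [ -s "$f" ] || { echo "empty or missing: $f" >&2; return 1; }
  # A script should start with an interpreter line such as #!/usr/bin/env bash.
  head -n 1 "$f" | grep -q '^#!' || { echo "no shebang in $f" >&2; return 1; }
  # bash -n parses the script without executing any of it.
  bash -n "$f" || { echo "syntax errors in $f" >&2; return 1; }
}
```

Running check_script right after wget returns non-zero if any of the three checks fails, so it can gate the chmod step in a scripted install.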

- Make the script executable

chmod +x kata-manager.sh

- Run the script to install Kata Containers together with containerd

./kata-manager.sh

- The installation will start. Once it has completed, list the installed components and their versions

./kata-manager.sh -l

Make sure that both Kata Containers and containerd were installed:

INFO: Getting version details

INFO: Kata Containers: installed version: Kata Containers containerd shim (Golang): id: "io.containerd.kata.v2", version: 3.15.0, commit: c0632f847fe706090d64951ba6b68865a416bdb4

INFO: Kata Containers: latest version: 3.15.0

INFO: containerd: installed version: containerd github.com/containerd/containerd v1.7.27 05044ec0a9a75232cad458027ca83437aae3f4da

INFO: containerd: latest version: v2.0.4

INFO: Docker (moby): installed version:

INFO: Docker (moby): latest version: v28.0.4
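If you script the installation, this check can be automated. The sketch below assumes the INFO line format shown above (it may change between kata-manager versions); kata_versions and kata_up_to_date are hypothetical helper names. Capturing the listing with 2>&1 is a precaution in case some of it goes to stderr.

```shell
# Sketch: compare the installed and latest Kata versions reported by
# ./kata-manager.sh -l. Assumes the INFO line format shown above.

# Reads the listing on stdin and prints "<installed> <latest>".
kata_versions() {
  awk '
    /Kata Containers: installed version:/ {
      # first "version: <digits>" on the line is the shim version
      if (match($0, /version: [0-9][0-9.]*/))
        installed = substr($0, RSTART + 9, RLENGTH - 9)
    }
    /Kata Containers: latest version:/ { latest = $NF }
    END { print installed, latest }
  '
}

# Succeeds only if both versions were found and they match.
kata_up_to_date() {
  set -- $(kata_versions)
  [ "$#" -eq 2 ] && [ "$1" = "$2" ]
}
```

For example: ./kata-manager.sh -l 2>&1 | kata_up_to_date && echo "Kata is up to date". With the output above, kata_versions would print 3.15.0 3.15.0.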

Testing

After the installation has completed, we will test Kata Containers by deploying a container and checking that the isolation works.

- Run uname -a to check the host OS kernel version

root@dev-master:~# uname -a

Linux dev-master 6.8.0-57-generic #59-Ubuntu SMP PREEMPT_DYNAMIC Sat Mar 15 17:40:59 UTC 2025 x86_64 x86_64 x86_64 GNU/Linux

root@dev-master:~#

We can see that the host OS kernel version is 6.8.

- Pull a container image to deploy. For example, we will use the Rocky Linux image from Docker Hub

ctr image pull docker.io/rockylinux/rockylinux:latest

- Deploy the Rocky Linux image with the default runtime, and run uname -a to see which kernel it uses

root@dev-master:~# ctr run --rm docker.io/rockylinux/rockylinux:latest rocky-default uname -a

Linux dev-master 6.8.0-57-generic #59-Ubuntu SMP PREEMPT_DYNAMIC Sat Mar 15 17:40:59 UTC 2025 x86_64 x86_64 x86_64 GNU/Linux

root@dev-master:~#

From this test, we can see that the rocky-default container uses the same kernel as the host, just as in the traditional container model. Now, deploy the Rocky Linux image with the Kata runtime:

root@dev-master:~# ctr run --runtime io.containerd.kata.v2 --rm docker.io/rockylinux/rockylinux:latest rocky-kata uname -a

Linux localhost 6.12.13 #1 SMP Thu Mar 13 11:34:50 UTC 2025 x86_64 x86_64 x86_64 GNU/Linux

root@dev-master:~#
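To turn this visual comparison into a check, the kernel release strings from the host and from the container can be compared directly. A minimal sketch, using a hypothetical kernel_isolation helper and uname -r instead of uname -a so that only the release string is compared:

```shell
# Sketch: classify a container as sharing the host kernel or running its own.
# Arguments are kernel release strings, e.g. from `uname -r` on the host and
# `uname -r` inside the container.
kernel_isolation() {
  if [ "$1" = "$2" ]; then
    echo "shared"    # same kernel as the host, as with the default runtime
  else
    echo "isolated"  # different kernel: the container runs in a guest VM
  fi
}
```

For example, kernel_isolation "$(uname -r)" "$(ctr run --runtime io.containerd.kata.v2 --rm docker.io/rockylinux/rockylinux:latest rocky-kata-check uname -r)" should print isolated given the outputs above, while the same call without --runtime should print shared. The container ID rocky-kata-check is arbitrary; it only needs to be unused.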

Conclusions

When a container is run using the default runtime, it shares the host's kernel. This means that if you check the kernel version from inside the container (using uname -a), you'll see the same version as on your host operating system. In contrast, when you run a container using Kata Containers, the process runs inside a lightweight virtual machine with its own dedicated kernel. Running uname -a will then return a different kernel version, typically the one shipped by Kata itself. This is a simple but powerful way to confirm that Kata is using hardware virtualization to isolate your container workloads: each one gets its own kernel, separate from the host.
