How AI Influence Operations Are Testing the Limits of Open Digital Communities

The digital commons is under attack. Last month, a coordinated network of accounts used AI tools to fabricate and amplify stories claiming voter fraud in the Arizona gubernatorial election, pushing demonstrably false narratives to millions before fact-checkers could intervene. This wasn’t the work of human trolls; it was a sophisticated operation leveraging readily available large language models. It is a stark warning that the line between the real and the fabricated is blurring online, and few realise the scale of the threat. The rise of increasingly sophisticated AI, coupled with the accessibility of generative models, is creating a new battleground for influence. It’s a battle where the very nature of open digital communities – their reliance on authenticity, trust, and organic interaction – is being actively contested. We're entering an era where distinguishing genuine grassroots movements from astroturfed campaigns orchestrated by AI is becoming far more difficult, and the ethical guardrails surrounding research into these phenomena are proving woefully inadequate. This isn't a futuristic dystopia; it's unfolding now, in real time, with potentially devastating consequences for democratic processes, social cohesion, and even our collective sense of reality.

From Bots to Believable Personas

For years, the threat of online manipulation centred on bots – automated accounts designed to amplify specific messages, swamp opposing viewpoints, and generally disrupt online conversation. These early iterations were relatively crude and easy to identify by their repetitive messaging, lack of nuanced interaction, and, often, glaring grammatical errors. They were, essentially, digital spam. But the game has changed.
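To make concrete why repetitive messaging gave those early bots away, here is a minimal sketch of a repetition check. It is an illustration only, not any platform's actual detection method: the function names, the three-word shingle size, and the 0.6 similarity threshold are all assumptions chosen for the example. It compares posts from different accounts by the overlap of their word sequences (Jaccard similarity) and flags pairs that are nearly identical.

```python
from itertools import combinations


def shingles(text: str, k: int = 3) -> set:
    """Return the set of k-word sequences ("shingles") in a post."""
    words = text.lower().split()
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: shared shingles divided by all distinct shingles."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def flag_repetitive_pairs(posts: dict, threshold: float = 0.6) -> list:
    """Return pairs of account names whose posts are near-duplicates.

    `posts` maps a (hypothetical) account name to one post's text.
    The threshold is an illustrative assumption, not a tuned value.
    """
    sets = {account: shingles(text) for account, text in posts.items()}
    return [
        (a, b)
        for a, b in combinations(sorted(sets), 2)
        if jaccard(sets[a], sets[b]) >= threshold
    ]


# Hypothetical example data: two accounts post almost the same sentence.
example_posts = {
    "acct_a": "the election was stolen share this now with everyone",
    "acct_b": "the election was stolen share this now with everybody",
    "acct_c": "I think the new transit plan needs a better budget estimate",
}
print(flag_repetitive_pairs(example_posts))
```

A check this simple works only against copy-and-paste messaging. Text that is reworded for every account shares few exact word sequences, so a check like this would miss it.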

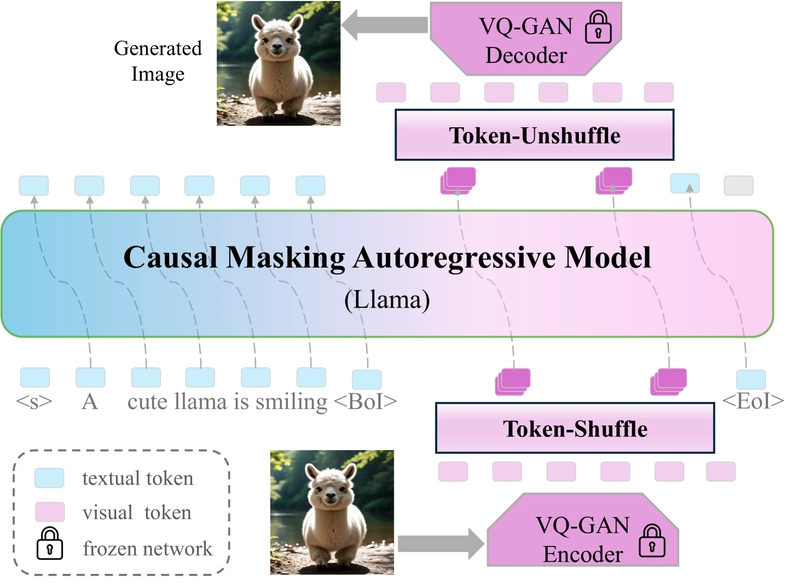

Generative AI, particularly large language models (LLMs), has lowered the barrier to entry for creating far more sophisticated influence operations. Instead of simple bots, we're now seeing the emergence of digital personas – AI-driven accounts capable of generating convincingly human text, engaging in seemingly natural conversations, and adapting their behaviour based on context. These personas can build relationships, infiltrate online communities, and subtly shape narratives without raising immediate suspicion.

Imagine an AI persona infiltrating a subreddit dedicated to a specific political candidate, steering the conversation away from policy details and towards personal attacks on opponents. Or picture a coordinated network of these personas disseminating disinformation about climate change across multiple social media platforms, gradually undermining public trust in scientific consensus. The scale and sophistication of these operations are rapidly increasing, and the tools to detect them are lagging far behind. This isn’t just an academic worry; the same tools used to analyse manipulation are actively being weaponised right now.

This isn't simply about spreading ‘fake news’. It's about eroding the fundamental foundations of trust upon which online communities are built. When you can't reliably determine whether the people you're interacting with are real, or whether the opinions you're encountering are genuinely held, the entire social fabric begins to unravel.

The Ethics of Studying the Shadows

The research community has a crucial role to play in understanding and mitigating the risks posed by AI-driven influence operations. But studying these phenomena presents a unique set of ethical challenges. Unlike traditional social science research, where participants are typically aware they are being observed, investigations into online manipulation often require a degree of covert observation. Researchers need to infiltrate the very communities they're trying to understand, potentially interacting with individuals who are unaware they are part of a study.

This raises serious questions about informed consent, privacy, and the potential for harm. If a researcher identifies an AI-driven persona, do they have a responsibility to expose it, even if doing so compromises their investigation? If they collect data on individuals who are unknowingly interacting with these personas, how can they ensure their privacy is protected?

Moreover, the very act of studying these techniques risks inadvertently contributing to their proliferation. Describing how AI personas are created and deployed could provide valuable insights for malicious actors, enabling them to refine their tactics and evade detection. This is the ‘dual-use dilemma’ – the knowledge gained from research can be used for both beneficial and harmful purposes.

Many researchers are acutely aware of these ethical concerns and are developing methodologies to address them. Differential privacy techniques, for example, let researchers release aggregate statistics while mathematically limiting what can be inferred about any individual user. Red teaming exercises, where researchers simulate attacks to identify vulnerabilities, can help communities strengthen their defences. But these approaches are not always sufficient, and the ethical landscape remains fraught with ambiguity.
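As a rough illustration of the differential privacy idea, the sketch below applies the standard Laplace mechanism to a simple counting query, such as "how many users in this sample replied to a suspected persona". The function names, the record format, and the choice of epsilon are assumptions made for the example; they are not taken from any particular study or library. Adding or removing one user changes a count by at most 1, so noise drawn from a Laplace distribution with scale 1/epsilon is enough to mask any single user's presence.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential variables with
    mean `scale` follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng=None) -> float:
    """Return a noisy count of the records matching `predicate`.

    Assumes each user contributes at most one record, so the count
    has sensitivity 1. A smaller epsilon means more noise and
    stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: one record per user, marking whether that user
# replied to an account the researchers suspect is an AI persona.
users = [{"replied_to_persona": i % 4 == 0} for i in range(100)]
noisy = private_count(users, lambda u: u["replied_to_persona"], epsilon=0.5)
print(f"noisy count: {noisy:.1f}")
```

The published figure stays close to the true count on average, yet no single release confirms whether a given user is in the data. Repeated queries consume more of the privacy budget, which a real study would need to track.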

The Open Source Paradox: A Double-Edged Sword

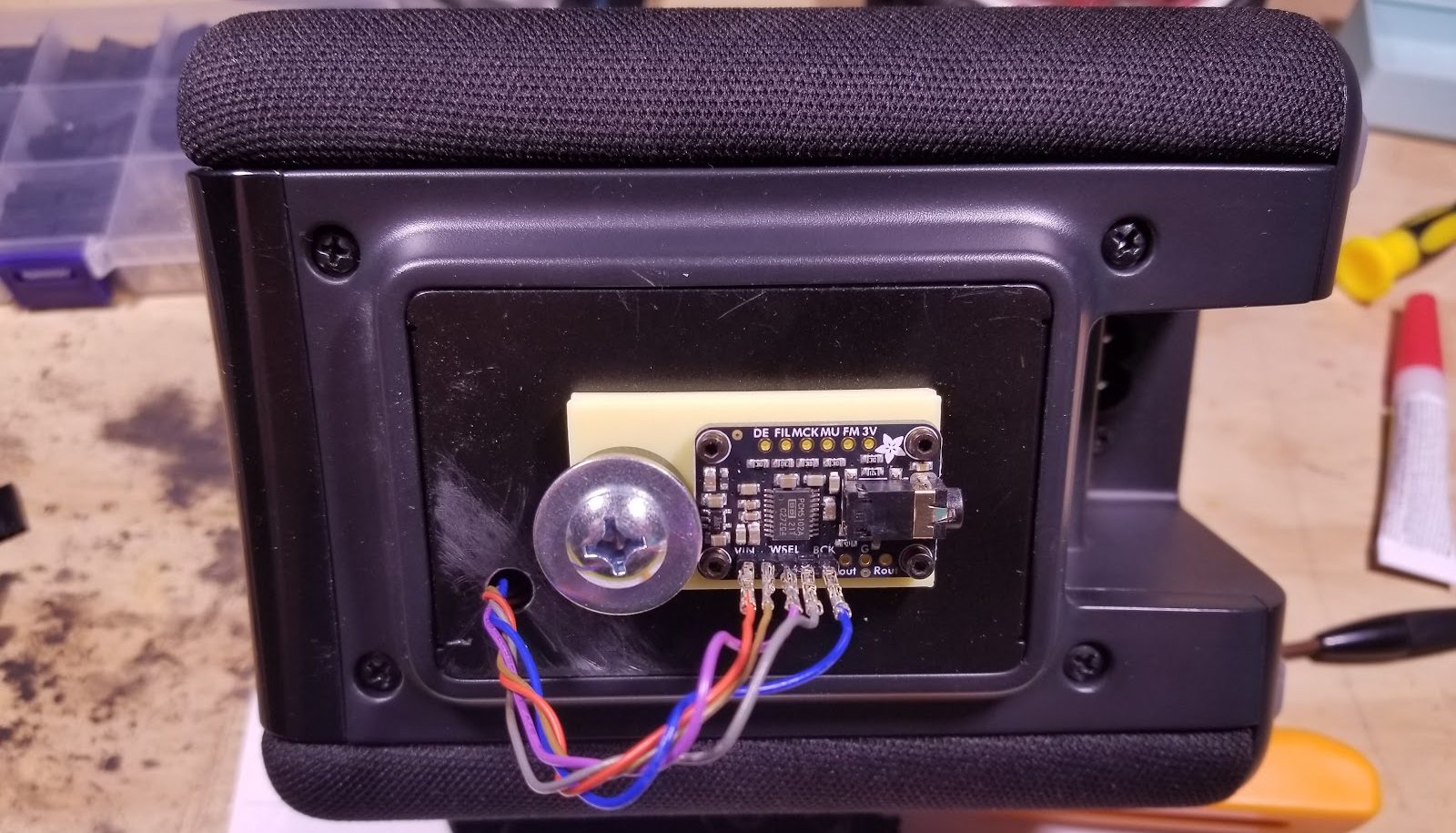

The open-source movement has been instrumental in driving innovation in AI, providing access to powerful tools and technologies that would otherwise be unavailable. But this openness also creates vulnerabilities. The same algorithms that can be used to detect and counter AI-driven influence operations can also be used to create and deploy them.

The availability of open-source LLMs allows anyone with sufficient technical expertise to build their own AI personas, without having to rely on commercial platforms. This democratisation of AI is, in many ways, a positive development. But it also means that the tools of manipulation are now more widely accessible than ever before.

According to a recent report by the Brookings Institution, researchers have linked the use of Alpaca (and similar open-source models) to the amplification of pro-Russian narratives following the invasion of Ukraine. The finding highlights a crucial gap: the lack of robust monitoring for misuse within open-source AI communities. While developers emphasise accessibility, they often lack the resources to police the potential for malicious applications.

This creates a paradox: the very principles that underpin the open-source movement – transparency, collaboration, and accessibility – can be exploited by those seeking to undermine the integrity of open digital communities. The challenge lies in finding ways to harness the power of open-source AI for good, while mitigating the risks of malicious use.

Some researchers are racing to develop ‘defensive AI’ – algorithms designed to automatically detect and counter AI-driven manipulation. The team at Stanford’s AI Security Lab developed ‘Sentinel’, an algorithm that analyses linguistic patterns to identify AI-generated text with 85% accuracy as of its 2023 publication. The result shows how far detection technology has come, though evolving generation techniques continually challenge its effectiveness, a sign of how dynamic this arms race is. These efforts are still in their early stages, and the race between attackers and defenders is likely to be long and arduous.

The Rise of Synthetic Trust

The most alarming aspect of AI-driven influence operations isn't just the spread of disinformation; it's the erosion of trust itself. As AI personas become more sophisticated, they're able to exploit our innate psychological vulnerabilities, building relationships based on false pretences and manipulating our emotions.

This is particularly concerning in the context of online communities, where trust is often the currency of interaction. When we join a forum or a social media group, we assume that the people we're interacting with are who they say they are, and that their opinions are genuinely held. But what happens when those assumptions are systematically undermined?

The rise of ‘synthetic trust’ – trust built on artificial relationships and manufactured consensus – threatens to fundamentally alter the dynamics of online interaction. AI personas are designed to mimic the reciprocity principle, offering small favours and consistent engagement to build trust before subtly introducing manipulative messaging. If we can't trust the people we're talking to, or the information we're receiving, the entire social contract of the digital commons begins to collapse.

This has implications far beyond the realm of online communities. In a world where AI can convincingly impersonate individuals and fabricate evidence, the very notion of objective reality becomes negotiable. This is a profoundly unsettling prospect, and one that demands urgent attention.

Navigating Uncharted Waters

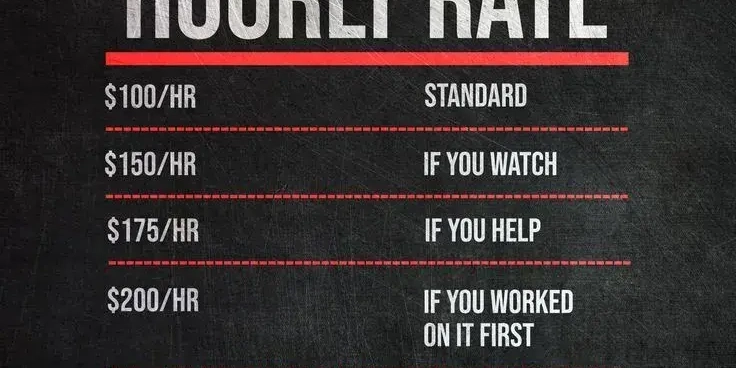

The legal and regulatory frameworks governing AI are still woefully inadequate. Existing laws designed to combat online fraud and disinformation are often ill-equipped to deal with the unique challenges posed by AI-driven influence operations. Attributing responsibility for malicious activity is particularly difficult, as the perpetrators can often operate anonymously and across multiple jurisdictions.

Senator Mark Warner is currently pushing for legislation that would require AI developers to disclose the potential risks of their technologies and to implement safeguards against misuse. The proposed “AI Accountability Act” faces significant opposition from tech lobbyists, who argue it would stifle innovation. The EU’s Digital Services Act (DSA) is a controversial attempt to address illegal content and disinformation by imposing new obligations on online platforms, but its effectiveness remains to be seen.

With regulators struggling to keep pace, the burden of safeguarding online discourse increasingly falls on the communities themselves. A more comprehensive and coordinated international effort is needed to address this global challenge.

Building Resilience in the Digital Commons

While detecting and countering AI-driven influence operations is crucial, it's not enough. We also need to build resilience into the digital commons, fostering a culture of critical thinking, media literacy, and informed participation.

This requires a multi-faceted approach, involving education, technology, and community-building. Schools and universities need to incorporate media literacy into their curricula, teaching students how to evaluate information critically and identify potential biases. Social media platforms need to invest in tools and features that help users discern between genuine content and AI-generated fakes. And online communities need to develop norms and practices that promote transparency, accountability, and respectful dialogue.

The ‘Debunk EU’ group has played a significant role in identifying and debunking false narratives related to recent European elections. Further information and reporting are available on the Debunk EU website.

Ultimately, the defence against AI-driven influence operations lies not just in technological solutions, but in the collective intelligence and vigilance of the online community. We need to become more discerning consumers of information, more sceptical of claims that seem too good to be true, and more willing to challenge narratives that don't align with our values.

Navigating a World of Synthetic Realities

The tension between technological innovation and ethical research in the realm of AI-driven influence is not simply a technical problem; it’s a fundamental challenge to our understanding of what it means to be human in the digital age. As AI becomes increasingly integrated into our lives, blurring the lines between the real and the artificial, we must confront the uncomfortable truth that our social realities are no longer entirely our own.

The future of connection hinges on our ability to navigate this new landscape with wisdom, foresight, and a commitment to safeguarding the principles of openness, trust, and authenticity. We need to develop new ethical frameworks for research, new regulatory mechanisms for AI, and new social norms for online interaction.

The ghost in the machine isn't going away. The question isn’t whether we can stop it, but whether we can learn to live with a reality where trust is scarce and authenticity is a performance. The digital commons, once a beacon of open exchange, is now a contested territory. The battle for its soul has begun.
