High-Quality Transcription of Noisy Dual-Channel Phone Calls

In one of our recent projects, we needed to transcribe phone calls with extremely poor audio quality. The recordings were hard to decipher, often interrupted by overlapping voices from both the operator and the client. Additionally, the client’s side frequently featured noisy backgrounds and extraneous sounds captured by the microphone. To make matters worse, the speech often contained a mix of languages and Surzhyk, a colloquial blend of Ukrainian and Russian.

In our previous work, we had successfully used WhisperX, which performed reasonably well under decent conditions. However, in this case, the quality of the transcriptions was too poor to be useful.

With the release of GPT-4o-transcribe, we decided to evaluate its capabilities. This model accepts detailed prompts, unlike Whisper, which relies on keyword-based prompts. This flexibility significantly improves transcription quality. Moreover, GPT-4o-transcribe can be instructed to distinguish between the Operator and the Client. Unfortunately, due to its lack of true diarization, this often results in confusion between speakers.

The client had high expectations and was dissatisfied with the initial output. They requested accurate transcription but were unwilling to allocate time or budget for collecting training samples, annotating audio, or fine-tuning Whisper. Our only advantage was that the operator’s and client’s audio channels were recorded separately.

Initial Attempt (Failed)

The first logical approach was to transcribe each channel separately using Whisper, and then merge the transcripts based on the timestamps. This did not work well. Whisper tends to hallucinate heavily during long silences and with short, one-word utterances. Additionally, the returned timestamps often included stretches of surrounding silence rather than isolating the actual speech. We attempted to refine these timestamps using silence detection via ffmpeg, but the results were still inaccurate.
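For reference, the kind of ffmpeg-based refinement described above might look like the following sketch. It assumes the `silencedetect` filter was run on one channel (for example `ffmpeg -i channel.wav -af silencedetect=noise=-35dB:d=0.4 -f null -`) and that its log output was captured as text. The threshold values and the function names `parse_silences` and `trim_cue` are illustrative assumptions, not the project's actual code.

```python
import re

# silencedetect writes lines such as:
#   [silencedetect @ 0x...] silence_start: 1.234
#   [silencedetect @ 0x...] silence_end: 3.456 | silence_duration: 2.222
SILENCE_START = re.compile(r"silence_start:\s*(-?\d+(?:\.\d+)?)")
SILENCE_END = re.compile(r"silence_end:\s*(-?\d+(?:\.\d+)?)")


def parse_silences(ffmpeg_log: str) -> list[tuple[float, float]]:
    """Extract (start, end) silence intervals, in seconds, from the log."""
    silences = []
    start = None
    for line in ffmpeg_log.splitlines():
        match = SILENCE_START.search(line)
        if match:
            start = float(match.group(1))
            continue
        match = SILENCE_END.search(line)
        if match and start is not None:
            silences.append((start, float(match.group(1))))
            start = None
    return silences


def trim_cue(start: float, end: float,
             silences: list[tuple[float, float]]) -> tuple[float, float]:
    """Shrink a cue so that it neither begins nor ends inside a silence."""
    for s_start, s_end in silences:
        if s_start <= start < s_end:
            # The cue begins in silence: move its start to where sound resumes.
            start = min(s_end, end)
        if s_start < end <= s_end:
            # The cue ends in silence: move its end back to where silence began.
            end = max(s_start, start)
    return start, end
```

A cue that lies entirely inside a silent interval collapses to zero length, which makes it a convenient candidate for being flagged as a likely hallucination.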

A Hybrid Strategy

We took a step back and reassessed. GPT-4o-transcribe produces high-quality text but lacks timestamps. Whisper, on the other hand, provides timestamps but delivers lower-quality text. An attentive human could easily deduce the correct structure by comparing both.

Thus, we built the following pipeline:

Final Algorithm

1. Transcribe the Client’s channel using gpt-4o-mini-transcribe. Then, use GPT-4o to detect the spoken language. The Operator's language is known in advance.

2. Transcribe both channels using whisper-1.

- Set temperature to 0.0.

- Use a language-specific prompt with a few representative phrases.

- The output is in VTT format with timestamps.

3. Transcribe both channels using gpt-4o-transcribe.

- Set temperature to 0.1.

- Use detailed prompts tailored to each channel’s content.

Operator’s Prompt:

You are an expert in the field of audio transcription.

1. The provided audio file contains only the operator’s lines from a phone conversation.

2. The operator represents the company "XXXXXX", operating under the trade name "YYYYYYY", website "ZZZZZZZZ".

3. The operator is advertising credit services.

4. They describe terms, loan issuance process, interest rates, fees, and commissions.

5. The client may ask questions, accept or reject terms, or request no further contact.

6. The language may be Language1, Language2, or a mixture. Preserve original languages.

7. Audio quality is poor. Fix hallucinations and unrelated content.

Provide the full transcription.

Client’s Prompt:

You are an expert in audio transcription.

1. The audio contains only the client’s lines.

2. The operator promotes credit services.

3. The client may ask about terms, rates, fees, or reject the offer.

4. The client may request more info or refuse further contact.

5. Language may be Ukrainian, Russian, or mixed. Preserve original languages.

6. Audio quality is poor. Fix hallucinations and unrelated content.

Provide the full transcription.
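The two transcription passes described above could be parameterised roughly as follows. This is a minimal sketch, assuming the official `openai` Python SDK and a client object created with `openai.OpenAI()`. The helper names (`whisper_request`, `gpt4o_request`, `transcribe`) are hypothetical, and the plain-text response format for gpt-4o-transcribe is an assumption. The model names and temperatures come from the steps above.

```python
def whisper_request(language: str, phrases: str) -> dict:
    """Parameters for the timestamped whisper-1 pass."""
    return {
        "model": "whisper-1",
        "temperature": 0.0,
        "language": language,      # ISO-639-1 code, e.g. "uk"
        "prompt": phrases,         # a few representative phrases
        "response_format": "vtt",  # timestamps come from this output
    }


def gpt4o_request(channel_prompt: str) -> dict:
    """Parameters for the higher-quality gpt-4o-transcribe pass."""
    return {
        "model": "gpt-4o-transcribe",
        "temperature": 0.1,
        "prompt": channel_prompt,   # Operator's or Client's prompt
        "response_format": "text",  # assumption: plain text, no timestamps
    }


def transcribe(client, audio_path: str, params: dict):
    """Send one channel to the transcription endpoint.

    `client` is assumed to be an `openai.OpenAI()` instance; it is passed
    in so that this sketch does not depend on how credentials are set up.
    """
    with open(audio_path, "rb") as audio:
        return client.audio.transcriptions.create(file=audio, **params)
```

Keeping the parameters in plain dictionaries makes it easy to log exactly what was sent for each channel, which helps when comparing the two outputs later.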

4. Align the GPT-4o transcript to Whisper’s timestamps using GPT-4.1. Use the following prompt:

You are an expert in analyzing and reconstructing transcripts of phone calls promoting credit services.

I will provide two texts:

1. A transcript generated using the gpt-4o-transcribe model.

2. A transcript in VTT format with timestamped utterances from whisper-1.

Your task: align the first transcript using timestamps from the second.

- If text differs due to hallucinations, use GPT-4o’s version.

- Include all content from GPT-4o.

- If a timestamp is missing, prepend the text to the next line and adjust the start time accordingly.

Return only the final VTT transcript.
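One way to package the alignment request is sketched below. It assumes the `openai` Python SDK's chat completions interface and a client created with `openai.OpenAI()`. The function names, the labels placed in front of each transcript, and the temperature of 0.0 are assumptions made for illustration; only the model name and the prompt itself come from the text above.

```python
def build_alignment_messages(system_prompt: str, gpt4o_text: str,
                             whisper_vtt: str) -> list[dict]:
    """Combine the alignment prompt with both transcripts of one channel."""
    user_content = (
        "1. Transcript from gpt-4o-transcribe:\n"
        f"{gpt4o_text.strip()}\n\n"
        "2. VTT transcript from whisper-1:\n"
        f"{whisper_vtt.strip()}"
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_content},
    ]


def align(client, system_prompt: str, gpt4o_text: str,
          whisper_vtt: str) -> str:
    """Ask GPT-4.1 to place the better text onto Whisper's timestamps.

    `client` is assumed to be an `openai.OpenAI()` instance.
    """
    response = client.chat.completions.create(
        model="gpt-4.1",
        temperature=0.0,  # assumption: deterministic output is preferable here
        messages=build_alignment_messages(system_prompt, gpt4o_text, whisper_vtt),
    )
    return response.choices[0].message.content
```

Since the model is asked to return only a VTT transcript, it is worth checking that the result still parses as VTT before passing it to the next step.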

5. Correct the Operator’s timestamps using silence periods detected via ffmpeg filters.

6. Repeat steps 4–5 for the Client’s transcript.

7. Merge the final VTT transcripts from both channels, ensuring accurate timestamp ordering and correct speaker attribution.
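The final merge can be sketched as below. This is an illustrative implementation rather than the project's code: it assumes each channel's VTT contains simple cues (a timing line followed by text), tags every cue with its speaker, and interleaves the cues by start time. The WebVTT voice tag `<v Speaker>` is used for attribution; all function names are hypothetical.

```python
import re

TIMING = re.compile(r"^\s*([\d:.]+)\s+-->\s+([\d:.]+)")


def to_seconds(timestamp: str) -> float:
    """Convert 'HH:MM:SS.mmm' or 'MM:SS.mmm' to seconds."""
    seconds = 0.0
    for part in timestamp.split(":"):
        seconds = seconds * 60 + float(part)
    return seconds


def to_timestamp(seconds: float) -> str:
    """Format seconds as 'HH:MM:SS.mmm'."""
    ms = round(seconds * 1000)
    hours, rem = divmod(ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def parse_vtt(vtt: str, speaker: str) -> list[tuple[float, float, str, str]]:
    """Return (start, end, speaker, text) for every cue that has text."""
    cues = []
    for block in re.split(r"\n\s*\n", vtt.strip()):
        lines = block.strip().splitlines()
        for i, line in enumerate(lines):
            match = TIMING.match(line)
            if match:
                text = " ".join(l.strip() for l in lines[i + 1:] if l.strip())
                if text:
                    cues.append((to_seconds(match.group(1)),
                                 to_seconds(match.group(2)), speaker, text))
                break
    return cues


def merge_channels(operator_vtt: str, client_vtt: str) -> str:
    """Interleave both channels by start time, labelling each speaker."""
    cues = parse_vtt(operator_vtt, "Operator") + parse_vtt(client_vtt, "Client")
    cues.sort(key=lambda cue: (cue[0], cue[1]))
    out = ["WEBVTT", ""]
    for start, end, speaker, text in cues:
        out.append(f"{to_timestamp(start)} --> {to_timestamp(end)}")
        out.append(f"<v {speaker}>{text}")
        out.append("")
    return "\n".join(out)
```

Because the speaker label is taken from the channel rather than inferred from the audio, attribution stays correct even when both parties talk at once; overlapping cues simply appear next to each other in the merged file.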

Conclusion

This solution is neither fast nor cheap, since it involves multiple calls to OpenAI models, but it yields nearly perfect transcripts even in cases where Whisper alone fails dramatically.

You can find the code adapted for handling Ukrainian and Russian languages here.

![[The AI Show Episode 145]: OpenAI Releases o3 and o4-mini, AI Is Causing “Quiet Layoffs,” Executive Order on Youth AI Education & GPT-4o’s Controversial Update](https://www.marketingaiinstitute.com/hubfs/ep%20145%20cover.png)

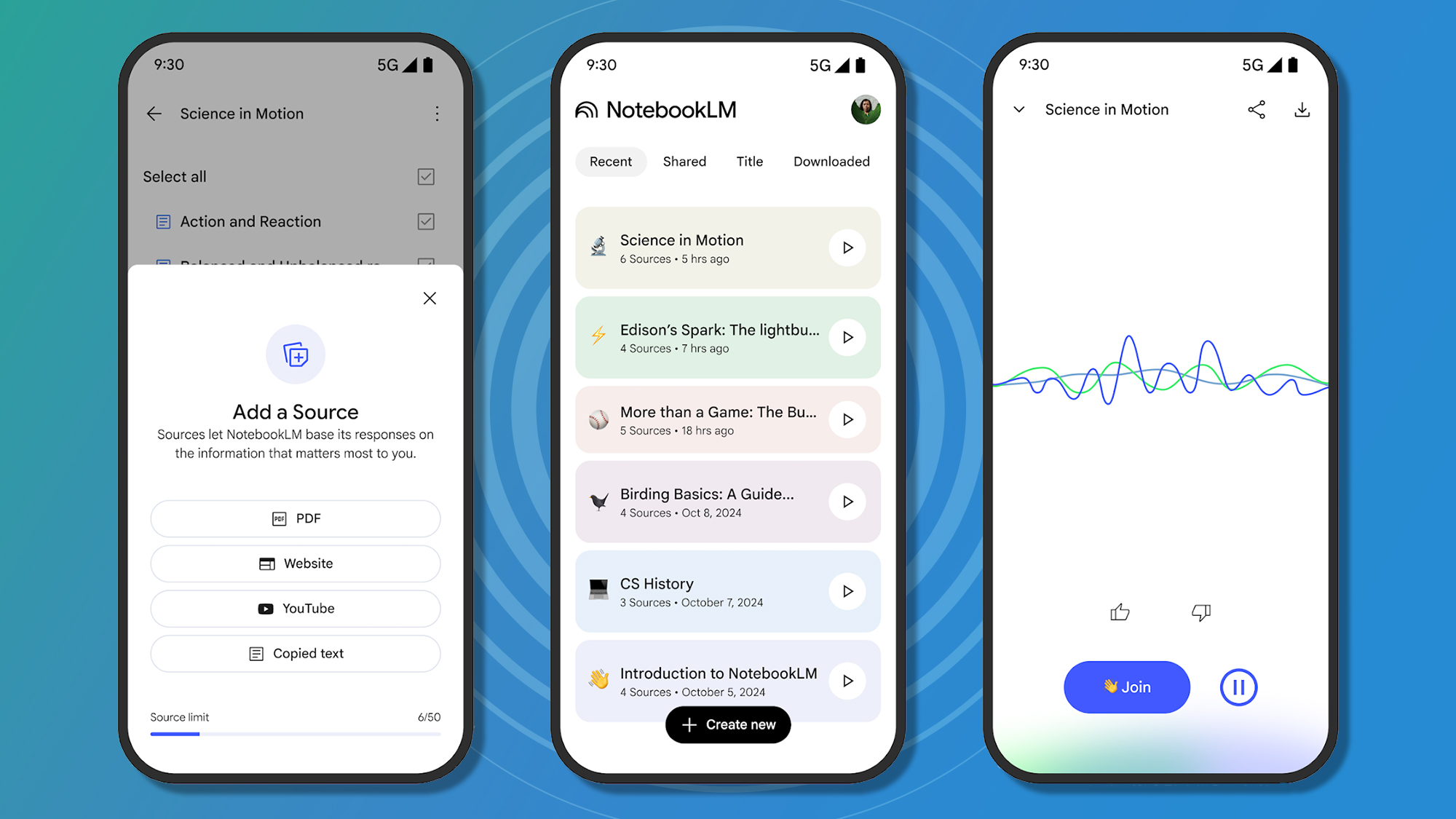

![Google reveals NotebookLM app for Android & iPhone, coming at I/O 2025 [Gallery]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2025/05/NotebookLM-Android-iPhone-6-cover.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Reports Q2 FY25 Earnings: $95.4 Billion in Revenue, $24.8 Billion in Net Income [Chart]](https://www.iclarified.com/images/news/97188/97188/97188-640.jpg)