Chunking your data for RAG


txtai is an all-in-one embeddings database for semantic search, LLM orchestration and language model workflows.

One of the major workflows in txtai is Retrieval Augmented Generation (RAG). Large Language Models (LLMs) are built to generate coherent-sounding text. That text is often factually accurate, but factual accuracy is not what they're built for. RAG helps by injecting smaller pieces of relevant knowledge into an LLM prompt, which increases the overall accuracy of responses. The R in RAG is very important.
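To make the "inject knowledge into the prompt" step concrete, here is a minimal sketch of the augmentation half of RAG: retrieved chunks are pasted into the prompt as context ahead of the question. The `build_prompt` helper and its template wording are hypothetical illustrations, not part of txtai's API.

```python
def build_prompt(question: str, chunks: list) -> str:
    """Hypothetical helper: inject retrieved chunks into an LLM prompt."""
    # Number each retrieved chunk so the model can tell them apart
    context = "\n".join(f"[{i + 1}] {chunk}" for i, chunk in enumerate(chunks))
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
    )


# In a real pipeline, the chunks would come from the retrieval step
prompt = build_prompt(
    "What is txtai?",
    ["txtai is an all-in-one embeddings database for semantic search."],
)
print(prompt)
```

The quality of the answer is bounded by the quality of those chunks, which is why the rest of this article focuses on extracting, chunking and indexing them well.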

This article will demonstrate how to extract, chunk and index text to support retrieval operations for RAG.

Install dependencies

Install txtai and all dependencies.

```bash
pip install txtai[pipeline-text]
```

Data chunking and indexing

Let's dive right in and keep this example simple. The next section creates a Textractor pipeline and an Embeddings database.

The Textractor extracts chunks of text from files, and the Embeddings database takes those chunks and builds a searchable index. We'll use a late chunker backed by Chonkie.
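Late chunking reverses the usual order of operations. A conventional chunker embeds each chunk in isolation, so a chunk loses context that lives elsewhere in the document. Late chunking first runs a long-context encoder over the whole document to get token-level vectors, then splits those vectors into chunk spans and mean-pools each span. The toy sketch below shows only that pooling step. It assumes the token embeddings already exist (the numbers are made up) and that chunk boundaries are given as `[start, end)` token spans; it illustrates the general technique, not Chonkie's or txtai's implementation.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def late_chunk_pool(
    token_embeddings: Sequence[Vector], spans: Sequence[Tuple[int, int]]
) -> List[Vector]:
    """Mean-pool token embeddings over each [start, end) chunk span.

    Assumes token_embeddings came from a (hypothetical) long-context encoder
    run over the *entire* document, so each token vector carries
    document-wide context before the text is split into chunks.
    """
    pooled = []
    for start, end in spans:
        window = token_embeddings[start:end]
        if not window:
            raise ValueError(f"empty span ({start}, {end})")
        dims = len(window[0])
        # Average each dimension across the tokens in this chunk
        pooled.append([sum(vec[d] for vec in window) / len(window) for d in range(dims)])
    return pooled


# Toy data: 4 tokens with 2-dimensional embeddings, split into two chunks
tokens = [[1.0, 0.0], [3.0, 2.0], [0.0, 4.0], [2.0, 2.0]]
print(late_chunk_pool(tokens, [(0, 2), (2, 4)]))  # [[2.0, 1.0], [1.0, 3.0]]
```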

Then, we'll build an indexing workflow that streams chunks from two sources: the txtai GitHub page and a PDF on arXiv.

```python
from txtai import Embeddings
from txtai.pipeline import Textractor

# Text extraction pipeline with late chunking via Chonkie
textractor = Textractor(chunker="late")
embeddings = Embeddings(content=True)


def stream():
    urls = ["https://github.com/neuml/txtai", "https://arxiv.org/pdf/2005.11401"]
    for x, url in enumerate(urls):
        chunks = textractor(url)

        # Add all chunks - use the same document id for each chunk
        for chunk in chunks:
            yield x, chunk

        # Add the document metadata with the same document id
        # Can be any metadata. Can also be the entire document.
        yield x, {"url": url}


# Index the chunks and metadata
embeddings.index(stream())
```

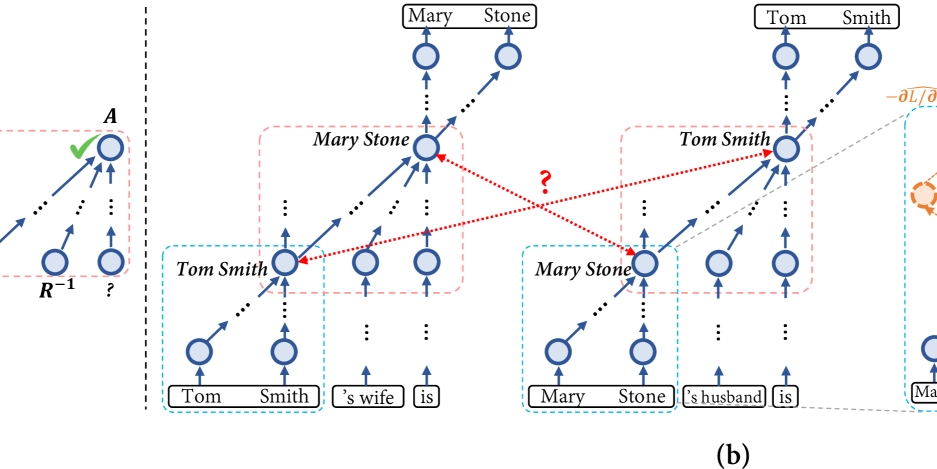
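The generator pattern can be checked offline without downloading or parsing anything. In this sketch, a hypothetical `fake_textractor` returns canned chunks in place of Textractor, so you can see exactly which `(id, data)` pairs the indexing step receives: every chunk from one source, followed by a single metadata dict, all under the same logical id.

```python
def fake_textractor(url):
    """Hypothetical stand-in for Textractor: returns canned chunks per URL."""
    return [f"{url} chunk 1", f"{url} chunk 2"]


def stream(urls, extract=fake_textractor):
    for x, url in enumerate(urls):
        # Every chunk from the same source shares logical id x
        for chunk in extract(url):
            yield x, chunk
        # Then one metadata dict under the same id
        yield x, {"url": url}


rows = list(stream(["a.pdf", "b.pdf"]))
for row in rows:
    print(row)
```

The first three rows printed are `(0, 'a.pdf chunk 1')`, `(0, 'a.pdf chunk 2')` and `(0, {'url': 'a.pdf'})`, then the same pattern repeats with id `1`.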

A key element of txtai that is commonly misunderstood is how best to store chunks of data and join them back to the main document. txtai allows reusing the same logical id multiple times.

Behind the scenes, each chunk gets its own unique index id. The backend database stores chunks in a table called sections and the associated document data in a table called documents. This has been the case as far back as txtai 4.0. txtai can also store associated binary data in a table called objects. It's important to note that each associated document or object is stored only once, no matter how many chunks share its id.
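The relationship between chunks and documents can be modeled with a small SQLite sketch. The schema here is a hypothetical simplification (txtai's actual tables have more columns and are managed for you); it only shows the idea: each chunk row gets a unique `indexid` while keeping the shared logical `id`, the document data is stored once per id, and a join puts the `url` back on every chunk.

```python
import json
import sqlite3

# Hypothetical, simplified schema: models only the chunk -> document link
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE sections (indexid INTEGER PRIMARY KEY, id TEXT, text TEXT)")
db.execute("CREATE TABLE documents (id TEXT PRIMARY KEY, data TEXT)")

# Same (id, data) shape as the stream() generator: chunks, then metadata
rows = [
    (0, "txtai chunk 1"),
    (0, "txtai chunk 2"),
    (0, {"url": "https://github.com/neuml/txtai"}),
    (1, "arxiv chunk 1"),
    (1, {"url": "https://arxiv.org/pdf/2005.11401"}),
]

indexid = 0
for doc_id, data in rows:
    if isinstance(data, dict):
        # Document data is stored once per logical id
        db.execute(
            "INSERT OR REPLACE INTO documents VALUES (?, ?)",
            (str(doc_id), json.dumps(data)),
        )
    else:
        # Each chunk gets its own unique index id but keeps the shared logical id
        db.execute("INSERT INTO sections VALUES (?, ?, ?)", (indexid, str(doc_id), data))
        indexid += 1

# Join each chunk back to its parent document's metadata
query = """
SELECT s.indexid, s.id, d.data, s.text
FROM sections s LEFT JOIN documents d ON d.id = s.id
ORDER BY s.indexid
"""
results = [
    (iid, uid, json.loads(data)["url"] if data else None, text)
    for iid, uid, data, text in db.execute(query)
]
for row in results:
    print(row)
```

Running it prints three chunk rows, each carrying its parent's url, while the documents table holds only two rows.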

To illustrate, let's look at the first 20 rows of the txtai embeddings database built above.

```python
for x in embeddings.search("SELECT indexid, id, url, text from txtai", 20):
    print(x)
```

{'indexid': 0, 'id': '0', 'url': 'https://github.com/neuml/txtai', 'text': '**GitHub - neuml/txtai:

![[The AI Show Episode 142]: ChatGPT’s New Image Generator, Studio Ghibli Craze and Backlash, Gemini 2.5, OpenAI Academy, 4o Updates, Vibe Marketing & xAI Acquires X](https://www.marketingaiinstitute.com/hubfs/ep%20142%20cover.png)

.jpg?#)

![YouTube Announces New Creation Tools for Shorts [Video]](https://www.iclarified.com/images/news/96923/96923/96923-640.jpg)

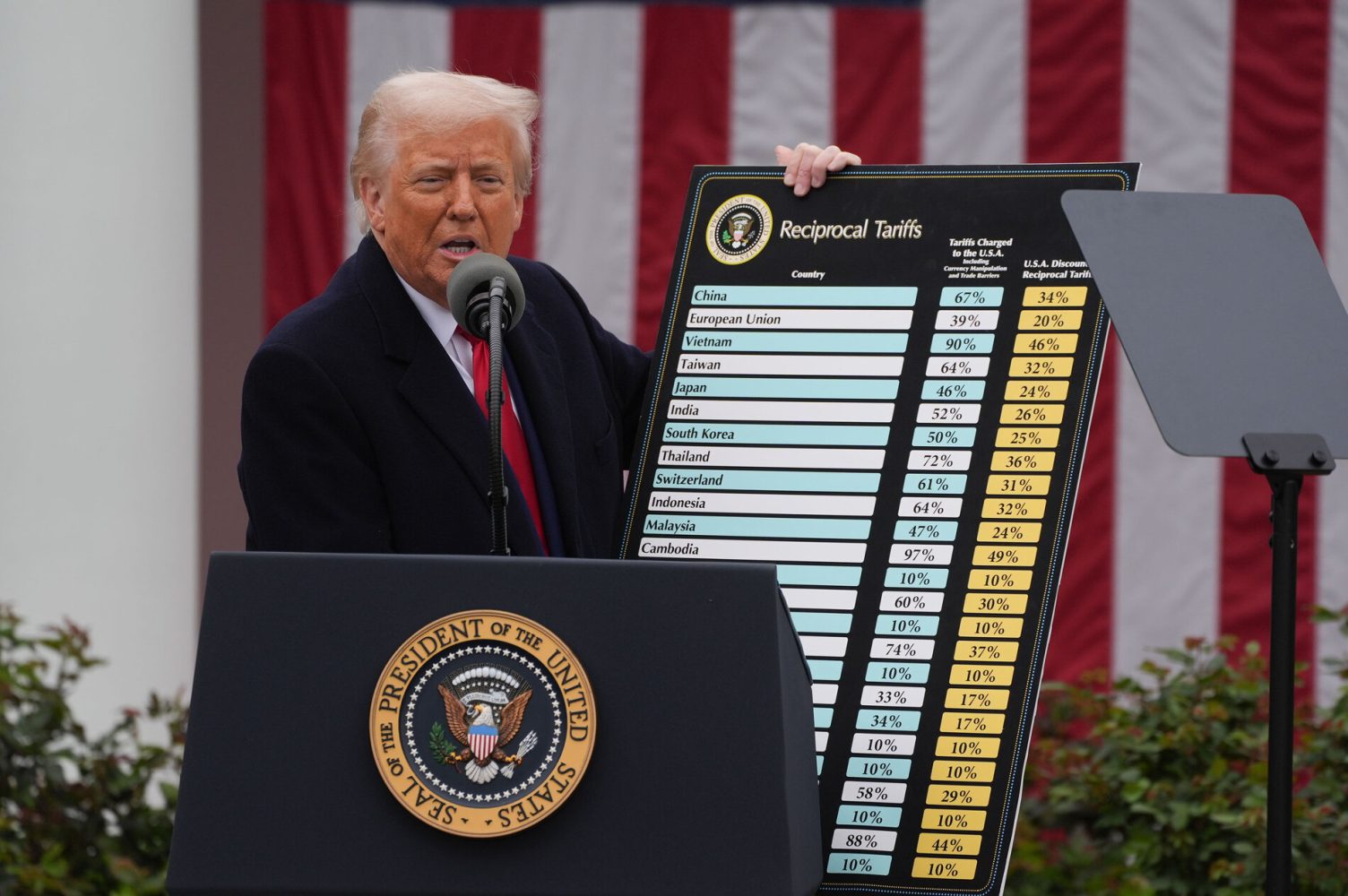

![Apple Faces New Tariffs but Has Options to Soften the Blow [Kuo]](https://www.iclarified.com/images/news/96921/96921/96921-640.jpg)