Building an AI Image Extender with Next.js: Enhancing Images Using AI and React


Summary

In this guide, we’ll walk through building an AI Image Extender—a tool that takes an input image, uses AI-based outpainting to expand its canvas, and returns an enhanced result—all within a Next.js + React application. You’ll learn how to scaffold a Next.js project, integrate Tailwind CSS for styling, build a user-friendly upload interface, implement a serverless API route to handle AI calls, connect to your chosen AI outpainting service, and deploy the finished app. We’ll also cover error handling, loading states, and monitoring with Sentry and Google Analytics so you have a production-ready template for your own AI-driven image tools.

Technology Stack

- Next.js (v13+): file-based routing, built-in API routes, and server-side rendering or static optimization.

- React: composes the interactive UI components.

- Tailwind CSS: utility-first styling; unused classes are purged from the production CSS, keeping the bundle small.

- AI outpainting API: OpenAI’s image APIs, Stability AI, or a custom model endpoint.

- Sentry: runtime error tracking.

- Google Analytics 4 (GA4): measures user engagement and API usage.

- Vercel (or Netlify): recommended for zero-config deployment of Next.js projects.

1. Setting Up a Next.js Project

First, scaffold the project:

```bash
npx create-next-app@latest ai-image-extender
cd ai-image-extender
```

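create-next-app asks a few setup questions, including whether to use the App Router. The code in this guide uses the Pages Router (pages/), so answer No to follow along exactly; with the App Router, the same pieces live under app/ instead. The project root now contains, among other files: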

```
app/              ← if using the App Router
pages/            ← if using the Pages Router
public/
styles/
next.config.js    (next.config.mjs in newer templates)
package.json
```

The API route built in step 4 will live at pages/api/extend.js (or app/api/extend/route.js with the App Router, which uses a different handler signature).

2. Adding Tailwind CSS for Styling

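Install Tailwind CSS v3 and its peer dependencies (Tailwind v4 changed the setup and no longer ships the init command used here):

```bash
npm install -D tailwindcss@3 postcss autoprefixer
npx tailwindcss init -p
```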

Configure tailwind.config.js:

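```js
/** @type {import('tailwindcss').Config} */
module.exports = {
  // Every folder that contains Tailwind class names must be listed here,
  // including pages/, since this guide uses the Pages Router
  content: [
    './pages/**/*.{js,ts,jsx,tsx}',
    './app/**/*.{js,ts,jsx,tsx}',
    './components/**/*.{js,ts,jsx,tsx}',
  ],
  theme: { extend: {} },
  plugins: [],
};
```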

Add the Tailwind directives to styles/globals.css (the Pages Router template already imports this file in pages/_app.js):

```css
@tailwind base;
@tailwind components;
@tailwind utilities;
```

Now you can use classes like p-4, rounded-2xl, and shadow-lg throughout.

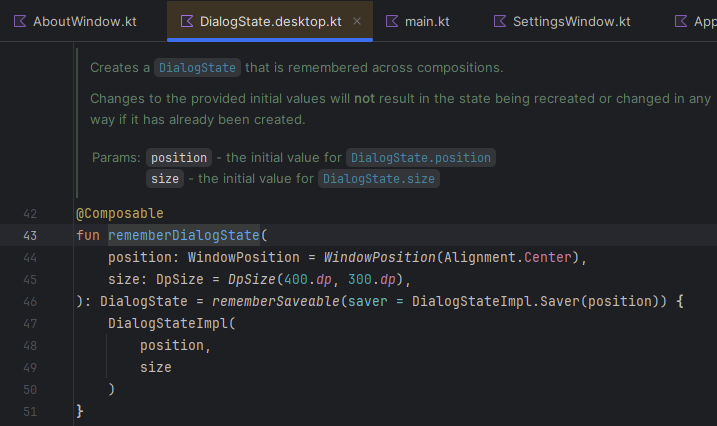

3. Building the User Interface with React

Create a component to handle file uploads, previewing, and triggering the extend action.

```jsx
// components/ImageUploader.js
'use client'; // Required only when rendered from the App Router; harmless under pages/

import { useEffect, useState } from 'react';

export default function ImageUploader() {
  const [file, setFile] = useState(null);
  const [previewUrl, setPreviewUrl] = useState('');
  const [extendedUrl, setExtendedUrl] = useState('');
  const [loading, setLoading] = useState(false);

  // Release the previous object URL when the preview changes or the component unmounts
  useEffect(() => {
    return () => {
      if (previewUrl) URL.revokeObjectURL(previewUrl);
    };
  }, [previewUrl]);

  const handleUpload = (e) => {
    const chosen = e.target.files?.[0];
    if (!chosen) return; // user cancelled the file dialog
    setFile(chosen);
    setPreviewUrl(URL.createObjectURL(chosen));
    setExtendedUrl('');
  };

  const handleExtend = async () => {
    if (!file) return;
    setLoading(true);

    const form = new FormData();
    form.append('image', file); // field name must match files.image on the server

    try {
      const res = await fetch('/api/extend', { method: 'POST', body: form });
      if (!res.ok) throw new Error(`Request failed with status ${res.status}`);
      const { extendedImageUrl } = await res.json();
      setExtendedUrl(extendedImageUrl);
    } catch (err) {
      console.error(err);
      alert('Failed to extend image. Please try again.');
    } finally {
      setLoading(false);
    }
  };

  return (
    <div className="max-w-md mx-auto space-y-4 p-6 bg-white rounded-2xl shadow-lg">
      <input type="file" accept="image/*" onChange={handleUpload} />
      {previewUrl && <img src={previewUrl} alt="Preview" className="rounded-xl" />}
      <button
        onClick={handleExtend}
        disabled={!file || loading}
        className="w-full py-2 bg-blue-600 text-white rounded-lg hover:bg-blue-700 disabled:opacity-50"
      >
        {loading ? 'Extending…' : 'Extend Image'}
      </button>
      {extendedUrl && <img src={extendedUrl} alt="Extended" className="rounded-xl mt-4" />}
    </div>
  );
}
```

Then import this into your page (e.g. pages/index.js):

```jsx
import ImageUploader from '../components/ImageUploader';

export default function Home() {
  return (
    <main className="min-h-screen bg-gray-50 flex items-center justify-center">
      <ImageUploader />
    </main>
  );
}
```
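The input's accept="image/*" attribute only filters the file picker; a user can still select another file type, and nothing limits the upload size. A small client-side guard gives faster feedback before the request is sent. This is a sketch: validateImageFile is a hypothetical helper, and the 4 MB default is an assumption chosen to stay under typical serverless request-body limits.

```javascript
// Hypothetical client-side guard: reject non-images and oversized files
// before they reach /api/extend. The 4 MB default is an assumed limit.
const DEFAULT_MAX_BYTES = 4 * 1024 * 1024;

function validateImageFile(file, maxBytes = DEFAULT_MAX_BYTES) {
  if (!file) {
    return { ok: false, error: 'Please choose an image first.' };
  }
  // File.type is the browser-reported MIME type, e.g. "image/png"
  if (!file.type || !file.type.startsWith('image/')) {
    return { ok: false, error: 'Only image files are supported.' };
  }
  if (file.size > maxBytes) {
    const limitMb = (maxBytes / (1024 * 1024)).toFixed(1);
    return { ok: false, error: `Image must be smaller than ${limitMb} MB.` };
  }
  return { ok: true, error: null };
}
```

One way to use it is at the top of handleUpload: call validateImageFile(chosen), alert the returned error, and return early when ok is false. The API route should still enforce its own limits, since client-side checks are easy to bypass.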

4. Implementing the AI Outpainting Backend

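Next, create an API route that receives the upload, forwards it to the AI service, and returns the result. The outpainting URL and request format below are placeholders; substitute whatever your provider documents.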

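```js
// pages/api/extend.js
import fs from 'fs/promises';
import formidable from 'formidable';

// Disable Next.js's built-in body parser so formidable can read the multipart stream
export const config = { api: { bodyParser: false } };

// Wrap formidable's callback API in a promise (works with formidable v2 and v3)
function parseForm(req) {
  const form = formidable({ maxFileSize: 10 * 1024 * 1024 }); // adjust to your platform's limits
  return new Promise((resolve, reject) => {
    form.parse(req, (err, fields, files) => (err ? reject(err) : resolve(files)));
  });
}

export default async function handler(req, res) {
  if (req.method !== 'POST') {
    res.setHeader('Allow', 'POST');
    return res.status(405).json({ error: 'Method not allowed' });
  }

  try {
    const files = await parseForm(req);
    // formidable v3 returns an array per field; v2 returns a single file object
    const image = Array.isArray(files.image) ? files.image[0] : files.image;
    if (!image) return res.status(400).json({ error: 'No image uploaded' });

    const imageBuffer = await fs.readFile(image.filepath);

    // Placeholder endpoint. Next.js provides fetch on the server, so node-fetch isn't needed.
    const aiRes = await fetch('https://api.example.com/v1/outpaint', {
      method: 'POST',
      headers: {
        Authorization: `Bearer ${process.env.AI_API_KEY}`,
        'Content-Type': image.mimetype || 'application/octet-stream',
      },
      body: imageBuffer,
    });
    if (!aiRes.ok) throw new Error(`AI service responded with ${aiRes.status}`);

    // Convert to base64 for a quick demo, or upload to storage in production
    const arrayBuffer = await aiRes.arrayBuffer();
    const base64 = Buffer.from(arrayBuffer).toString('base64');
    const mime = aiRes.headers.get('content-type') || 'image/png';
    const extendedImageUrl = `data:${mime};base64,${base64}`;

    return res.status(200).json({ extendedImageUrl });
  } catch (err) {
    console.error(err);
    return res.status(500).json({ error: 'Failed to extend image' });
  }
}
```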
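The route above sends the original image unchanged, which assumes the provider decides how far to extend it. Many outpainting APIs instead expect the original to be placed on a larger canvas, with the empty border marked as the region to fill. The sketch below computes that geometry. The expandCanvas name, the convention of per-side padding as a fraction of the original size, and the default rounding to multiples of 64 (some providers require dimensions in such multiples) are all assumptions; check your provider's documentation.

```javascript
// Compute a padded canvas for outpainting: how large the new canvas must be
// and where the original image sits on it. Padding is given per side as a
// fraction of the original width/height (an assumed convention).
function expandCanvas(width, height, pad = {}, multiple = 64) {
  const { left = 0, right = 0, top = 0, bottom = 0 } = pad;

  // Raw padding in pixels for each side
  const padLeft = Math.round(width * left);
  const padRight = Math.round(width * right);
  const padTop = Math.round(height * top);
  const padBottom = Math.round(height * bottom);

  // Round the canvas up to the required multiple; the extra pixels end up on
  // the right/bottom edges, so the original's offset is unchanged
  const roundUp = (n) => Math.ceil(n / multiple) * multiple;
  const canvasWidth = roundUp(width + padLeft + padRight);
  const canvasHeight = roundUp(height + padTop + padBottom);

  return {
    canvasWidth,
    canvasHeight,
    // Top-left corner where the original image is drawn on the canvas
    offsetX: padLeft,
    offsetY: padTop,
    // Everything outside this rectangle is the region the model should fill
    keep: { x: padLeft, y: padTop, width, height },
  };
}
```

For example, expandCanvas(1000, 600, { left: 0.25, right: 0.25 }) describes a 1536 × 640 canvas with the original drawn at (250, 0). You would then draw the upload at that offset on a transparent canvas (for example with the browser Canvas API, or an image library such as sharp on the server) and send it, plus a mask if your provider requires one.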

Notes

- Use streaming or upload to S3/Cloudinary in production instead of data URLs.

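- Keep your API key in process.env and set it through Vercel’s environment variables; never give it a NEXT_PUBLIC_ prefix, because Next.js inlines those variables into the browser bundle.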
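Data URLs are convenient for a demo but costly: base64 encodes every 3 bytes as 4 characters, so the JSON response is about a third larger than the image itself. This sketch estimates the data URL length and flags images that would exceed a response-size limit. The helper names are hypothetical, and the roughly 4.5 MB default is an assumption based on Vercel's published payload limit for functions; verify the current figure for your platform and plan.

```javascript
// Base64 turns every 3 input bytes into 4 output characters (padding the
// final group), so the encoded length is 4 * ceil(n / 3).
function base64Length(byteLength) {
  return 4 * Math.ceil(byteLength / 3);
}

// Length of `data:<mime>;base64,<payload>` for an image of byteLength bytes
function dataUrlLength(byteLength, mime = 'image/png') {
  return `data:${mime};base64,`.length + base64Length(byteLength);
}

// Assumed limit of roughly 4.5 MB (check your platform's documentation)
const DEFAULT_LIMIT_BYTES = 4.5 * 1024 * 1024;

// True if returning the image inline would likely exceed the limit.
// JSON quoting and the property name add a few more bytes, ignored here.
function shouldUploadToStorage(byteLength, mime = 'image/png', limit = DEFAULT_LIMIT_BYTES) {
  return dataUrlLength(byteLength, mime) > limit;
}
```

If shouldUploadToStorage returns true for the generated image, upload it to your storage provider and return its URL instead of a data URL.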

5. Monitoring & Analytics

Sentry

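Install the SDK. Sentry’s setup wizard (npx @sentry/wizard@latest -i nextjs) can do this for you, and it also generates the client and server config files and wraps next.config.js:

```bash
npm install @sentry/nextjs
```

If you set it up by hand, call Sentry.init in the config files described in Sentry’s Next.js docs rather than in pages/_app.js. Browser code can only read environment variables prefixed with NEXT_PUBLIC_, so expose the DSN under that name:

```js
// sentry.client.config.js (exact file names vary by SDK version; follow Sentry's docs)
import * as Sentry from '@sentry/nextjs';

Sentry.init({ dsn: process.env.NEXT_PUBLIC_SENTRY_DSN });
```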
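Initializing the SDK is not always enough for API routes: if a handler catches its own errors and responds with a 500, automatic instrumentation may never see them. One option is to wrap the handler so every thrown error is reported before it becomes a JSON error response. In this sketch the reporter is passed in, so in the real route you would supply Sentry.captureException (a real @sentry/nextjs export); withErrorReporting itself is a hypothetical helper.

```javascript
// Hypothetical wrapper for a Pages Router API handler: any error thrown by
// `handler` is passed to `report` (e.g. Sentry.captureException) and turned
// into a JSON 500 response instead of an unhandled rejection.
function withErrorReporting(handler, report) {
  return async function wrapped(req, res) {
    try {
      return await handler(req, res);
    } catch (err) {
      report(err);
      // Only respond if the handler hasn't already started a response
      if (!res.headersSent) {
        res.status(500).json({ error: 'Internal server error' });
      }
    }
  };
}
```

In pages/api/extend.js this would read export default withErrorReporting(handler, Sentry.captureException), with the handler left free to throw instead of catching everything itself.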

Google Analytics (GA4)

Add the tag snippet in pages/_document.js or via Next.js’s built-in next/script:

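```jsx
// pages/_document.js
import { Html, Head, Main, NextScript } from 'next/document';

// Your GA4 measurement ID, e.g. "G-XXXXXXXXXX" (NEXT_PUBLIC_GA_ID is an assumed variable name)
const GA_ID = process.env.NEXT_PUBLIC_GA_ID;

export default function Document() {
  return (
    <Html lang="en">
      <Head>
        {/* GA4 snippet: loads gtag.js and configures your property */}
        <script async src={`https://www.googletagmanager.com/gtag/js?id=${GA_ID}`} />
        <script
          dangerouslySetInnerHTML={{
            __html: `
              window.dataLayer = window.dataLayer || [];
              function gtag(){dataLayer.push(arguments);}
              gtag('js', new Date());
              gtag('config', '${GA_ID}');
            `,
          }}
        />
      </Head>
      <body>
        <Main />
        <NextScript />
      </body>
    </Html>
  );
}
```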

To track button clicks, call window.gtag('event', 'your_event_name', { ...params }) inside React handlers if desired; gtag.js has no gtag.event() method.
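Because the GA script loads asynchronously and never exists during server rendering, calling gtag directly from a component can throw. A small guard avoids that. This is a sketch: trackEvent and the extend_image event name are hypothetical, while the gtag('event', name, params) call shape is the standard gtag.js form.

```javascript
// Hypothetical helper: send a GA4 event through gtag.js if it has loaded.
// On the server (no `window`) or before the snippet runs, it does nothing
// and returns false, so it is safe to call from any React handler.
function trackEvent(name, params = {}) {
  if (typeof window === 'undefined' || typeof window.gtag !== 'function') {
    return false;
  }
  window.gtag('event', name, params);
  return true;
}
```

For example, calling trackEvent('extend_image', { file_type: file.type }) at the start of handleExtend records each extend attempt along with the uploaded MIME type.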

6. Deployment

- Push your repo to GitHub.

- Connect your project to Vercel (https://vercel.com).

- In Vercel’s dashboard, add your environment variables (AI_API_KEY, your Sentry DSN, etc.).

- Deploy! Vercel detects Next.js and handles builds automatically.
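A missing variable is easy to overlook after deployment: with no AI_API_KEY set, the route would send the header "Bearer undefined" and fail only when the provider rejects it. A small check makes the misconfiguration obvious. This is a sketch; missingEnvVars is a hypothetical helper.

```javascript
// Hypothetical startup check: return the names of required environment
// variables that are missing or blank, so a misconfigured deployment
// fails loudly instead of calling the AI provider with "Bearer undefined".
function missingEnvVars(required, env = process.env) {
  return required.filter((name) => !env[name] || env[name].trim() === '');
}
```

For example, call missingEnvVars(['AI_API_KEY']) at the top of the API handler and log an error (and return a 500) when the result is non-empty.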

Conclusion

You now have a fully functional AI Image Extender built with Next.js and React. This architecture scales: swap out the AI provider, enhance the UI with more controls (canvas crop, multiple directions), or integrate user accounts for saved images. With Tailwind for rapid styling, Next.js API routes for serverless scaling, and monitoring via Sentry/GA, you’re set to ship production-grade AI features on the web. Happy coding!
