Building a Distributed Microservice in Rust

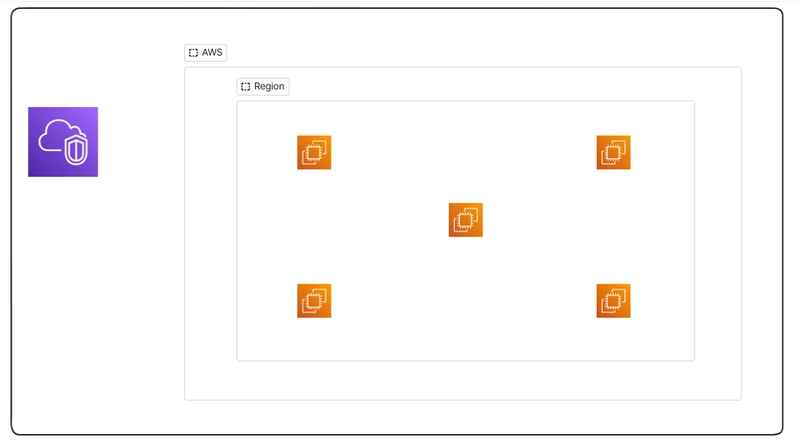


Overview

This project demonstrates how to split CPU-bound tasks (like prime factorization) across multiple Rust microservices:

- Load Balancer (Rust + Actix-Web)
  - Receives requests on http://localhost:9000/compute
  - Forwards them to healthy worker nodes
- Master Node (Optional)
  - If you have large tasks, you can chunk them out to multiple workers simultaneously
  - Listens on http://localhost:8081/distribute
- Worker Nodes (Rust + Rayon)
  - Actually perform the factorization (or any heavy logic)
  - Listen on http://localhost:8080/compute (and more if you spin up more workers)
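To make the master's "chunk it out" role concrete, here is a minimal sketch of chunked fan-out using only the Rust standard library. It is an illustration under assumptions, not the code in prime_cluster: `chunk_for_workers` and `distribute` are hypothetical names, and scoped threads stand in for the HTTP requests a real master would send to each worker.

```rust
use std::thread;

/// Split `numbers` into at most `workers` contiguous chunks of near-equal size.
/// Returns no chunks when there is nothing to split or no worker to send to.
fn chunk_for_workers(numbers: &[u64], workers: usize) -> Vec<Vec<u64>> {
    if numbers.is_empty() || workers == 0 {
        return Vec::new();
    }
    // Ceiling division so every number lands in exactly one chunk.
    let size = (numbers.len() + workers - 1) / workers;
    numbers.chunks(size).map(|c| c.to_vec()).collect()
}

/// Hypothetical fan-out: run `job` on every chunk concurrently (one scoped
/// thread per simulated worker), then concatenate the results in chunk order
/// so the output lines up with the input.
fn distribute<T, F>(numbers: &[u64], workers: usize, job: F) -> Vec<T>
where
    T: Send,
    F: Fn(&[u64]) -> Vec<T> + Sync,
{
    let chunks = chunk_for_workers(numbers, workers);
    let job = &job; // share one reference to the job with every thread
    thread::scope(|s| {
        let handles: Vec<_> = chunks
            .iter()
            .map(|chunk| s.spawn(move || job(chunk.as_slice())))
            .collect();
        // Joining in spawn order preserves the original ordering of results.
        handles
            .into_iter()
            .flat_map(|h| h.join().expect("worker thread panicked"))
            .collect()
    })
}
```

With 10 numbers and 3 workers, `chunk_for_workers` yields chunks of 4, 4 and 2 numbers, and `distribute` hands back one result per input number in the original order.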

Why This Is Cool

- Scalable: Easily add more workers for heavier traffic.

- Fault Tolerant: If a worker dies, the load balancer reroutes around it.

- Modular: Each piece (LB, master, worker) can be tested and deployed independently.
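The fault-tolerance point comes down to the balancer skipping workers it knows are down. Below is a minimal sketch of health-aware round-robin selection. It assumes a separate health checker (not shown) flips each worker's flag. `Balancer`, `Worker`, `set_healthy` and `pick` are hypothetical names, not the project's Actix-Web code.

```rust
use std::sync::atomic::{AtomicBool, AtomicUsize, Ordering};

/// One backend the balancer can forward to (hypothetical struct).
struct Worker {
    url: String,
    healthy: AtomicBool,
}

/// Round-robin picker that skips workers currently marked unhealthy.
struct Balancer {
    workers: Vec<Worker>,
    next: AtomicUsize,
}

impl Balancer {
    fn new(urls: &[&str]) -> Self {
        let workers = urls
            .iter()
            .map(|u| Worker { url: u.to_string(), healthy: AtomicBool::new(true) })
            .collect();
        Balancer { workers, next: AtomicUsize::new(0) }
    }

    /// Called by a health checker (or after a failed forward) to flip a worker's state.
    fn set_healthy(&self, idx: usize, healthy: bool) {
        self.workers[idx].healthy.store(healthy, Ordering::Relaxed);
    }

    /// Return the URL of the next healthy worker, or None if all are down.
    fn pick(&self) -> Option<&str> {
        let n = self.workers.len();
        if n == 0 {
            return None;
        }
        // Advance the shared cursor once, then scan every worker from there,
        // so a healthy worker is always found if one exists.
        let start = self.next.fetch_add(1, Ordering::Relaxed) % n;
        (0..n)
            .map(|k| &self.workers[(start + k) % n])
            .find(|w| w.healthy.load(Ordering::Relaxed))
            .map(|w| w.url.as_str())
    }
}
```

Using atomics lets many request handlers call `pick` at once without a lock. When `pick` returns `None`, the balancer would need to answer with an error instead of forwarding.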

Quickstart

1. Clone the Repository

git clone https://github.com/copyleftdev/prime_cluster.git

cd prime_cluster

2. Use the Makefile

We’ve included a Makefile so you can run everything with quick commands.

Key Makefile Targets

- make build: Builds all components (worker, master, load_balancer)
- make run-all: Runs everything locally in separate tmux panes
- make docker-build: Builds Docker images for each service
- make docker-run: Spins them up via Docker Compose

3. Local Setup (No Docker)

- Build Everything

make build

This runs cargo build --release for each microservice.

- Run

- Option A: Individually, each in its own terminal:

make run-load-balancer

make run-master

make run-worker

or

- Option B: All Together (requires tmux):

make run-all

- At this point:

  - Load Balancer → http://localhost:9000/compute
  - Master → http://localhost:8081/distribute
  - Worker → http://localhost:8080/compute

- Test a Request

curl -X POST -H "Content-Type: application/json" \
  -d '{"numbers":[1234567, 9876543]}' \
  http://localhost:9000/compute

The load balancer picks a healthy worker, which returns the prime factors.
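On the worker side, the heavy lifting can be plain trial division applied to each number in the request. The sketch below is an assumption about how that might look, not the project's code. `factorize` and `factorize_all` are hypothetical names. Because it sticks to the standard library, it uses scoped threads where the real worker uses Rayon.

```rust
use std::thread;

/// Trial-division prime factorization. Returns the prime factors of `n` in
/// ascending order, with repeats (12 -> [2, 2, 3]); 0 and 1 yield an empty Vec.
fn factorize(mut n: u64) -> Vec<u64> {
    let mut factors = Vec::new();
    if n < 2 {
        return factors;
    }
    // Strip out factors of 2 first so the main loop can step over odd numbers only.
    while n % 2 == 0 {
        factors.push(2);
        n /= 2;
    }
    let mut d = 3;
    // Divisors only need checking up to sqrt(n); `d <= n / d` avoids overflowing d * d.
    while d <= n / d {
        while n % d == 0 {
            factors.push(d);
            n /= d;
        }
        d += 2;
    }
    // Whatever is left above 1 has no divisor <= its square root, so it is prime.
    if n > 1 {
        factors.push(n);
    }
    factors
}

/// Factorize every number on its own scoped thread, keeping results in input
/// order. A std-only stand-in for Rayon's `par_iter().map(...)`.
fn factorize_all(numbers: &[u64]) -> Vec<Vec<u64>> {
    thread::scope(|s| {
        let handles: Vec<_> = numbers
            .iter()
            .map(|&n| s.spawn(move || factorize(n)))
            .collect();
        handles
            .into_iter()
            .map(|h| h.join().expect("factorization thread panicked"))
            .collect()
    })
}
```

For the request above, `factorize(1234567)` returns `[127, 9721]`. With Rayon as a dependency, `factorize_all` could shrink to `numbers.par_iter().map(|&n| factorize(n)).collect()`. That version also reuses Rayon's thread pool instead of spawning one thread per number.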

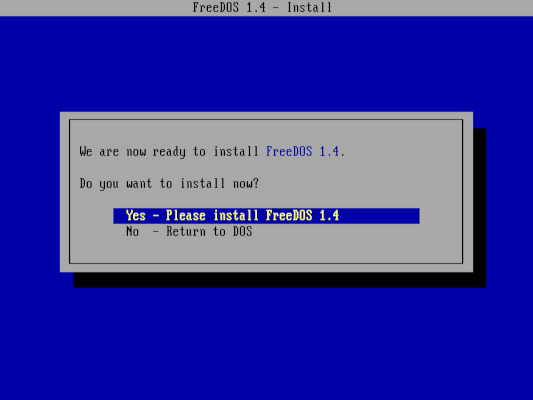

4. Docker Approach

If you prefer Docker:

- Build Images

make docker-build

- Run Services

make docker-run

- Stop

make docker-stop

Afterward, the same endpoints apply (:9000 for LB, :8081 for Master, :8080 for Worker).
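Before sending traffic, you can confirm that all three services are listening with a TCP connect check against the ports above. This works whether they were started locally or via Docker. The helper below is hypothetical and not part of the repository. It only proves that a port accepts connections, not that /compute or /distribute respond correctly. It uses 127.0.0.1 because Rust's `SocketAddr` parsing does not resolve hostnames like localhost.

```rust
use std::net::{SocketAddr, TcpStream};
use std::time::Duration;

/// Returns true if something accepts TCP connections at `addr` within `timeout`.
/// `addr` must be a literal IP:port; hostnames fail to parse and return false.
fn port_open(addr: &str, timeout: Duration) -> bool {
    match addr.parse::<SocketAddr>() {
        Ok(sock) => TcpStream::connect_timeout(&sock, timeout).is_ok(),
        Err(_) => false,
    }
}

/// The three default ports listed in this guide: LB, master, worker.
const SERVICES: [(&str, &str); 3] = [
    ("load balancer", "127.0.0.1:9000"),
    ("master", "127.0.0.1:8081"),
    ("worker", "127.0.0.1:8080"),
];

/// Print one status line per service; returns how many were reachable.
fn check_services(timeout: Duration) -> usize {
    let mut up = 0;
    for (name, addr) in SERVICES {
        let ok = port_open(addr, timeout);
        println!("{name:<14} {addr:<16} {}", if ok { "up" } else { "DOWN" });
        if ok {
            up += 1;
        }
    }
    up
}
```

A return value of 3 from `check_services(Duration::from_millis(500))` means the load balancer, master and worker ports are all accepting connections.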

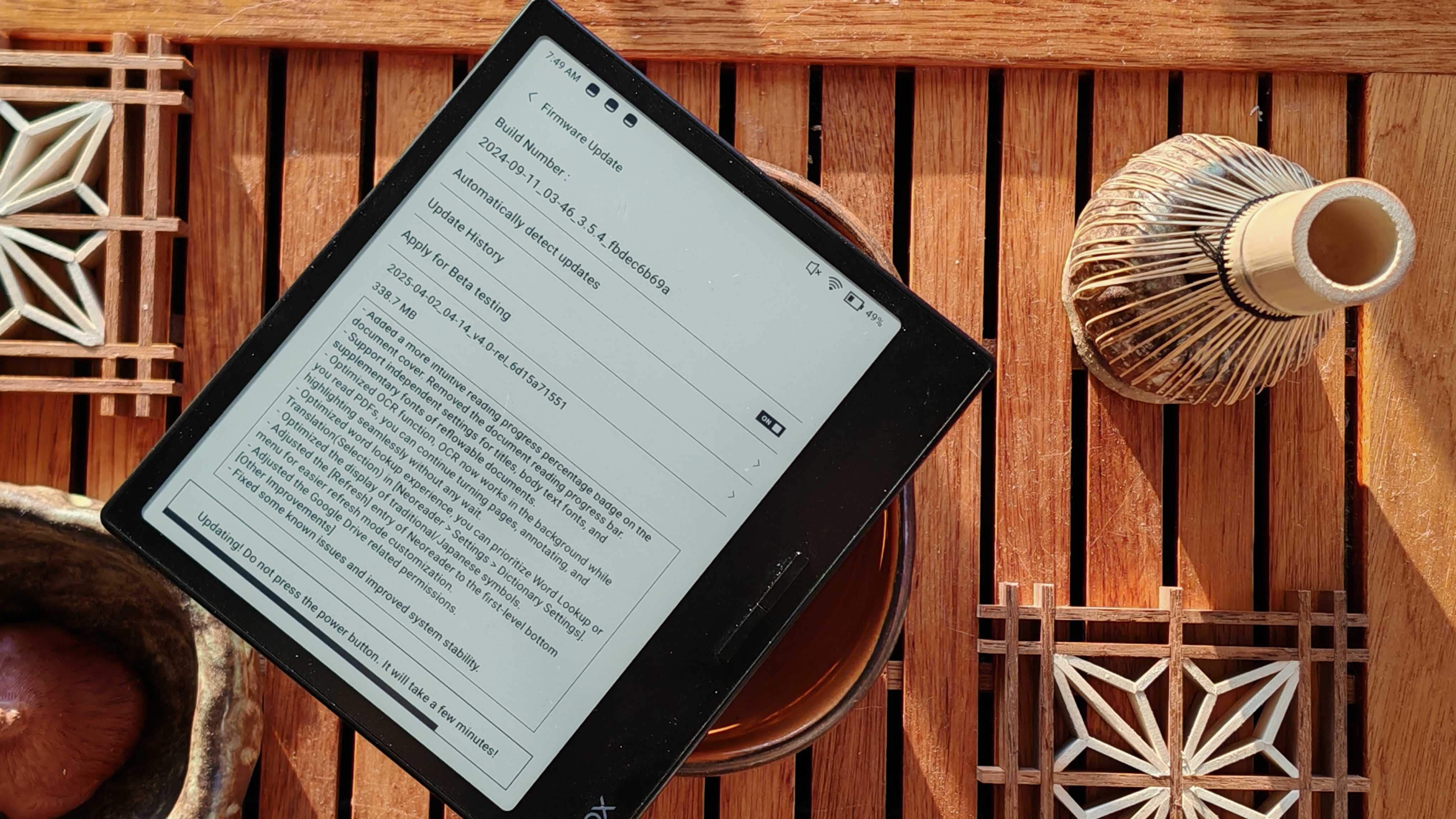

5. Load Testing (Optional)

Use our k6 script in load_tester/ to see how the system handles traffic:

cd load_tester

k6 run perf_load.js

You’ll get metrics on how fast the cluster factorizes numbers under various load scenarios.

That’s It!

You’ve now spun up a distributed microservice in Rust using this code from github.com/copyleftdev/prime_cluster. Feel free to:

- Modify the worker logic (it can do any CPU-heavy or GPU-accelerated task).
- Scale by adding more worker containers in the docker-compose.yml.
- Expand the master node to orchestrate even more complex tasks or parallel pipelines.

Have fun exploring this approach to building modular, scalable systems in Rust!
