Apache Iceberg: A Comprehensive Guide

Introduction

Apache Iceberg is transforming how organizations manage and query large-scale analytical datasets. As a high-performance, open-source table format, Iceberg brings warehouse-like capabilities to data lakes, enabling the creation of cost-effective and scalable lakehouse architectures. In this blog post, we’ll explore what Apache Iceberg is, what its key features and benefits are, how it compares to alternatives, and how it works.

What is a Table Format? How is it Different from a File Format?

A file format, such as Apache Parquet, defines how data is serialized and stored within individual files, optimizing for storage efficiency and read performance. In contrast, a table format like Apache Iceberg serves as a management layer on top of file formats. It defines how a logical table is mapped across multiple physical data files, providing database-like semantics for files stored in cost-effective cloud object storage.

Table formats track schema, partitioning, and file-level metadata to optimize data access and management. Unlike query engines, table formats do not execute queries but enable query engines to provide optimized, feature-rich access to the underlying data.

What is Apache Iceberg?

Apache Iceberg is an open-source table format designed for large analytical tables and datasets. It is widely used to implement lakehouse architectures, which combine the ACID transactions and SQL query capabilities of data warehouses with the scalability, flexibility, and cost-effectiveness of data lakes. Iceberg provides a metadata layer that enables warehouse-like functionality on top of data lake storage.

As an Apache project, Iceberg is 100% open source and independent of specific tools or data lake engines. Originally developed at Netflix and later donated to the Apache Software Foundation, it has been proven at scale in production by some of the world’s largest technology companies, including Netflix and Apple.

Key Features of Apache Iceberg

Apache Iceberg offers a robust set of features that make it a powerful choice for lakehouse architectures:

- Table Abstraction Layer: Iceberg allows users to interact with data using familiar SQL commands, simplifying querying and management in lakehouses.

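- ACID Transactions: Iceberg supports atomic, consistent, isolated, and durable (ACID) transactions, ensuring data consistency and reliability. Multiple users can read and write to the same table concurrently without corrupting data: readers always see a consistent table version, and conflicting writes are detected at commit time rather than silently overwriting each other.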

- Schema Evolution: Iceberg enables seamless schema changes, such as adding, dropping, or renaming columns, without requiring costly data migrations. This flexibility supports evolving data requirements.

- Query Performance Optimizations:

  - Data Compaction: Merges small files into larger ones, reducing the number of files scanned during queries.

  - Partition Pruning: Skips irrelevant partitions, minimizing the data processed during query execution.

- Time Travel: Iceberg supports querying historical table versions, enabling auditing, debugging, and result reproduction. Users can compare data changes over time and track data lineage.

- Support for Diverse Workloads: Iceberg is optimized for batch processing, real-time streaming, and machine learning, making lakehouses more adaptable and usable.

These features bring warehouse-like capabilities to lakehouses, enabling organizations to run complex queries, perform real-time updates, and support diverse analytical workloads.
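
To make the time travel idea concrete, here is a minimal sketch in plain Python. It is a toy model, not Iceberg's actual API: the `Snapshot` and `Table` classes and the `as_of` method are hypothetical names invented for illustration. The point it shows is that every commit adds a new immutable table version instead of overwriting the previous one, so a reader can ask for the table as it was at an earlier time.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass(frozen=True)
class Snapshot:
    """One immutable table version (hypothetical toy type, not Iceberg's)."""
    snapshot_id: int
    timestamp_ms: int
    data_files: tuple[str, ...]


@dataclass
class Table:
    """Toy table that keeps every snapshot instead of overwriting state."""
    snapshots: list[Snapshot] = field(default_factory=list)

    def commit(self, timestamp_ms: int, data_files: list[str]) -> Snapshot:
        # Each commit appends a new version; older versions stay readable.
        snap = Snapshot(len(self.snapshots) + 1, timestamp_ms, tuple(data_files))
        self.snapshots.append(snap)
        return snap

    def current(self) -> Snapshot:
        return self.snapshots[-1]

    def as_of(self, timestamp_ms: int) -> Snapshot:
        """Return the latest snapshot committed at or before timestamp_ms."""
        candidates = [s for s in self.snapshots if s.timestamp_ms <= timestamp_ms]
        if not candidates:
            raise ValueError("no snapshot exists at or before the requested time")
        return candidates[-1]


table = Table()
table.commit(1_000, ["a.parquet"])
table.commit(2_000, ["a.parquet", "b.parquet"])
table.commit(3_000, ["c.parquet"])  # e.g. a rewrite that replaced a and b

old = table.as_of(2_500)  # the table as it looked between commits 2 and 3
```

Here `old` refers to the second snapshot and still lists `a.parquet` and `b.parquet`, while `table.current()` lists only `c.parquet`. Rolling back, in this toy model, would simply mean committing an older snapshot's file list again.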

Apache Iceberg vs. Alternatives

While Apache Iceberg is a leading table format for lakehouses, it is not the only option. Alternatives include:

- Delta Lake: Open-sourced by Databricks, Delta Lake is another table format for lakehouses.

- Apache Hudi: Originally developed by Uber, Hudi is designed for large-scale data management.

- Proprietary Table Formats: Some query engines implement their own table formats.

However, Iceberg’s open-source nature, lack of vendor lock-in, and rapidly growing ecosystem have positioned it as a leading standard. It is supported by a wide range of tools, including Snowflake, Databricks, Apache Spark, Flink, Trino, Presto, and Hive.

How Iceberg Tables Work

Apache Iceberg tables are logical entities that reference columnar data stored in cloud object stores (e.g., Amazon S3) and associated metadata. The underlying data is stored in columnar formats like Parquet or ORC, organized according to a partitioning scheme defined in the table metadata.

Iceberg manages data at two levels:

- Central Metadata Store: Tracks the table schema, partitioning, and other properties.

- File-Level Metadata: Tracks every data file in the table, including file-level statistics and partition information.

This sophisticated metadata layer powers Iceberg’s advanced features, such as schema evolution, partition pruning, and time travel.
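
As a rough illustration of how file-level metadata can reduce the work a query engine does, the sketch below plans a scan using only metadata, without opening any data file. It is a simplified model under stated assumptions: the `DataFile` fields, the `plan_scan` function, and the file paths are hypothetical and do not mirror Iceberg's real metadata layout.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DataFile:
    """Hypothetical per-file metadata entry."""
    path: str
    partition_day: str  # partition value the file belongs to
    min_amount: int     # lower bound of the "amount" column in this file
    max_amount: int     # upper bound of the "amount" column in this file


def plan_scan(files: list[DataFile], day: str, min_wanted: int) -> list[str]:
    """Return the files that may contain rows with the given day and amount >= min_wanted."""
    selected = []
    for f in files:
        if f.partition_day != day:
            continue  # partition pruning: the whole partition is irrelevant
        if f.max_amount < min_wanted:
            continue  # statistics-based skipping: no row in this file can match
        selected.append(f.path)
    return selected


files = [
    DataFile("day=2024-01-01/f1.parquet", "2024-01-01", 5, 40),
    DataFile("day=2024-01-01/f2.parquet", "2024-01-01", 90, 300),
    DataFile("day=2024-01-02/f3.parquet", "2024-01-02", 10, 500),
]

# Query: rows from 2024-01-01 with amount >= 100
to_read = plan_scan(files, day="2024-01-01", min_wanted=100)
```

Only `f2.parquet` survives planning: `f3` is dropped by its partition value and `f1` by its maximum `amount`. The statistics can only rule files out. A file that passes the check may still contain no matching rows, so the engine still filters rows when it reads the file.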

Benefits of the Iceberg Table Format

Apache Iceberg offers numerous benefits that make it a compelling choice for lakehouse architectures:

- Open Source and Interoperability: Iceberg’s open standards ensure flexibility and compatibility with a wide range of tools, avoiding vendor lock-in.

- ACID Transactions: Using an optimistic concurrency model, Iceberg enables safe, concurrent reads and writes across multiple engines.

- Schema Evolution: Iceberg simplifies schema changes while maintaining compatibility with existing data and storing full schema history.

- Hidden Partitioning: Partition values are managed automatically based on table configuration, simplifying data layout and enabling partition evolution.

- Time Travel: Iceberg’s metadata store tracks table snapshots, allowing users to query historical versions or roll back changes for as long as those snapshots are retained.

- Query Optimization: Partition pruning and file-level statistics (e.g., min/max per column) reduce the data scanned per query, improving performance.
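
The optimistic concurrency model mentioned in the list above can be sketched as a compare-and-swap on a single "current version" pointer. This is a toy, single-process illustration, not how any particular Iceberg catalog is implemented: `Catalog`, `swap`, `append_with_retry`, and `CommitConflict` are hypothetical names. The idea is that a writer prepares its change against the version it read, and the commit succeeds only if that version is still current.

```python
from __future__ import annotations


class CommitConflict(Exception):
    """Raised when the table changed after the writer read it."""


class Catalog:
    """Toy catalog holding one pointer to the current table version."""

    def __init__(self) -> None:
        self.version = 0
        self.files: tuple[str, ...] = ()

    def swap(self, expected_version: int, new_files: tuple[str, ...]) -> int:
        # Compare-and-swap: succeed only if nobody committed in between.
        if self.version != expected_version:
            raise CommitConflict(
                f"expected v{expected_version}, found v{self.version}"
            )
        self.version += 1
        self.files = new_files
        return self.version


def append_with_retry(catalog: Catalog, new_file: str, max_attempts: int = 3) -> int:
    """Append a file, re-reading the latest state after each conflict."""
    for _ in range(max_attempts):
        base_version, base_files = catalog.version, catalog.files
        try:
            return catalog.swap(base_version, base_files + (new_file,))
        except CommitConflict:
            continue  # someone else committed first; rebuild on fresh state
    raise CommitConflict("gave up after repeated conflicts")


catalog = Catalog()
stale_version = catalog.version          # writer B reads the table at v0
append_with_retry(catalog, "a.parquet")  # writer A commits first, table is now v1

try:
    catalog.swap(stale_version, ("b.parquet",))  # writer B commits on a stale base
    conflict_detected = False
except CommitConflict:
    conflict_detected = True

final_version = append_with_retry(catalog, "b.parquet")  # B retries on fresh state
```

Writer B's first attempt is rejected instead of silently dropping `a.parquet`, and its retry produces a version containing both files. In a real deployment the atomic swap has to be provided by the catalog or storage layer; the in-memory check here only stands in for that guarantee.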

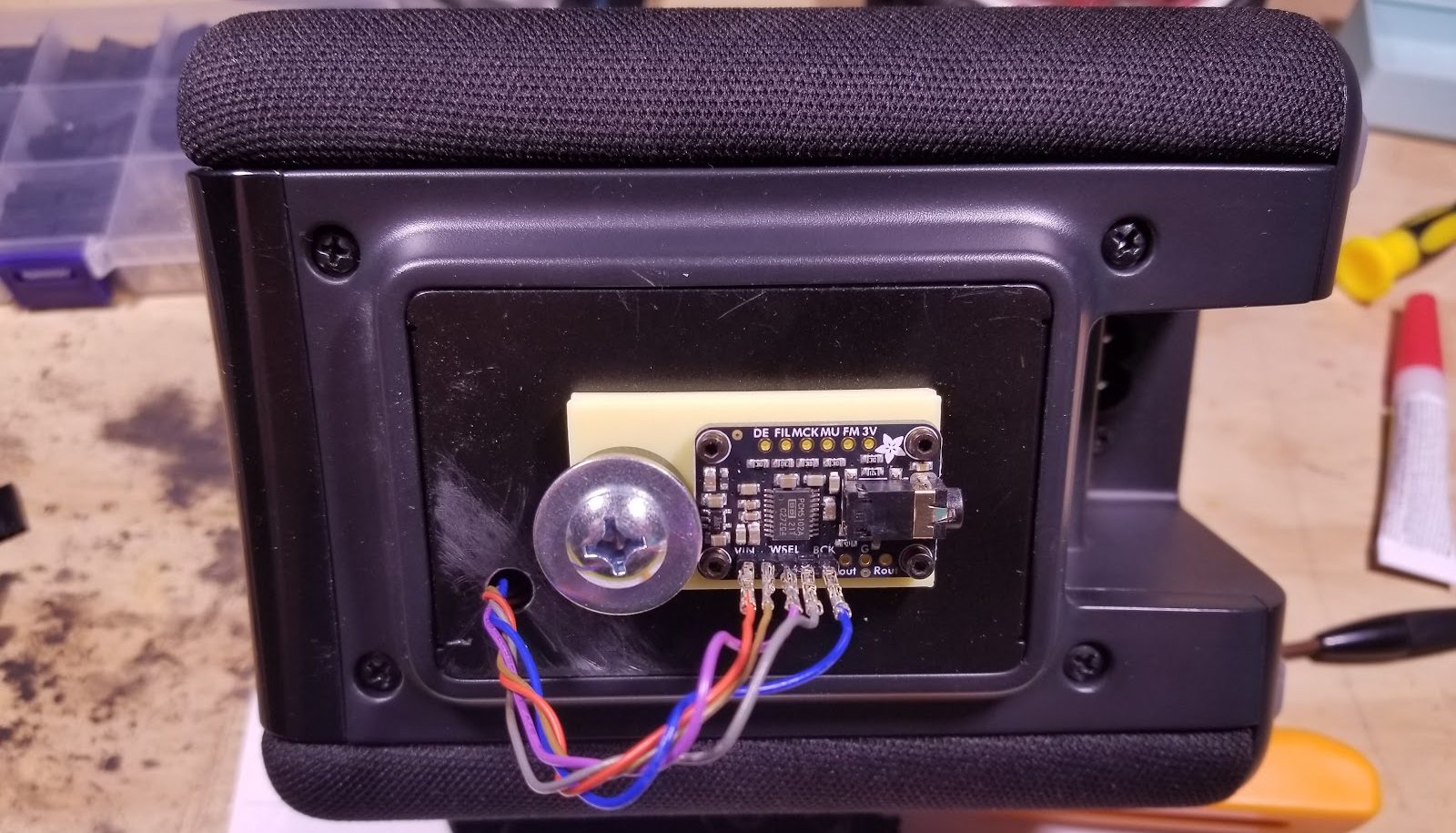

Building and Managing Iceberg Lakehouses

Apache Iceberg provides a powerful foundation for lakehouse architectures, but managing and optimizing Iceberg tables at scale can be complex. Organizations typically need to handle tasks such as data ingestion, schema updates, partitioning, and compaction. Tools and platforms that integrate with Iceberg can automate these processes, allowing data teams to focus on deriving insights rather than managing infrastructure.
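
To give a feel for what a compaction task involves, the sketch below plans which small files to merge. It is a simple greedy grouping written for illustration, assuming file sizes in megabytes and a hypothetical `plan_compaction` helper; it is not the strategy any specific Iceberg tool uses.

```python
from __future__ import annotations


def plan_compaction(file_sizes_mb: dict[str, int], target_mb: int) -> list[list[str]]:
    """Greedily group small files so each group totals at most target_mb."""
    groups: list[list[str]] = []
    current: list[str] = []
    current_size = 0

    # Visit files from smallest to largest so small files are packed together.
    for name, size in sorted(file_sizes_mb.items(), key=lambda kv: kv[1]):
        if size >= target_mb:
            continue  # already large enough, leave it alone
        if current and current_size + size > target_mb:
            groups.append(current)
            current, current_size = [], 0
        current.append(name)
        current_size += size

    if current:
        groups.append(current)

    # Rewriting a single file on its own gains nothing, so drop such groups.
    return [g for g in groups if len(g) > 1]


sizes = {"f1": 10, "f2": 20, "f3": 30, "f4": 60, "f5": 200}
plan = plan_compaction(sizes, target_mb=64)
```

With a 64 MB target, the plan merges `f1`, `f2`, and `f3` into one output file and leaves `f4` and `f5` untouched. A real compaction job would then rewrite each group and commit the result as a new table version, which is why it is usually scheduled rather than run on every write.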

Conclusion

Apache Iceberg is a game-changer for organizations building scalable, flexible, and cost-effective lakehouse architectures. With its support for ACID transactions, schema evolution, time travel, and query optimizations, Iceberg brings warehouse-like capabilities to data lakes. Its open-source nature and broad ecosystem make it a versatile choice for diverse workloads, including batch processing, real-time streaming, and machine learning. By leveraging Iceberg, organizations can unlock the full potential of their data and build robust, future-proof data architectures.
