Analysing Patterns in an SMS Spam Dataset Using Data Mining Techniques


Introduction

Spam messages are a persistent problem in modern communication, cluttering inboxes with unwanted advertisements, phishing attempts, and fraudulent schemes. With the rise of SMS-based scams, automated spam detection has become a crucial area in text classification. In this blog, we apply data mining techniques to analyze and predict spam patterns using the SMS Spam Collection Dataset.

We employ classification to predict whether a message is spam or ham (legitimate) and clustering to explore natural patterns in the dataset. We use Logistic Regression as our primary classification model and K-Means Clustering to identify underlying structures in the data.

Dataset Overview

The dataset, sourced from the UCI Machine Learning Repository, contains 5,574 SMS messages, each labeled as either spam or ham.

Attributes

- v1: Label indicating whether the message is spam or ham.

- v2: The text content of the message.
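The snippets later in this post refer to df['label'] and df['message'] rather than v1 and v2, so the columns are presumably renamed after loading. A minimal sketch of that step, assuming the data comes as a CSV with v1 and v2 header columns; the load_sms helper, the latin-1 encoding default, and the in-memory sample are illustrative assumptions, not part of the original code:

```python
import io
import pandas as pd

def load_sms(source, encoding="latin-1"):
    """Load the SMS data and rename v1/v2 to label/message.

    `source` may be a file path or a file-like object. The encoding
    default is an assumption; adjust it to match your copy of the file.
    """
    raw = pd.read_csv(source, encoding=encoding)
    # Keep only the two documented columns and give them descriptive names.
    df = raw[["v1", "v2"]].rename(columns={"v1": "label", "v2": "message"})
    # Drop rows with a missing label or message, then reset the index.
    return df.dropna(subset=["label", "message"]).reset_index(drop=True)

# Tiny in-memory stand-in for the real file (made-up messages).
sample_csv = io.StringIO(
    "v1,v2\n"
    "ham,See you at lunch\n"
    "spam,WIN a FREE prize now\n"
)
df = load_sms(sample_csv)
print(df)
```

With the real file, the same helper would be called with a path instead of the in-memory buffer.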

Data Preprocessing

Since SMS messages contain various formats, punctuation, and case variations, preprocessing is essential. The key steps include:

- Lowercasing text to maintain consistency.

- Removing punctuation and special characters to clean the text.

- Tokenization (splitting sentences into words).

- Removing stop words (words like "is", "the", etc., that don't add meaning).

- Stemming using PorterStemmer to reduce words to their root forms (e.g., "running" → "run").

- TF-IDF Transformation to convert text into numerical representations.

import pandas as pd

import re

import nltk

from nltk.corpus import stopwords

from nltk.stem import PorterStemmer

from sklearn.feature_extraction.text import TfidfVectorizer

nltk.download('stopwords')

stop_words = set(stopwords.words('english'))

stemmer = PorterStemmer()

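def preprocess_text(text):
    # Lowercase so that "Free" and "free" are treated as the same token
    text = text.lower()
    # Replace every non-word character (punctuation, symbols) with a space
    text = re.sub(r'\W', ' ', text)
    # Tokenize on whitespace
    words = text.split()
    # Drop stop words, then stem what remains
    words = [stemmer.stem(word) for word in words if word not in stop_words]
    return ' '.join(words)

# Assumes df has already been loaded with a 'message' column
df['processed_message'] = df['message'].apply(preprocess_text)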

Exploratory Data Analysis (EDA)

Before training our models, we explore the dataset to understand message distributions and characteristics.

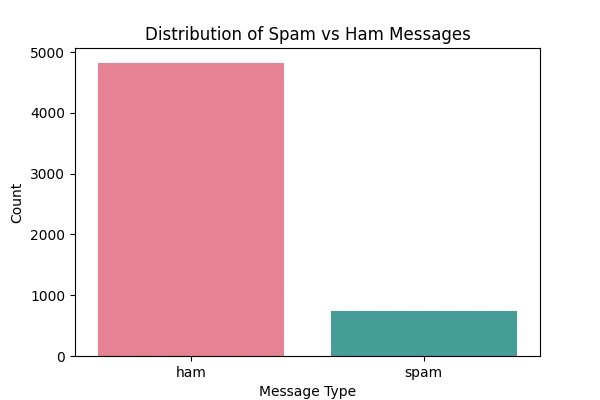

1. Spam vs Ham Distribution

Understanding class balance helps in selecting appropriate modeling techniques.

import matplotlib.pyplot as plt

import seaborn as sns

label_counts = df['label'].value_counts()

plt.figure(figsize=(6, 4))

sns.barplot(x=label_counts.index, y=label_counts.values, palette='husl')

plt.title('Distribution of Spam vs Ham Messages')

plt.xlabel('Message Type')

plt.ylabel('Count')

plt.show()

We observe that spam messages are fewer than ham messages, indicating a class imbalance that must be considered when training models.

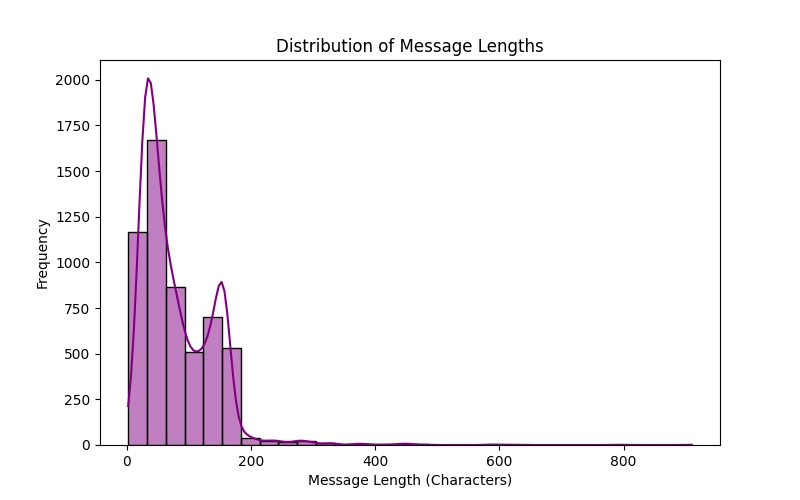
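To make the effect of the imbalance concrete, it helps to compute the accuracy of a trivial model that always predicts the majority class, along with class weights that are inversely proportional to class frequency (the same n_samples / (n_classes * class_count) rule that scikit-learn applies for class_weight="balanced"). A minimal sketch on a made-up label list; the function names are hypothetical:

```python
from collections import Counter

def majority_baseline(labels):
    """Accuracy of always predicting the most common class."""
    counts = Counter(labels)
    return max(counts.values()) / len(labels)

def balanced_class_weights(labels):
    """Weights inversely proportional to class frequency:
    n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}

# Made-up label list with an 80/20 split, for illustration only.
labels = ["ham"] * 8 + ["spam"] * 2
print(majority_baseline(labels))
print(balanced_class_weights(labels))
```

Any reported accuracy is best read against this baseline: a classifier has to beat the share of the majority class before it demonstrates that it has learned anything about spam.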

2. Message Length Analysis

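Message length is a simple feature that may help separate the classes: spam messages are often either very short (promotional) or very long (attempting to seem legitimate). Note that the histogram in this section pools both classes, so it shows the overall length distribution rather than a per-class comparison.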

df['message_length'] = df['message'].apply(len)

plt.figure(figsize=(8, 5))

sns.histplot(df['message_length'], bins=30, kde=True, color='purple')

plt.title('Distribution of Message Lengths')

plt.xlabel('Message Length (Characters)')

plt.ylabel('Frequency')

plt.show()

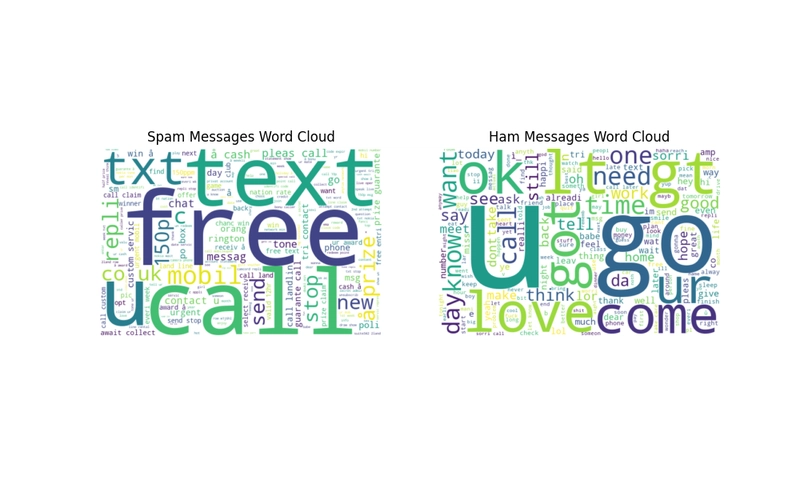
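To compare lengths between the two classes directly, the same message_length column can be summarised per label. A small sketch on a made-up DataFrame (df_demo and its contents are illustrative assumptions, not rows from the dataset):

```python
import pandas as pd

# Made-up messages standing in for the real DataFrame.
df_demo = pd.DataFrame({
    "label": ["ham", "ham", "spam", "spam"],
    "message": ["ok", "see you soon", "WIN a FREE prize now!!!", "Call now"],
})
df_demo["message_length"] = df_demo["message"].str.len()

# Summary statistics of message length for each class.
length_summary = (
    df_demo.groupby("label")["message_length"]
    .agg(["count", "mean", "median", "max"])
)
print(length_summary)
```

The same groupby applied to the real df would show whether the length pattern described above actually holds for spam in this dataset.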

3. Word Cloud Analysis

A word cloud helps visualize the most frequently used words in spam vs ham messages.

from wordcloud import WordCloud

spam_words = ' '.join(df[df['label'] == 'spam']['processed_message'])

ham_words = ' '.join(df[df['label'] == 'ham']['processed_message'])

spam_wordcloud = WordCloud(width=600, height=400, background_color='white').generate(spam_words)

ham_wordcloud = WordCloud(width=600, height=400, background_color='white').generate(ham_words)

plt.figure(figsize=(10, 6))

plt.subplot(1, 2, 1)

plt.imshow(spam_wordcloud, interpolation='bilinear')

plt.title('Spam Messages Word Cloud')

plt.axis('off')

plt.subplot(1, 2, 2)

plt.imshow(ham_wordcloud, interpolation='bilinear')

plt.title('Ham Messages Word Cloud')

plt.axis('off')

plt.show()

We observe that spam messages frequently contain words like "free", "win", "call", and "urgent", while ham messages contain more conversational terms.
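A word cloud gives a visual impression only; the underlying counts can be checked with a simple frequency table. A minimal sketch using collections.Counter on made-up preprocessed messages (top_words is a hypothetical helper):

```python
from collections import Counter

def top_words(messages, n=3):
    """Return the n most common whitespace-separated tokens."""
    counts = Counter(word for msg in messages for word in msg.split())
    return counts.most_common(n)

# Made-up preprocessed messages, for illustration only.
spam_msgs = ["free prize call", "win free entri", "call free"]
ham_msgs = ["see lunch", "call later", "see tonight"]

print(top_words(spam_msgs))
print(top_words(ham_msgs, n=1))
```

On the real data, the inputs would be the processed_message values for each label, which makes it easy to see whether a word such as "call" is really specific to spam or simply common everywhere.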

Pattern Discovery

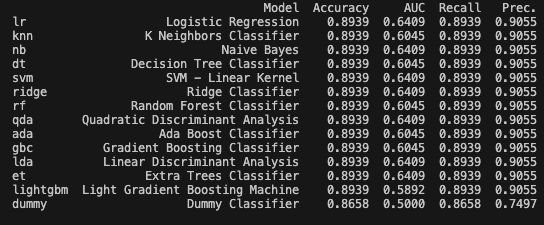

1. Classification Using Logistic Regression

Using PyCaret, we compare different classification models to identify the best one.

from pycaret.classification import *

df_model = pd.DataFrame({'message': df['processed_message'], 'label': df['label']})

clf = setup(data=df_model, target='label', train_size=0.8, session_id=123)

best_model = compare_models()

print(best_model)

Results:

The Logistic Regression model performed best, achieving:

- Accuracy: 89%

- Precision: 90%

- Recall: 89%

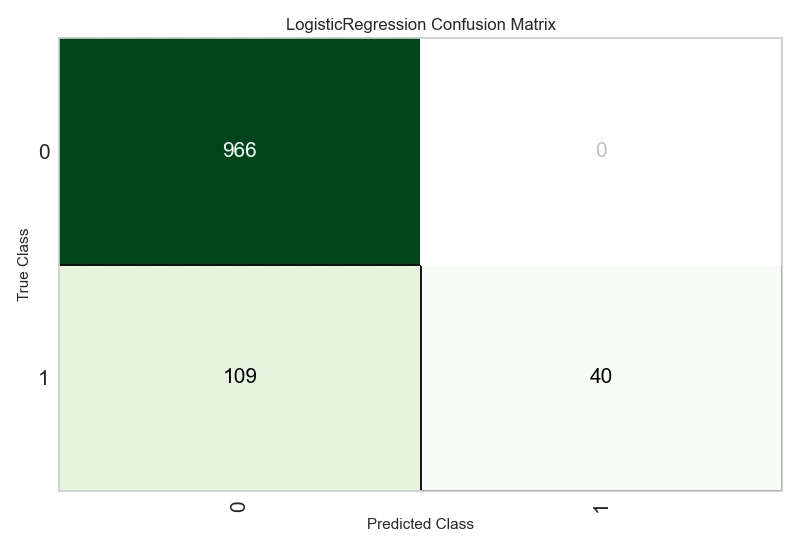
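PyCaret hides how the text is turned into features. For comparison, here is a minimal scikit-learn sketch that chains TF-IDF and Logistic Regression explicitly. It is not the pipeline that produced the numbers above: the training messages are made up, and class_weight="balanced" is an assumption added here as one way to account for class imbalance.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Made-up training messages, for illustration only.
messages = [
    "free prize call now", "win free entry today",
    "urgent claim your prize", "free cash win now",
    "see you at lunch", "are you coming home tonight",
    "thanks for the lift", "meeting moved to monday",
]
labels = ["spam"] * 4 + ["ham"] * 4

# TF-IDF features feed directly into the classifier.
model = make_pipeline(
    TfidfVectorizer(),
    LogisticRegression(class_weight="balanced", max_iter=1000),
)
model.fit(messages, labels)

# Column index of the "spam" class in the predict_proba output.
spam_idx = list(model.classes_).index("spam")
p_spam_like = model.predict_proba(["free prize win"])[0][spam_idx]
p_ham_like = model.predict_proba(["see you at lunch tonight"])[0][spam_idx]
print(round(p_spam_like, 3), round(p_ham_like, 3))
```

Keeping the vectorizer inside the pipeline means it is fitted only on the training data whenever the pipeline is cross-validated or refitted.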

2. Confusion Matrix Analysis

from sklearn.metrics import confusion_matrix, ConfusionMatrixDisplay

# X_test and y_test are assumed to hold the transformed hold-out features and labels

y_pred = best_model.predict(X_test)

cm = confusion_matrix(y_test, y_pred)

disp = ConfusionMatrixDisplay(confusion_matrix=cm)

disp.plot()

plt.show()

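Although the headline metrics above look balanced, the confusion matrix tells a different story for the spam class specifically: precision is high but recall is low. In other words, the model is usually right when it predicts spam, but it misses many actual spam messages (a high false negative rate). With far fewer spam than ham messages, aggregate scores can hide this gap.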

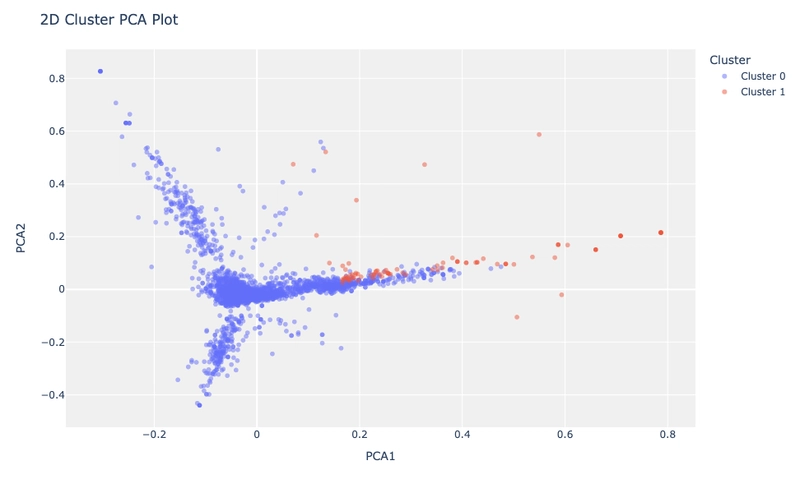
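Precision and recall for the spam class follow directly from the four cells of a binary confusion matrix, which scikit-learn lays out as [[TN, FP], [FN, TP]]. The sketch below works through the arithmetic with hypothetical counts chosen to show the "high precision, low recall" pattern; they are not the results from this post.

```python
def spam_metrics(tn, fp, fn, tp):
    """Precision, recall and F1 for the positive (spam) class."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return precision, recall, f1

# Hypothetical counts, NOT the results reported in this post:
# 90 ham correct, 0 ham flagged as spam, 6 spam missed, 4 spam caught.
tn, fp, fn, tp = 90, 0, 6, 4
precision, recall, f1 = spam_metrics(tn, fp, fn, tp)
accuracy = (tn + tp) / (tn + fp + fn + tp)
print(accuracy, precision, recall, round(f1, 3))
```

In this made-up case accuracy is 94% even though more than half of the spam is missed, which is why per-class recall is worth reporting alongside accuracy on imbalanced data.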

3. Clustering Using K-Means

Even though we already have labels, clustering can reveal natural groupings in the dataset.

from pycaret.clustering import *

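# Exclude the known label so the clusters are formed from the message content only

cluster_setup = setup(df_model, ignore_features=['label'], session_id=123)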

kmeans = create_model('kmeans', num_clusters=2)

assignments = assign_model(kmeans)

plot_model(kmeans, plot='elbow')

Findings:

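- With the number of clusters fixed at two, K-Means splits the messages into two groups, which suggests that spam messages have distinct patterns.

- In this run, Cluster 0 corresponds to ham and Cluster 1 to spam. K-Means cluster indices are arbitrary, so this mapping should be checked against the known labels rather than assumed.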
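One way to check how well the clusters line up with the known labels is to cross-tabulate them and compute cluster purity, the fraction of messages that fall into their cluster's majority label. A minimal sketch with made-up assignments (both helper functions are hypothetical):

```python
from collections import Counter, defaultdict

def cluster_label_table(clusters, labels):
    """Count how many messages of each label fall into each cluster."""
    table = defaultdict(Counter)
    for cluster, label in zip(clusters, labels):
        table[cluster][label] += 1
    return {c: dict(counts) for c, counts in table.items()}

def purity(clusters, labels):
    """Fraction of messages that belong to their cluster's majority label."""
    table = cluster_label_table(clusters, labels)
    majority_total = sum(max(counts.values()) for counts in table.values())
    return majority_total / len(labels)

# Made-up assignments, for illustration only.
clusters = [0, 0, 0, 0, 1, 1, 0, 1]
labels = ["ham", "ham", "ham", "ham", "spam", "spam", "spam", "ham"]
print(cluster_label_table(clusters, labels))
print(purity(clusters, labels))
```

With real output, the clusters would come from the cluster column that assign_model adds and the labels from df['label']. Purity should be read against the majority-class share, because a clustering that puts nearly everything in one cluster already scores about that high.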

Conclusion

- Logistic Regression achieved 89% accuracy, performing well overall but struggling with recall on the spam class.

- Spam messages tend to have more promotional and urgent language.

- Clustering suggests that spam messages form a distinct group, which points to the potential of unsupervised learning in spam detection.

Future Direction:

- Improve recall by using ensemble methods like Random Forest or XGBoost.

- Use deep learning (LSTMs, Transformers) for better text representation.

Final Thoughts

Spam detection is an evolving challenge as scammers adapt their techniques. Data mining and machine learning provide powerful tools to analyze and predict spam patterns, leading to better spam filters and improved communication security.
