AI Classification Models: Performance Evaluation Using a Confusion Matrix

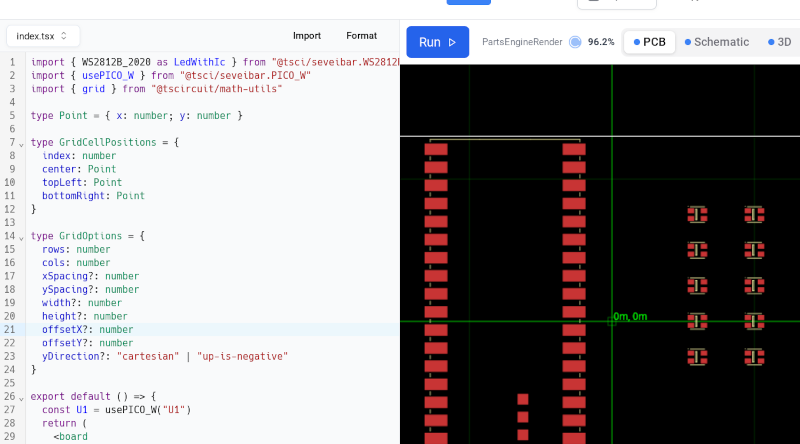

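A confusion matrix is a table used to evaluate the performance of a classification model by cross-tabulating the actual (true) classes of a set of test data against the classes the model predicted. It helps in visualizing and analyzing how well the model is performing, and it works for multi-class problems as well as binary ones. The confusion matrix provides a detailed breakdown of correct and incorrect predictions, making it easier to understand which types of errors the model makes. For a binary problem, its four cells are true positives (TP: positives predicted as positive), true negatives (TN: negatives predicted as negative), false positives (FP: negatives predicted as positive), and false negatives (FN: positives predicted as negative). The metrics below are built from these counts.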
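As a rough illustration, the hypothetical `confusion_matrix` helper below builds the table from two label lists using only the Python standard library. It assumes rows are actual classes and columns are predicted classes (the same orientation scikit-learn uses); the example labels are made up.

```python
from collections import Counter


def confusion_matrix(y_true, y_pred, labels):
    """Rows = actual class, columns = predicted class, both ordered by `labels`."""
    # Count how often each (actual, predicted) pair occurs.
    counts = Counter(zip(y_true, y_pred))
    # Counter returns 0 for pairs that never occur.
    return [[counts[(actual, predicted)] for predicted in labels] for actual in labels]


y_true = ["cat", "dog", "dog", "bird", "cat", "dog"]
y_pred = ["cat", "dog", "cat", "bird", "cat", "bird"]
labels = ["cat", "dog", "bird"]

for label, row in zip(labels, confusion_matrix(y_true, y_pred, labels)):
    print(label, row)
# cat [2, 0, 0]
# dog [1, 1, 1]
# bird [0, 0, 1]
```

The diagonal holds the correct predictions; the `dog` row, for example, shows one dog classified correctly, one mistaken for a cat, and one mistaken for a bird.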

- Accuracy: The proportion of correct predictions (both true positives and true negatives) out of all predictions.

Accuracy = (TP + TN) / (TP + TN + FP + FN)
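A minimal sketch of the accuracy formula (the function name is illustrative), run on a made-up imbalanced example: when 990 of 1,000 samples are negative, a model that always predicts the negative class still reaches 0.99 accuracy while finding no positives at all, which is why accuracy alone can mislead.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of all predictions that are correct: (TP + TN) / (TP + TN + FP + FN)."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("no predictions to evaluate")
    return (tp + tn) / total


# Hypothetical imbalanced test set: 990 negatives, 10 positives,
# and a model that predicts "negative" for everything.
print(accuracy(tp=0, tn=990, fp=0, fn=10))  # 0.99
```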

- Precision: The proportion of true positive predictions out of all positive predictions made by the model.

Precision (Positive Predictive Value) = TP / (TP + FP)
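A short sketch of the precision formula; the function name is illustrative. The parentheses matter: the whole sum TP + FP is the denominator. Returning 0.0 when the model makes no positive predictions is a convention assumed here, not a universal rule.

```python
def precision(tp, fp):
    """Share of positive predictions that are correct: TP / (TP + FP)."""
    predicted_positive = tp + fp
    # Assumed convention: 0.0 when the model predicts no positives at all.
    return tp / predicted_positive if predicted_positive else 0.0


# Hypothetical: 50 positive predictions, 40 of them correct.
print(precision(tp=40, fp=10))  # 0.8
```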

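- Recall (Sensitivity, True Positive Rate): The proportion of actual positives correctly identified by the model. In other words, of all instances that truly belong to the positive class, recall measures how many the model predicted as positive.

Higher recall means fewer missed positives. It matters most when false negatives are costly, although raising recall (for example, by lowering the decision threshold) usually lowers precision.

Recall = TP / (TP + FN)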

The term “recall” reflects the model’s ability to “recall” or recognize as many true positive instances as possible from the actual positive cases in the dataset. It focuses on minimizing the number of false negatives, ensuring that the model identifies the majority of relevant instances.
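A minimal sketch of the recall formula with made-up counts; the function name is illustrative, and returning 0.0 when the data contains no positives is an assumed convention.

```python
def recall(tp, fn):
    """Share of actual positives that the model found: TP / (TP + FN)."""
    actual_positive = tp + fn
    # Assumed convention: 0.0 when the data contains no positives.
    return tp / actual_positive if actual_positive else 0.0


# Hypothetical: 40 positives in the data, the model finds 30 and misses 10.
print(recall(tp=30, fn=10))  # 0.75
```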

- F1 Score: The harmonic mean of precision and recall, providing a single metric that balances both.

F1 Score = 2 * (Precision * Recall) / (Precision + Recall)
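The sketch below computes F1 from precision and recall to show what "balances both" means in practice; the input values are hypothetical, and returning 0.0 when both inputs are zero is an assumed convention.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # assumed convention when both are zero
    return 2 * precision * recall / (precision + recall)


# A model with perfect precision but very low recall (hypothetical values).
print(round(f1_score(1.0, 0.1), 3))  # 0.182
```

The arithmetic mean of 1.0 and 0.1 would be 0.55; the harmonic mean is pulled toward the weaker value, so F1 stays low unless both precision and recall are reasonably high.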

- Specificity (True Negative Rate): The proportion of actual negatives correctly identified by the model.

Specificity = TN / (TN + FP)
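A minimal sketch of specificity with made-up counts. The hypothetical `false_positive_rate` helper is included because FP / (FP + TN) equals 1 - specificity, the quantity plotted on the x-axis of a ROC curve.

```python
def specificity(tn, fp):
    """Share of actual negatives correctly identified: TN / (TN + FP)."""
    actual_negative = tn + fp
    if actual_negative == 0:
        raise ValueError("no actual negatives")
    return tn / actual_negative


def false_positive_rate(tn, fp):
    """FP / (FP + TN), i.e. 1 - specificity."""
    return 1.0 - specificity(tn, fp)


# Hypothetical: 100 actual negatives, 90 correctly rejected.
print(specificity(tn=90, fp=10))  # 0.9
```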

- ROC-AUC (Receiver Operating Characteristic, Area Under the Curve): The ROC curve plots the true positive rate (recall) against the false positive rate (FPR = FP / (FP + TN), which equals 1 - specificity) at various classification thresholds. The AUC is the probability that the model ranks a randomly chosen positive instance higher than a randomly chosen negative one; 0.5 corresponds to random guessing and 1.0 to a perfect ranking.

https://developers.google.com/machine-learning/crash-course/classification/roc-and-auc
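The ranking interpretation of AUC can be checked directly: over every positive/negative pair, count how often the positive example receives the higher score, with ties counting as half. This brute-force O(P × N) sketch is for illustration only (library implementations typically work from the sorted scores instead); labels are assumed to be 1 and 0, and the scores are made up.

```python
def roc_auc(y_true, scores):
    """Probability that a random positive scores higher than a random negative.

    Labels are assumed to be 1 (positive) and 0 (negative); ties count as half a win.
    """
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
print(roc_auc(y_true, scores))  # 0.75
```

Three of the four positive/negative pairs are ordered correctly (only 0.35 vs 0.4 is not), giving 0.75.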

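- PR-AUC (Precision-Recall Area Under the Curve): The Precision-Recall curve plots precision against recall at various threshold settings. The area under it summarizes how well the model maintains precision as recall increases. A random classifier's PR-AUC is roughly the fraction of positives in the data, which often makes this metric more informative than ROC-AUC on heavily imbalanced datasets.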
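One common way to summarize the PR curve is average precision, AP = Σ (R_k - R_{k-1}) × P_k: the precision at each threshold, weighted by the recall gained at that threshold. This is the step-wise estimator behind scikit-learn's `average_precision_score`; trapezoidal interpolation of the curve is an alternative that can give slightly different numbers. The plain-Python sketch below assumes labels 1 and 0 and uses made-up scores.

```python
def average_precision(y_true, scores):
    """AP = sum over distinct score thresholds of (R_k - R_{k-1}) * P_k."""
    total_pos = sum(y_true)
    if total_pos == 0:
        raise ValueError("need at least one positive example")
    # Highest scores first; each distinct score acts as one threshold.
    pairs = sorted(zip(scores, y_true), reverse=True)
    ap, tp, fp, prev_recall, i = 0.0, 0, 0, 0.0, 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Admit every sample tied at this score before evaluating the point.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        recall = tp / total_pos
        precision = tp / (tp + fp)
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


print(round(average_precision([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]), 4))  # 0.8333
```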

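- Logarithmic Loss (Log Loss): Log Loss measures the performance of a classification model whose output is a predicted probability between 0 and 1. It penalizes confident wrong predictions far more heavily than hesitant ones. For binary labels y and predicted positive-class probabilities p over N samples, Log Loss = -(1/N) * Σ [y * log(p) + (1 - y) * log(1 - p)]; lower is better.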

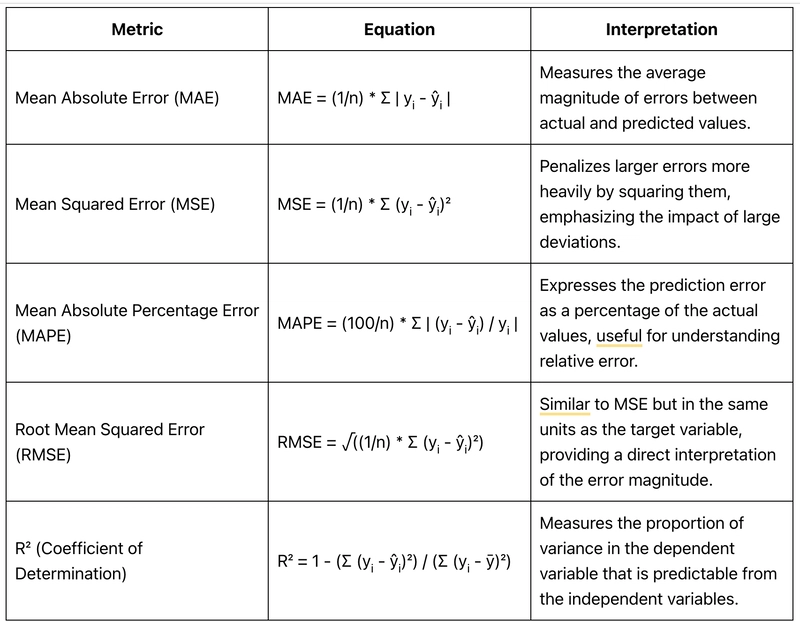
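A minimal sketch of binary log loss in plain Python. Probabilities are clipped to [eps, 1 - eps] to avoid log(0); the `eps` value here is an assumption, not a standard. The usage lines compare a confident wrong prediction, a hesitant wrong one, and a confident correct one for a single positive sample (hypothetical values).

```python
import math


def log_loss(y_true, probs, eps=1e-15):
    """Mean binary cross-entropy; probs are predicted P(class = 1)."""
    if not y_true or len(y_true) != len(probs):
        raise ValueError("inputs must be non-empty and the same length")
    total = 0.0
    for y, p in zip(y_true, probs):
        p = min(max(p, eps), 1.0 - eps)  # clip so log() never sees 0
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)


print(round(log_loss([1], [0.01]), 3))  # confidently wrong: 4.605
print(round(log_loss([1], [0.4]), 3))   # hesitantly wrong: 0.916
print(round(log_loss([1], [0.99]), 3))  # confidently right: 0.01
```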

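- Mean Absolute Error (MAE): MAE measures the average absolute difference between predicted and actual values. It is primarily a regression metric (for classifiers it can be applied to predicted probabilities), and because it is expressed in the same units as the target, the error magnitude is easy to interpret.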
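A minimal sketch of MAE; the function name and the example values are hypothetical. The absolute errors in the example are 0.5, 0.0 and 2.0, so MAE is 2.5 / 3.

```python
def mean_absolute_error(y_true, y_pred):
    """Average of |actual - predicted|, in the same units as the target."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and the same length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


print(round(mean_absolute_error([3.0, 5.0, 2.0], [2.5, 5.0, 4.0]), 4))  # 0.8333
```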

- Mean Squared Error (MSE): MSE measures the average squared difference between predicted and actual values. Because the differences are squared, it penalizes large errors more heavily than MAE. When computed on the predicted probabilities of a binary classifier, MSE is known as the Brier score.
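A minimal sketch of MSE on hypothetical values: the squared errors are 0.25, 0.0 and 4.0, so the single error of 2.0 contributes 4.0 of the 4.25 total. Squaring is what lets one large miss dominate the score.

```python
def mean_squared_error(y_true, y_pred):
    """Average of (actual - predicted) ** 2; squaring weights large errors more."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("inputs must be non-empty and the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


print(round(mean_squared_error([3.0, 5.0, 2.0], [2.5, 5.0, 4.0]), 4))  # 1.4167
```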