AI Automates MOOSE Simulations: Introducing the MooseAgent Framework

This is a Plain English Papers summary of a research paper called AI Automates MOOSE Simulations: Introducing the MooseAgent Framework.

Automating Complex Simulations with AI: Introduction to MooseAgent

The Finite Element Method (FEM) is a cornerstone of modern engineering and scientific computing, but it comes with significant challenges. Creating simulations requires extensive human effort and specialized knowledge across pre-processing, solver configuration, and post-processing stages. While the MOOSE (Multiphysics Object Oriented Simulation Environment) framework offers powerful capabilities for multi-physics simulations, its complexity creates a steep learning curve for many users.

To address these challenges, researchers have developed MooseAgent, an automated solution that combines Large Language Models (LLMs) with a multi-agent system. This framework translates natural language descriptions of simulation requirements into executable MOOSE input files. MooseAgent employs several key strategies: natural language understanding to interpret user requirements, task decomposition to break complex problems into manageable parts, multi-round iterative verification to ensure accuracy, and a specialized vector database to reduce model hallucinations.

Through experimental evaluation across heat transfer, mechanics, phase field, and multi-physics coupling problems, the researchers demonstrate MooseAgent's potential to automate the MOOSE simulation process, particularly for single-physics problems. This innovation represents a significant step toward lowering the barriers to entry for finite element simulation and points toward the future of intelligent simulation software.

The Evolution of AI in Simulation: Related Work

The integration of LLMs and multi-agent systems into scientific computation processes represents a growing trend in research. Several efforts in Computational Fluid Dynamics (CFD) have already demonstrated how multi-agent LLM systems can automate complex simulation workflows. However, similar approaches have been largely absent in finite element software platforms, especially when it comes to the challenges of multi-physics coupling modeling.

Understanding LLM-Based Multi-Agent Frameworks

Multi-agent frameworks based on LLMs can be categorized into four types based on their level of encapsulation and automation:

Foundational frameworks like LangChain and LangGraph require developers to manually code and manage agent interactions and workflows, offering high flexibility but demanding more development effort.

Higher-level frameworks such as CrewAI, AutoGen, and MetaGPT provide greater encapsulation, where users primarily define task goals and agent prompts while the framework handles inter-agent communication and collaboration.

GUI-based frameworks including Dify, Bisheng, and Coze achieve even higher abstraction through visual interfaces, allowing users to build workflows without coding through drag-and-drop configuration.

Self-optimizing frameworks treat multi-agent workflows as optimizable variables, automatically learning and finding optimal collaboration strategies based on feedback, similar to supervised learning approaches.

The researchers behind MooseAgent selected LangGraph as their framework of choice: "Given the current technological maturity and controllability, this research chooses to adopt a multi-agent framework based on LangGraph." This choice allowed for precise control over agent interactions, crucial for the complex requirements of finite element simulation. The development of such frameworks continues to evolve, as seen in related work on optimizing collaboration between LLM-based agents for finite element analysis.

AI Agents for Scientific Software

AI agent applications are making significant strides in scientific software domains. AutoFLUKA, for example, automates Monte Carlo simulation workflows using the LangChain Python framework, reducing manual intervention and errors throughout the process from input generation to visualization. In the CFD field, FoamPilot enhances FireFOAM's usability through Retrieval Augmented Generation (RAG) for code navigation and summarization, while also interpreting natural language requests to modify simulation setups. Similarly, MetaOpenFOAM combines Chain of Thought decomposition with iterative verification to make CFD simulation more accessible to non-experts, while OpenFOAMGPT handles complex tasks including zero-shot case setup and code translation.

These examples demonstrate how LLM agents can serve as mechanical designers and scientific assistants, enhancing software efficiency and accessibility by automating complex tasks that previously required specialized expertise. As these technologies mature, they promise to transform how researchers and engineers interact with scientific software.

Building MooseAgent: Architecture and Implementation

System Architecture: How MooseAgent Works

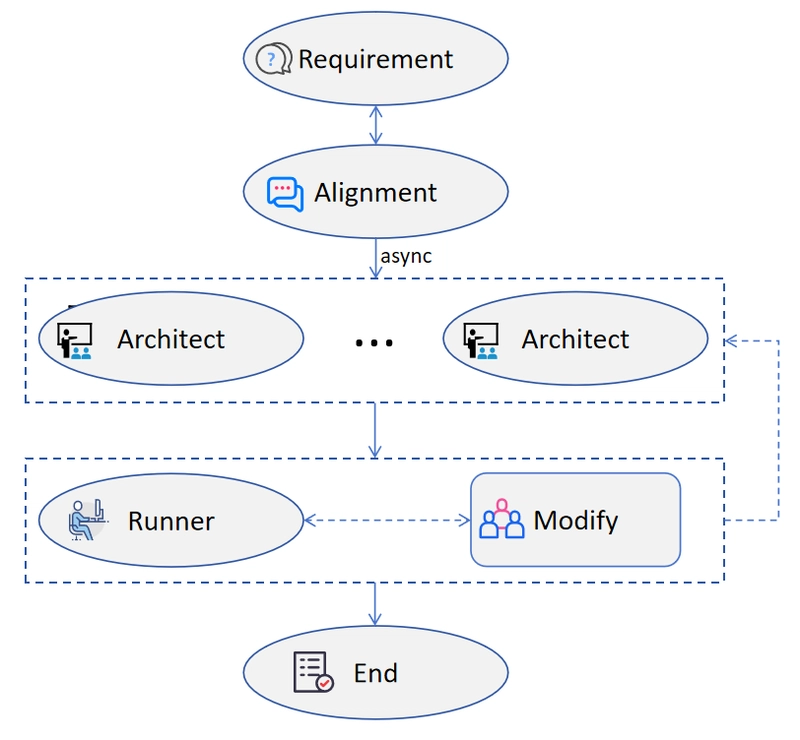

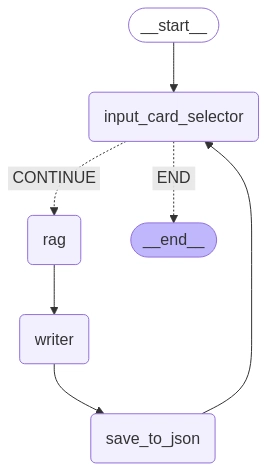

MooseAgent follows a structured workflow designed to transform natural language input into executable MOOSE simulations:

- The user describes their simulation requirements in natural language

- The LLM parses these requirements, clarifying any ambiguities through dialogue

- The system generates retrieval content based on the requirements and searches for relevant input cards in its vector knowledge base

- The "architect" agent uses these references to generate appropriate MOOSE input cards

- The system performs multiple rounds of error correction based on MOOSE's output error messages and rule-based syntax checks

- If the same error persists after multiple correction attempts, the system suggests alternative approaches

The process terminates either when the input cards execute successfully or when the maximum number of iterations is reached. This approach mirrors feedback-driven methods for enhancing LLMs in power system simulations, where iterative refinement based on execution feedback improves results.
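The generate, check, and correct cycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: `RunResult`, `LoopOutcome`, `unbalanced_blocks`, `correction_loop`, and the two `fake_*` stand-ins are hypothetical names, the rule-based check covers just one rule (every `[Block]` needs a closing `[]`), and an "iteration" is assumed to mean one check-and-execute attempt.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class RunResult:
    """Outcome of one attempt to execute an input card (hypothetical type)."""
    success: bool
    error: Optional[str] = None


@dataclass
class LoopOutcome:
    """Final card plus a record of what happened during the loop."""
    card: str
    success: bool
    iterations: int
    errors: List[str] = field(default_factory=list)


def unbalanced_blocks(card: str) -> Optional[str]:
    """Illustrative rule-based check: each [Block] must be closed by [] or [../]."""
    depth = 0
    for line in card.splitlines():
        text = line.strip()
        if text in ("[]", "[../]"):
            depth -= 1
            if depth < 0:
                return "closing [] without an open block"
        elif text.startswith("[") and text.endswith("]"):
            depth += 1
    return None if depth == 0 else "block opened but never closed"


def correction_loop(
    card: str,
    execute: Callable[[str], RunResult],
    fix: Callable[[str, str, bool], str],
    max_iterations: int = 3,
) -> LoopOutcome:
    """Check and run the card; on failure ask `fix` for a revised card."""
    errors: List[str] = []
    for attempt in range(1, max_iterations + 1):
        problem = unbalanced_blocks(card)
        if problem is None:
            result = execute(card)
            if result.success:
                return LoopOutcome(card, True, attempt, errors)
            problem = result.error or "unknown error"
        # A repeated error is the cue to try an alternative approach.
        repeated = problem in errors
        errors.append(problem)
        if attempt < max_iterations:
            card = fix(card, problem, repeated)
    return LoopOutcome(card, False, max_iterations, errors)


# Stand-ins for the MOOSE executable and the LLM-based fixer (assumptions).
def fake_execute(card: str) -> RunResult:
    if "[Executioner]" not in card:
        return RunResult(False, "missing [Executioner] block")
    return RunResult(True)


def fake_fix(card: str, problem: str, repeated: bool) -> str:
    if "Executioner" in problem:
        return card + "[Executioner]\n  type = Steady\n[]\n"
    return card + "[]\n"


draft = "[Mesh]\n  type = GeneratedMesh\n  dim = 1\n  nx = 10\n"
outcome = correction_loop(draft, fake_execute, fake_fix)
print(outcome.success, outcome.iterations, outcome.errors)
```

Passing `execute` and `fix` in as callables keeps the loop independent of how MOOSE is launched or which model proposes the correction, so either side can be swapped for a real implementation.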

Figure 1: Overall framework of MooseAgent, showing the workflow from user input to simulation execution.

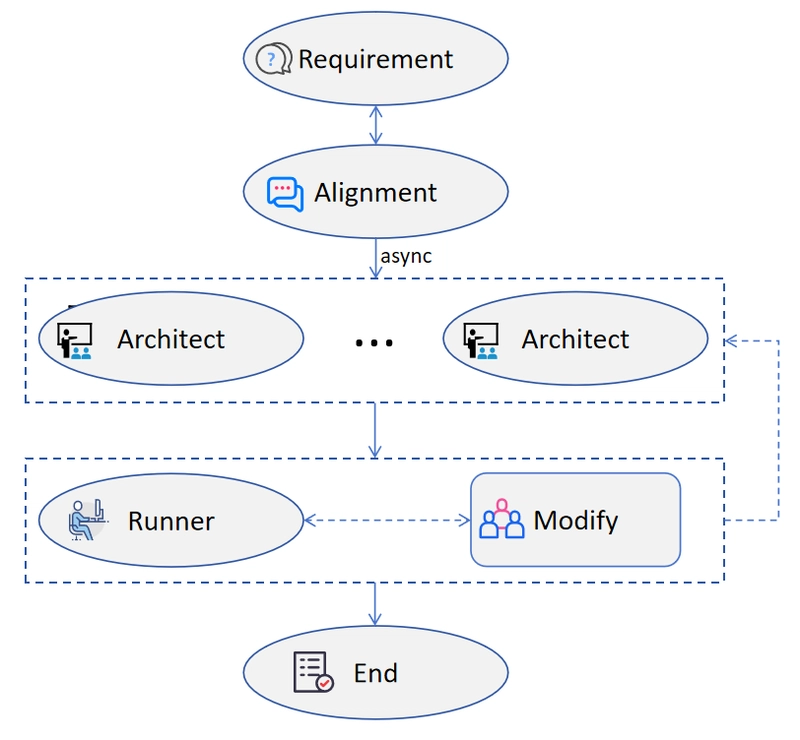

Knowledge Base: Reducing Hallucination Through Retrieval

To improve accuracy and reduce model hallucinations, MooseAgent builds and utilizes a comprehensive vector database with two primary components:

- Annotated input cards: The researchers extracted over 8,000 input cards from the MOOSE repository. However, most lacked annotations, making them difficult to interpret and retrieve effectively. To address this, the team developed an automatic annotation workflow:

  - Randomly select unannotated input cards

  - Analyze the MOOSE APPs used by these cards

  - Query the documentation for descriptions and usage information

  - Generate annotated cards using retrieval-augmented generation techniques

- MOOSE function documentation: While MOOSE can export function input parameter descriptions, these alone don't provide a complete understanding of each APP's purpose. The researchers developed a Python script to locate detailed descriptions of each APP from the repository.

Both components are stored in JSON format, capturing critical information like APP names, function descriptions, and input parameters. This structured knowledge base enables more precise retrieval and generation of simulation input files.
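To make the JSON storage concrete, here is one possible shape for the two kinds of records. The summary does not give the actual schema, so the class names (`AppDoc`, `AnnotatedCard`), the field names, the file name, and the description wording are all assumptions made for illustration.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Dict, List


@dataclass
class AppDoc:
    """One documentation record: APP name, description, input parameters."""
    app_name: str
    description: str
    parameters: Dict[str, str] = field(default_factory=dict)


@dataclass
class AnnotatedCard:
    """One annotated input card (field names are hypothetical)."""
    path: str
    annotation: str
    apps: List[str]
    content: str


doc = AppDoc(
    app_name="DirichletBC",
    description="Fixes a variable to a constant value on a boundary.",
    parameters={
        "variable": "Variable the condition applies to",
        "boundary": "Boundary names or IDs",
        "value": "Value imposed on the boundary",
    },
)

card = AnnotatedCard(
    path="heat_steady.i",  # hypothetical file name
    annotation="Steady-state heat conduction in a rod with fixed end temperatures.",
    apps=["GeneratedMesh", "DirichletBC", "Steady"],
    content="[Mesh]\n  type = GeneratedMesh\n  dim = 1\n[]\n",
)

# Serialize both records to JSON text and read them back.
doc_json = json.dumps(asdict(doc), indent=2)
card_json = json.dumps(asdict(card), indent=2)
doc_back = AppDoc(**json.loads(doc_json))
card_back = AnnotatedCard(**json.loads(card_json))
print(doc_json)
```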

Figure 2: Automatic annotation workflow showing the process of enriching raw input cards with relevant contextual information.
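The four annotation steps could be wired together roughly as follows. This sketch assumes that the APPs a card uses can be read from its `type = ...` lines and that the language model is available as a plain callable; `apps_used`, `annotate_card`, `annotate_sample`, and `echo_llm` are hypothetical names, and the documentation strings are placeholders.

```python
import random
import re
from typing import Callable, Dict, List

# Matches unquoted `type = Name` lines inside an input card.
TYPE_PATTERN = re.compile(r"^\s*type\s*=\s*(\w+)", re.MULTILINE)


def apps_used(card: str) -> List[str]:
    """Return the distinct `type = ...` values, in order of appearance."""
    seen: List[str] = []
    for name in TYPE_PATTERN.findall(card):
        if name not in seen:
            seen.append(name)
    return seen


def annotate_card(card: str, docs: Dict[str, str], llm: Callable[[str], str]) -> str:
    """Look up documentation for each APP and ask the model for an annotated card."""
    context = "\n".join(
        f"{app}: {docs.get(app, 'no documentation found')}" for app in apps_used(card)
    )
    prompt = (
        f"Documentation:\n{context}\n\n"
        f"Add explanatory comments to this input card:\n{card}"
    )
    return llm(prompt)


def annotate_sample(
    cards: Dict[str, str],
    docs: Dict[str, str],
    llm: Callable[[str], str],
    k: int,
    seed: int = 0,
) -> Dict[str, str]:
    """Randomly pick k unannotated cards and annotate each one."""
    rng = random.Random(seed)
    chosen = rng.sample(sorted(cards), k)
    return {name: annotate_card(cards[name], docs, llm) for name in chosen}


# Toy data and a stand-in model that just prepends a comment line.
cards = {
    "diffusion.i": (
        "[Mesh]\n  type = GeneratedMesh\n  dim = 1\n[]\n"
        "[Kernels]\n  [diff]\n    type = Diffusion\n    variable = u\n  []\n[]\n"
        "[Executioner]\n  type = Steady\n[]\n"
    ),
}
docs = {"Diffusion": "placeholder description", "Steady": "placeholder description"}


def echo_llm(prompt: str) -> str:
    return "# annotated\n" + prompt.split("input card:\n", 1)[1]


annotated = annotate_sample(cards, docs, echo_llm, k=1)
print(annotated["diffusion.i"])
```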

Putting MooseAgent to the Test: Experimental Evaluation

Testing Across Physics Domains: Experimental Design

The researchers implemented MooseAgent using the LangGraph framework to build the multi-agent collaborative workflow. They selected DeepSeek-R1 as the reasoning model for overall input card architecture, while other modules used DeepSeek-V3. To ensure consistent results, they set the temperature parameter to 0.01 across all models. For retrieval-augmented generation, they employed the BGE-M3 embedding model with a FAISS vector database, using similarity search to find relevant information. Each experiment allowed a maximum of 3 iterations for error correction.
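The similarity-search step can be illustrated without any external dependencies. The sketch below is a deliberately simplified stand-in: it swaps the BGE-M3 embeddings for word-count vectors and the FAISS index for a brute-force scan, so it shows the ranking idea only. The function names, corpus entries, and file names are made up for the example.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a learned embedding)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, corpus: Dict[str, str], k: int = 2) -> List[Tuple[str, float]]:
    """Score every document against the query and return the k best matches."""
    q = embed(query)
    scored = [(name, cosine(q, embed(text))) for name, text in corpus.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:k]


# Hypothetical annotations standing in for entries of the knowledge base.
corpus = {
    "heat_steady.i": "steady state heat conduction in a rod with fixed end temperatures",
    "elastic_plate.i": "linear elasticity of a rectangular plate under tensile stress",
    "phase_field.i": "phase field simulation of metal solidification",
}

hits = top_k("steady heat conduction in a metal rod", corpus, k=2)
for name, score in hits:
    print(f"{name}: {score:.3f}")
```

A real deployment would replace `embed` with calls to the embedding model and the linear scan with an index lookup; the ranking by similarity score stays the same.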

To validate MooseAgent's effectiveness, the team designed eight test cases spanning various physics domains:

- Steady-State Heat Conduction (HeatSteady): A metal rod with constant temperatures at both ends

- Transient Heat Conduction (HeatTran): A square domain with time-dependent temperature evolution

- Linear Elasticity (Elasticity): A rectangular plate under tensile stress

- Plastic Strain (Plasticity): A square plate exhibiting elastoplastic behavior

- Porous Media Flow (Porous): A cubic soil block with water flow

- Phase Change Heat Conduction (PhaseChange): A metal rod undergoing phase change

- Phase Field (PhaseField): Simulation of metal solidification

- Thermal-Mechanic coupling (ThermalMechanic): Combined thermal and mechanical analysis

These test cases represent a progression from simpler single-physics problems to more complex multi-physics coupling scenarios, allowing the researchers to evaluate MooseAgent's performance across varying levels of complexity.

Performance Analysis: Success Rates and Efficiency

The experimental results revealed varying levels of success across different physics domains:

| Case | Pass rate | Tokens | Productivity |
|---|---|---|---|
| HeatSteady | 1 | 24673 | 28 |
| HeatTran | 0.8 | 37695 | 24 |
| Elasticity | 1 | 40857 | 16 |
| Plasticity | 0.6 | 15874 | 8 |
| PhaseChange | 0.6 | 23742 | 10 |
| Porous | 0.6 | 79176 | 39 |
| PhaseField | 1 | 86696 | 40 |
| ThermalMechanic | 0.4 | 77020 | 22 |

Table 1: Performance of MooseAgent across different test cases, showing success rates, token usage, and productivity metrics.

MooseAgent achieved a 100% success rate for the Steady-State Heat Conduction, Linear Elasticity, and Phase Field cases, demonstrating excellent performance for mature physical problems with many reference cases. Transient Heat Conduction had an 80% success rate, indicating room for improvement in handling time-dependent problems. Plastic Strain, Porous Media Flow, and Phase Change Heat Conduction all achieved a 60% success rate, showing moderate capability in handling nonlinear material behavior, fluid flow, and phase changes.

The Thermal-Mechanic coupling case had the lowest success rate at 40%, primarily because it involves the strong coupling of two sub-problems (thermal analysis and mechanical analysis), requiring more complex model configuration and parameter settings. This highlights the challenge of multi-physics coupling for automated simulation frameworks.

Token usage varied significantly between cases, with Phase Field consuming the most tokens (86,696) due to longer simulation times and complex parameter configurations. The productivity metric (tokens consumed per code character generated) also varied. Read as defined, a lower value means fewer tokens were spent per character of generated input card: Plastic Strain had the lowest value at 8 and also the lowest total token usage (15,874), while Porous Media Flow and Phase Field had the highest values at 39 and 40 respectively, so each character of their input cards cost the most tokens.
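A few aggregate figures can be derived directly from Table 1. The short script below computes the mean pass rate, the total token count, and a rough character count per case, taking the productivity definition given above (tokens per generated character) at face value. The character counts are estimates only, because the productivity values in the table are rounded.

```python
# (pass rate, tokens, productivity) per case, copied from Table 1.
results = {
    "HeatSteady": (1.0, 24673, 28),
    "HeatTran": (0.8, 37695, 24),
    "Elasticity": (1.0, 40857, 16),
    "Plasticity": (0.6, 15874, 8),
    "PhaseChange": (0.6, 23742, 10),
    "Porous": (0.6, 79176, 39),
    "PhaseField": (1.0, 86696, 40),
    "ThermalMechanic": (0.4, 77020, 22),
}

# Unweighted mean of the per-case pass rates.
mean_pass = sum(rate for rate, _, _ in results.values()) / len(results)

# Total tokens across all eight cases.
total_tokens = sum(tokens for _, tokens, _ in results.values())

# Estimated characters of generated code: tokens / (tokens per character).
implied_chars = {
    case: round(tokens / productivity)
    for case, (_, tokens, productivity) in results.items()
}

print(f"mean pass rate: {mean_pass:.2f}")
print(f"total tokens:   {total_tokens}")
for case, chars in implied_chars.items():
    print(f"{case:16s} ~{chars} characters")
```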

These results demonstrate MooseAgent's potential for automating finite element simulations while highlighting areas for future improvement, particularly in handling complex multi-physics coupling scenarios and optimizing token efficiency.

Advancing Simulation Automation: Conclusion and Future Work

MooseAgent represents a significant step forward in automating finite element analysis through the MOOSE platform. By combining large language models with a multi-agent system, the framework successfully translates natural language requirements into executable MOOSE input cards through task decomposition and multi-round iterative verification. The integration of MOOSE knowledge in a vector database enhances retrieval accuracy and reduces hallucinations, contributing to the system's overall reliability.

Experimental results validate MooseAgent's potential, with high success rates for problems like steady-state heat conduction and linear elasticity. However, the performance gap when handling complex multi-physics problems, particularly thermal-mechanic coupling, indicates areas for improvement. The main contribution lies in exploring "the automated process from natural language to MOOSE simulation based on technologies such as LLM, multi-agent systems, task decomposition, and multi-round iterative verification," opening new possibilities for automated scientific computing.

Future work will focus on optimizing knowledge retrieval and iteration strategies while incorporating human feedback during the iteration process. These enhancements aim to further improve the framework's performance on complex problems, continuing the journey toward more accessible and efficient finite element simulation tools. As the evaluation of language models for multiphysics reasoning advances, frameworks like MooseAgent will continue to evolve, bridging the gap between natural language understanding and sophisticated scientific computing.
