Which AWS Service Should You Choose? The Ultimate Guide to Making the Right Cloud Decision

How do you navigate the maze of AWS services and choose the best fit for your project?

Choosing the right AWS service feels overwhelming at first. With over 200 services available, even experienced architects can struggle to determine which combination will best serve their needs.

Decision paralysis is real when facing AWS’s vast catalog. This guide cuts through the complexity to help you make confident, informed choices for your specific use case.

We’ll explore the major service categories, examine selection criteria, and provide decision frameworks to match your requirements with the optimal AWS solutions.

Understanding Your Requirements First

Before diving into AWS’s offerings, clearly define what you’re trying to accomplish. This step is often rushed, yet it’s the most crucial for making the right choices.

Business objectives should drive technical decisions, not the other way around. Are you optimizing for speed, cost, compliance, or scalability? Your priorities will point you toward different service combinations.

Consider your team’s expertise too. Some services require specialized knowledge, while others offer simplified management at the cost of some flexibility.

Compute Services: The Foundation

AWS offers multiple ways to run your code, each with distinct advantages:

EC2 (Elastic Compute Cloud) gives you complete control over virtual machines. It’s ideal when you need specific configurations, legacy compatibility, or want to manage your own scaling. EC2 remains the most flexible compute option but requires more management overhead.

Lambda represents the serverless approach. It handles scaling automatically and charges only for requests and execution time. Lambda works well for event-driven workloads and microservices, but each invocation is capped at 15 minutes and 10 GB of memory, so long-running or memory-heavy jobs belong elsewhere.

ECS (Elastic Container Service) and EKS (Elastic Kubernetes Service) bridge the gap, offering container orchestration with different management models. Choose ECS for simplicity or EKS when you require Kubernetes-specific features or want to avoid vendor lock-in.

**Fargate** removes infrastructure management from containers, functioning as “serverless containers.” It combines container flexibility with reduced operational burden, albeit at a higher cost than EC2-hosted containers.
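The trade-offs above can be written down as a rough first-pass filter. The sketch below is only one way to encode them: the `Workload` fields and the `suggest_compute` function are hypothetical names made up for illustration, and the rules are a simplification of the guidance in this section, not an official AWS decision tree.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical workload description; field names are illustrative only."""
    event_driven: bool         # triggered by events rather than running constantly
    max_runtime_seconds: int   # longest expected single execution
    containerized: bool        # already packaged as container images
    needs_kubernetes: bool     # relies on Kubernetes APIs or tooling
    needs_os_control: bool     # custom OS configuration or legacy requirements
    wants_no_servers: bool     # team prefers not to manage hosts


# Lambda's maximum timeout is 15 minutes.
LAMBDA_MAX_SECONDS = 15 * 60


def suggest_compute(w: Workload) -> str:
    """Return a first-guess compute option for the workload."""
    if w.needs_os_control:
        return "EC2"
    if w.containerized:
        orchestrator = "EKS" if w.needs_kubernetes else "ECS"
        launch_type = "Fargate" if w.wants_no_servers else "EC2"
        return f"{orchestrator} on {launch_type}"
    if w.event_driven and w.max_runtime_seconds <= LAMBDA_MAX_SECONDS:
        return "Lambda"
    return "EC2"


if __name__ == "__main__":
    thumbnailer = Workload(
        event_driven=True, max_runtime_seconds=30, containerized=False,
        needs_kubernetes=False, needs_os_control=False, wants_no_servers=True,
    )
    print(suggest_compute(thumbnailer))
```

Treat the result as a starting point for discussion; cost, team skills, and existing tooling can reasonably override it.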

Storage: Data at Rest

Your storage choices impact performance, cost, and accessibility:

S3 (Simple Storage Service) offers object storage designed for eleven nines (99.999999999%) of durability. It excels for static content, backups, and data lakes, with its API enabling programmatic access across services.

EBS (Elastic Block Store) provides persistent block storage for EC2 instances. Different volume types balance performance and cost, from general-purpose (gp3) to provisioned IOPS (io2) for database workloads.

**EFS (Elastic File System)** delivers managed NFS that can be mounted to multiple instances simultaneously. It’s perfect for shared content, though more expensive than block or object alternatives.

**S3 Glacier and S3 Glacier Deep Archive** store rarely accessed data at significantly lower costs, with retrieval times ranging from minutes to hours. Use these for compliance archives and long-term backups.
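Moving data into the archive tiers is usually automated with an S3 lifecycle configuration rather than done by hand. The sketch below only builds the configuration document. The `archive_lifecycle` helper, the `logs/` prefix, and the 90- and 365-day thresholds are assumptions chosen for illustration.

```python
import json


def archive_lifecycle(prefix: str, glacier_after_days: int,
                      deep_archive_after_days: int) -> dict:
    """Build an S3 lifecycle configuration that tiers objects down over time."""
    if deep_archive_after_days <= glacier_after_days:
        raise ValueError("Deep Archive transition must come after the Glacier one")
    return {
        "Rules": [
            {
                "ID": f"archive-{prefix.strip('/') or 'all'}",
                "Status": "Enabled",
                "Filter": {"Prefix": prefix},
                "Transitions": [
                    {"Days": glacier_after_days, "StorageClass": "GLACIER"},
                    {"Days": deep_archive_after_days, "StorageClass": "DEEP_ARCHIVE"},
                ],
            }
        ]
    }


# Illustrative values only: archive logs after 90 days, deep-archive after a year.
config = archive_lifecycle("logs/", glacier_after_days=90, deep_archive_after_days=365)
print(json.dumps(config, indent=2))
```

With boto3, a dictionary of this shape can be passed as the `LifecycleConfiguration` argument of `put_bucket_lifecycle_configuration`. Check minimum storage durations and retrieval fees before settling on thresholds.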

Database Services: The Right Tool for Each Job

The database landscape has evolved beyond simple relational vs. non-relational choices:

RDS (Relational Database Service) manages traditional databases like MySQL, PostgreSQL, and SQL Server. Choose it when you need transaction support and strict schema enforcement without managing the underlying infrastructure.

**Aurora** is AWS’s enhanced MySQL- and PostgreSQL-compatible offering. AWS claims up to five times the throughput of standard MySQL and up to three times that of standard PostgreSQL. Though more expensive than standard RDS, it often justifies its cost through performance gains and reduced operational complexity.

**DynamoDB** provides single-digit millisecond performance at virtually any scale. This NoSQL option works best for applications with known access patterns that need consistent performance regardless of data size.

**DocumentDB** offers MongoDB compatibility for document database needs, while Neptune specializes in graph relationships. These purpose-built options shine for specific data models.

**Redshift** handles analytics workloads with columnar storage and parallel processing. When paired with Redshift Spectrum, it can query exabytes of data in S3, making it perfect for data warehousing.

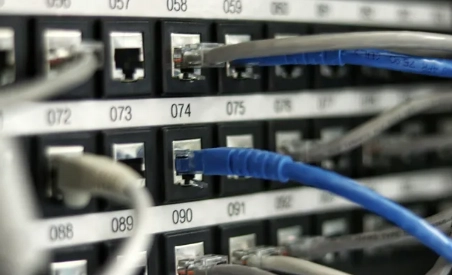

Networking: The Connective Tissue

Networking services determine how components communicate and how users access your application:

VPC (Virtual Private Cloud) forms the foundation of your AWS network. It enables you to create isolated environments with fine-grained security controls through subnet design and security groups.

Route 53 provides DNS management with health checking capabilities. Its routing policies — from simple to weighted and latency-based — give you precise control over traffic distribution.

**CloudFront** accelerates content delivery through edge locations worldwide. Beyond speed benefits, it provides an additional security layer and can reduce your origin servers’ load.

API Gateway manages API endpoints with authentication, throttling, and monitoring. It creates a clean separation between clients and backend implementations, allowing services to evolve independently.
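To make Route 53’s weighted routing concrete: each record receives a share of traffic equal to its weight divided by the sum of all weights. The sketch below simulates that rule locally. The endpoint names, the 90/10 split, and the helper functions are hypothetical, and nothing here calls Route 53.

```python
import random
from collections import Counter


def traffic_share(weights: dict[str, int]) -> dict[str, float]:
    """Expected fraction of traffic per record: weight / sum of weights."""
    total = sum(weights.values())
    if total <= 0:
        # Simplification: this sketch does not model the all-zero case.
        raise ValueError("at least one record needs a positive weight")
    return {name: weight / total for name, weight in weights.items()}


def pick_endpoint(weights: dict[str, int], rng: random.Random) -> str:
    """Choose one endpoint at random, proportionally to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Hypothetical canary rollout: 90% to the current stack, 10% to the new one.
records = {"blue": 90, "green": 10}
print(traffic_share(records))

rng = random.Random(42)  # seeded so repeated runs give the same sample
sample = Counter(pick_endpoint(records, rng) for _ in range(10_000))
print(sample)
```

Shifting the weights gradually (90/10, then 50/50, then 0/100) is a common way to roll out a new version while limiting the blast radius.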

Security Services: Defense in Depth

Security isn’t optional — it’s integrated throughout AWS’s offerings:

IAM (Identity and Access Management) controls who can access what with fine-grained policies. The principle of least privilege should guide your permission strategy, granting only what’s necessary.

AWS Shield protects against DDoS attacks, with the Standard tier included at no cost. The Advanced tier adds specialized protection and response teams for mission-critical applications.

**WAF (Web Application Firewall)** blocks common attack patterns like SQL injection and cross-site scripting. Combined with AWS Firewall Manager, it simplifies security management across accounts.

KMS (Key Management Service) and Secrets Manager safeguard encryption keys and credentials. These services eliminate the dangerous practice of hardcoding sensitive information in your applications.
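As an illustration of least privilege, the sketch below builds an IAM policy document that allows reading objects under one prefix of one bucket and nothing else. It also includes a small check that flags `*` wildcards. The bucket name, prefix, and both helper functions are hypothetical. The policy grammar (`Version`, `Statement`, `Effect`, `Action`, `Resource`) is the standard IAM JSON format.

```python
import json


def read_only_bucket_policy(bucket: str, prefix: str = "") -> dict:
    """Allow s3:GetObject on a single bucket prefix and nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix}*"],
            }
        ],
    }


def find_wildcards(policy: dict) -> list[str]:
    """Flag Allow statements whose Action or Resource is a bare '*'."""
    findings = []
    for statement in policy["Statement"]:
        if statement.get("Effect") != "Allow":
            continue
        for field in ("Action", "Resource"):
            values = statement.get(field, [])
            if isinstance(values, str):  # IAM accepts a string or a list
                values = [values]
            if "*" in values:
                findings.append(f"{statement.get('Sid', 'unnamed')}: {field} is '*'")
    return findings


policy = read_only_bucket_policy("example-reports", "2024/")
print(json.dumps(policy, indent=2))
print(find_wildcards(policy))
```

A check like this catches only the most obvious over-grants. IAM Access Analyzer and similar AWS tooling go much further.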

Monitoring and Management: Visibility is Key

You can’t improve what you can’t measure. AWS’s observability tools provide crucial insights:

**CloudWatch** collects metrics, logs, and events across your resources. Custom dashboards and alarms help you respond to issues before they impact users.

X-Ray traces requests through distributed applications, identifying bottlenecks and failures. This end-to-end visibility proves invaluable when diagnosing complex issues in microservice architectures.

**CloudTrail** logs API calls for security analysis and compliance. It answers the critical “who did what and when” questions that arise during incident investigations.

AWS Config tracks resource configurations and changes over time. This historical record helps with compliance auditing and understanding the evolution of your environment.

Integration Services: Connecting the Pieces

Modern architectures rely on efficient communication between components:

SQS (Simple Queue Service) decouples producers from consumers, improving reliability. It enables asynchronous processing and helps manage traffic spikes without data loss.

SNS (Simple Notification Service) broadcasts messages to multiple subscribers. Its pub/sub model works well for event-driven architectures where multiple systems need notification of the same event.

EventBridge (formerly CloudWatch Events) routes events between AWS services and applications. Its rules engine can trigger workflows based on schedule or in response to system events.

Step Functions coordinates complex workflows with visual modeling. For multi-step processes requiring robust error handling and state management, it provides clear visualization of execution flows.
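For a sense of what Step Functions error handling looks like, here is a sketch of a three-step workflow expressed in Amazon States Language and built as a Python dictionary. The state names, the order-processing scenario, and the placeholder Lambda ARNs are all hypothetical. The `Retry` and `Catch` fields are standard ASL. The `dangling_targets` helper is an illustrative sanity check, not part of any AWS SDK.

```python
import json


def order_workflow(validate_arn: str, charge_arn: str, notify_arn: str) -> dict:
    """Build an ASL definition: validate, charge with retries, handle failure."""
    return {
        "Comment": "Illustrative order workflow",
        "StartAt": "ValidateOrder",
        "States": {
            "ValidateOrder": {
                "Type": "Task",
                "Resource": validate_arn,
                "Next": "ChargePayment",
            },
            "ChargePayment": {
                "Type": "Task",
                "Resource": charge_arn,
                # Retry failed tasks up to 3 times, doubling the wait each time.
                "Retry": [{
                    "ErrorEquals": ["States.TaskFailed"],
                    "IntervalSeconds": 2,
                    "MaxAttempts": 3,
                    "BackoffRate": 2.0,
                }],
                # Anything still failing after the retries goes to a fallback state.
                "Catch": [{"ErrorEquals": ["States.ALL"], "Next": "NotifyFailure"}],
                "Next": "OrderComplete",
            },
            "NotifyFailure": {
                "Type": "Task",
                "Resource": notify_arn,
                "Next": "OrderFailed",
            },
            "OrderComplete": {"Type": "Succeed"},
            "OrderFailed": {"Type": "Fail", "Error": "PaymentFailed"},
        },
    }


def dangling_targets(definition: dict) -> list[str]:
    """Return transition targets that do not exist as states."""
    states = definition["States"]
    targets = {definition["StartAt"]}
    for state in states.values():
        if "Next" in state:
            targets.add(state["Next"])
        for catcher in state.get("Catch", []):
            targets.add(catcher["Next"])
    return sorted(t for t in targets if t not in states)


# Placeholder ARNs; the account ID and function names are made up.
base = "arn:aws:lambda:us-east-1:123456789012:function:"
workflow = order_workflow(base + "validate", base + "charge", base + "notify")
print(json.dumps(workflow, indent=2))
print(dangling_targets(workflow))
```

Keeping retry and fallback logic in the state machine, rather than inside each function, is what lets the execution graph show exactly where a run failed.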

Decision Framework: Making the Choice

With so many options, how do you decide? Consider these factors:

Operational burden vs. control — managed services reduce your maintenance workload but sometimes limit customization. Assess your team’s capacity and expertise realistically.

Cost structure varies significantly between services. Serverless options eliminate idle capacity costs but may become more expensive at consistent high usage. Model your expected workloads to find cost-efficiency sweet spots.
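One way to find that sweet spot is to compute the request volume at which Lambda’s pay-per-use bill matches the flat monthly cost of an always-on instance. The sketch below does that arithmetic. All three prices are placeholder assumptions, not current AWS prices, and the model ignores the free tier, data transfer, and the fact that one instance has finite capacity.

```python
# Placeholder prices for illustration only; look up current pricing for your region.
LAMBDA_PRICE_PER_MILLION_REQUESTS = 0.20
LAMBDA_PRICE_PER_GB_SECOND = 0.0000166667
INSTANCE_MONTHLY_COST = 60.0  # hypothetical always-on instance


def lambda_cost_per_request(avg_ms: float, memory_gb: float) -> float:
    """Cost of one invocation: request fee plus duration times memory."""
    request_fee = LAMBDA_PRICE_PER_MILLION_REQUESTS / 1_000_000
    compute_fee = (avg_ms / 1000) * memory_gb * LAMBDA_PRICE_PER_GB_SECOND
    return request_fee + compute_fee


def lambda_monthly_cost(requests: float, avg_ms: float, memory_gb: float) -> float:
    """Total monthly Lambda bill for a given request volume."""
    return requests * lambda_cost_per_request(avg_ms, memory_gb)


def break_even_requests(instance_monthly_cost: float, avg_ms: float,
                        memory_gb: float) -> float:
    """Monthly request count at which Lambda costs as much as the instance."""
    return instance_monthly_cost / lambda_cost_per_request(avg_ms, memory_gb)


if __name__ == "__main__":
    threshold = break_even_requests(INSTANCE_MONTHLY_COST, avg_ms=100, memory_gb=0.5)
    print(f"Break-even at roughly {threshold:,.0f} requests per month")
```

Below the break-even volume the serverless option is cheaper under these assumptions. Above it, steady traffic starts to favor reserved, always-on capacity.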

**Scaling** characteristics differ between services. Some handle scale automatically while others require manual intervention or pre-planning. Match these to your growth projections and demand patterns.

**Integration** requirements with existing systems may constrain your choices. API compatibility and data migration paths should factor into your decision.
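These four factors can be turned into a simple weighted decision matrix. The sketch below is one possible way to do it, not a standard method. The weights and the 1-to-5 scores are made-up examples that a team would replace with its own judgment.

```python
def rank_options(weights: dict[str, float],
                 scores: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Rank options by weighted average score, highest first."""
    total_weight = sum(weights.values())
    ranked = []
    for option, option_scores in scores.items():
        weighted = sum(weights[c] * option_scores[c] for c in weights)
        ranked.append((option, weighted / total_weight))
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical priorities: low operational burden matters most to this team.
weights = {"operational_burden": 3, "cost": 2, "scaling": 2, "integration": 1}

# Hypothetical 1-5 scores (5 = best fit) for a small event-driven API.
scores = {
    "Lambda":         {"operational_burden": 5, "cost": 3, "scaling": 5, "integration": 3},
    "ECS on Fargate": {"operational_burden": 4, "cost": 3, "scaling": 4, "integration": 4},
    "EC2":            {"operational_burden": 2, "cost": 4, "scaling": 2, "integration": 5},
}

for option, score in rank_options(weights, scores):
    print(f"{option}: {score:.2f}")
```

The value of the exercise lies less in the final number than in forcing the team to state its priorities explicitly before comparing services.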

Common Architectures and Their Service Combinations

Certain service combinations have emerged as proven patterns for specific needs:

**Web applications** typically combine Route 53, CloudFront, API Gateway, Lambda or EC2, and RDS or DynamoDB. This stack balances performance, scalability, and operational simplicity.

Data processing pipelines often leverage S3, Lambda, Step Functions, and Glue for ETL workloads. This combination handles large volumes while minimizing infrastructure management.

Microservice architectures frequently use ECS or EKS with service discovery, coupled with DynamoDB and event-driven communication through SNS and SQS. This approach enables independent scaling and deployment of components.

Machine learning workflows combine S3 for data storage, SageMaker for model training and deployment, and Lambda for inference APIs. This pattern accelerates the path from data to deployed models.

Migration Strategies: Starting from Existing Systems

Few projects start from scratch. When migrating existing workloads:

**Rehosting** (“lift and shift”) moves applications to EC2 with minimal changes. It’s the fastest approach but misses opportunities for cloud-native benefits.

**Replatforming** makes targeted modifications to leverage managed services. For example, moving from self-managed databases to RDS reduces operational overhead while maintaining compatibility.

**Refactoring** redesigns applications to fully leverage cloud capabilities. Though requiring more investment, it delivers the greatest long-term benefits in cost and scalability.

AWS Application Migration Service accelerates rehosting by automating the conversion of source servers to run natively on AWS. It’s particularly useful for large-scale migrations with tight timelines.

Cost Optimization Strategies

Cloud spending can spiral without proper controls. Implement these practices:

**Right-sizing** ensures resources match actual needs rather than theoretical maximums. CloudWatch metrics help identify overprovisioned instances and opportunities for downsizing.

**Reserved Instances and Savings Plans** offer significant discounts for committed usage. Even partial commitments can substantially reduce costs compared to on-demand pricing.

Auto Scaling adapts capacity to demand, reducing waste during low-traffic periods. Combined with proper instance selection, it balances performance and cost effectively.

AWS Cost Explorer and AWS Budgets provide visibility and control over spending. Regular reviews of these tools help identify optimization opportunities and prevent surprise bills.
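A right-sizing review often starts with a rule of thumb like “if 95th-percentile CPU stays low, the instance is probably too big.” The sketch below applies that rule to a list of utilization samples such as you might export from CloudWatch. The 40% threshold, the function names, and the sample data are assumptions for illustration, and memory, network, and disk deserve the same scrutiny before any downsizing.

```python
def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile for a whole-number pct between 1 and 100."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = (pct * len(ordered) + 99) // 100  # integer ceiling of pct% of n
    return ordered[max(rank, 1) - 1]


def is_overprovisioned(cpu_percent_samples: list[float],
                       p95_threshold: float = 40.0) -> bool:
    """Flag an instance whose p95 CPU stays under the (assumed) threshold."""
    return percentile(cpu_percent_samples, 95) < p95_threshold


# Hypothetical hourly CPU averages: mostly idle, with one short spike.
mostly_idle = [10.0] * 18 + [35.0, 90.0]
steady_load = [70.0] * 20

print(is_overprovisioned(mostly_idle))
print(is_overprovisioned(steady_load))
```

Using a high percentile instead of the maximum keeps a single spike from hiding an instance that is idle almost all the time.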

Avoiding Common Pitfalls

Even experienced teams encounter challenges when navigating AWS services:

Over-engineering solutions with unnecessarily complex architectures. Start simple and add complexity only when needed, guided by actual requirements rather than theoretical scenarios.

Neglecting data transfer costs between services and regions. These can accumulate quickly, especially with data-intensive applications spanning multiple availability zones or regions.

Ignoring performance boundaries of managed services. Each service has documented limits — understand these before committing to an architecture that might hit scaling constraints.

Security misconfiguration, particularly with public access to storage buckets and overly permissive IAM policies. Follow the principle of least privilege and use AWS security tools to identify vulnerabilities.

Evolving Your Architecture

Your AWS architecture shouldn’t remain static:

Start with core services that address immediate needs rather than implementing everything at once. This incremental approach reduces complexity and accelerates time to market.

Regularly reevaluate service choices as requirements change and AWS launches new offerings. What was optimal a year ago might not be today.

Consider hybrid approaches that combine different service models where appropriate. Many successful architectures use serverless functions alongside container-based microservices and traditional EC2 instances.

Embrace infrastructure as code using CloudFormation or Terraform to document your architecture decisions. This approach improves reproducibility and enables systematic evolution.
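As a small taste of infrastructure as code, the sketch below generates a CloudFormation template for a single S3 bucket with versioning enabled and public access blocked. The `bucket_template` helper and the `ReportsBucket` logical ID are hypothetical. The resource type and property names are those of the `AWS::S3::Bucket` resource.

```python
import json


def bucket_template(logical_id: str, versioning: bool = True) -> dict:
    """Build a minimal CloudFormation template for one private S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative private bucket",
        "Resources": {
            logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {
                        "Status": "Enabled" if versioning else "Suspended"
                    },
                    # Block every form of public access by default.
                    "PublicAccessBlockConfiguration": {
                        "BlockPublicAcls": True,
                        "BlockPublicPolicy": True,
                        "IgnorePublicAcls": True,
                        "RestrictPublicBuckets": True,
                    },
                },
            }
        },
    }


template = bucket_template("ReportsBucket")
print(json.dumps(template, indent=2))
```

Committing the generated template to version control gives you a reviewable history of each architecture decision, which is the point of treating infrastructure as code.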

Final thoughts

The truth about AWS service selection is that context matters more than absolutes. What works brilliantly for one use case may be completely wrong for another.

Continuous learning remains essential as AWS regularly launches new services and enhances existing ones. Stay informed through AWS blogs, re:Invent announcements, and community resources.

Remember that the best architecture is one that meets your current needs while remaining flexible enough to evolve with your requirements. Don’t chase perfection at the expense of progress.

By understanding your requirements, applying appropriate decision criteria, and learning from established patterns, you can navigate AWS’s service landscape confidently and build solutions that deliver real business value.

