The Ethics of Using AI for Surveillance and Fraud Detection

Artificial Intelligence (AI) is transforming the way we detect fraud and maintain security. From monitoring financial transactions in real time to analyzing surveillance footage for suspicious behavior, AI is playing a key role in reducing fraud and protecting both organizations and individuals. But as these technologies become more powerful, they also raise critical ethical questions:

- Where should we draw the line between safety and privacy?

- How do we ensure fairness and accountability in AI-driven decisions?

- Who watches the watchers?

Let’s explore the ethical dimensions of using AI for surveillance and fraud detection.

The Power of AI in Fraud Detection and Surveillance

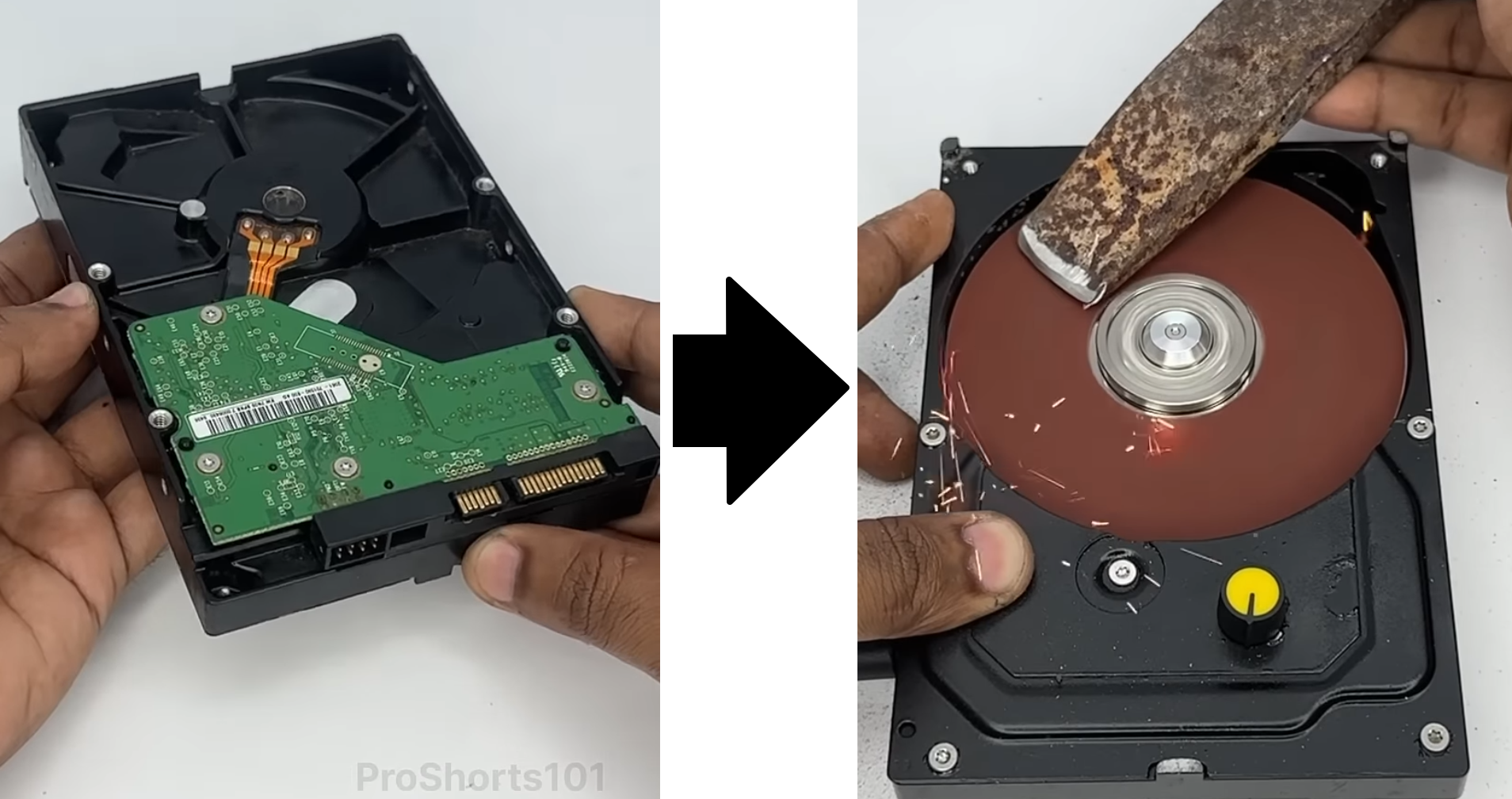

AI systems are incredibly efficient at detecting patterns and anomalies that might go unnoticed by humans. In fraud detection, machine learning models can flag suspicious transactions, synthetic identities, or unauthorized access attempts with impressive accuracy. In surveillance, facial recognition and behavior analysis can enhance security in public and private spaces.
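To make "detecting anomalies" concrete, here is a minimal sketch of one simple technique: flagging transaction amounts that sit far from a customer's typical spending, using a modified z-score based on the median. The function name `flag_anomalies`, the sample amounts, and the 3.5 threshold are illustrative assumptions; production fraud systems typically combine many signals and learned models rather than a single statistic.

```python
from statistics import median


def flag_anomalies(amounts, threshold=3.5):
    """Return the indices of amounts whose modified z-score exceeds threshold.

    The modified z-score uses the median and the median absolute deviation
    (MAD), so a single extreme value does not distort the baseline.
    """
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        # At least half the amounts equal the median; this score is undefined.
        return []
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]


# Hypothetical purchase history for one account (illustrative values only).
history = [42.0, 38.5, 51.0, 47.25, 40.0, 44.5, 2900.0, 39.0]
print(flag_anomalies(history))  # [6]
```

A flag like this is only a prompt for review. Whether the large purchase is fraud or a legitimate one-off is exactly the kind of judgment the rest of this article is concerned with.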

This technology has clear benefits:

- Early detection of fraud, reducing financial losses

- Increased efficiency, freeing up human investigators

- Scalability, monitoring massive data sets in real time

- Automation, reducing human error and bias (at least in theory)

But these benefits come with risks.

Ethical Concerns

1. Privacy Invasion

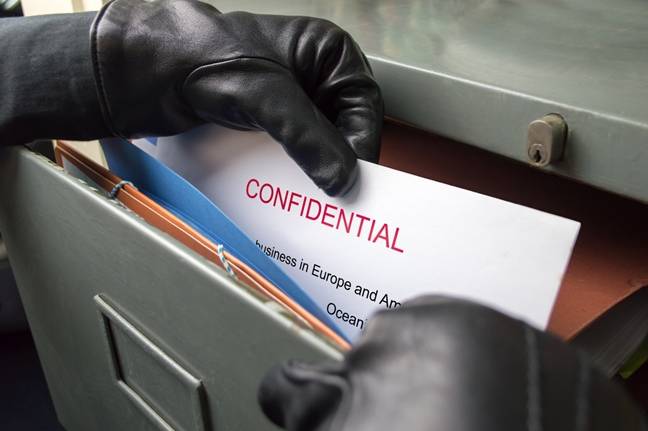

AI surveillance often involves tracking individuals without their consent—whether through biometric data, online behavior, or transaction monitoring. When organizations or governments deploy AI tools without transparency, it erodes public trust and threatens the right to privacy.

Key question: Are we becoming a surveillance society under the guise of safety?

2. Bias and Discrimination

AI systems are only as fair as the data they’re trained on. If historical data reflects human bias (e.g., racial profiling, socioeconomic inequality), the AI may reinforce these patterns. In fraud detection, biased models may unfairly flag certain demographic groups as "high risk" based on flawed assumptions.

Solution: Ongoing audits, diverse datasets, and transparent model development are essential.
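As a sketch of what one step of an ongoing audit could look like, the snippet below compares how often a model flags people in different groups. The group labels, the sample records, and the helper names `flag_rates` and `rate_ratio` are hypothetical. A gap in flag rates does not prove bias on its own; it is a signal that the model and its training data deserve a closer look, ideally alongside error rates measured against confirmed outcomes.

```python
from collections import defaultdict


def flag_rates(records):
    """Compute the share of flagged cases per group.

    records is an iterable of (group, was_flagged) pairs.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in records:
        totals[group] += 1
        flagged[group] += int(was_flagged)
    return {group: flagged[group] / totals[group] for group in totals}


def rate_ratio(rates):
    """Lowest group flag rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical audit sample: (group label, model flagged the case?).
records = [
    ("A", True), ("A", False), ("A", False), ("A", False),
    ("B", True), ("B", True), ("B", False), ("B", False),
]
rates = flag_rates(records)
print(rates)              # {'A': 0.25, 'B': 0.5}
print(rate_ratio(rates))  # 0.5
```

Here group B is flagged twice as often as group A, which is the kind of result an audit would escalate for investigation.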

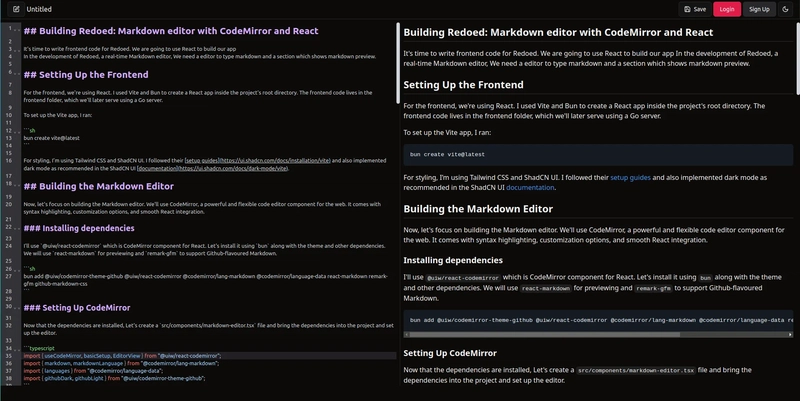

3. Lack of Transparency and Accountability

AI decisions—especially those made by black-box models—are often difficult to explain. If someone is denied access to a service, flagged for fraud, or surveilled unfairly, how can they appeal the decision?

Ethical AI must include explainability and clear channels for recourse.
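One way to support explainability is to return the reasons behind a decision along with the decision itself. The sketch below assumes a deliberately simple, transparent scoring model: the feature names, weights, and threshold are invented for illustration and do not come from any real system. Black-box models need dedicated explanation techniques, but the goal is the same: give the affected person something specific to contest.

```python
# Hypothetical weights for a transparent linear risk score.
WEIGHTS = {"amount_vs_typical": 0.5, "new_device": 2.0, "foreign_ip": 1.0}
THRESHOLD = 2.5  # Scores at or above this value are flagged for review.


def explain(features):
    """Score one transaction and list the features that raised the score."""
    contributions = {
        name: WEIGHTS[name] * value for name, value in features.items()
    }
    score = sum(contributions.values())
    # Keep only features that pushed the score up, largest contribution first.
    reasons = sorted(
        (name for name, c in contributions.items() if c > 0),
        key=lambda name: contributions[name],
        reverse=True,
    )
    return {"score": score, "flagged": score >= THRESHOLD, "reasons": reasons}


decision = explain(
    {"amount_vs_typical": 3.0, "new_device": 1.0, "foreign_ip": 0.0}
)
print(decision)
# {'score': 3.5, 'flagged': True, 'reasons': ['new_device', 'amount_vs_typical']}
```

With the reasons recorded next to the outcome, a reviewer handling an appeal can see that the flag rested on a new device and an unusually large amount, and can check whether either was legitimate.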

4. Function Creep

A tool built for fraud detection might later be used for other purposes—such as monitoring employees, enforcing laws, or even tracking political dissent. This repurposing, often without consent, raises major ethical red flags.
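A technical guard against this kind of repurposing is purpose limitation: every data access must declare why it is happening, and only purposes approved in advance are allowed. The following is a minimal sketch under assumed names; `ALLOWED_PURPOSES`, `PurposeError`, and `access` are hypothetical, and a real deployment would enforce this in the data platform and tie it to governance review rather than a dictionary in code.

```python
# Hypothetical registry of the purposes each dataset was collected for.
ALLOWED_PURPOSES = {
    "transactions": {"fraud_detection"},
    "camera_footage": {"building_security"},
}


class PurposeError(PermissionError):
    """Raised when data is requested for a purpose it was not collected for."""


def access(dataset, purpose, audit_log):
    """Allow access only for an approved purpose and log every attempt."""
    allowed = purpose in ALLOWED_PURPOSES.get(dataset, set())
    audit_log.append((dataset, purpose, allowed))
    if not allowed:
        raise PurposeError(f"{dataset!r} may not be used for {purpose!r}")
    return f"access granted to {dataset} for {purpose}"


log = []
print(access("transactions", "fraud_detection", log))
try:
    access("transactions", "employee_monitoring", log)
except PurposeError as err:
    print("blocked:", err)
print(log)
```

Denied attempts are logged as well as granted ones, so oversight bodies can see when someone tried to stretch a tool beyond its original purpose.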

5. Consent and Data Ownership

Who owns the data that AI models rely on? Were users properly informed that their data would be used in this way? In many cases, individuals aren’t aware their behavior is being analyzed, let alone how it's being used to train AI systems.

Building Ethical AI Systems: Best Practices

- Transparency: Inform users when AI is being used, how it works, and what data it collects.

- Consent: Ensure meaningful consent, especially for biometric and sensitive data.

- Fairness: Use diverse data and regularly test for bias.

- Accountability: Create mechanisms for appealing AI-driven decisions.

- Oversight: Establish clear governance for how AI is implemented and monitored.

Final Thoughts

AI is a powerful ally in the fight against fraud and crime, but power must be wielded responsibly. The goal isn’t to abandon AI, but to build ethical frameworks that protect both security and individual rights. In a world where the lines between surveillance and protection are increasingly blurred, the true challenge is designing AI systems that are not just smart—but just.
