Simulating Chaos: My Latest Foray into Failure Testing

Resilient apps aren’t just built, they’re battle-tested. This week, I got my second real taste of chaos engineering using LocalStack’s Chaos Dashboard (the first was for my interview project), and let me tell you: it was both humbling and weirdly fun.

I’m still deep into building my serverless meme caption game (yes, still), but I wanted to see how it holds up under failure. Spoiler: not great! But that’s the point.

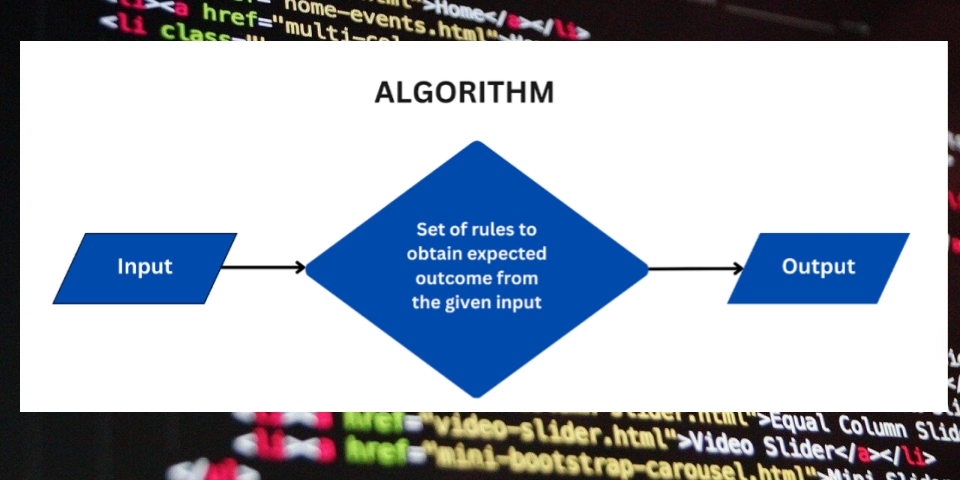

Wait… what is chaos engineering again?

At a high level, chaos engineering is the practice of intentionally introducing failures into your system to observe how it behaves.

The goal isn’t to break things just for fun (though it is kinda fun). It’s to build confidence that your app can recover gracefully when issues happen in real life: network glitches, service outages, bad configs, and so on.

With LocalStack (the pro version, sorry), I could do this locally using the Chaos Dashboard, which makes it ridiculously easy to simulate things like:

- DynamoDB returning 500 errors

- S3 being unavailable

- Lambda timing out

- And a bunch more
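To make the idea concrete, here is a minimal sketch of fault injection in plain Python. It is not how LocalStack implements its experiments; `FaultInjector`, `InjectedFault`, and `save_vote` are hypothetical names invented for illustration. The wrapper makes a chosen fraction of calls fail on purpose, which is roughly what flipping a chaos switch does to a service call.

```python
import random


class InjectedFault(Exception):
    """Stands in for a 500-style error from a cloud service."""


class FaultInjector:
    """Wraps a callable and makes a fraction of its calls fail on purpose."""

    def __init__(self, probability, seed=None):
        self.probability = probability    # 0.0 = never fail, 1.0 = always fail
        self._rng = random.Random(seed)   # seedable so an experiment is repeatable

    def wrap(self, func):
        def wrapper(*args, **kwargs):
            # random() is in [0.0, 1.0), so probability=1.0 always triggers
            if self._rng.random() < self.probability:
                raise InjectedFault(f"injected failure in {func.__name__}")
            return func(*args, **kwargs)
        return wrapper


def save_vote(caption_id):
    """Hypothetical stand-in for the real database write."""
    return {"caption_id": caption_id, "saved": True}


if __name__ == "__main__":
    chaos = FaultInjector(probability=1.0)  # failure switch flipped fully on
    flaky_save_vote = chaos.wrap(save_vote)
    try:
        flaky_save_vote("caption-42")
    except InjectedFault as exc:
        print(f"app saw: {exc}")
```

Setting `probability` somewhere between 0 and 1 gives intermittent failures, which tend to be harder to handle well than a full outage.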

My Setup: Meme App Meets Mayhem

I’ve got a simple serverless app:

- Users upload memes to S3

- They vote on captions stored in DynamoDB

- Everything’s wired up via Lambda + API Gateway

- I'm using Terraform to manage infra, and running it all in LocalStack

Once I had the basic flow working, I launched the Chaos Dashboard, clicked the “DynamoDB Error” experiment… and watched my app fall apart. Literally.

The Lambda function that calls PutItemAsync in DynamoDB started throwing internal server errors like confetti.

What Went Wrong (And Why That’s Good)

This wasn’t a polished app. It had:

- No retries

- No fallback logic

- No graceful error handling

And I got to see all of that immediately once the chaos kicked in.
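For a sense of what the missing retry piece could look like, here is a small sketch of retry with exponential backoff and jitter. It is written in Python purely for illustration and is not the app's actual code; `TransientError`, `retry_with_backoff`, and `make_flaky_put` are made-up names, and the attempt count and delays are arbitrary assumptions.

```python
import random
import time


class TransientError(Exception):
    """Stands in for a retryable failure such as a 500 from a database."""


def retry_with_backoff(func, max_attempts=4, base_delay=0.1, sleep=time.sleep):
    """Call func(); on TransientError wait and retry, doubling the delay cap each time."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller decide what to do
            cap = base_delay * 2 ** (attempt - 1)
            sleep(random.uniform(0, cap))  # jitter: random wait up to the cap


def make_flaky_put(failures):
    """Hypothetical write that fails `failures` times, then succeeds."""
    calls = {"count": 0}

    def put_item():
        calls["count"] += 1
        if calls["count"] <= failures:
            raise TransientError("simulated 500")
        return "ok after {} calls".format(calls["count"])

    return put_item


if __name__ == "__main__":
    print(retry_with_backoff(make_flaky_put(failures=2)))  # prints "ok after 3 calls"
```

Retries only help with brief hiccups. If the failure lasts longer than the retry budget, the error still surfaces, so the caller needs a fallback or a clean error path as well.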

Takeaway:

If your app can’t tolerate even a brief hiccup from a cloud service, it’s not ready for prod. And without chaos testing, you might not know until it’s too late.

My Favorite Part: Testing in LocalStack > Prod Nightmares

What I loved about this:

- I didn’t need to spin up a real AWS environment

- I didn’t risk breaking anything critical

- I could toggle failure modes with a literal switch

I will be adding retries and proper logging soon. But even just running this gave me a new perspective on what “robust” actually means.
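As a rough idea of where graceful error handling and logging could go, here is a Python sketch of a Lambda-style handler that logs the failure and returns a 503 instead of crashing. Everything in it is an assumption for illustration: `handle_vote`, `StorageUnavailable`, the `caption_id` field, and the injected `save_vote` callable are hypothetical, and the real app may be structured quite differently.

```python
import json
import logging

logger = logging.getLogger("meme_votes")


class StorageUnavailable(Exception):
    """Stands in for a failed database write."""


def handle_vote(event, save_vote):
    """Record a vote; on a storage failure, log it and answer with a 503."""
    body = json.loads(event.get("body") or "{}")
    caption_id = body.get("caption_id")
    if not caption_id:
        return {"statusCode": 400,
                "body": json.dumps({"error": "caption_id is required"})}
    try:
        save_vote(caption_id)
    except StorageUnavailable:
        # logger.exception records the stack trace alongside the message
        logger.exception("vote write failed for caption_id=%s", caption_id)
        return {
            "statusCode": 503,
            "headers": {"Retry-After": "5"},
            "body": json.dumps({"error": "Voting is temporarily unavailable."}),
        }
    return {"statusCode": 200,
            "body": json.dumps({"caption_id": caption_id, "voted": True})}
```

Passing `save_vote` in as a parameter keeps the handler easy to exercise with a deliberately broken write function, which is the same trick the chaos experiment plays on the real service.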

Turning This into a Talk

This experiment started as a blog post, but it’s shaping up to be something bigger. I’m putting together a lightning talk based on this journey: equal parts meme app, chaos engineering, and “what not to do.”

My goal?

Take this talk on the road to show other devs that you don’t need to be Netflix to start building resilient systems. You just need a curious mind, a busted app, and some good local chaos.

Coming Soon: More Chaos. More Learning.

Next up, I want to test how my app handles:

- S3 outages (can users still vote on memes?)

- Lambda timeouts (will the frontend hang or recover?)

- Multi-service chaos (because AWS failures don’t happen in isolation)

Still building. Still breaking. Still blogging.

If you’ve dabbled in chaos engineering, or you’re curious about trying it, I’d love to hear about it!

Hit me up on Twitter or LinkedIn and let’s swap stories.

Until next week!
