Pathology AI Breakthrough: Train SOTA Models With 1000x Less Data

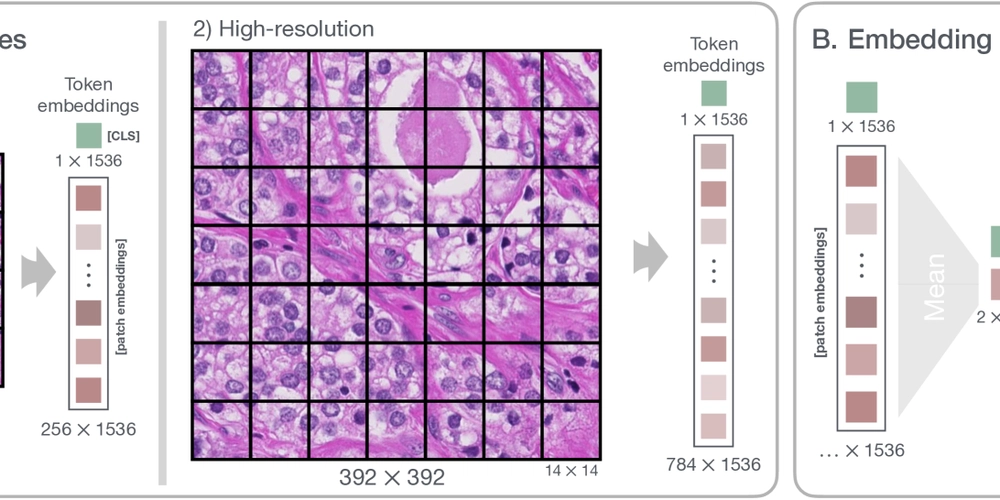


This is a Plain English Papers summary of a research paper called Pathology AI Breakthrough: Train SOTA Models With 1000x Less Data. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

- Researchers trained pathology foundation models using only 70,000 patches

- Achieved performance comparable to models trained on 80 million patches

- Used contrastive learning and medical imaging transformers

- Results suggest smaller, more diverse datasets are more efficient

- Method enables high-quality models with far fewer computational resources
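The summary says only that the models were trained with contrastive learning. It does not say which contrastive objective was used. As a rough illustration of the general idea, here is a minimal sketch of an InfoNCE-style loss of the kind popularized by SimCLR. The specific loss, the temperature of 0.1, and the helper names (`l2_normalize`, `cosine`, `info_nce_loss`) are assumptions for illustration, not the paper's method. In a real pipeline, the vectors would be embeddings produced by a transformer encoder from two augmented views of the same tissue patch. Here, tiny hand-written vectors stand in for them.

```python
import math
from typing import List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length (vectors are assumed to be nonzero)."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two embeddings."""
    return sum(x * y for x, y in zip(l2_normalize(a), l2_normalize(b)))


def info_nce_loss(anchors: List[Vector], positives: List[Vector],
                  temperature: float = 0.1) -> float:
    """Mean InfoNCE loss over a batch (hypothetical helper, assumed objective).

    anchors[i] and positives[i] are two views of the same patch; every other
    positives[j] in the batch serves as a negative for anchors[i].
    """
    n = len(anchors)
    total = 0.0
    for i in range(n):
        # Similarity of anchor i to every candidate, sharpened by the temperature.
        logits = [cosine(anchors[i], positives[j]) / temperature for j in range(n)]
        # Log-sum-exp with max subtraction for numerical stability.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
        # Cross-entropy with the matching positive as the correct "class".
        total += log_denom - logits[i]
    return total / n


if __name__ == "__main__":
    anchors = [[1.0, 0.0], [0.0, 1.0]]
    aligned = info_nce_loss(anchors, [[0.9, 0.1], [0.1, 0.9]])
    shuffled = info_nce_loss(anchors, [[0.1, 0.9], [0.9, 0.1]])
    print(aligned < shuffled)  # True: matched views give a lower loss
```

The loss is low when each patch's two views embed close together and far from the other patches in the batch, so the encoder learns useful features without labels. For scale, 80,000,000 / 70,000 ≈ 1,143, which is where the roughly 1000x figure in the title comes from.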

Plain English Explanation

Medical AI has a data problem. To build good models for analyzing medical images like tissue slides, researchers typically need enormous datasets - often tens of millions of image patches. This creates significant barriers: gathering and storing that much medical data is diffic...
