Open-source Granite

The Granite TimeSeries model is completely open source and available on Hugging Face.

Introduction

Time series data is a sequence of data points collected or recorded at regular time intervals. These data points can represent various measurements, observations, or events, and they are ordered chronologically. Time series data is used in a wide range of fields because it lets us analyze how things change over time and make predictions about the future. Key applications include:

- Forecasting and Prediction

- Anomaly Detection

- Understanding and Analysis

- Control Systems

- Optimization

In short, time series data and its analysis are crucial for understanding the dynamics of various systems, making informed decisions, and planning for the future across diverse domains.

Excerpt from Hugging Face: “TinyTimeMixers (TTMs) are compact pre-trained models for Multivariate Time-Series Forecasting, open-sourced by IBM Research. With less than 1 Million parameters, TTM (accepted in NeurIPS 24) introduces the notion of the first-ever ‘tiny’ pre-trained models for Time-Series Forecasting.

TTM outperforms several popular benchmarks demanding billions of parameters in zero-shot and few-shot forecasting. TTMs are lightweight forecasters, pre-trained on publicly available time series data with various augmentations. TTM provides state-of-the-art zero-shot forecasts and can easily be fine-tuned for multi-variate forecasts with just 5% of the training data to be competitive. Refer to our paper for more details.”

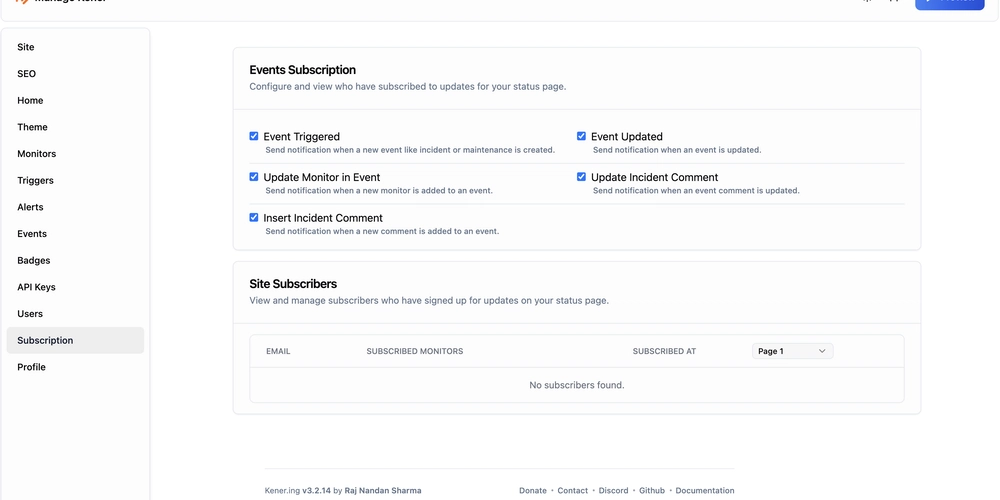

Simple test — Getting started with TinyTimeMixer

How do you test the Granite model, which is available on Hugging Face as an open model?

Just fetch the samples from GitHub and try them. The very first notebook runs like a charm locally on my laptop.

```shell
# Install the tsfm library (in a notebook cell, prefix the command with "!")
pip install "granite-tsfm[notebooks] @ git+https://github.com/ibm-granite/granite-tsfm.git@v0.2.22"
```

```python
import math
import os
import tempfile
import warnings

import pandas as pd
from torch.optim import AdamW
from torch.optim.lr_scheduler import OneCycleLR
from transformers import EarlyStoppingCallback, Trainer, TrainingArguments, set_seed
from transformers.integrations import INTEGRATION_TO_CALLBACK

from tsfm_public import TimeSeriesPreprocessor, TrackingCallback, count_parameters, get_datasets
from tsfm_public.toolkit.get_model import get_model
from tsfm_public.toolkit.lr_finder import optimal_lr_finder
from tsfm_public.toolkit.visualization import plot_predictions

# Suppress all warnings to keep the notebook output readable
warnings.filterwarnings("ignore")
```

```python
# Set seed for reproducibility
SEED = 42
set_seed(SEED)

# TTM model path. The default model path is Granite-R2. Below, you can choose other TTM releases.
TTM_MODEL_PATH = "ibm-granite/granite-timeseries-ttm-r2"
# TTM_MODEL_PATH = "ibm-granite/granite-timeseries-ttm-r1"
# TTM_MODEL_PATH = "ibm-research/ttm-research-r2"

# Context length, i.e. the length of the history.
# Currently supported values are: 512/1024/1536 for Granite-TTM-R2 and Research-Use-TTM-R2,
# and 512/1024 for Granite-TTM-R1
CONTEXT_LENGTH = 512

# Granite-TTM-R2 supports forecast lengths up to 720 and Granite-TTM-R1 supports forecast lengths up to 96
PREDICTION_LENGTH = 96

TARGET_DATASET = "etth1"
dataset_path = "https://raw.githubusercontent.com/zhouhaoyi/ETDataset/main/ETT-small/ETTh1.csv"

# Results dir
OUT_DIR = "ttm_finetuned_models/"
```
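To make the two length settings concrete: each sample consists of `CONTEXT_LENGTH` past time steps followed by `PREDICTION_LENGTH` future steps to forecast. The sketch below is plain Python and not the tsfm implementation; `sliding_windows` is a hypothetical helper, and the library's own windowing (stride, padding) may differ.

```python
def sliding_windows(series, context_length, prediction_length):
    """Yield (history, future) pairs taken from consecutive positions of `series`."""
    window = context_length + prediction_length
    for start in range(len(series) - window + 1):
        history = series[start : start + context_length]
        future = series[start + context_length : start + window]
        yield history, future


# Toy series of 10 readings with a context of 4 steps and a horizon of 2 steps
toy_series = list(range(10))
pairs = list(sliding_windows(toy_series, context_length=4, prediction_length=2))

print(len(pairs))  # number of (history, future) samples
print(pairs[0])    # first sample: 4 history values, then the 2 values to predict
```

With the settings used here (512 and 96), each sample would span 608 consecutive rows of the dataset.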

```python
# Dataset (TARGET_DATASET and dataset_path are already defined in the configuration above)
timestamp_column = "date"
id_columns = []  # mention the ids that uniquely identify a time-series.
target_columns = ["HUFL", "HULL", "MUFL", "MULL", "LUFL", "LULL", "OT"]

# Row-index boundaries of the train/validation/test splits
split_config = {
    "train": [0, 8640],
    "valid": [8640, 11520],
    "test": [11520, 14400],
}

data = pd.read_csv(
    dataset_path,
    parse_dates=[timestamp_column],
)

column_specifiers = {
    "timestamp_column": timestamp_column,
    "id_columns": id_columns,
    "target_columns": target_columns,
    "control_columns": [],
}
```
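One way to read the numbers in `split_config`: ETTh1 is sampled hourly, so with 30-day months the boundaries correspond to 12 months of training data followed by 4 months each for validation and test. The sketch below only reproduces that arithmetic; `rows_for_months` is a hypothetical helper and the 30-day month is an assumption.

```python
HOURS_PER_DAY = 24
DAYS_PER_MONTH = 30  # assumption: every month is counted as 30 days


def rows_for_months(months):
    """Number of hourly rows covered by the given number of 30-day months."""
    return months * DAYS_PER_MONTH * HOURS_PER_DAY


# 12 months train, then 4 months validation, then 4 months test
derived_split = {
    "train": [0, rows_for_months(12)],
    "valid": [rows_for_months(12), rows_for_months(16)],
    "test": [rows_for_months(16), rows_for_months(20)],
}

for name, (start, end) in derived_split.items():
    print(f"{name}: rows {start} to {end} ({end - start} hourly rows)")
```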

```python
def zeroshot_eval(dataset_name, batch_size, context_length=512, forecast_length=96):
    # Get data
    tsp = TimeSeriesPreprocessor(
        **column_specifiers,
        context_length=context_length,
        prediction_length=forecast_length,
        scaling=True,
        encode_categorical=False,
        scaler_type="standard",
    )

    # Load model
    zeroshot_model = get_model(
        TTM_MODEL_PATH,
        context_length=context_length,
        prediction_length=forecast_length,
        freq_prefix_tuning=False,
        freq=None,
        prefer_l1_loss=False,
        prefer_longer_context=True,
    )

    dset_train, dset_valid, dset_test = get_datasets(
        tsp, data, split_config, use_frequency_token=zeroshot_model.config.resolution_prefix_tuning
    )

    temp_dir = tempfile.mkdtemp()

    # zeroshot_trainer
    zeroshot_trainer = Trainer(
        model=zeroshot_model,
        args=TrainingArguments(
            output_dir=temp_dir,
            per_device_eval_batch_size=batch_size,
            seed=SEED,
            report_to="none",
        ),
    )

    # evaluate = zero-shot performance
    print("+" * 20, "Test MSE zero-shot", "+" * 20)
    zeroshot_output = zeroshot_trainer.evaluate(dset_test)
    print(zeroshot_output)

    # get predictions
    predictions_dict = zeroshot_trainer.predict(dset_test)
    predictions_np = predictions_dict.predictions[0]
    print(predictions_np.shape)

    # get backbone embeddings (if needed for further analysis)
    backbone_embedding = predictions_dict.predictions[1]
    print(backbone_embedding.shape)

    # plot
    plot_predictions(
        model=zeroshot_trainer.model,
        dset=dset_test,
        plot_dir=os.path.join(OUT_DIR, dataset_name),
        plot_prefix="test_zeroshot",
        indices=[685, 118, 902, 1984, 894, 967, 304, 57, 265, 1015],
        channel=0,
    )


zeroshot_eval(
    dataset_name=TARGET_DATASET, context_length=CONTEXT_LENGTH, forecast_length=PREDICTION_LENGTH, batch_size=64
)
```
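The preprocessor in `zeroshot_eval` is configured with `scaling=True` and `scaler_type="standard"`. Standard scaling usually means subtracting the mean and dividing by the standard deviation of each channel, with both statistics taken from the training split. The standard-library sketch below illustrates that idea on a single toy channel; `fit_standard_scaler`, `scale`, `unscale`, and the values are hypothetical, and this is not the tsfm implementation.

```python
import statistics


def fit_standard_scaler(train_values):
    """Return the mean and population standard deviation of the training values."""
    mean = statistics.fmean(train_values)
    std = statistics.pstdev(train_values)
    return mean, std


def scale(values, mean, std):
    """Map raw values to z-scores using the training statistics."""
    return [(v - mean) / std for v in values]


def unscale(values, mean, std):
    """Map z-scores back to the original measurement units."""
    return [v * std + mean for v in values]


train = [10.0, 12.0, 14.0, 16.0]  # toy training channel
test = [18.0, 20.0]               # toy test channel

mean, std = fit_standard_scaler(train)
scaled_test = scale(test, mean, std)
restored = unscale(scaled_test, mean, std)

print(mean, std)
print(scaled_test)
print(restored)
```

If the evaluation runs on scaled data, the printed MSE is expressed in units of the training standard deviation rather than in the original measurement units.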

```python
def fewshot_finetune_eval(
    dataset_name,
    batch_size,
    learning_rate=None,
    context_length=512,
    forecast_length=96,
    fewshot_percent=5,
    freeze_backbone=True,
    num_epochs=50,
    save_dir=OUT_DIR,
    loss="mse",
    quantile=0.5,
):
    out_dir = os.path.join(save_dir, dataset_name)

    print("-" * 20, f"Running few-shot {fewshot_percent}%", "-" * 20)

    # Data prep: Get dataset
    tsp = TimeSeriesPreprocessor(
        **column_specifiers,
        context_length=context_length,
        prediction_length=forecast_length,
        scaling=True,
        encode_categorical=False,
        scaler_type="standard",
    )

    # change head dropout to 0.7 for ett datasets
    if "ett" in dataset_name:
        finetune_forecast_model = get_model(
            TTM_MODEL_PATH,
            context_length=context_length,
            prediction_length=forecast_length,
            freq_prefix_tuning=False,
            freq=None,
            prefer_l1_loss=False,
            prefer_longer_context=True,
            # Can also provide TTM Config args
            head_dropout=0.7,
            loss=loss,
            quantile=quantile,
        )
    else:
        finetune_forecast_model = get_model(
            TTM_MODEL_PATH,
            context_length=context_length,
            prediction_length=forecast_length,
            freq_prefix_tuning=False,
            freq=None,
            prefer_l1_loss=False,
            prefer_longer_context=True,
            # Can also provide TTM Config args
            loss=loss,
            quantile=quantile,
        )

    dset_train, dset_val, dset_test = get_datasets(
        tsp,
        data,
        split_config,
        fewshot_fraction=fewshot_percent / 100,
        fewshot_location="first",
        use_frequency_token=finetune_forecast_model.config.resolution_prefix_tuning,
    )

    if freeze_backbone:
        print(
            "Number of params before freezing backbone",
            count_parameters(finetune_forecast_model),
        )

        # Freeze the backbone of the model
        for param in finetune_forecast_model.backbone.parameters():
            param.requires_grad = False

        # Count params
        print(
            "Number of params after freezing the backbone",
            count_parameters(finetune_forecast_model),
        )

    # Find optimal learning rate
    # Use with caution: Set it manually if the suggested learning rate is not suitable
    if learning_rate is None:
        learning_rate, finetune_forecast_model = optimal_lr_finder(
            finetune_forecast_model,
            dset_train,
            batch_size=batch_size,
        )
        print("OPTIMAL SUGGESTED LEARNING RATE =", learning_rate)

    print(f"Using learning rate = {learning_rate}")

    finetune_forecast_args = TrainingArguments(
        output_dir=os.path.join(out_dir, "output"),
        overwrite_output_dir=True,
        learning_rate=learning_rate,
        num_train_epochs=num_epochs,
        do_eval=True,
        eval_strategy="epoch",
        per_device_train_batch_size=batch_size,
        per_device_eval_batch_size=batch_size,
        dataloader_num_workers=8,
        report_to="none",
        save_strategy="epoch",
        logging_strategy="epoch",
        save_total_limit=1,
        logging_dir=os.path.join(out_dir, "logs"),  # Make sure to specify a logging directory
        load_best_model_at_end=True,  # Load the best model when training ends
        metric_for_best_model="eval_loss",  # Metric to monitor for early stopping
        greater_is_better=False,  # For loss
        seed=SEED,
    )

    # Create the early stopping callback
    early_stopping_callback = EarlyStoppingCallback(
        early_stopping_patience=10,  # Number of epochs with no improvement after which to stop
        early_stopping_threshold=1e-5,  # Minimum improvement required to consider as improvement
    )
    tracking_callback = TrackingCallback()

    # Optimizer and scheduler
    optimizer = AdamW(finetune_forecast_model.parameters(), lr=learning_rate)
    scheduler = OneCycleLR(
        optimizer,
        learning_rate,
        epochs=num_epochs,
        steps_per_epoch=math.ceil(len(dset_train) / (batch_size)),
    )

    finetune_forecast_trainer = Trainer(
        model=finetune_forecast_model,
        args=finetune_forecast_args,
        train_dataset=dset_train,
        eval_dataset=dset_val,
        callbacks=[early_stopping_callback, tracking_callback],
        optimizers=(optimizer, scheduler),
    )
    finetune_forecast_trainer.remove_callback(INTEGRATION_TO_CALLBACK["codecarbon"])

    # Fine tune
    finetune_forecast_trainer.train()

    # Evaluation
    print("+" * 20, f"Test MSE after few-shot {fewshot_percent}% fine-tuning", "+" * 20)
    finetune_forecast_trainer.model.loss = "mse"  # fixing metric to mse for evaluation
    fewshot_output = finetune_forecast_trainer.evaluate(dset_test)
    print(fewshot_output)
    print("+" * 60)

    # get predictions
    predictions_dict = finetune_forecast_trainer.predict(dset_test)
    predictions_np = predictions_dict.predictions[0]
    print(predictions_np.shape)

    # get backbone embeddings (if needed for further analysis)
    backbone_embedding = predictions_dict.predictions[1]
    print(backbone_embedding.shape)

    # plot
    plot_predictions(
        model=finetune_forecast_trainer.model,
        dset=dset_test,
        plot_dir=os.path.join(OUT_DIR, dataset_name),
        plot_prefix="test_fewshot",
        indices=[685, 118, 902, 1984, 894, 967, 304, 57, 265, 1015],
        channel=0,
    )


fewshot_finetune_eval(
    dataset_name=TARGET_DATASET,
    context_length=CONTEXT_LENGTH,
    forecast_length=PREDICTION_LENGTH,
    batch_size=64,
    fewshot_percent=5,
    learning_rate=0.001,
)
```
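The fine-tuning run uses an early stopping callback with `early_stopping_patience=10` and `early_stopping_threshold=1e-5`. As a rough illustration of what those two numbers mean, here is a simplified plain-Python sketch of a patience/threshold rule. It is not the Transformers implementation, and `epoch_of_early_stop` and the loss values are hypothetical.

```python
def epoch_of_early_stop(eval_losses, patience=10, threshold=1e-5):
    """Return the 1-based epoch at which training would stop, or None if it never stops.

    An epoch counts as an improvement only if the loss drops below the best
    loss seen so far by more than `threshold`.
    """
    best = None
    epochs_without_improvement = 0
    for epoch, loss in enumerate(eval_losses, start=1):
        if best is None or best - loss > threshold:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return None


# Toy validation losses: improvement stalls after the second epoch
losses = [1.0, 0.9, 0.9, 0.9, 0.9]
print(epoch_of_early_stop(losses, patience=3))

# Steadily improving losses never trigger the rule
print(epoch_of_early_stop([1.0, 0.8, 0.6, 0.4], patience=3))
```

With a patience of 10 and at most 50 epochs, a run whose validation loss stops improving early can finish well before the epoch limit.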

The training data sources are listed on the model's Hugging Face page.

These datasets are accessible. Converting any of them, or other datasets, to CSV files is another way to test or benchmark the model.

I wrote two small Python scripts: one converts a TSF file to CSV, and the other determines a CSV file's structure (for future use, with hard-coded paths).
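The scripts themselves are not reproduced here. As a stand-in, the following is a minimal sketch of a TSF-to-CSV conversion using only the standard library. It assumes the common TSF layout: `#` comment lines, `@attribute` header lines, an `@data` marker, and then one series per line with colon-separated attributes followed by comma-separated values, where `?` marks a missing value. `parse_tsf`, `tsf_to_long_csv`, and the sample content are hypothetical; real files may need extra handling.

```python
import csv
import io


def parse_tsf(lines):
    """Parse TSF text lines into (attribute_names, [(attributes, values), ...])."""
    attribute_names = []
    rows = []
    in_data = False
    for raw in lines:
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if not in_data:
            lowered = line.lower()
            if lowered.startswith("@attribute"):
                attribute_names.append(line.split()[1])
            elif lowered.startswith("@data"):
                in_data = True
            continue  # other header tags (@frequency, ...) are ignored here
        *attributes, values = line.split(":")
        series = [None if v == "?" else float(v) for v in values.split(",")]
        rows.append((attributes, series))
    return attribute_names, rows


def tsf_to_long_csv(lines, out):
    """Write one CSV row per observation: attributes, step index, value."""
    names, rows = parse_tsf(lines)
    writer = csv.writer(out)
    writer.writerow([*names, "step", "value"])
    for attributes, series in rows:
        for step, value in enumerate(series):
            writer.writerow([*attributes, step, "" if value is None else value])


# Hypothetical sample content, kept in memory instead of a hard-coded path
sample = """# toy example
@relation demo
@attribute series_name string
@attribute start_timestamp date
@frequency hourly
@missing true
@equallength false
@data
T1:2020-01-01 00-00-00:1.0,2.5,?
T2:2020-01-01 00-00-00:4.0,5.0
""".splitlines()

buffer = io.StringIO()
tsf_to_long_csv(sample, buffer)
print(buffer.getvalue())
```

The long format (one row per observation) keeps series of different lengths in a single file. A wide format with one column per series would be closer to the ETTh1 CSV used above, but it needs equal-length, aligned series.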
