Modeling Earth using GeoData in a few lines of code

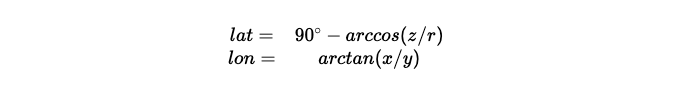

Have you ever played Outer Wilds? The planets there are incredibly beautiful. This actually became the main motivation to create my own simple model of a planet, using real geographical elevation data and a bit of Wolfram Language magic. TL;DR; Here is an interactive animation Notes on IDE In this tutorial, I (@jerryi) am going to use an open-source notebook interface, WLJS Notebook, based on the freeware Wolfram Engine. Spreading points on a sphere Obviously, even if we grab Earth's elevation map, there’s the problem of projecting it onto a sphere. This is a complex topic involving triangulation (since we need to create polygons from it afterward) and more, with plenty written on the subject. For example: Delaunay and Voronoi on a Sphere Four Ways to Create a Mesh for a Sphere As one approach, we’ll go the other way around—first generating sample points on the sphere, then querying the elevation data for those points. So, we need to evenly distribute N points across a sphere. No need to reinvent the wheel when a rich standard library is available. Graphics3D[{ Sphere[], Red, PointSize[0.01], SpherePoints[1000]//Point }] Get Elevation Data Fortunately (or unfortunately), the standard WL library already includes a rough map of the entire Earth (just in case). If more precision is needed, it can go online and fetch higher-resolution data. So, let’s get down to business GeoElevationData[GeoPosition[Here]] output Quantity[490, "Meters"] Here, Here returns the current latitude and longitude in degrees. The function can take not just a single value but an entire list. Naturally, we’ll use this list from the points on the sphere. points = SpherePoints[5000]; latLon = (180/Pi) {90Degree - ArcCos[#[[3]]], ArcTan[#[[1]], #[[2]]]} &/@ points; elevation = GeoElevationData[GeoPosition[latLon]]; elevation = (elevation + Min[elevation])/Max[elevation]; Here we convert from Cartesian coordinates to spherical (more precisely, geodetic) coordinates we retrieve the elevations and normalize them. The final step is to link them back to the original points on the sphere, using the normalized elevation as the distance along the normal. surface = MapThread[(#1 (0.8 + 0.1 #2))&, {points, elevation}]; Here, we scale them by eye so that the height above sea level only slightly "modulates" the sphere’s surface. This way, we get a visible relief of the Earth rainbow = ColorData["DarkRainbow"]; ListSurfacePlot3D[surface, Mesh->None, MaxPlotPoints->100, ColorFunction -> Function[{x,y,z}, rainbow[1.5(2 Norm[{x,y,z}]-1)] ], ColorFunctionScaling -> False ] That looks like Earth! Generating clouds What’s Earth without clouds? And what are clouds without Perlin noise? The next piece I honestly stole from one of the forums (no GPT for you!) n = 128; k2 = Outer[Plus, #, #] &[RotateRight[N@Range[-n, n - 1, 2]/n, n/2]^2]; spectrum = With[{d := RandomReal[NormalDistribution[], {n, n}]}, (1/n) (d + I d)/(0.002 + k2)]; spectrum[[1, 1]] *= 0; im[p_] := Clip[Re[InverseFourier[spectrum Exp[I p]]], {0, ∞}]^0.5 p0 = p = Sqrt[k2]; Image[im[p0 += p], Magnification->2] Here animated version Module[{buffer = im[p0 += p], frame = CreateUUID[]}, EventHandler[frame, (buffer = im[p0 += p])&]; Image[buffer // Offload, Magnification->2, Epilog->AnimationFrameListener[buffer // Offload, "Event"->frame] ] ] How do we make them three-dimensional? I couldn’t think of anything better than using the Marching Cubes technique to generate low-poly clouds. However, to start, we’ll "stretch" the 2D image into 3D with fading at the edges. With[{plain = im[p0+=p]}, Table[plain Exp[-( i)^2/200.], {i, -20,20}]][[;;;;8, ;;;;8, ;;;;8]] // Image3D To turn this scalar field into polygons, we can use the ImageMesh function. However, it's quite challenging to work with when nonlinear transformations need to be applied to the mesh. And we will need to do these transformations, otherwise, how will we map this square onto the sphere? Let’s use an external library, wl-marchingcubes. PacletRepositories[{ Github -> "https://github.com/JerryI/wl-marching-cubes" -> "master" }] pnormals] } // Graphics3D If VertexNormals is explicitly set in GraphicsComplex, then when shading, the surface will be interpolated across all three normals of the triangle, instead of just one (which is automatically calculated by the graphics library if no other data is provided, in which case the polygon looks like a flat plane — a.k.a. the effect seen in 80s demos). Putting it all together We can extend the original SurfacePlot with options and add additional graphic primitives through them. Oh, and don’t forget to turn off the default lighting. After all, we’re in space! rainbow = ColorData["DarkRainbow"]; ListSurfacePlot3D[ MapThread[(#1 (0.8 + 0.1 #2))&, {points, elevation}],

Have you ever played Outer Wilds? The planets there are incredibly beautiful. This actually became the main motivation to create my own simple model of a planet, using real geographical elevation data and a bit of Wolfram Language magic.

TL;DR; Here is an interactive animation

Notes on IDE

In this tutorial, I (@jerryi) am going to use an open-source notebook interface, WLJS Notebook, based on the freeware Wolfram Engine.

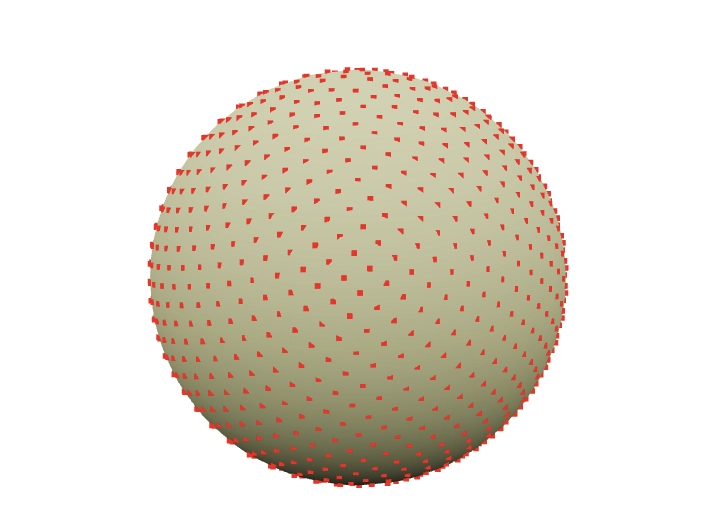

Spreading points on a sphere

Obviously, even if we grab Earth's elevation map, there’s the problem of projecting it onto a sphere. This is a complex topic involving triangulation (since we need to create polygons from it afterward) and more, with plenty written on the subject. For example:

As one approach, we’ll go the other way around—first generating sample points on the sphere, then querying the elevation data for those points.

So, we need to evenly distribute N points across a sphere. No need to reinvent the wheel when a rich standard library is available.

Graphics3D[{

Sphere[],

Red, PointSize[0.01], SpherePoints[1000]//Point

}]

Get Elevation Data

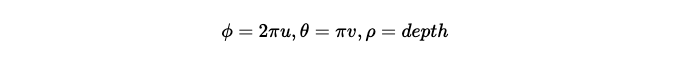

Fortunately (or unfortunately), the standard WL library already includes a rough map of the entire Earth (just in case). If more precision is needed, it can go online and fetch higher-resolution data.

So, let’s get down to business

GeoElevationData[GeoPosition[Here]]

output

Quantity[490, "Meters"]

Here, Here returns the current latitude and longitude in degrees. The function can take not just a single value but an entire list. Naturally, we’ll use this list from the points on the sphere.

points = SpherePoints[5000];

latLon = (180/Pi) {90Degree - ArcCos[#[[3]]], ArcTan[#[[1]], #[[2]]]} &/@ points;

elevation = GeoElevationData[GeoPosition[latLon]];

elevation = (elevation + Min[elevation])/Max[elevation];

Here we convert from Cartesian coordinates to spherical (more precisely, geodetic) coordinates

we retrieve the elevations and normalize them.

The final step is to link them back to the original points on the sphere, using the normalized elevation as the distance along the normal.

surface = MapThread[(#1 (0.8 + 0.1 #2))&, {points, elevation}];

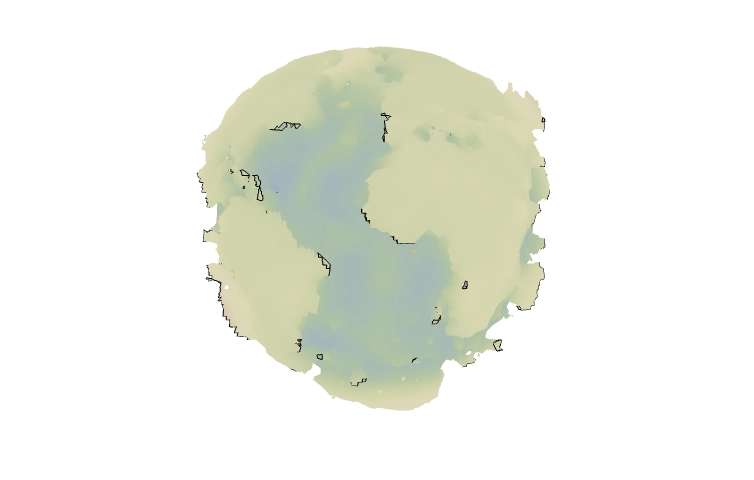

Here, we scale them by eye so that the height above sea level only slightly "modulates" the sphere’s surface. This way, we get a visible relief of the Earth

rainbow = ColorData["DarkRainbow"];

ListSurfacePlot3D[surface,

Mesh->None, MaxPlotPoints->100,

ColorFunction -> Function[{x,y,z},

rainbow[1.5(2 Norm[{x,y,z}]-1)]

], ColorFunctionScaling -> False

]

That looks like Earth!

Generating clouds

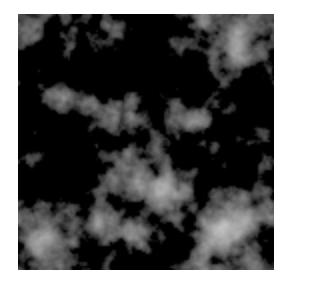

What’s Earth without clouds? And what are clouds without Perlin noise?

The next piece I honestly stole from one of the forums (no GPT for you!)

n = 128;

k2 = Outer[Plus, #, #] &[RotateRight[N@Range[-n, n - 1, 2]/n, n/2]^2];

spectrum = With[{d := RandomReal[NormalDistribution[], {n, n}]},

(1/n) (d + I d)/(0.002 + k2)];

spectrum[[1, 1]] *= 0;

im[p_] := Clip[Re[InverseFourier[spectrum Exp[I p]]], {0, ∞}]^0.5

p0 = p = Sqrt[k2];

Image[im[p0 += p], Magnification->2]

Here animated version

Module[{buffer = im[p0 += p], frame = CreateUUID[]},

EventHandler[frame, (buffer = im[p0 += p])&];

Image[buffer // Offload,

Magnification->2,

Epilog->AnimationFrameListener[buffer // Offload, "Event"->frame]

]

]

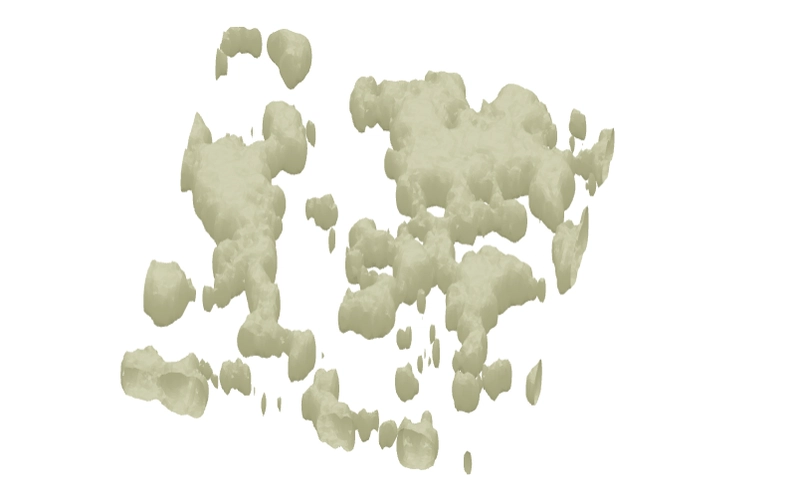

How do we make them three-dimensional? I couldn’t think of anything better than using the Marching Cubes technique to generate low-poly clouds.

However, to start, we’ll "stretch" the 2D image into 3D with fading at the edges.

With[{plain = im[p0+=p]}, Table[plain Exp[-( i)^2/200.], {i, -20,20}]][[;;;;8, ;;;;8, ;;;;8]] // Image3D

To turn this scalar field into polygons, we can use the ImageMesh function. However, it's quite challenging to work with when nonlinear transformations need to be applied to the mesh. And we will need to do these transformations, otherwise, how will we map this square onto the sphere?

Let’s use an external library, wl-marchingcubes.

PacletRepositories[{

Github -> "https://github.com/JerryI/wl-marching-cubes" -> "master"

}]

<<JerryI`MarchingCubes`

With[{plain = im[p0+=p]}, Table[plain Exp[-( i)^2/200.], {i, -20,20}]];

{vertices, normals} = CMarchingCubes[%, 0.2];

The result is stored in vertices. By default, the library generates an unindexed set of triangle vertices. This means we can directly interpret them as

Polygon[{

{1, 2, 3}, Triangle 1

{4, 5, 6}, Triangle 2

...

}]

Where none of the vertices are reused by another triangle. This format is especially simple for the GPU, as only one such list needs to be sent to one of the WebGL buffers. Fortunately, our (WLJS Notebook) implementation of Polygon primitive supports this format unlike Wolfram Mathematica

GraphicsComplex[vertices, Polygon[1, Length[vertices]]] // Graphics3D

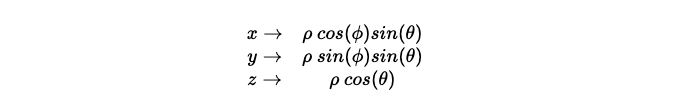

Inverse UV-mapping

It is important to note, that on the next few steps we will map those clouds on the sphere (our Earth surface model). The easiest way is to perform so-called UV-mapping, which will naturally distort our original texture. Especially on poles.

For the educational purpose let's perform a naive inverse UV-mapping (from the the "texture" to a sphere)

where

Those 3 coordinates are vertices of generated clouds normalized in the range from 0 to 1 (except 3rd one)

With[{plain = im[p0 += p]}, Table[plain Exp[-( i)^2/200.], {i, -20,20}]];

{vertices, normals} = CMarchingCubes[%, 0.2, "CalculateNormals" -> False];

vertices = With[{

offset = {Min[vertices[[All,1]]], Min[vertices[[All,2]]], 0},

maxX = Max[vertices[[All,1]]] - Min[vertices[[All,1]]],

maxY = Max[vertices[[All,2]]] - Min[vertices[[All,2]]]

}, Map[Function[v, With[{p = v - offset}, {p[[1]]/maxX, p[[2]]/maxY, p[[3]]}]], vertices]];

pvertices = Map[Function[v,

With[{\[Rho] = 50.0 + 0.25 (v[[3]] - 10), \[Phi] = 2 Pi Clip[v[[1]], {0,1}], \[Theta] = Pi Clip[v[[2]], {0,1}]},

{\[Rho] Cos[\[Phi]] Sin[\[Theta]], \[Rho] Sin[\[Phi]] Sin[\[Theta]], \[Rho] Cos[\[Theta]]}

]

]

, vertices];

{

GraphicsComplex[0.017 pvertices, Polygon[1, Length[vertices]]]

} // Graphics3D

This looks horrible on the poles

![[The AI Show Episode 142]: ChatGPT’s New Image Generator, Studio Ghibli Craze and Backlash, Gemini 2.5, OpenAI Academy, 4o Updates, Vibe Marketing & xAI Acquires X](https://www.marketingaiinstitute.com/hubfs/ep%20142%20cover.png)

![[FREE EBOOKS] The Kubernetes Bible, The Ultimate Linux Shell Scripting Guide & Four More Best Selling Titles](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

![From drop-out to software architect with Jason Lengstorf [Podcast #167]](https://cdn.hashnode.com/res/hashnode/image/upload/v1743796461357/f3d19cd7-e6f5-4d7c-8bfc-eb974bc8da68.png?#)

.png?#)

.jpg?#)

_Christophe_Coat_Alamy.jpg?#)

![Rapidus in Talks With Apple as It Accelerates Toward 2nm Chip Production [Report]](https://www.iclarified.com/images/news/96937/96937/96937-640.jpg)