[Mini Project] Serverless Blog Generator Using AWS Lambda, API Gateway, S3 & Amazon Bedrock


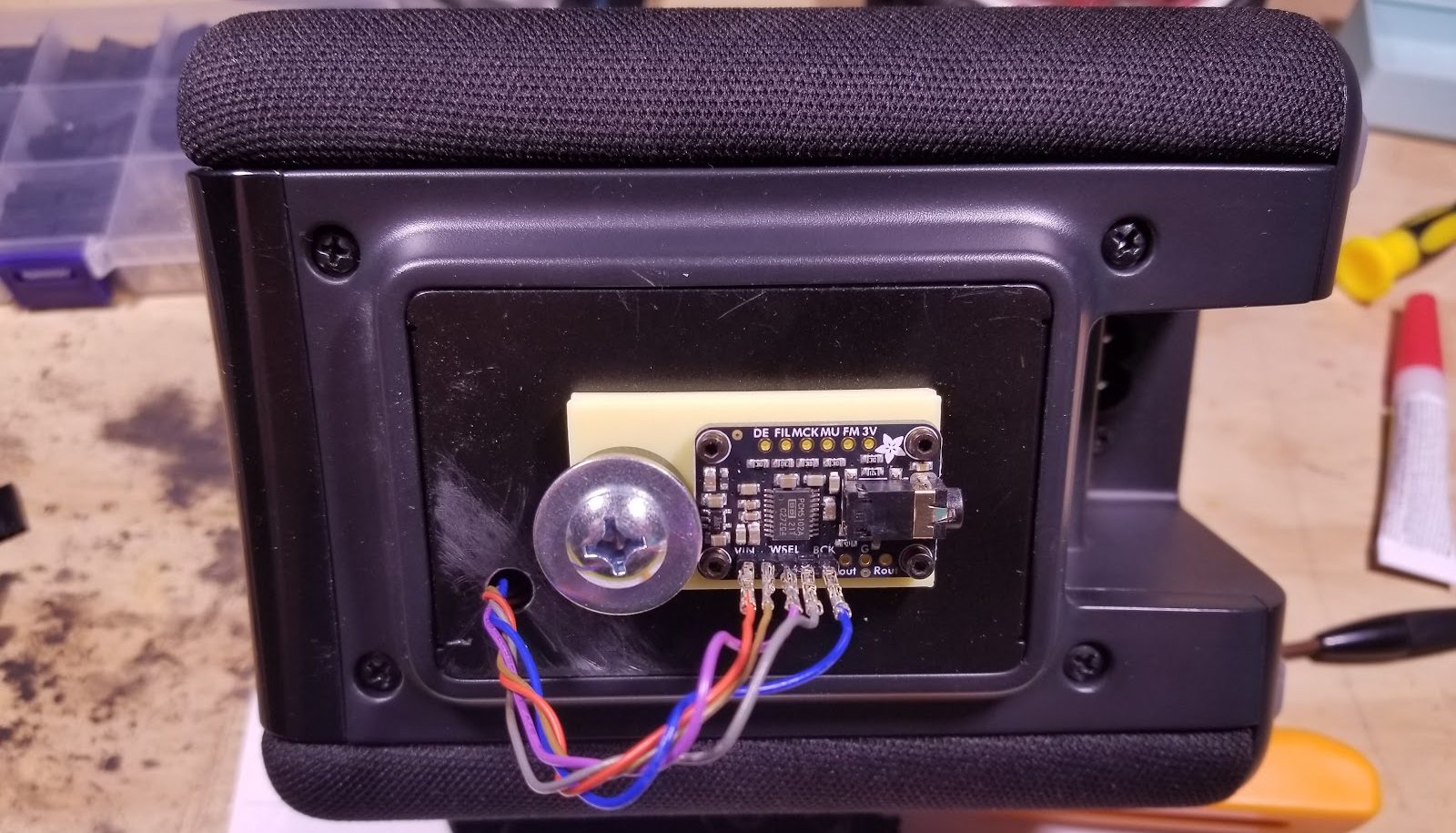

![[Mini Project] Serverless Blog Generator Using AWS Lambda, API Gateway, S3 & Amazon Bedrock](https://media2.dev.to/dynamic/image/width=800%2Cheight=%2Cfit=scale-down%2Cgravity=auto%2Cformat=auto/https%3A%2F%2Fdev-to-uploads.s3.amazonaws.com%2Fuploads%2Farticles%2F65loqvfxh9uwko30pzzs.png)

Before Starting, Consider These Options Too:

- For direct implementation steps, visit the GitHub repo:

Serverless Blog Generator

In this tutorial, we will walk through building a Serverless Blog Generator using AWS services like Lambda, API Gateway, Amazon S3, and Amazon Bedrock (or OpenAI). The goal of this project is to create a scalable and cost-effective system that generates blog content from a given topic using Generative AI and stores the result in an Amazon S3 bucket.

This project is designed to be beginner-friendly and will guide you step by step. You’ll also get hands-on experience with Amazon Bedrock, Lambda, API Gateway, and S3, which are essential components in modern serverless and AI-powered architectures.

Prerequisites

Before we start, ensure that you have the following prerequisites:

AWS Account: If you don’t already have an AWS account, sign up.

AWS CLI (optional, if you want to work from the command line): The AWS Command Line Interface (CLI) allows you to interact with AWS services from a terminal. Install AWS CLI for Windows

Python 3.x: Our Lambda function will be written in Python. Make sure Python 3.x is installed on your system from python.org.

Postman: For testing APIs, download and install Postman here. (Step 5 uses the browser-based ReqBin instead, so Postman is optional.)

Step-by-Step Implementation

Step 1: Setting Up AWS Bedrock

To get started with generating blogs, the first step is to request access to a model in Amazon Bedrock. Bedrock provides various pre-trained foundation models, and availability varies by region, so choose one offered in yours. In this case, although I initially considered a chat-based model like Llama, I ended up using a model available in my region for practice.

- Go to the AWS Bedrock Console.

- In the Bedrock console's left navigation pane, open Model access and request access to the model you want to use.

- Choose the model that fits your use case, keeping in mind your region's availability. (You can use any model that provides text generation capabilities).

- Once access is granted, note down the model ID (for example, meta.llama3-8b-instruct-v1:0, which the Lambda code below uses). The Lambda function passes it to invoke_model, which also accepts the model's ARN (Amazon Resource Name).
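The Lambda code in Step 2 sends Llama 3 8B Instruct a prompt wrapped in [INST] tags, which is the Llama 2 convention. If you pick a Llama 3 instruct model here, a minimal sketch of a native request body using Meta's Llama 3 chat template might look like the following. It assumes the same prompt, max_gen_len, temperature and top_p fields as Step 2; build_llama3_request is a hypothetical helper name, not part of any SDK.

```python
import json


def build_llama3_request(user_message: str,
                         max_gen_len: int = 512,
                         temperature: float = 0.5,
                         top_p: float = 0.9) -> str:
    """Return a JSON request body for a Meta Llama 3 instruct model on Bedrock (sketch)."""
    # Llama 3 chat template: one user turn, then an open assistant header
    # so the model continues with its answer.
    prompt = (
        "<|begin_of_text|><|start_header_id|>user<|end_header_id|>\n\n"
        f"{user_message}<|eot_id|>"
        "<|start_header_id|>assistant<|end_header_id|>\n\n"
    )
    # Same inference parameters as the Lambda function in Step 2
    body = {
        "prompt": prompt,
        "max_gen_len": max_gen_len,
        "temperature": temperature,
        "top_p": top_p,
    }
    return json.dumps(body)
```

The returned string could be passed as body= to bedrock.invoke_model; the generated text is still read from the response's generation field.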

Step 2: Prepare Lambda for Model Integration

2.1 Create a Lambda Function

Now, we need to set up an AWS Lambda function that will interact with the Bedrock model. Here are the steps:

- Go to the AWS Lambda Console.

- Click on Create function.

- Choose Author from scratch.

- For the runtime, select the latest Python version (Python 3.12 is a good choice).

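- Choose an execution role. You can create a new role or use an existing one, but it needs permission to call Amazon Bedrock (bedrock:InvokeModel) and to write to your S3 bucket (s3:PutObject).

- After the function is created, raise its timeout under Configuration > General configuration. The default of 3 seconds is likely too short for a Bedrock call that generates a few hundred words.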
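For the execution role, a least-privilege inline policy could look like this sketch. It assumes the region, model ID and bucket used later in this tutorial (ap-south-1, meta.llama3-8b-instruct-v1:0, awsbedrockproject01) and the blog-output/ key prefix; build_execution_policy is a hypothetical helper. CloudWatch Logs permissions are not included here; the basic Lambda permissions the console offers when creating a new role cover those.

```python
def build_execution_policy(region: str, model_id: str, bucket: str,
                           prefix: str = "blog-output/") -> dict:
    """Least-privilege IAM policy document for the blog generator role (sketch)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Allow invoking only the chosen foundation model
                "Effect": "Allow",
                "Action": "bedrock:InvokeModel",
                # Foundation-model ARNs leave the account ID field empty
                "Resource": f"arn:aws:bedrock:{region}::foundation-model/{model_id}",
            },
            {
                # Allow writing generated blogs under one key prefix only
                "Effect": "Allow",
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            },
        ],
    }
```

You could paste json.dumps(build_execution_policy(...), indent=2) into the role's inline policy editor in the IAM console.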

Once the function is created, replace the default code in lambda_function.py with the following:

```python
import json
from datetime import datetime

import boto3
import botocore.config


def blog_generate_using_bedrock(blogtopic: str) -> str:
    # Note: the [INST] ... [/INST] wrapper is the Llama 2 prompt format;
    # Llama 3 models document a different chat template.
    prompt = f"""[INST]Human: Write a 200-word blog on the topic {blogtopic}
Assistant:[/INST]
"""
    # Inference parameters for Meta Llama models on Bedrock
    body = {
        "prompt": prompt,
        "max_gen_len": 512,
        "temperature": 0.5,
        "top_p": 0.9,
    }
    try:
        bedrock = boto3.client(
            "bedrock-runtime",
            region_name="ap-south-1",
            config=botocore.config.Config(read_timeout=300, retries={"max_attempts": 3}),
        )
        response = bedrock.invoke_model(
            body=json.dumps(body),
            modelId="meta.llama3-8b-instruct-v1:0",
        )
        # The response body is a stream; read it and parse the JSON payload
        response_data = json.loads(response["body"].read())
        print(response_data)
        # Llama models return the generated text under the "generation" key
        return response_data["generation"]
    except Exception as e:
        print(f"Error generating the blog: {e}")
        return ""


def save_blog_details_s3(s3_key: str, s3_bucket: str, generate_blog: str) -> None:
    s3 = boto3.client("s3")
    try:
        s3.put_object(Bucket=s3_bucket, Key=s3_key, Body=generate_blog)
        print("Blog saved to S3")
    except Exception as e:
        print(f"Error when saving the blog to S3: {e}")


def lambda_handler(event, context):
    # Assumes Lambda proxy integration: API Gateway passes the request body as a JSON string
    payload = json.loads(event["body"])
    blogtopic = payload["blog_topic"]

    generate_blog = blog_generate_using_bedrock(blogtopic=blogtopic)

    if generate_blog:
        # Include the date so blogs generated at the same time on different days don't collide
        curr_time = datetime.now().strftime("%Y%m%d-%H%M%S")
        s3_key = f"blog-output/{curr_time}.txt"
        s3_bucket = "awsbedrockproject01"  # replace with your bucket name
        save_blog_details_s3(s3_key, s3_bucket, generate_blog)
    else:
        print("No blog was generated")

    return {
        "statusCode": 200,
        "body": json.dumps("Blog Generation is Completed"),
    }
```
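The handler above assumes event['body'] is a JSON string, which holds for API Gateway's Lambda proxy integration but not for a test event typed into the Lambda console, where the payload is usually the object itself. A small, hypothetical helper such as parse_blog_topic could accept both shapes and reject missing topics, so the handler can answer with a 400 instead of crashing:

```python
import json


def parse_blog_topic(event: dict) -> str:
    """Extract blog_topic from a proxy-integration event or a plain test event (sketch)."""
    # Proxy events carry the payload in "body"; console test events usually don't
    body = event.get("body", event)
    # Proxy integration delivers the body as a JSON string
    if isinstance(body, str):
        body = json.loads(body)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    topic = body.get("blog_topic") if isinstance(body, dict) else None
    if not topic:
        raise ValueError("Request must include a non-empty 'blog_topic'")
    return topic
```

In lambda_handler, you would call parse_blog_topic(event) inside a try block and return a response with statusCode 400 on ValueError; since json.JSONDecodeError subclasses ValueError, malformed JSON is caught too.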

2.2 Import the Latest boto3 Package

The Python runtime in Lambda does include boto3, but the bundled version can lag behind the latest release and may not support newer Bedrock features. To use the latest version, package it yourself. You can either:

- Option 1: Upload a ZIP file containing the latest boto3 package directly to Lambda.

- Option 2: Create a Lambda Layer for the boto3 library and attach it to your function. This keeps the function's own deployment package smaller.
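To confirm which boto3 your function actually imports (the runtime's bundled copy or the one from your ZIP or layer), you could log a short report at cold start. This sketch takes the boto3 module as a parameter so it can be exercised without AWS; sdk_report is a hypothetical name, while boto3.__version__ and Session.get_available_services() are part of boto3.

```python
def sdk_report(boto3_module) -> dict:
    """Summarize the boto3 build that the Lambda runtime actually imported (sketch)."""
    session = boto3_module.session.Session()
    return {
        "boto3_version": boto3_module.__version__,
        # get_available_services() lists the service names this SDK build knows about
        "has_bedrock_runtime": "bedrock-runtime" in session.get_available_services(),
    }
```

For example, print(sdk_report(boto3)) at module level in lambda_function.py writes the result to CloudWatch Logs on each cold start.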

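How to Create and Upload the boto3 ZIP File

- On your local machine, create a new directory and install boto3 into a python subfolder (Lambda layers for Python expect packages under python/ at the root of the ZIP):

```bash
mkdir my_lambda_package
cd my_lambda_package
pip install boto3 -t python/
```

- Compress the python folder into a ZIP file:

```bash
zip -r9 boto3-layer.zip python
```

- In the Lambda console, open Layers, click Create layer, upload boto3-layer.zip, and select your Python runtime under Compatible runtimes.

- Go to your function, and under Layers, click Add a layer, choose Custom layers, and select the layer you just created.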
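The zip command is not available on Windows by default, which the prerequisites assume. As an alternative, the standard library's zipfile module can produce the same layout. This sketch assumes you ran pip install boto3 -t <folder> first and zips that folder's contents under a top-level python/ directory; build_layer_zip is a hypothetical helper, and the output ZIP should be written outside the source folder.

```python
import zipfile
from pathlib import Path


def build_layer_zip(site_packages: Path, zip_path: Path) -> list[str]:
    """Zip installed packages under a top-level python/ folder, as Python layers expect (sketch)."""
    names = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for file in sorted(site_packages.rglob("*")):
            if file.is_file():
                # e.g. pkgs/boto3/__init__.py -> python/boto3/__init__.py
                arcname = (Path("python") / file.relative_to(site_packages)).as_posix()
                zf.write(file, arcname)
                names.append(arcname)
    return names
```

For example, after pip install boto3 -t pkgs, calling build_layer_zip(Path("pkgs"), Path("boto3-layer.zip")) produces a ZIP you can upload in the Create layer step.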

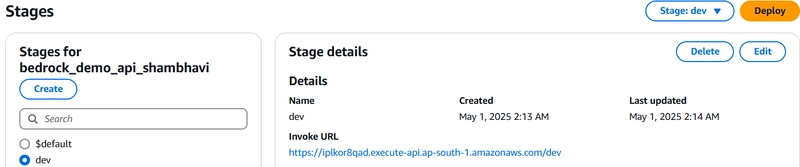

Step 3: Set Up API Gateway

Next, we will use API Gateway to expose a REST API for our Lambda function. API Gateway will handle incoming requests and route them to Lambda.

3.1 Create a New API

- Go to the API Gateway Console and click on Create API.

- Choose REST API and click Build.

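- Select New API, name it (e.g., BlogGenAPI), and click Create API.

- Create a resource named blog_generation, then add a POST method to it with the Lambda function integration type, Lambda proxy integration turned on, and your function selected. The handler reads event['body'], which assumes proxy integration.

- Click Deploy API and deploy to a new stage named dev. The invoke URL then has the form https://<api-id>.execute-api.<region>.amazonaws.com/dev/blog_generation.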
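The console steps for the API can also be scripted. This is a sketch, not the author's setup: it assumes an existing REST API ID, your function's ARN, region and account ID, and takes boto3 clients as parameters (boto3.client("apigateway") and boto3.client("lambda")). The method names get_resources, create_resource, put_method, put_integration, create_deployment and add_permission are boto3 APIs; wire_post_endpoint and the statement ID are hypothetical.

```python
def wire_post_endpoint(apigw, lam, rest_api_id: str, function_arn: str,
                       region: str, account_id: str,
                       path_part: str = "blog_generation",
                       stage: str = "dev") -> str:
    """Add POST /<path_part> with a Lambda proxy integration, deploy it, return the invoke URL."""
    # Look up the ID of the API's root resource "/"
    items = apigw.get_resources(restApiId=rest_api_id)["items"]
    root_id = next(item["id"] for item in items if item["path"] == "/")

    # Create the resource and a POST method without authorization
    resource_id = apigw.create_resource(
        restApiId=rest_api_id, parentId=root_id, pathPart=path_part)["id"]
    apigw.put_method(restApiId=rest_api_id, resourceId=resource_id,
                     httpMethod="POST", authorizationType="NONE")

    # AWS_PROXY forwards the whole request; the JSON body arrives as event["body"].
    # Lambda integrations are always invoked with POST, whatever the client method.
    apigw.put_integration(
        restApiId=rest_api_id, resourceId=resource_id, httpMethod="POST",
        type="AWS_PROXY", integrationHttpMethod="POST",
        uri=(f"arn:aws:apigateway:{region}:lambda:path/2015-03-31/"
             f"functions/{function_arn}/invocations"))

    # Let API Gateway invoke the function (the console adds this permission for you)
    lam.add_permission(
        FunctionName=function_arn,
        StatementId="apigateway-blog-generation-post",
        Action="lambda:InvokeFunction",
        Principal="apigateway.amazonaws.com",
        SourceArn=(f"arn:aws:execute-api:{region}:{account_id}:"
                   f"{rest_api_id}/*/POST/{path_part}"))

    # Deploy to the stage (it is created if it does not exist yet)
    apigw.create_deployment(restApiId=rest_api_id, stageName=stage)
    return f"https://{rest_api_id}.execute-api.{region}.amazonaws.com/{stage}/{path_part}"
```

Called with clients created for ap-south-1, it returns the invoke URL to use in Step 5. Running it a second time fails, because the resource and the permission statement already exist.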

Step 4: Configure S3 Bucket for Blog Storage

In this step, we'll set up an S3 bucket to store the generated blogs.

4.1 Create an S3 Bucket

- Go to the S3 Console.

- Click Create bucket.

- Choose a globally unique name for your bucket (e.g., my-blog-storage), and use it in place of awsbedrockproject01 in the Lambda code.

- Click Create bucket.
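If you prefer to create the bucket from code, note that S3 expects a LocationConstraint for every region except us-east-1. The hypothetical helpers below build the create_bucket arguments and check a subset of the bucket naming rules (3 to 63 characters; lowercase letters, digits, dots and hyphens; starting and ending with a letter or digit; no consecutive dots). They do not cover every rule, such as names formatted like IP addresses.

```python
import re

# Subset of the S3 bucket naming rules: length 3-63, allowed characters, alphanumeric ends
_BUCKET_RE = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")


def is_plausible_bucket_name(name: str) -> bool:
    """Check a subset of the S3 bucket naming rules (sketch)."""
    return bool(_BUCKET_RE.match(name)) and ".." not in name


def create_bucket_kwargs(bucket: str, region: str) -> dict:
    """Arguments for s3_client.create_bucket in the given region (sketch)."""
    kwargs = {"Bucket": bucket}
    # us-east-1 is the default location and must not be passed as a LocationConstraint
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs
```

For example: boto3.client("s3", region_name="ap-south-1").create_bucket(**create_bucket_kwargs("my-blog-storage", "ap-south-1")).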

4.2 Update Lambda Code for S3 Integration

Now, we need to make sure the Lambda function saves the generated blog content to the S3 bucket (the full function from Step 2 already does this in save_blog_details_s3):

- Use the boto3 library to interact with S3.

- After generating the blog content, save it as a text file in the S3 bucket.

Here is a simple code snippet for the Lambda function to save content to S3:

```python
import json
import uuid

import boto3

s3_client = boto3.client("s3")


def generate_blog_content() -> str:
    # Placeholder: in the full function from Step 2 this is
    # blog_generate_using_bedrock(), which calls the Bedrock model.
    return "Your generated blog content goes here."


def lambda_handler(event, context):
    # Generate blog content (placeholder above)
    blog_content = generate_blog_content()

    # Define the S3 bucket and a unique file name
    bucket_name = "your-bucket-name"  # replace with the bucket from step 4.1
    file_name = f"blog-{uuid.uuid4()}.txt"

    # Upload the blog content to S3 as a plain-text object
    s3_client.put_object(
        Body=blog_content,
        Bucket=bucket_name,
        Key=file_name,
        ContentType="text/plain; charset=utf-8",
    )

    return {
        "statusCode": 200,
        "body": json.dumps({"message": "Blog generated successfully!", "file": file_name}),
    }
```
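The bucket created in step 4.1 is private by default, so the saved blog is not directly readable from a browser. One option that avoids making the bucket public is to return a presigned GET URL for the saved object. This sketch assumes the Lambda role also has s3:GetObject on the key; blog_response is a hypothetical helper, while generate_presigned_url is a boto3 S3 client method. Because Lambda runs with temporary credentials, the URL may stop working before expires_in if those credentials expire first.

```python
import json


def blog_response(s3_client, bucket: str, key: str, expires_in: int = 3600) -> dict:
    """API Gateway proxy response linking to the saved blog via a presigned URL (sketch)."""
    # A presigned GET URL grants temporary read access without a public bucket
    url = s3_client.generate_presigned_url(
        "get_object", Params={"Bucket": bucket, "Key": key}, ExpiresIn=expires_in)
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"file": key, "url": url, "expires_in": expires_in}),
    }
```

In the handler from step 4.2, you could return blog_response(s3_client, bucket_name, file_name) instead of the fixed message.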

Step 5: Test the API Using ReqBin (Free Online Tool)

How to Test:

Go to ReqBin.

Select method: POST.

Enter the API endpoint:

https://iplkor8qad.execute-api.ap-south-1.amazonaws.com/dev/blog_generation

Choose Content-Type: application/json.

Add the request body:

```json
{
  "blog_topic": "Benefits of Rhododendron tea"
}
```

Click Send and verify the response.
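To run the same check from a script instead of ReqBin, a standard-library sketch could look like this. build_blog_request and send are hypothetical helpers; the endpoint is whatever invoke URL your dev stage shows.

```python
import json
import urllib.request


def build_blog_request(endpoint: str, topic: str) -> urllib.request.Request:
    """Build the same POST request that the ReqBin steps send (sketch)."""
    data = json.dumps({"blog_topic": topic}).encode("utf-8")
    return urllib.request.Request(
        endpoint, data=data, headers={"Content-Type": "application/json"}, method="POST")


def send(request: urllib.request.Request, timeout: float = 60) -> tuple[int, str]:
    """Send the request and return (status code, body); 4xx/5xx raise urllib.error.HTTPError."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.status, resp.read().decode("utf-8")
```

For example, send(build_blog_request("https://<api-id>.execute-api.<region>.amazonaws.com/dev/blog_generation", "Benefits of Rhododendron tea")) returns the status code and response body. A generous timeout helps, since the model call can take several seconds.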

You should see a 200 response with the body "Blog Generation is Completed".

Now you can check your S3 bucket for the generated blog under the blog-output/ prefix:

Output

Architecture Diagram

Conclusion

This blog walks you through the complete process of setting up an AI-driven blog generation system using AWS services like Bedrock, Lambda, API Gateway, and S3. By following these steps, you can easily generate and store blog content using AWS's powerful infrastructure. This is just one of many possibilities for integrating AWS into your applications, and I encourage you to explore more AWS services and capabilities.

Feel free to contribute to this project and help improve it! You can find the repository here.

Connect with Me:

Project Repository:

Thanks for reading - Shambhavi Chaukiyal
