MCP, the REST Revolution of AI: Why This Protocol Changes Everything


You probably found this post while trying to make sense of the latest AI jargon, and I get it: it's not easy to keep up with all the terminology and see how everything fits together.

Good news! You're in the right place!

Follow along and you'll understand what all this MCP buzz is about!

Before MCP: The Evolution of LLM Apps

To better understand how MCP came to be, let's look at how LLM apps have evolved.

Simple LLM Interaction

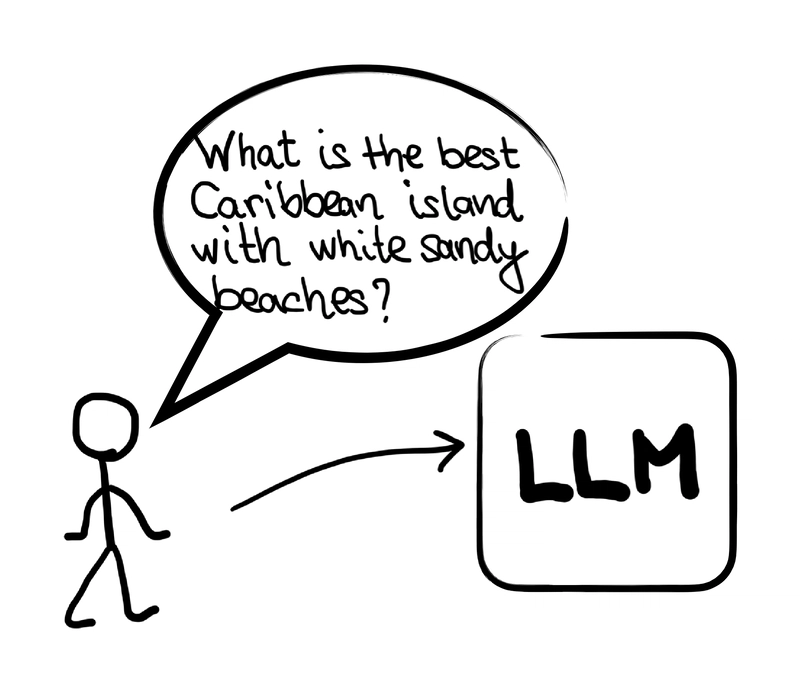

First, we built AI apps that interacted directly with an LLM. This was great for getting answers to general questions based on the knowledge the model was trained on.

The downside? The LLM couldn't take any actions in the real world.

For example, you could ask the LLM, "What are the best Caribbean islands with white sandy beaches?" and it might tell you about Turks and Caicos or Aruba. But if you then ask, "Can you book me a flight to Aruba for next weekend?" it would have to respond with something like "I don't have the ability to search or book flights" because it can't access real-time flight data or booking systems.

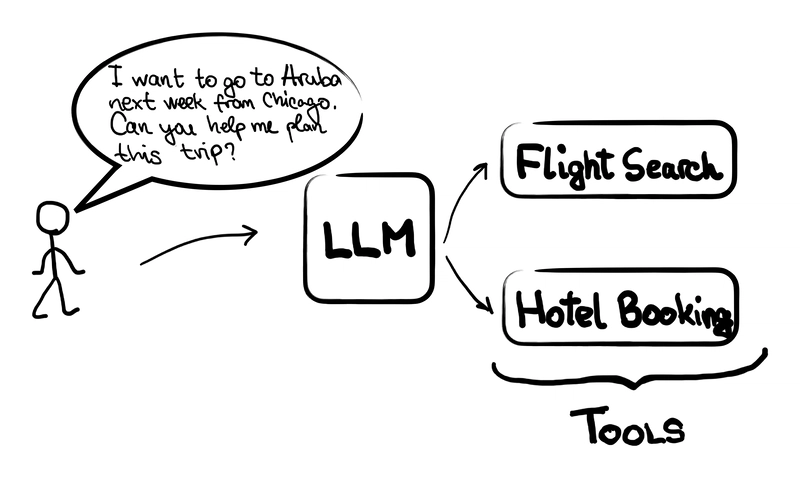

LLM with Tools

To solve this limitation, we equipped LLMs with tools.

Think of tools as separate services that execute specific actions (search flights, book hotels, etc.).

With tools ready, you could build an AI travel assistant where you first tell the LLM, "You have access to a flight_search tool that takes departure_city, destination_city, and dates as parameters, and a hotel_booking tool that takes location, check_in_date, check_out_date, and guests as parameters."
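To make that concrete, here is a minimal sketch of how those two tool descriptions might be written down. Most LLM APIs accept tools as a name, a description, and a JSON Schema for the parameters, but the exact field names differ by provider, so the shape below is a provider-neutral assumption. `tools_prompt` is a hypothetical helper for models that only accept plain text, and treating `return_date` as optional is also an assumption.

```python
# Hypothetical, provider-neutral tool definitions: a name, a description,
# and a JSON Schema describing the parameters the model may fill in.
TOOLS = [
    {
        "name": "flight_search",
        "description": "Search flights between two cities for given dates.",
        "parameters": {
            "type": "object",
            "properties": {
                "departure_city": {"type": "string"},
                "destination_city": {"type": "string"},
                "departure_date": {"type": "string", "description": "YYYY-MM-DD"},
                "return_date": {"type": "string", "description": "YYYY-MM-DD"},
            },
            "required": ["departure_city", "destination_city", "departure_date"],
        },
    },
    {
        "name": "hotel_booking",
        "description": "Book a hotel room.",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string"},
                "check_in_date": {"type": "string"},
                "check_out_date": {"type": "string"},
                "guests": {"type": "integer", "minimum": 1},
            },
            "required": ["location", "check_in_date", "check_out_date", "guests"],
        },
    },
]


def tools_prompt(tools):
    """Render tool definitions as plain text for a system prompt."""
    lines = ["You have access to these tools:"]
    for tool in tools:
        params = ", ".join(tool["parameters"]["properties"])
        lines.append(f"- {tool['name']}({params}): {tool['description']}")
    return "\n".join(lines)


print(tools_prompt(TOOLS))
```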

Now when a user asks, "I want to go to Aruba next weekend from Chicago. Can you help me plan this trip?", the LLM might respond: "I'll help you plan your Aruba trip! Let me check flight options first."

Then it would call:

```python
flight_search(departure_city='Chicago', destination_city='Aruba', departure_date='2025-04-25', return_date='2025-04-27')
```

After receiving flight results, it might say: "I found several flights! Now let me check hotels."

Then call:

```python
hotel_booking(location='Aruba', check_in_date='2025-04-25', check_out_date='2025-04-27', guests=1)
```
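The model only asks for these calls; your app has to run them. Below is a minimal sketch of that dispatch step, assuming the model hands back a tool name plus JSON-encoded arguments (a common pattern, though the exact format varies by provider). The stub functions, their fake results, and `run_tool_call` are hypothetical stand-ins for real integrations.

```python
import json


# Stub implementations standing in for real integrations (fake data).
def flight_search(departure_city, destination_city, departure_date, return_date=None):
    return [{"flight": "XX123", "from": departure_city, "to": destination_city,
             "date": departure_date}]


def hotel_booking(location, check_in_date, check_out_date, guests):
    return {"status": "confirmed", "location": location, "guests": guests}


# The app, not the model, owns this mapping from tool name to code.
REGISTRY = {"flight_search": flight_search, "hotel_booking": hotel_booking}


def run_tool_call(name, arguments_json):
    """Execute one tool call requested by the model and return a JSON string
    that the app sends back to the model as the tool result."""
    func = REGISTRY.get(name)
    if func is None:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        result = func(**json.loads(arguments_json))
    except (TypeError, ValueError) as exc:  # wrong/missing arguments or malformed JSON
        return json.dumps({"error": str(exc)})
    return json.dumps(result)


# A tool call as a model might emit it: a name plus JSON-encoded arguments.
call = ("flight_search",
        '{"departure_city": "Chicago", "destination_city": "Aruba", '
        '"departure_date": "2025-04-25", "return_date": "2025-04-27"}')
print(run_tool_call(*call))
```

Errors are returned to the model as data rather than raised, so it can retry with corrected arguments. Every entry in `REGISTRY` is code you write and maintain yourself.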

This works and enables the LLM to take actions, but there's a major downside: complexity!

For every tool you want to use, you need to:

- Write custom code and maintain it

- Handle API authentication and error cases

- Process response formats

- Update your code when APIs change

Behind the scenes, your AI app needs code that looks like this:

```python
import os

import requests


def flight_search(departure_city, destination_city, departure_date, return_date):
    # Connect to airline API with authentication
    api_key = os.environ.get("AIRLINE_API_KEY")
    headers = {"Authorization": f"Bearer {api_key}"}

    # Format request
    payload = {...}  # Format parameters correctly

    # Make API call (with a timeout so a slow API can't hang the agent)
    response = requests.post("https://airline-api.com/search", json=payload, headers=headers, timeout=10)

    # Handle errors: stop here instead of parsing an error body as flight data
    if response.status_code != 200:
        return handle_error(response)

    # Process and format response (handle_error and process_flight_data are app-specific helpers)
    flights = process_flight_data(response.json())
    return flights
```

And you need similar code for every single integration. When the airline API changes, you have to update your code. It's a maintenance nightmare!

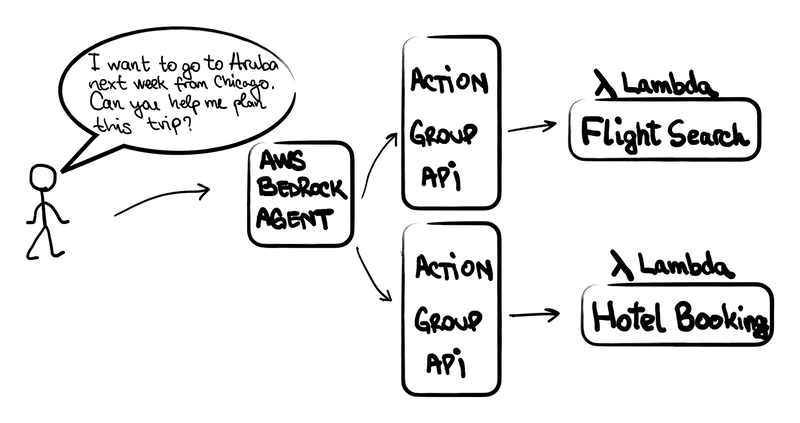

Cloud Provider Solutions (Like AWS Bedrock Agents)

Cloud providers recognized this complexity and started offering solutions like AWS Bedrock Agents to simplify the process.

With these solutions, much of the orchestration is abstracted away. But you still need to write custom code to execute the actions (with Bedrock Agents, typically an AWS Lambda function behind each action group). If the airline API changes its parameters or response format, you still need to update your code.

The Problem MCP Solves

Did you notice the pattern? There's a hard dependency between the AI app/agent and the tools it uses.

If something changes in an external API, your tool code breaks. Everything is tightly coupled.

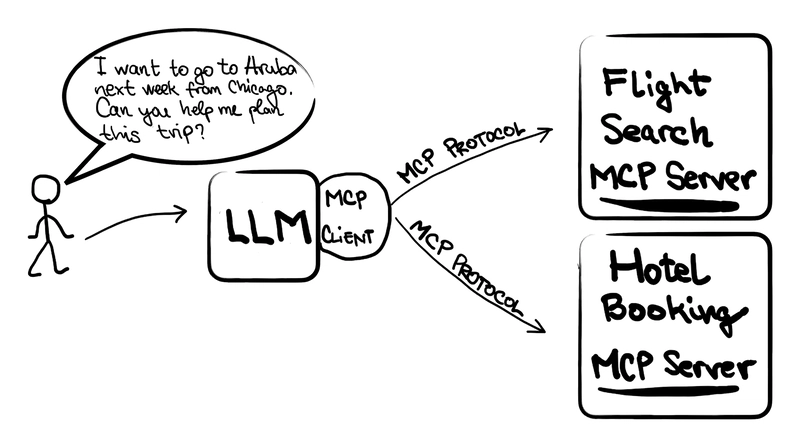

MCP - A Paradigm Shift in AI Architecture

MCP (Model Context Protocol) introduces a fundamental change in paradigm.

Instead of AI developers writing custom tool code for every integration, MCP provides a standardized protocol through which AI applications, and the LLMs inside them, communicate with external services.

With MCP, there's a clear separation:

- LLMs focus on understanding user requests and reasoning

- MCP servers handle the specific domain functionality

Let's see how our travel planning example changes with MCP:

- User asks: "I want to go to Aruba next weekend from Chicago"

- Your AI app connects to a travel MCP server and discovers which tools it offers

- The LLM decides it needs flight data, and your app passes the request on to the MCP server: "I need flight options from Chicago to Aruba for next weekend"

- The MCP server handles all the API calls, authentication, and formatting

- The MCP server returns structured results to the LLM

- The LLM presents the options to the user
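Under the hood, those steps are plain JSON-RPC 2.0 messages. The sketch below is a toy, in-process stand-in for a travel MCP server: the method names (`tools/list`, `tools/call`), the `inputSchema` field, and the result shape (`content`, `isError`) follow the MCP specification, while the `flight_search` tool, its fake handler, and the `handle` function are hypothetical. A real server would be built with an MCP SDK, run over a transport such as stdio or HTTP, and begin with an `initialize` handshake, all of which is omitted here.

```python
import json


def search_flights(args):
    # Hypothetical handler: a real server would call the airline API here,
    # with its own credentials, retries, and response formatting.
    return (f"2 flights found: {args['departure_city']} -> "
            f"{args['destination_city']} on {args['departure_date']}")


# Tool registry for this toy server (names and schema are illustrative).
TOOLS = {
    "flight_search": {
        "description": "Search flights between two cities.",
        "inputSchema": {
            "type": "object",
            "properties": {
                "departure_city": {"type": "string"},
                "destination_city": {"type": "string"},
                "departure_date": {"type": "string"},
            },
            "required": ["departure_city", "destination_city", "departure_date"],
        },
        "handler": search_flights,
    },
}


def error(req_id, code, message):
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle(request):
    """Answer one JSON-RPC request the way an MCP server would."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        # Advertise name, description, and input schema; never the handler itself.
        tools = [{"name": n, "description": t["description"], "inputSchema": t["inputSchema"]}
                 for n, t in TOOLS.items()]
        return {"jsonrpc": "2.0", "id": request["id"], "result": {"tools": tools}}
    if method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return error(request["id"], -32602, f"Unknown tool: {params.get('name')}")
        try:
            text, is_error = tool["handler"](params.get("arguments", {})), False
        except KeyError as exc:
            # Tool-level failures are reported inside the result, flagged with isError.
            text, is_error = f"Missing argument: {exc}", True
        result = {"content": [{"type": "text", "text": text}], "isError": is_error}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}
    return error(request["id"], -32601, f"Method not found: {method}")


# What the app's MCP client sends: discover the tools, then call one.
list_req = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
call_req = {"jsonrpc": "2.0", "id": 2, "method": "tools/call",
            "params": {"name": "flight_search",
                       "arguments": {"departure_city": "Chicago",
                                     "destination_city": "Aruba",
                                     "departure_date": "2025-04-25"}}}
for req in (list_req, call_req):
    print(json.dumps(handle(req), indent=2))
```

The app never sees the airline API. It only sees a tool name, a schema, and text results, which is exactly the surface the LLM needs.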

You don't need to write any custom integration code. The MCP server handles all those details for you!

It's like the difference between building your own payment processing from scratch versus integrating with Stripe. MCP servers do for AI what API providers did for web development - they provide ready-made capabilities that you can simply plug into.

Why MCP is the New REST

Just like services communicate with each other through REST APIs, LLMs now communicate with servers through MCP.

Remember when REST revolutionized web development by providing a standard way for systems to talk to each other? MCP is doing the same thing for AI systems.

It creates a clean separation of concerns:

- AI apps focus on reasoning and user interaction

- MCP servers focus on providing specific capabilities

As an AI developer, you don't need to write and maintain custom tool code. And since the MCP server handles the integration details, you're insulated from changes in the underlying systems.

The Future: MCP Alongside REST, Then Beyond

Today, most digital services expose REST APIs. My prediction is that we'll soon see services offering both REST APIs and MCP servers side by side.

Just as REST largely replaced SOAP for most web service integrations, MCP could eventually replace traditional REST APIs for many AI-driven use cases.

Why? Because MCP is designed specifically for the way modern AI needs to interact with services—with rich context and semantic understanding rather than just rigid data structures.

The Big Picture

MCP servers are like interfaces. Your LLM only needs to know what the server offers and doesn't depend on the concrete implementation.
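Here is a minimal sketch of that idea in Python, using `typing.Protocol` as the "interface". Both server classes and their payload formats are hypothetical; the point is that `plan_trip` keeps working unchanged when the backend's request format changes from v1 to v2.

```python
from typing import Protocol


class FlightTools(Protocol):
    """What the app relies on: call a tool by name, get a result back."""
    def call_tool(self, name: str, arguments: dict) -> str: ...


class AirlineApiV1Server:
    """Hypothetical server wrapping an older airline API (one tool only)."""
    def call_tool(self, name: str, arguments: dict) -> str:
        # v1 of the imaginary backend expects flat 'from'/'to' fields.
        payload = {"from": arguments["departure_city"], "to": arguments["destination_city"]}
        return f"v1 results for {payload['from']} -> {payload['to']}"


class AirlineApiV2Server:
    """Same tool contract, different backend: the payload format changed."""
    def call_tool(self, name: str, arguments: dict) -> str:
        # v2 nests the cities; only this server had to change.
        payload = {"origin": {"city": arguments["departure_city"]},
                   "destination": {"city": arguments["destination_city"]}}
        return f"v2 results for {payload['origin']['city']} -> {payload['destination']['city']}"


def plan_trip(server: FlightTools) -> str:
    # The app's code is identical no matter which implementation sits behind it.
    return server.call_tool("flight_search",
                            {"departure_city": "Chicago", "destination_city": "Aruba"})


for server in (AirlineApiV1Server(), AirlineApiV2Server()):
    print(plan_trip(server))
```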

This is huge for AI development because:

- Reduced complexity - No more custom tool code for every integration

- Better maintenance - MCP servers handle API changes

- Standardization - A common protocol that works across different AI systems

- Specialization - LLMs can focus on what they do best

The next time you hear about MCP, remember: it's not just another AI buzzword. It's a fundamental architectural pattern that's reshaping how AI systems interact with the world—just like REST did for web services years ago.

Are you building AI applications? MCP might just be the abstraction you've been waiting for.
