lecture 5 (HTMLLMS):

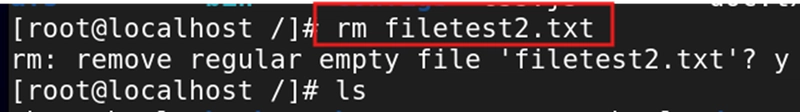


Points

- GPT models are trained on huge amounts of data. Sources include Common Crawl, Wikipedia, books, news articles, scientific journals, Reddit posts, etc.

- GPT models are essentially a scaled-up version of the classical Transformer architecture. Scaled up = far more parameters (hundreds of billions) and many more transformer layers. GPT-3 has 96 transformer layers and 175 billion parameters.

- GPT models are decoder-only: unlike the classical Transformer architecture, they do not have an encoder.

- Pre-training for these models is unsupervised (more precisely, self-supervised): there is no separately annotated output label. Put differently, the output label is already present in the input sentence, because the target at each position is simply the next token.

- Example: the input is "Big Brains".

- The input is broken down into the tokens "Big" and "Brains".

- "Big" -> input to the model -> the model tries to predict "Brains".

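- At the start of training the prediction is wrong, so we calculate the loss and use backpropagation to update the weights of the transformer architecture with a gradient-based optimizer such as SGD (in practice, usually an Adam variant).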

- The models are autoregressive: the token produced in the previous iteration is appended to the input for the next iteration.
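
The "Big Brains" example and the autoregressive loop can be sketched in a few lines of plain Python. This is only a toy under stated assumptions: `ToyBigramLM` is a hypothetical stand-in for the transformer (a table of logits indexed by the previous token, not attention layers), the optimizer is plain SGD, and tokens are whole words. The names `next_token_pairs`, `sgd_step` and `generate` are made up for illustration.

```python
import math

def next_token_pairs(tokens):
    """Turn a token sequence into (context, target) pairs: the label is the next token."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

class ToyBigramLM:
    """Hypothetical stand-in for a transformer: one row of logits per previous token."""

    def __init__(self, vocab):
        self.vocab = list(vocab)
        self.index = {tok: i for i, tok in enumerate(self.vocab)}
        n = len(self.vocab)
        self.logits = [[0.0] * n for _ in range(n)]  # uniform predictions at the start

    def predict(self, context):
        # Only the last token is used here; a real GPT attends to the whole context.
        return softmax(self.logits[self.index[context[-1]]])

    def sgd_step(self, context, target, lr=1.0):
        probs = self.predict(context)
        t = self.index[target]
        loss = -math.log(probs[t])  # cross-entropy for the true next token
        row = self.logits[self.index[context[-1]]]
        # Gradient of cross-entropy w.r.t. the logits is (probs - one_hot(target)).
        for j in range(len(row)):
            row[j] -= lr * (probs[j] - (1.0 if j == t else 0.0))
        return loss

    def generate(self, prompt, max_new_tokens):
        tokens = list(prompt)
        for _ in range(max_new_tokens):
            probs = self.predict(tokens)
            best = max(range(len(probs)), key=probs.__getitem__)  # greedy choice
            tokens.append(self.vocab[best])  # the output becomes part of the next input
        return tokens

tokens = ["Big", "Brains"]
pairs = next_token_pairs(tokens)  # [(["Big"], "Brains")]
model = ToyBigramLM(vocab=tokens)
losses = [model.sgd_step(ctx, tgt) for _ in range(20) for ctx, tgt in pairs]
generated = model.generate(["Big"], max_new_tokens=1)  # ["Big", "Brains"]
```

The first loss is ln 2 (the untrained model splits its probability evenly over the two tokens) and it shrinks with each update. A real GPT follows the same loop, but it predicts every position of a long sequence in parallel and uses subword tokens.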
