LangGraph Meets Vector Databases: A Love Story (With Code)

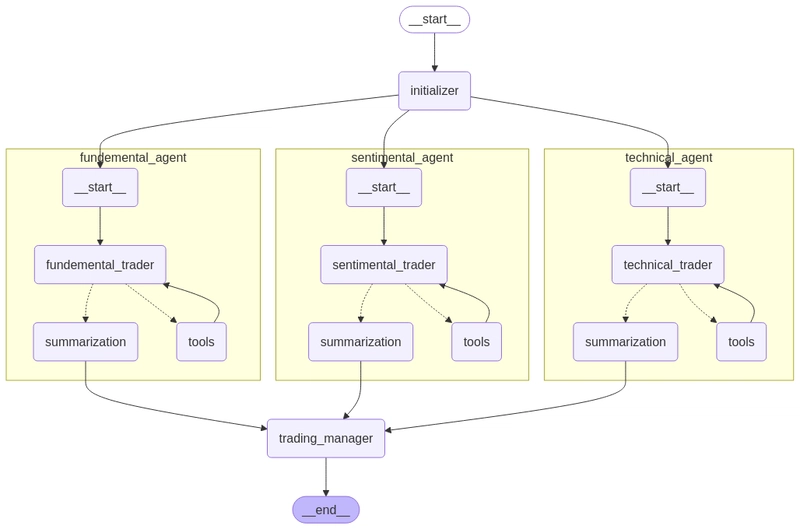

The Meet-Cute: LangGraph and Vector Databases Walk Into a Bar...

Picture this: It's a quiet night at the local dev watering hole. LangGraph, the cool new kid on the block, is sipping a carefully crafted cocktail of natural language processing. Suddenly, in walks Vector Database, turning heads with its efficiency and speed. Their eyes meet across the room, and... okay, I'll stop before this turns into a rom-com.

But seriously, folks, if you're not excited about the potential of integrating LangGraph with vector databases, you might want to check your pulse. This dynamic duo is set to revolutionize how we handle language models and structured data. So, grab your favorite caffeinated beverage, and let's dive into this practical guide that'll have you playing cupid to these two powerhouse technologies.

Why Should You Care? (Or: How I Learned to Stop Worrying and Love the Graph)

Before we get our hands dirty with code, let's talk about why this integration is more exciting than finding an unattended pizza at a hackathon.

Supercharged Language Understanding: LangGraph brings structure to the chaotic world of natural language by organizing your language model workflow as a graph of steps that share state, while vector databases offer lightning-fast similarity searches.

Scalability That Makes Your Head Spin: Vector databases can handle massive amounts of data without breaking a sweat (unlike me after climbing one flight of stairs).

Contextual Awareness on Steroids: Combine the contextual understanding of graphs with the nuanced similarities vector databases can surface, and you've got a match made in AI heaven.

Efficiency That Would Make Your Old Code Blush: Fetching only the few most relevant items, instead of scanning everything, can significantly reduce computational overhead and keep your pipeline running faster than you can say "Big O notation."
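To make "similarity search" a little less hand-wavy, here is a tiny plain-Python sketch of the idea. It is not how any particular vector database is implemented (real ones use approximate indexes to stay fast at scale), and the three-dimensional "embeddings" and the `top_k` helper are made up for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def top_k(query, items, k=2):
    """Return the ids of the k stored vectors most similar to the query."""
    scored = sorted(
        items.items(),
        key=lambda kv: cosine_similarity(query, kv[1]),
        reverse=True,
    )
    return [item_id for item_id, _ in scored[:k]]

# Toy 3-dimensional "embeddings" (invented values, purely illustrative)
docs = {
    "pizza": [0.9, 0.1, 0.0],
    "pasta": [0.8, 0.3, 0.1],
    "python": [0.0, 0.2, 0.9],
}

print(top_k([1.0, 0.2, 0.0], docs))  # → ['pizza', 'pasta']
```

The brute-force loop above compares the query against every stored vector, which is exactly the work a vector database is built to avoid.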

Now that we're all appropriately hyped, let's roll up our sleeves and get to the good stuff.

Setting the Stage: What You'll Need

Before we start our coding adventure, make sure you've got these ingredients:

- Python 3.10+ (current LangGraph and Pinecone releases have dropped 3.7)

- LangGraph library

- A vector database (we'll use Pinecone in this example, but feel free to substitute your favorite)

- A sense of humor (optional, but highly recommended)

First, let's install our dependencies:

    pip install langgraph pinecone

The Main Event: Integrating LangGraph with Pinecone

Step 1: Initialize Your Vector Database

Let's start by setting up our Pinecone index:

    from pinecone import Pinecone, ServerlessSpec

    # Initialize Pinecone (the Pinecone class replaces pinecone.init() from older client releases)
    pc = Pinecone(api_key="your_api_key")

    # Create or connect to an index
    index_name = "langgraph-demo"
    if index_name not in pc.list_indexes().names():
        pc.create_index(
            name=index_name,
            dimension=1536,  # Adjust dimension as needed
            metric="cosine",
            spec=ServerlessSpec(cloud="aws", region="us-east-1"),  # Adjust to your project
        )

    index = pc.Index(index_name)
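If you'd like to try the rest of this guide without an API key, one option is a small in-memory stand-in. The `InMemoryIndex` class below is hypothetical and not part of Pinecone; it only mimics the shape of the two calls this guide relies on (`upsert` with `(id, vector, metadata)` tuples, and `query` returning an object with `.matches`), using brute-force cosine similarity.

```python
import math
from types import SimpleNamespace

class InMemoryIndex:
    """Hypothetical stand-in that mimics the few index calls this guide uses."""

    def __init__(self):
        self._records = {}  # id -> (vector, metadata)

    def upsert(self, vectors):
        # Accepts (id, vector, metadata) tuples; an existing id is overwritten.
        for record_id, vector, metadata in vectors:
            self._records[record_id] = (list(vector), dict(metadata))

    def query(self, vector, top_k=5, include_metadata=True):
        def cosine(a, b):
            dot = sum(x * y for x, y in zip(a, b))
            norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
            return dot / norms if norms else 0.0

        # Score every record, best match first.
        scored = sorted(
            ((cosine(vector, vec), rid, meta) for rid, (vec, meta) in self._records.items()),
            key=lambda item: item[0],
            reverse=True,
        )
        matches = [
            SimpleNamespace(id=rid, score=score, metadata=meta if include_metadata else None)
            for score, rid, meta in scored[:top_k]
        ]
        return SimpleNamespace(matches=matches)

fake_index = InMemoryIndex()
fake_index.upsert([
    ("doc-1", [1.0, 0.0], {"title": "Graphs 101"}),
    ("doc-2", [0.0, 1.0], {"title": "Vectors 101"}),
])
results = fake_index.query(vector=[0.9, 0.1], top_k=1)
print([m.metadata for m in results.matches])  # → [{'title': 'Graphs 101'}]
```

Swapping `index = InMemoryIndex()` in for the real index is only meant for local experiments; it has none of the persistence or scale of the real thing.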

Step 2: Create Your LangGraph Structure

Now, let's define a simple LangGraph structure. Each node is a plain function that reads the shared state and returns only the keys it updates:

    from typing import TypedDict

    from langgraph.graph import StateGraph, START, END

    class QueryState(TypedDict, total=False):
        query: str
        processed_query: str
        search_results: list
        response: str

    def process_query(state: QueryState) -> dict:
        # Your language processing logic here
        return {"processed_query": state["query"].lower()}

    def search_vector_db(state: QueryState) -> dict:
        # Search Pinecone and return results.
        # The constant vector is a placeholder: swap in a real embedding of state["processed_query"].
        results = index.query(vector=[0.1] * 1536, top_k=5, include_metadata=True)
        return {"search_results": [match.metadata for match in results.matches]}

    def format_response(state: QueryState) -> dict:
        # Format the results into a nice response
        return {"response": f"Found {len(state['search_results'])} relevant items."}

    # Create the graph and register the nodes
    builder = StateGraph(QueryState)
    builder.add_node("process", process_query)
    builder.add_node("search", search_vector_db)
    builder.add_node("format", format_response)

    # Connect the nodes, including the entry and exit points
    builder.add_edge(START, "process")
    builder.add_edge("process", "search")
    builder.add_edge("search", "format")
    builder.add_edge("format", END)

    # Compile the builder into a runnable graph
    graph = builder.compile()

Step 3: Run Your Integrated System

Time to see our creation come to life:

    def run_query(query: str) -> str:
        result = graph.invoke({"query": query})
        return result["response"]

    # Example usage
    print(run_query("What is the meaning of life?"))
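One way to picture a run of a straight-line graph like this one: every step receives a shared state dictionary and hands back the keys it wants to update. The sketch below is plain Python rather than LangGraph itself, and it only approximates the default behavior when no custom merge rules are in play. `run_linear` and `fake_search` are made-up names for illustration, with `fake_search` standing in for the Pinecone lookup.

```python
def process_query(state):
    return {"processed_query": state["query"].lower()}

def fake_search(state):
    # Stand-in for the vector database lookup: pretend two items matched.
    return {"search_results": [{"title": "A"}, {"title": "B"}]}

def format_response(state):
    return {"response": f"Found {len(state['search_results'])} relevant items."}

def run_linear(nodes, initial_state):
    """Call each node in order and merge the keys it returns into the state."""
    state = dict(initial_state)
    for node in nodes:
        state.update(node(state))
    return state

final_state = run_linear(
    [process_query, fake_search, format_response],
    {"query": "What Is The Meaning Of Life?"},
)
print(final_state["response"])  # → Found 2 relevant items.
```

Notice that the original `query` key is still in the final state; nodes add to the state rather than replacing it wholesale.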

The Plot Thickens: Advanced Techniques

Now that we've got the basics down, let's spice things up a bit. Here are some advanced techniques to take your LangGraph and vector database integration to the next level:

1. Dynamic Graph Construction

Instead of hardcoding our graph structure, we can dynamically construct it based on the input:

    def build_dynamic_graph(query_type: str):
        builder = StateGraph(QueryState)
        builder.add_node("process", process_query)
        builder.add_node("search", search_vector_db)
        builder.add_node("format", format_response)
        builder.add_edge(START, "process")

        if query_type == "complex":
            # analyze_complex_query is another node function; you'll need to implement this
            builder.add_node("analyze", analyze_complex_query)
            builder.add_edge("process", "analyze")
            builder.add_edge("analyze", "search")
        else:
            builder.add_edge("process", "search")

        builder.add_edge("search", "format")
        builder.add_edge("format", END)
        return builder.compile()

    # Usage
    dynamic_graph = build_dynamic_graph("complex")
    result = dynamic_graph.invoke({"query": "Explain quantum computing in simple terms"})
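The snippet above leaves two things open: how to decide whether a query is "complex", and what `analyze_complex_query` actually does. Here is one possible sketch under loud assumptions. `classify_query` is a hypothetical helper, the splitting heuristic is invented for illustration, and the `sub_queries` key is not used anywhere else in this guide (if your graph uses a typed state, that key would need to be declared there too).

```python
import re

# Split on the word "and" or on question marks / semicolons (a crude heuristic).
_SPLIT_PATTERN = r"\band\b|[?;]"

def classify_query(query: str) -> str:
    """Hypothetical heuristic: multi-part or long questions count as complex."""
    parts = [p for p in re.split(_SPLIT_PATTERN, query.lower()) if p.strip()]
    return "complex" if len(parts) > 1 or len(query.split()) > 12 else "simple"

def analyze_complex_query(state: dict) -> dict:
    """Hypothetical analysis node: break a multi-part question into sub-questions."""
    parts = re.split(_SPLIT_PATTERN, state["processed_query"])
    return {"sub_queries": [p.strip() for p in parts if p.strip()]}

print(classify_query("What is LangGraph?"))  # → simple
print(classify_query("What is LangGraph and how does Pinecone store vectors?"))  # → complex
print(analyze_complex_query(
    {"processed_query": "what is langgraph and how does pinecone store vectors?"}
))
```

With something like this in place, `build_dynamic_graph(classify_query(user_query))` would pick the graph shape per request. A production system would likely want something smarter than splitting on "and".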

2. Feedback Loops for Continuous Learning

We can create a feedback loop to improve our system over time:

    def update_vector_db(query: str, response: str, feedback: int):
        # Update the vector database based on user feedback
        vector = generate_vector(query + " " + response)  # You'll need to implement this
        # The query text doubles as the record ID, so repeating a query overwrites its earlier feedback
        index.upsert(vectors=[(query, vector, {"feedback": feedback})])

    def run_query_with_feedback(query: str) -> str:
        result = graph.invoke({"query": query})
        response = result["response"]
        # Simulate user feedback (1-5 rating)
        feedback = get_user_feedback(response)  # You'll need to implement this
        update_vector_db(query, response, feedback)
        return response
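Since `generate_vector` is left as an exercise, here is a toy placeholder you could use while wiring things together. To be clear about the assumptions: this hashing trick is not a real embedding model and captures no meaning, `make_record_id` and `clamp_feedback` are hypothetical helpers that don't appear in the code above, and a real system would call an embedding model whose output size matches the index dimension.

```python
import hashlib
import math

def generate_vector(text: str, dimension: int = 1536) -> list:
    """Toy hashing 'embedding' (NOT a real model): bucket word hashes, then normalize."""
    vector = [0.0] * dimension
    for word in text.lower().split():
        bucket = int(hashlib.sha256(word.encode("utf-8")).hexdigest(), 16) % dimension
        vector[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm else vector

def make_record_id(query: str) -> str:
    """Short ASCII id derived from the query text, so any query maps to a safe id."""
    return hashlib.sha256(query.encode("utf-8")).hexdigest()[:32]

def clamp_feedback(raw: int) -> int:
    """Keep ratings inside the 1-5 range used for feedback."""
    return max(1, min(5, int(raw)))

vec = generate_vector("What is the meaning of life?")
print(len(vec), make_record_id("What is the meaning of life?"), clamp_feedback(9))
```

Using a hashed ID instead of the raw query text is a design choice worth considering, since very long or non-ASCII queries can make awkward record IDs.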

Wrapping Up: You've Got the Power!

And there you have it, folks! We've successfully played matchmaker for LangGraph and vector databases, creating a powerful duo that's ready to tackle your toughest language processing challenges. From basic integration to advanced techniques, you're now armed with the knowledge to create systems that are smarter, faster, and cooler than ever before.

Remember, with great power comes great responsibility. Use this newfound knowledge wisely, and maybe don't use it to create an AI that can beat you at your favorite video game (trust me, it's not as fun as it sounds).

Now go forth and code, you brilliant developer, you! And if you found this guide helpful, consider following me for more tech shenanigans. After all, sharing is caring, and code is poetry (even if it sometimes reads like a limerick written by a sleep-deprived programmer).

Until next time, may your bugs be few and your coffee be strong!

P.S. If you made it this far, you deserve a cookie.
