Introduction to Data Engineering Concepts |1| What is Data Engineering?


Free Resources

- Free Apache Iceberg Course

- Free Copy of “Apache Iceberg: The Definitive Guide”

- Free Copy of “Apache Polaris: The Definitive Guide”

- 2025 Apache Iceberg Architecture Guide

- How to Join the Iceberg Community

- Iceberg Lakehouse Engineering Video Playlist

- Ultimate Apache Iceberg Resource Guide

Data engineering sits at the heart of modern data-driven organizations. While data science often grabs headlines with predictive models and AI, it's the data engineer who builds and maintains the infrastructure that makes all of that possible. In this first post of our series, we’ll explore what data engineering is, why it matters, and how it fits into the broader data ecosystem.

The Role of the Data Engineer

Think of a data engineer as the architect and builder of the data highways. These professionals design, construct, and maintain systems that move, transform, and store data efficiently. Their job is to ensure that data flows from various sources into data warehouses or lakes where it can be used reliably for analysis, reporting, and machine learning.

In a practical sense, this means working with pipelines that connect everything from transactional databases and API feeds to large-scale storage systems. Data engineers work closely with data analysts, scientists, and platform teams to ensure the data is clean, consistent, and available when needed.

From Raw to Refined: The Journey of Data

Raw data is rarely useful as-is. It often arrives incomplete, messy, or inconsistently formatted. Data engineers are responsible for shepherding this raw material through a series of processing stages to prepare it for consumption.

This involves tasks like:

- Data ingestion (bringing data in from various sources)

- Data transformation (cleaning, enriching, and reshaping the data)

- Data storage (choosing optimal formats and storage solutions)

- Data delivery (ensuring end users can access data quickly and easily)

At each stage, considerations around scalability, performance, security, and governance come into play.

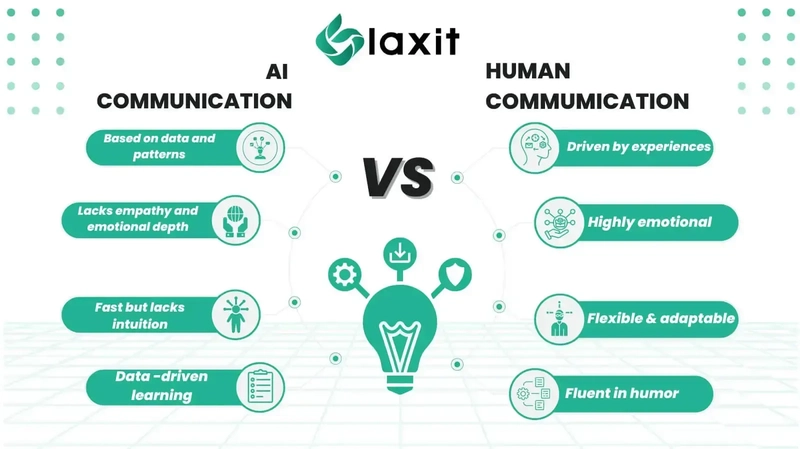
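To make the four stages above concrete, here is a minimal sketch using only the Python standard library. Everything in it is an assumption for illustration: the sample CSV, the `orders` table, and the function names `ingest`, `transform`, `store`, and `deliver` are hypothetical, and a real pipeline would typically read from external systems and write to a warehouse or lake rather than an in-memory SQLite database.

```python
import csv
import io
import sqlite3

# Hypothetical raw input: stray whitespace, inconsistent casing, a missing amount.
RAW_CSV = """order_id,customer,amount
1, Alice ,19.99
2,BOB,
3,carol,5.00
"""


def ingest(raw_text):
    """Ingestion: read raw rows from a source (here, an in-memory CSV string)."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Transformation: normalize names, parse amounts, drop incomplete rows."""
    cleaned = []
    for row in rows:
        amount = row["amount"].strip()
        if not amount:
            continue  # skip rows that arrived without an amount
        cleaned.append(
            (int(row["order_id"]), row["customer"].strip().title(), float(amount))
        )
    return cleaned


def store(conn, records):
    """Storage: persist the cleaned records in a typed table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def deliver(conn):
    """Delivery: expose a query result that an analyst or report could consume."""
    return conn.execute(
        "SELECT customer, SUM(amount) FROM orders "
        "GROUP BY customer ORDER BY customer"
    ).fetchall()


conn = sqlite3.connect(":memory:")  # stand-in for a real warehouse or lake
store(conn, transform(ingest(RAW_CSV)))
totals = deliver(conn)
print(totals)
```

Keeping each stage as its own function mirrors how the stages are usually scaled, secured, and monitored separately in practice.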

Data Engineering vs Data Science

The roles of data engineers and data scientists are often confused. While their work is complementary, their responsibilities are distinct.

A data scientist focuses on analyzing data and building predictive models. Their tools often include Python, R, and statistical frameworks. On the other hand, data engineers build the systems that make the data usable in the first place. They are often more focused on infrastructure, system design, and optimization.

In short: the data scientist asks questions; the data engineer ensures the data is ready to answer them.

A Brief History of the Data Stack

The evolution of data engineering can be seen in how the data stack has changed over time.

In traditional environments, organizations relied heavily on ETL (extract, transform, load) tools to move data from relational databases into on-premises warehouses. These systems were tightly controlled but not particularly flexible or scalable.

With the rise of big data, open-source tools like Hadoop and Spark introduced new ways to process data at scale. More recently, cloud-native services and modern orchestration frameworks have enabled even more agility and scalability in data workflows.

This evolution has led to concepts like the modern data stack and data lakehouse—topics we’ll cover later in this series.

Why It Matters

Every modern organization depends on data. But without a solid foundation, data becomes a liability rather than an asset. Poorly managed data can lead to flawed insights, compliance issues, and lost opportunities.

Good data engineering practices ensure that data is:

- Accurate and timely

- Secure and compliant

- Scalable and performant

As data volumes and velocity keep increasing, the importance of data engineering will continue to grow.
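As a rough illustration of what "accurate and timely" can mean in practice, the sketch below runs two simple checks over a batch of records: one for missing or negative values and one for freshness. The record fields (`amount`, `loaded_at`), the `check_batch` function, and the 24-hour threshold are all hypothetical choices made for this example, not a standard.

```python
from datetime import datetime, timedelta, timezone


def check_batch(records, now, max_age=timedelta(hours=24)):
    """Return a list of human-readable problems found in a batch of records."""
    problems = []
    for i, rec in enumerate(records):
        # Accuracy check: the amount must be present and non-negative.
        if rec.get("amount") is None or rec["amount"] < 0:
            problems.append(f"record {i}: missing or negative amount")
        # Timeliness check: the record must have been loaded recently enough.
        if now - rec["loaded_at"] > max_age:
            problems.append(f"record {i}: stale (older than {max_age})")
    return problems


# A fixed "current time" keeps the example deterministic.
now = datetime(2025, 1, 2, 12, 0, tzinfo=timezone.utc)
batch = [
    {"amount": 10.0, "loaded_at": now - timedelta(hours=1)},  # passes both checks
    {"amount": None, "loaded_at": now - timedelta(hours=2)},  # missing amount
    {"amount": 3.5, "loaded_at": now - timedelta(days=3)},    # stale
]
problems = check_batch(batch, now)
for problem in problems:
    print(problem)
```

Checks like these are usually run automatically as part of the pipeline so that bad batches are flagged before they reach reports or models.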

What’s Next

Now that we’ve outlined the role and importance of data engineering, the next step is to explore how data gets into a system in the first place. In the next post, we’ll dig into data sources and the ingestion process—how data flows from the outside world into your ecosystem.
