I made the world's first shopping AI chatbot with an MCP-like approach, and avoided a pink slip


Summary

My boss showed me the white paper of sierra.ai and gave me a mission to do the same. So I converted a shopping mall backend server into an AI agent using LLM function calling enhanced by compiler skills, and it worked well. Impressed by the demonstration, my boss decided to open source our solution.

This is @agentica, an AI agent framework specialized in LLM function calling. You can also automate frontend development with @autoview.

- Github Repository: https://github.com/wrtnlabs/agentica

- Homepage: https://wrtnlabs.io/agentica

1. Preface

Last year, my boss showed me the white paper of sierra.ai, a $4.5 billion corporation founded by an OpenAI board member. He asked me why we couldn't build something similar to sierra.ai, and challenged me to prove why he should keep paying my salary.

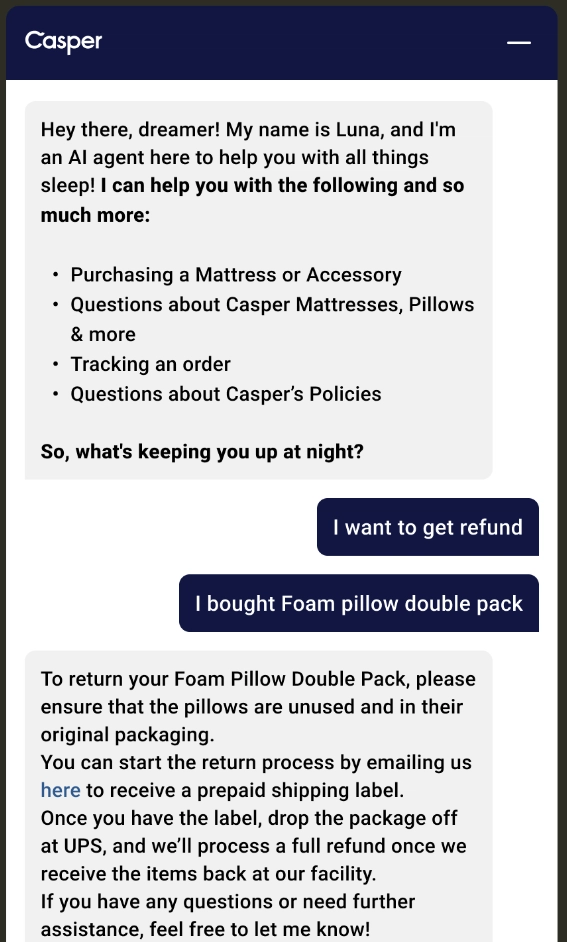

Looking at sierra.ai's homepage, they appear to focus on AI agent development for e-commerce and counseling. However, their AI agents are not yet complete: it was not possible to search for or buy products through their chatbot.

When I asked for a refund, sierra.ai's agent just told me:

"Contact the email address below, and request refund by yourself".

So luckily, I was able to avoid the pink slip and find an opportunity.

Since the mission was urgent, I simply took the swagger.json file from an e-commerce backend server and converted it into LLM (Large Language Model) function calling schemas. As the number of API functions was large (289), I also composed an agent orchestration strategy that filters the proper functions from the conversation context.
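The conversion step can be pictured with a minimal sketch. It assumes a heavily simplified OpenAPI shape and a hypothetical `toFunctionSchemas()` helper that emits one OpenAI-style function definition (`name`, `description`, `parameters`) per API operation; path and query parameters are ignored for brevity. This is an illustration of the idea, not @agentica's actual converter.

```typescript
// Heavily simplified subset of an OpenAPI (Swagger) document.
interface OpenApiOperation {
  operationId?: string;
  summary?: string;
  description?: string;
  requestBody?: {
    content: { "application/json"?: { schema: Record<string, unknown> } };
  };
}

interface OpenApiDocument {
  paths: Record<string, Record<string, OpenApiOperation>>;
}

// OpenAI-style function definition handed to the LLM.
interface FunctionSchema {
  name: string;
  description: string;
  parameters: Record<string, unknown>;
}

const HTTP_METHODS = ["get", "post", "put", "patch", "delete"];

// Hypothetical converter: one function schema per (path, method) operation.
function toFunctionSchemas(doc: OpenApiDocument): FunctionSchema[] {
  const schemas: FunctionSchema[] = [];
  for (const [path, item] of Object.entries(doc.paths)) {
    for (const [method, operation] of Object.entries(item)) {
      if (!HTTP_METHODS.includes(method)) continue; // skip non-operation keys
      const body = operation.requestBody?.content["application/json"]?.schema;
      schemas.push({
        // Fall back to a name derived from method and path, e.g. "get__orders".
        name:
          operation.operationId ??
          `${method}_${path.replace(/[^A-Za-z0-9]+/g, "_")}`,
        description: operation.description ?? operation.summary ?? "",
        parameters: {
          type: "object",
          properties: body ? { body } : {},
          required: body ? ["body"] : [],
        },
      });
    }
  }
  return schemas;
}
```

Run over a real swagger.json, a loop like this yields one function per API operation, which is why a single backend server can expose hundreds of functions to the agent.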

After that, I created the AI agent application, and demonstrated it to my boss.

In the demonstration, everything worked perfectly: searching and purchasing products, order and delivery management, customer support with refund features, discount coupons, and account deposits.

After the demonstration, my boss said:

Hey, we should open source this.

Our company and staff are significantly smaller than sierra.ai's, so we cannot compete with them directly. Instead, we can release our solution as open source. Let's make our technology world-famous.

2. Agentic AI Framework

@agentica

Video: https://www.youtube.com/watch?v=3FJMuyKYbqU

@agentica, an Agentic AI Framework specialized in LLM Function Calling.

No complex workflows required. Just list the functions from the protocols below.

If you want to build a large-scale, enterprise-level AI agent, list many functions related to the subject. If you want a simple agent, list only the few functions you need. That's all.

By concentrating on LLM function calling and the ecosystem that supports it (the compiler library typia), we could reach the new Agentic AI era.

- Github Repository: https://github.com/wrtnlabs/agentica

- Homepage: https://wrtnlabs.io/agentica

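- Three protocols serving functions to call:
  - TypeScript Class
  - HTTP Restful API Server (Swagger/OpenAPI Document)
  - MCP (Model Context Protocol)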

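```typescript
import { Agentica, assertHttpLlmApplication } from "@agentica/core";
import typia from "typia";

// MobileCamera, MobileFileSystem and MobilePhoneCall are application
// classes assumed to be defined elsewhere in your project.
const agent = new Agentica({
  controllers: [
    // HTTP protocol: operations of the Swagger/OpenAPI document become functions.
    assertHttpLlmApplication({
      model: "chatgpt",
      document: await fetch(
        "https://shopping-be.wrtn.ai/editor/swagger.json",
      ).then(r => r.json()),
      connection: {
        host: "https://shopping-be.wrtn.ai",
        headers: {
          Authorization: "Bearer ********",
        },
      },
    }),
    // TypeScript class protocol: typia compiles each class into function schemas.
    typia.llm.application<MobileCamera, "chatgpt">(),
    typia.llm.application<MobileFileSystem, "chatgpt">(),
    typia.llm.application<MobilePhoneCall, "chatgpt">(),
  ],
});

await agent.conversate("I wanna buy MacBook Pro");
```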

2.2. LLM Function Calling

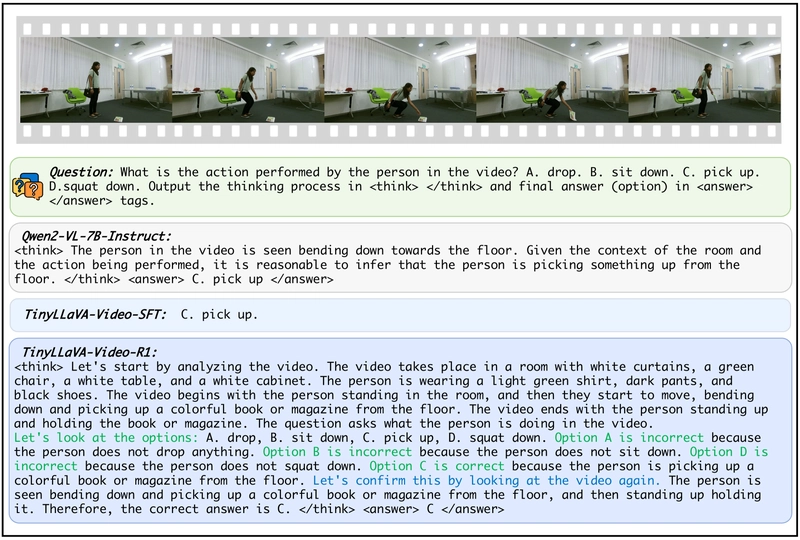

AI selects the proper function and fills in its arguments.

Nowadays, most LLMs (Large Language Models), such as OpenAI's, support a "function calling" feature. "LLM function calling" means that the LLM automatically selects a proper function and fills in its parameter values from the conversation with the user (mainly chat text).
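To make the definition concrete, here is a minimal sketch of the application side. OpenAI-style APIs return the selected function's name together with its arguments serialized as a JSON string; the `ToolCall` type, the `functions` table, and `dispatch()` are hypothetical names for illustration, not part of any library.

```typescript
// What the model returns when it decides to call a function:
// the chosen function name and its arguments serialized as JSON text.
interface ToolCall {
  name: string;
  arguments: string;
}

type AgentFunction = (args: any) => unknown;

// Hypothetical functions exposed to the model.
const functions: Record<string, AgentFunction> = {
  searchProducts: (args: { keyword: string }) => [
    `${args.keyword} 14-inch`,
    `${args.keyword} 16-inch`,
  ],
  getOrder: (args: { id: string }) => ({ id: args.id, status: "delivered" }),
};

// Run the function the model selected, with the arguments it filled in.
function dispatch(call: ToolCall): unknown {
  const fn = functions[call.name];
  if (fn === undefined) {
    throw new Error(`Unknown function: ${call.name}`);
  }
  return fn(JSON.parse(call.arguments));
}

// e.g. a call the model might produce for "I wanna buy MacBook Pro"
console.log(
  dispatch({ name: "searchProducts", arguments: '{"keyword":"MacBook Pro"}' }),
);
```

The LLM only decides *which* function and *what* arguments; executing the function stays in ordinary application code.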

Structured output is another LLM feature. "Structured output" means that the LLM automatically transforms its output into a structured data format like JSON.
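Structured output is typically consumed by parsing the model's JSON text and checking it against the expected type before use. The sketch below writes the type guard by hand to stay dependency-free; `ProductSummary`, `isProductSummary()`, and `parseStructuredOutput()` are hypothetical names. In the typia ecosystem, an equivalent guard could instead be generated from the type with `typia.is<T>()`.

```typescript
// Hypothetical structure the model is asked to produce.
interface ProductSummary {
  name: string;
  price: number;
  inStock: boolean;
}

// Hand-written runtime check that parsed JSON really has that structure.
function isProductSummary(value: unknown): value is ProductSummary {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v["name"] === "string" &&
    typeof v["price"] === "number" &&
    typeof v["inStock"] === "boolean"
  );
}

// Parse the model's raw output text and reject anything off-schema.
function parseStructuredOutput(text: string): ProductSummary {
  const value: unknown = JSON.parse(text);
  if (!isProductSummary(value)) {
    throw new Error("Model output does not match ProductSummary");
  }
  return value;
}
```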

@agentica concentrates on LLM function calling and does everything through it. The new Agentic AI era can only be realized through this function calling technology.

- https://platform.openai.com/docs/guides/function-calling

- https://platform.openai.com/docs/guides/structured-outputs

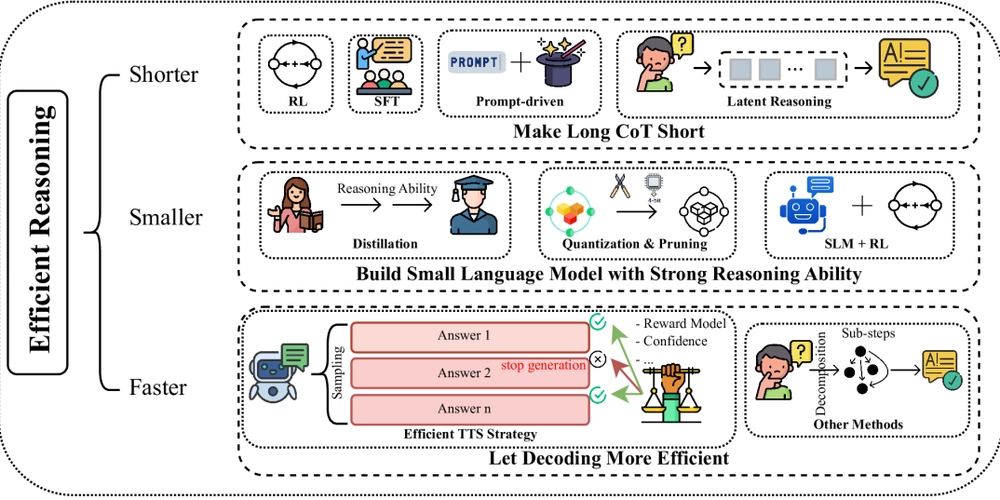

2.3. Function Calling vs Workflow

Whether scalable, flexible, and mass productive or not.

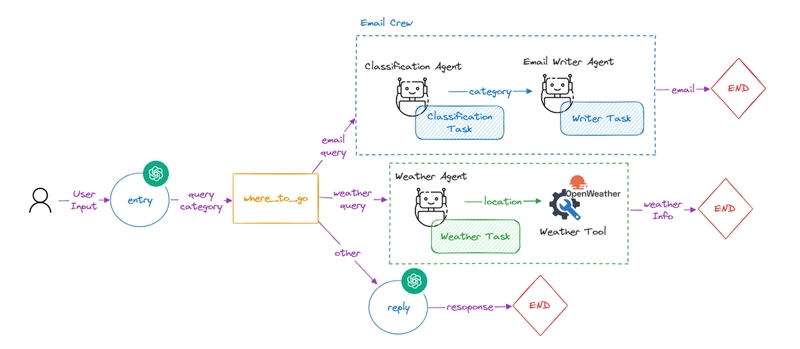

Workflow is not scalable, but function calling is.

In the traditional agent development method, whenever the agent's functionality expanded, AI developers drew more and more complex agent workflows and graphs. However, no matter how elaborate the workflow graph, the agent's accuracy dropped significantly as its functionality expanded.

This is because every node added to the agent graph is another step to go through, and the overall success rate shrinks multiplicatively, as a Cartesian product over the number of steps. For example, if five agent nodes are listed sequentially and each node has an 80% success rate, the final success rate becomes 32.77% (0.8^5).
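The arithmetic behind this example is a power of the per-node success rate, under the simplifying assumption that each node succeeds independently (real failures may be correlated). `chainSuccessRate()` is a hypothetical helper name.

```typescript
// Success rate of n sequential nodes, assuming each one succeeds
// independently with probability p: p * p * ... * p = p^n.
function chainSuccessRate(p: number, n: number): number {
  return Math.pow(p, n);
}

// Five nodes at 80% each.
const rate = chainSuccessRate(0.8, 5);
console.log(`${(rate * 100).toFixed(2)}%`); // prints "32.77%"
```

Every extra node multiplies the rate by `p` again, so the decline is exponential in the number of steps.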

To hedge against this Cartesian product of success rates, AI developers need to construct a much more complex graph that partitions each event independently. This inevitably makes AI agent development harder and makes it difficult to respond to changing requirements, such as adding or modifying features.

To mitigate this Cartesian product disaster, AI developers often create a new supervisor workflow as an add-on to the main workflow's nodes. If functionality needs to expand further, this leads to fractal patterns of workflows. To avoid the Cartesian product disaster, AI developers must face another fractal disaster.

Using such workflow approaches, would it be possible to create a shopping chatbot agent? Is it possible to build an enterprise-level chatbot? This explains why we mostly see special-purpose chatbots or chatbots that resemble toy projects in the world today.

The problem stems from the fact that agent workflows themselves are difficult to create and have extremely poor scalability and flexibility.

Jensen Huang's graph, and his advocacy for Agentic AI

In contrast, if you develop an AI agent just by listing functions to call, the agent application becomes scalable, flexible, and mass productive.

If you want to build a large-scale, enterprise-level AI agent, list many functions related to the subject. If you want a simple agent, list only the few functions you need. That's all.

AI agent development becomes much easier than in the workflow case, and whenever you need to change the agent, you can do so just by adding or removing functions to call. Such function-driven AI development is the only way to accomplish Agentic AI.
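With hundreds of functions, an agent also has to narrow down the candidates before each call; the preface mentions such a filtering strategy without detailing it. The sketch below uses plain keyword overlap as a crude stand-in (not an LLM-based selector, and not @agentica's actual strategy) to show the shape of the idea: the agent's capabilities are just a list, extending the agent means appending to that list, and a selector picks a short list per message. All names are hypothetical.

```typescript
// A function the agent may call, described in natural language.
interface FunctionEntry {
  name: string;
  description: string;
}

// Hypothetical function list; adding a capability means appending an entry.
const registry: FunctionEntry[] = [
  { name: "searchProducts", description: "Search products in the shopping mall by keyword" },
  { name: "createOrder", description: "Create an order for products in the cart" },
  { name: "refundOrder", description: "Request a refund for a delivered order" },
  { name: "issueCoupon", description: "Issue a discount coupon to the customer" },
];

// Lowercased words of at least three letters or digits.
function tokenize(text: string): Set<string> {
  const words = text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
  return new Set(words.filter((w) => w.length >= 3));
}

// Keyword-overlap selector: rank functions by how many words their
// description shares with the user message, and keep the top few.
function selectCandidates(
  message: string,
  entries: FunctionEntry[],
  limit = 3,
): FunctionEntry[] {
  const messageWords = tokenize(message);
  return entries
    .map((entry) => ({
      entry,
      score: Array.from(tokenize(entry.description)).filter((w) =>
        messageWords.has(w),
      ).length,
    }))
    .filter((candidate) => candidate.score > 0)
    .sort((a, b) => b.score - a.score)
    .slice(0, limit)
    .map((candidate) => candidate.entry);
}
```

A keyword filter misses synonyms and context, which is why a real selector would lean on the LLM itself; the point here is only that the agent stays a flat, growable list of functions.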

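```typescript
import { Agentica } from "@agentica/core";
import typia from "typia";

// MobileCamera, MobileFileSystem and MobilePhoneCall are application
// classes assumed to be defined elsewhere in your project.
// Changing this agent only means adding or removing controllers.
const agent = new Agentica({
  controllers: [
    typia.llm.application<MobileCamera, "chatgpt">(),
    typia.llm.application<MobileFileSystem, "chatgpt">(),
    typia.llm.application<MobilePhoneCall, "chatgpt">(),
  ],
});

await agent.conversate("I wanna buy MacBook Pro");
```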

3. Compiler Driven Development

I love zod, and think it is a convenient tool for schema generation. What I wanted was a comparison with hand-crafting a JSON schema, but somehow this video came out.

In fact, the function calling driven strategy for AI agent development has existed since 2023. When OpenAI released its function calling spec in 2023, many AI researchers predicted that function calling would rule the world.

However, this strategy could not be widely adopted because writing function calling schemas was too cumbersome, difficult, and risky. As a result, workflow agent graphs came to dominate AI agent development instead of function calling.

Today, @agentica revives the function calling driven strategy with compiler skills. From now on, LLM function calling schemas will be composed automatically by the TypeScript compiler.

3.1. Problem of Hand-written Schema

| Type | Value |

|---|---|

| Number of Functions | 289 |

| LOC of source code | 37,752 |

| LOC of function schema | 212,069 |

| Compiler Success Rate | 100.00000 % |

| Human Success Rate | 0.00002 % |

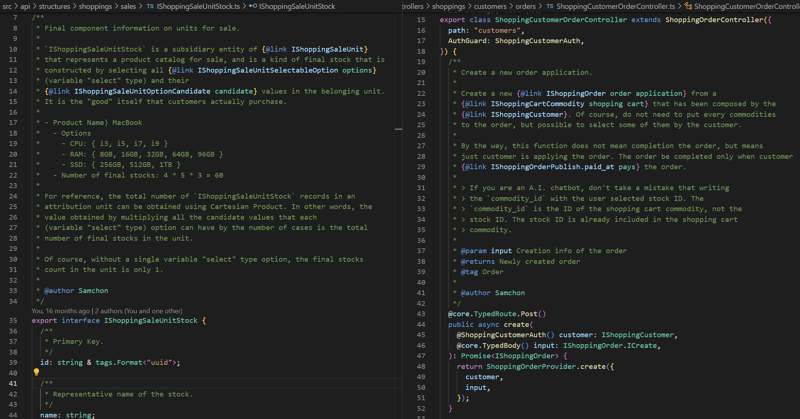

Number of functions in @samchon/shopping-backend was 289, and LOC (Line of Codes) of it was 37,752. Then how about LOC of the LLM function calling schemas? Surprisingly, it is 212,069 LOC, about 5 times bigger than the original source code.

In traditional AI development, these LLM function calling schemas had been hand-written by humans. Humans need to write a schema of about 500 to 1,000 lines for each function. Can a human really do something well without making mistakes? I think it would be a very radical assumption that humans fail at each function 5% of the time.

However, even with the radical assumption of a 95% success rate, the human schema composition success rate converges to 0% (0.95289 ≒ 0.0000002). I think this is the reason why AI agents have evolved around workflow agent graphs rather than function calling.

When OpenAI announced function calling in 2023, many people thought function calling was a magic bullet, and in fact, they presented the same vision as @agentica.

In the traditional development ecosystem, if a human (perhaps a backend developer) makes a mistake while writing a schema, co-workers (perhaps frontend developers) can work around it by intuition.

However, AI never forgives such mistakes. Any schema-level mistake breaks the whole agent application. Therefore, in 2023, AI developers could not overcome human errors, so they abandoned the function calling driven strategy and adopted the workflow agent graph strategy.
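To see how easily such mistakes slip in, consider a hand-written parameter schema with a typo in `required` and a type name that JSON Schema does not define. The hypothetical `lintSchema()` below catches only these two kinds of error; generating schemas from the same types as the code, as a compiler does, is meant to rule them out in the first place.

```typescript
// Simplified JSON Schema for a function's object parameter.
interface ObjectSchema {
  type: "object";
  properties: Record<string, { type: string }>;
  required: string[];
}

// Type names defined by JSON Schema.
const JSON_TYPES = new Set([
  "string", "number", "integer", "boolean", "array", "object", "null",
]);

// Report internal inconsistencies typical of hand-written schemas.
function lintSchema(schema: ObjectSchema): string[] {
  const errors: string[] = [];
  for (const key of schema.required) {
    if (!Object.prototype.hasOwnProperty.call(schema.properties, key)) {
      errors.push(`required property "${key}" is not declared`);
    }
  }
  for (const [key, property] of Object.entries(schema.properties)) {
    if (!JSON_TYPES.has(property.type)) {
      errors.push(`property "${key}" has unknown type "${property.type}"`);
    }
  }
  return errors;
}

// A hypothetical hand-written schema with two easy-to-miss mistakes.
const addToCart: ObjectSchema = {
  type: "object",
  properties: {
    productId: { type: "string" },
    quantity: { type: "int" }, // should be "integer"
  },
  required: ["productId", "quantitiy"], // typo of "quantity"
};

console.log(lintSchema(addToCart));
```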

Today, @agentica revives the function calling strategy with its compiler skills. The compiler generates function calling schemas automatically and systematically, bringing the success rate to 100%.

3.2. Function Calling Schema by Compiler

```typescript
import typia from "typia";

// typia compiles the class's method types into LLM function calling schemas.
typia.llm.application<BbsArticleService, "chatgpt">();

class BbsArticleService { ... }
```
