Are LLMs Just ETL Pipelines on Steroids? Rethinking AI Training

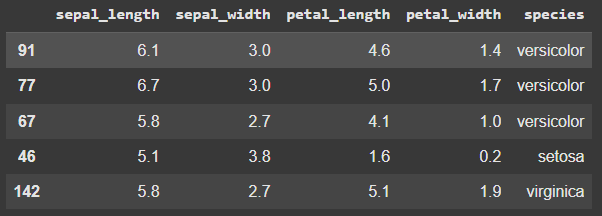

We talk a lot about Large Language Models (LLMs) like GPT, Claude, and Llama – their incredible generative capabilities, their potential, and their complexities. But have you ever stopped to think about the process that gets them there? Strip away the most advanced layers, and the initial training phase starts to look surprisingly familiar to something many of us work with daily: ETL (Extract, Transform, Load). It sounds a bit reductive at first, but let's break down this mental model. Could viewing LLM training through an ETL lens help us demystify some of the magic? E is for Extract: The Data Hoover Every ETL process starts with extraction. For an LLM, this means pulling in truly mind-boggling amounts of data from diverse sources: the open web, books, scientific articles, code repositories, conversations – essentially, a huge chunk of human-generated text and code. This is the raw material, the source database from which knowledge will be derived. It's extraction on an unprecedented scale. T is for Transform: Where the Real "Learning" Happens This is where the analogy gets really interesting and, arguably, where the "steroids" part comes in. Unlike traditional ETL which might focus on cleaning, standardizing, or aggregating data, the "transformation" in LLM training is about deep pattern recognition and representation learning. This involves: Tokenization: Breaking down raw text into smaller units (tokens) the model can process. Embedding Generation: Converting these tokens into dense numerical vectors (embeddings) that capture semantic meaning and relationships. Words with similar meanings end up closer together in this vector space. Pattern Recognition & Weight Adjustment: This is the core of training. The model processes the token embeddings, learning statistical relationships, grammatical structures, contextual nuances, facts, and even reasoning patterns. It does this by constantly adjusting its internal parameters (weights and biases) to get better at predicting the next token in a sequence based on the preceding ones. This iterative adjustment transforms the raw data into learned knowledge encoded within the model's architecture. It's not just reshaping data; it's fundamentally changing the model to remember the patterns in the data. L is for Load: Storing the Knowledge So, where does this transformed knowledge get "loaded"? Not into a traditional relational database or data warehouse. Instead, the "load" destination is the model's final set of trained parameters – billions of weights and biases. These parameters are the compressed, transformed representation of the patterns learned from the initial data dump. The model itself becomes the vessel holding the processed knowledge. Vector Databases: The ETL Extension for Inference? The analogy extends further when we consider Retrieval-Augmented Generation (RAG). Here, we often take specific documents, transform them into embeddings (another 'T' step), and load them into a specialized vector database. When you query the LLM, it uses your query's embedding to retrieve relevant chunks from this vector database (an 'E' step during inference!) and uses that context to generate a better answer. This looks remarkably like using a specialized data store, loaded via a transformation process, to enhance the main application. Beyond ETL: Generation and Dynamism Of course, the analogy isn't perfect. Standard ETL pipelines don't typically generate novel data the way LLMs do during inference. LLMs aren't just static repositories; they are dynamic systems that apply their learned transformations in real-time to generate new text based on prompts. Furthermore, processes like fine-tuning and RLHF represent continuous learning loops that go beyond a typical one-way ETL flow. Why Think This Way? Viewing LLM training through an ETL lens helps ground these complex systems in familiar data processing concepts. It highlights that, at their core, LLMs are products of massive data processing pipelines designed to extract, transform, and encode information. It reminds us that the quality and nature of the initial "extracted" data fundamentally shape the resulting model. So, next time you marvel at an LLM's output, remember the gargantuan ETL-like process that laid its foundation – extracting the world's text, transforming it into learned patterns, and loading that knowledge into the intricate web of its neural network. What do you think? Does the ETL analogy resonate with how you understand LLM training? Share your thoughts in the comments!

We talk a lot about Large Language Models (LLMs) like GPT, Claude, and Llama – their incredible generative capabilities, their potential, and their complexities. But have you ever stopped to think about the process that gets them there? Strip away the most advanced layers, and the initial training phase starts to look surprisingly familiar to something many of us work with daily: ETL (Extract, Transform, Load).

It sounds a bit reductive at first, but let's break down this mental model. Could viewing LLM training through an ETL lens help us demystify some of the magic?

E is for Extract: The Data Hoover

Every ETL process starts with extraction. For an LLM, this means pulling in truly mind-boggling amounts of data from diverse sources: the open web, books, scientific articles, code repositories, conversations – essentially, a huge chunk of human-generated text and code. This is the raw material, the source database from which knowledge will be derived. It's extraction on an unprecedented scale.

T is for Transform: Where the Real "Learning" Happens

This is where the analogy gets really interesting and, arguably, where the "steroids" part comes in. Unlike traditional ETL which might focus on cleaning, standardizing, or aggregating data, the "transformation" in LLM training is about deep pattern recognition and representation learning. This involves:

- Tokenization: Breaking down raw text into smaller units (tokens) the model can process.

- Embedding Generation: Converting these tokens into dense numerical vectors (embeddings) that capture semantic meaning and relationships. Words with similar meanings end up closer together in this vector space.

- Pattern Recognition & Weight Adjustment: This is the core of training. The model processes the token embeddings, learning statistical relationships, grammatical structures, contextual nuances, facts, and even reasoning patterns. It does this by constantly adjusting its internal parameters (weights and biases) to get better at predicting the next token in a sequence based on the preceding ones. This iterative adjustment transforms the raw data into learned knowledge encoded within the model's architecture. It's not just reshaping data; it's fundamentally changing the model to remember the patterns in the data.

L is for Load: Storing the Knowledge

So, where does this transformed knowledge get "loaded"? Not into a traditional relational database or data warehouse. Instead, the "load" destination is the model's final set of trained parameters – billions of weights and biases. These parameters are the compressed, transformed representation of the patterns learned from the initial data dump. The model itself becomes the vessel holding the processed knowledge.

Vector Databases: The ETL Extension for Inference?

The analogy extends further when we consider Retrieval-Augmented Generation (RAG). Here, we often take specific documents, transform them into embeddings (another 'T' step), and load them into a specialized vector database. When you query the LLM, it uses your query's embedding to retrieve relevant chunks from this vector database (an 'E' step during inference!) and uses that context to generate a better answer. This looks remarkably like using a specialized data store, loaded via a transformation process, to enhance the main application.

Beyond ETL: Generation and Dynamism

Of course, the analogy isn't perfect. Standard ETL pipelines don't typically generate novel data the way LLMs do during inference. LLMs aren't just static repositories; they are dynamic systems that apply their learned transformations in real-time to generate new text based on prompts. Furthermore, processes like fine-tuning and RLHF represent continuous learning loops that go beyond a typical one-way ETL flow.

Why Think This Way?

Viewing LLM training through an ETL lens helps ground these complex systems in familiar data processing concepts. It highlights that, at their core, LLMs are products of massive data processing pipelines designed to extract, transform, and encode information. It reminds us that the quality and nature of the initial "extracted" data fundamentally shape the resulting model.

So, next time you marvel at an LLM's output, remember the gargantuan ETL-like process that laid its foundation – extracting the world's text, transforming it into learned patterns, and loading that knowledge into the intricate web of its neural network.

What do you think? Does the ETL analogy resonate with how you understand LLM training? Share your thoughts in the comments!

![[Webinar] AI Is Already Inside Your SaaS Stack — Learn How to Prevent the Next Silent Breach](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEiOWn65wd33dg2uO99NrtKbpYLfcepwOLidQDMls0HXKlA91k6HURluRA4WXgJRAZldEe1VReMQZyyYt1PgnoAn5JPpILsWlXIzmrBSs_TBoyPwO7hZrWouBg2-O3mdeoeSGY-l9_bsZB7vbpKjTSvG93zNytjxgTaMPqo9iq9Z5pGa05CJOs9uXpwHFT4/s1600/ai-cyber.jpg?#)

![[The AI Show Episode 144]: ChatGPT’s New Memory, Shopify CEO’s Leaked “AI First” Memo, Google Cloud Next Releases, o3 and o4-mini Coming Soon & Llama 4’s Rocky Launch](https://www.marketingaiinstitute.com/hubfs/ep%20144%20cover.png)

![Rogue Company Elite tier list of best characters [April 2025]](https://media.pocketgamer.com/artwork/na-33136-1657102075/rogue-company-ios-android-tier-cover.jpg?#)

_Andreas_Prott_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![What’s new in Android’s April 2025 Google System Updates [U: 4/18]](https://i0.wp.com/9to5google.com/wp-content/uploads/sites/4/2025/01/google-play-services-3.jpg?resize=1200%2C628&quality=82&strip=all&ssl=1)

![Apple Watch Series 10 Back On Sale for $299! [Lowest Price Ever]](https://www.iclarified.com/images/news/96657/96657/96657-640.jpg)

![EU Postpones Apple App Store Fines Amid Tariff Negotiations [Report]](https://www.iclarified.com/images/news/97068/97068/97068-640.jpg)

![Apple Slips to Fifth in China's Smartphone Market with 9% Decline [Report]](https://www.iclarified.com/images/news/97065/97065/97065-640.jpg)