How to Trigger AWS Lambda Functions Using S3 Events (With Full Code Example)


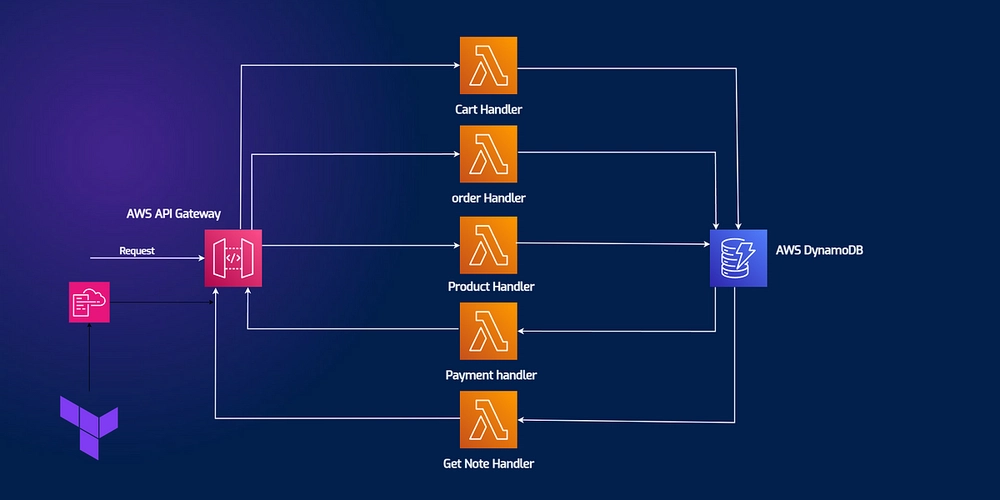

Triggering AWS Lambda functions with S3 events is a powerful way to create reactive, event-driven applications. Whether you're processing image uploads, logging changes, or running backend tasks, this pattern allows you to run code without provisioning servers. In this guide, we’ll walk through the full setup using the AWS Console and a Node.js Lambda function.

Step 1: Create an S3 Bucket

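Go to the AWS S3 console and create a new bucket in the same AWS Region where you will create the Lambda function, because S3 can only send event notifications to a function in the bucket's Region. S3 does not need public access to trigger Lambda, so leave "Block all public access" enabled unless your use case genuinely requires objects to be publicly readable (e.g., serving uploaded images directly from the bucket).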

Step 2: Create a New Lambda Function

In the AWS Lambda console, create a new function:

- Runtime: Node.js 18.x

- Permissions: Create a new role with basic Lambda permissions
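With basic permissions, the console-created role only allows the function to write logs to CloudWatch Logs. That is enough for the logging handler below, because S3 includes the bucket name, key, and size in the event itself. If you later read object contents (for example, the CSV use case), the role also needs `s3:GetObject` on the bucket. Here is a minimal sketch that builds such a policy document, assuming the standard `aws` partition. `buildS3ReadPolicy` and the bucket name are hypothetical:

```javascript
// Hypothetical helper: builds an IAM policy document that lets the function
// read objects from one bucket, optionally limited to a key prefix.
// Assumes the standard "aws" partition (ARNs start with arn:aws:).
function buildS3ReadPolicy(bucketName, prefix = "") {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Action: ["s3:GetObject"],
        // Object ARNs have the form arn:aws:s3:::<bucket>/<key>
        Resource: [`arn:aws:s3:::${bucketName}/${prefix}*`],
      },
    ],
  };
}

// "my-upload-bucket" is a placeholder bucket name.
const policy = buildS3ReadPolicy("my-upload-bucket", "uploads/");
console.log(JSON.stringify(policy, null, 2));
```

You could paste the printed JSON into the IAM console as an inline policy on the function's role.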

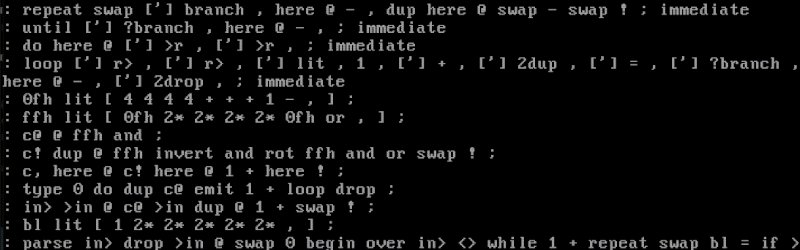

Here’s a simple example Lambda function that logs the uploaded file’s metadata:

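```javascript
// index.mjs: for Node.js 18.x the console creates an ES module file,
// so the handler is exported with `export`.
// If your file is index.js (CommonJS), use `exports.handler = async (event) => { ... }` instead.
export const handler = async (event) => {
  // Records is an array; loop so no record is silently skipped.
  for (const record of event.Records) {
    const bucket = record.s3.bucket.name;
    // Keys arrive URL-encoded (spaces as "+"), so decode them first.
    const key = decodeURIComponent(record.s3.object.key.replace(/\+/g, ' '));
    const size = record.s3.object.size;

    console.log(`New file uploaded: ${key} (${size} bytes) to bucket ${bucket}`);
  }

  // S3 invokes the function asynchronously, so this return value is ignored.
  return {
    statusCode: 200,
    body: JSON.stringify('S3 event processed successfully'),
  };
};
```

Step 3: Set Up S3 Trigger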

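On the function's page in the Lambda console, click "Add trigger" and choose S3:

- Select the bucket you created

- Event type: `PUT` (for new uploads). Large files sent with multipart upload raise a separate "Multipart upload completed" event, so choose "All object create events" if you want every new object.

- Prefix/suffix: Optional filters like `uploads/` or `.jpg`

Save the trigger. The console also adds a resource-based policy that allows S3 to invoke the function, so your Lambda function is now connected to the S3 bucket. If the function ever writes objects back into the same bucket, use a prefix or suffix filter (or a separate output bucket) so its own output does not trigger it again in a loop.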
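The trigger you just saved is stored as a notification configuration on the bucket. If you would rather script the setup, here is a sketch that builds the input object for S3's `PutBucketNotificationConfiguration` call. The helper name, bucket name, and function ARN are placeholders, and the code only builds the object; it does not call AWS:

```javascript
// Hypothetical helper: builds the input for S3's PutBucketNotificationConfiguration.
// It only constructs the object; sending it is left to the AWS SDK.
function buildLambdaNotification(bucket, functionArn, { prefix, suffix, events } = {}) {
  const filterRules = [];
  if (prefix) filterRules.push({ Name: "prefix", Value: prefix });
  if (suffix) filterRules.push({ Name: "suffix", Value: suffix });

  const config = {
    LambdaFunctionArn: functionArn,
    // "s3:ObjectCreated:Put" corresponds to the console's PUT option;
    // "s3:ObjectCreated:*" corresponds to "All object create events".
    Events: events ?? ["s3:ObjectCreated:Put"],
  };
  if (filterRules.length > 0) {
    config.Filter = { Key: { FilterRules: filterRules } };
  }

  return {
    Bucket: bucket,
    NotificationConfiguration: { LambdaFunctionConfigurations: [config] },
  };
}

// Placeholder bucket name and function ARN.
const input = buildLambdaNotification(
  "my-upload-bucket",
  "arn:aws:lambda:us-east-1:123456789012:function:my-s3-handler",
  { prefix: "uploads/", suffix: ".jpg" }
);
console.log(JSON.stringify(input, null, 2));
```

With the AWS SDK for JavaScript v3 you could pass this object to `new PutBucketNotificationConfigurationCommand(input)` from `@aws-sdk/client-s3`. Two caveats the console handles for you: this call replaces the bucket's entire notification configuration, and S3 also needs permission to invoke the function (a Lambda resource-based policy for the `s3.amazonaws.com` principal).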

Step 4: Upload a Test File

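Upload any file to your S3 bucket (if you set a prefix or suffix filter, make sure the key matches it). Then check the function's logs in CloudWatch Logs, in the log group named `/aws/lambda/<function-name>`; the Monitor tab in the Lambda console links there. You should see a log entry with the file's key and size.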
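To check the key decoding without uploading anything, you can run the same parsing logic against a hand-written event. This sketch assumes a trimmed-down event that contains only the fields the handler reads (real S3 events carry many more). The encoded key and the `describeRecords` helper are illustrative:

```javascript
// Hand-written, trimmed-down S3 event: only the fields the handler reads.
const sampleEvent = {
  Records: [
    {
      eventSource: "aws:s3",
      s3: {
        bucket: { name: "my-upload-bucket" },
        // Illustrative URL-encoded key: "+" stands for a space.
        object: { key: "uploads/my+photo%281%29.jpg", size: 2048 },
      },
    },
  ],
};

// Same decoding as the handler: "+" back to spaces, then undo percent-encoding.
function describeRecords(event) {
  return event.Records.map((record) => {
    const bucket = record.s3.bucket.name;
    const key = decodeURIComponent(record.s3.object.key.replace(/\+/g, " "));
    const size = record.s3.object.size;
    return `New file uploaded: ${key} (${size} bytes) to bucket ${bucket}`;
  });
}

for (const line of describeRecords(sampleEvent)) {
  console.log(line); // New file uploaded: uploads/my photo(1).jpg (2048 bytes) to bucket my-upload-bucket
}
```

The order of the two decoding steps matters: replacing `+` first means a literal plus sign, which arrives as `%2B`, is only restored by `decodeURIComponent` and is not turned into a space.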

Common Use Cases

- Generating thumbnails after image upload

- Parsing CSV files into a database

- Triggering downstream APIs based on file input
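As an illustration of the CSV use case, here is a sketch of a handler that reads each uploaded `.csv` object and hands the parsed rows to a storage function. `readObjectText` and `saveRows` are hypothetical functions injected so the sketch runs without AWS or a database. The parser is deliberately naive and assumes a header row with no quoted fields:

```javascript
// Naive CSV parser: assumes a header row and no quoted fields or embedded commas.
function parseCsv(text) {
  const [headerLine, ...lines] = text.trim().split(/\r?\n/);
  const headers = headerLine.split(",").map((h) => h.trim());
  return lines
    .filter((line) => line.trim() !== "")
    .map((line) => {
      const values = line.split(",");
      return Object.fromEntries(headers.map((h, i) => [h, (values[i] ?? "").trim()]));
    });
}

// readObjectText(bucket, key) and saveRows(key, rows) are hypothetical and injected.
// In Lambda, readObjectText might use @aws-sdk/client-s3:
//   const res = await s3.send(new GetObjectCommand({ Bucket: bucket, Key: key }));
//   return res.Body.transformToString();
function makeCsvHandler({ readObjectText, saveRows }) {
  return async (event) => {
    for (const record of event.Records) {
      const bucket = record.s3.bucket.name;
      const key = decodeURIComponent(record.s3.object.key.replace(/\+/g, " "));
      if (!key.endsWith(".csv")) continue; // skip non-CSV uploads
      const rows = parseCsv(await readObjectText(bucket, key));
      await saveRows(key, rows);
    }
  };
}

// Usage with in-memory stand-ins for S3 and the database.
const saved = [];
const handler = makeCsvHandler({
  readObjectText: async () => "id,name\n1,Ada\n2,Grace\n",
  saveRows: async (key, rows) => saved.push({ key, rows }),
});
```

Injecting the I/O functions keeps the parsing and filtering testable locally. For real-world CSV files with quoted fields, a dedicated CSV library is a safer choice than this naive split.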

Conclusion

Using S3 to trigger Lambda functions is a powerful building block for event-driven apps in the cloud. With just a few clicks and lines of code, you can automate backends without managing infrastructure.

If you found this guide helpful, consider buying me a coffee to support more content like this: buymeacoffee.com/hexshift
