How to Identify Malicious Content: Key Behavioral Patterns and Dissemination Methods

Reliable information is the currency of the digital age, yet the mechanisms for spreading harmful online content have evolved with alarming speed. A subtle tweet, a sensational headline, or viral misinformation campaign can erode public trust and inflict real-world harm. Understanding the behavioral patterns of malicious content not only strengthens personal digital hygiene but also enables informed professionals to counteract sophisticated manipulation operations. This article explores the tactics of malicious actors, highlights typical behavioral signals, and offers paths toward prompt and effective detection.

Understanding Malicious Content and Its Impact

Malicious content encompasses a broad range of digital materials designed to deceive, swindle, incite fear, or otherwise manipulate audiences for nefarious objectives. Whether driven by financial gain, political agendas, or malice, such content undermines the very foundation of online trust. These threats vary in form—fraudulent scams, fake news, phishing attempts, or manipulated multimedia—yet share a reliance on identifiable behavioral patterns for successful propagation.

Detecting the Behavioral Patterns of Malicious Content

Growing awareness of online threats has exposed recurring signatures shared by harmful content. Learning to identify these patterns is one of the most practical defenses against deception techniques.

Rapid, Unusual Sharing Behavior

A distinctive hallmark among the behavioral patterns of malicious content is sudden, coordinated amplification across multiple platforms. Harmful posts are often disseminated at lightning speed, leveraging automation and psychological triggers tuned for virality. An abrupt uptick in shares by newly created or infrequently used accounts is a strong warning sign.
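The last signal above can be approximated with a simple ratio. The following is a minimal sketch, not a production detector: the function name, the one-hour window, and the seven-day "new account" cutoff are all assumptions chosen for illustration, and real thresholds would need tuning against actual platform data.

```python
from datetime import datetime, timedelta

def new_account_share_ratio(shares, window_end,
                            window=timedelta(hours=1),
                            max_account_age=timedelta(days=7)):
    """Fraction of shares inside the window that came from recently created accounts.

    `shares` is a list of (shared_at, account_created_at) datetime pairs.
    The window length and age cutoff are illustrative assumptions.
    """
    window_start = window_end - window
    recent = [(t, created) for t, created in shares
              if window_start <= t <= window_end]
    if not recent:
        return 0.0
    from_new = sum(1 for t, created in recent if t - created <= max_account_age)
    return from_new / len(recent)

now = datetime(2025, 1, 10, 12, 0)
shares = [
    (now - timedelta(minutes=5), now - timedelta(days=1)),     # new account
    (now - timedelta(minutes=10), now - timedelta(days=2)),    # new account
    (now - timedelta(minutes=20), now - timedelta(days=900)),  # established account
    (now - timedelta(hours=5), now - timedelta(days=3)),       # outside the window
]
ratio = new_account_share_ratio(shares, window_end=now)
print(f"{ratio:.2f}")  # 0.67
```

A high ratio on its own proves nothing, since genuine breaking news also attracts new users. It is most useful as one input among several.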

Emotionally Manipulative Messaging

Destructive digital campaigns frequently appeal to primal emotions like anger, fear, or outrage, thereby promoting impulsive resharing or engagement. Malicious content uses highly charged language, fearmongering statistics, or imagery designed to circumvent rational thought. If online material consistently provokes strong emotional responses without substantiated evidence, it likely fits this pattern.
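As a rough illustration of how charged language might be measured, the sketch below scores a text by the share of its words that appear in a small lexicon. The `CHARGED_TERMS` set is entirely hypothetical and far too small for real use; a practical system would more likely rely on a curated lexicon or a trained classifier.

```python
import re

# Hypothetical, deliberately tiny lexicon used only for illustration.
CHARGED_TERMS = {"outrage", "shocking", "terrifying", "destroy", "betrayal", "urgent"}

def emotional_charge(text):
    """Return the fraction of words in `text` that appear in CHARGED_TERMS."""
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word in CHARGED_TERMS)
    return hits / len(words)

score = emotional_charge("Shocking betrayal! Urgent: share now")
print(score)  # 0.6
```

A word-count score like this cannot see context, sarcasm, or images, so it works best for prioritizing material for human review rather than for labeling it.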

Sensational Headlines and Surprising “Revelations”

Malicious actors craft clickbait: highly sensationalized headlines that oversimplify complex topics, distort facts, or promise shocking revelations. Paired with unverified “leaks” or urgently timed updates, this tactic short-circuits critical thinking and increases click-through and share rates.

Fake or Impersonated Accounts

Bad actors rarely distribute messages through personal channels alone. False profiles often parade as official news, brands, or influencers, lending perceived legitimacy to malicious material. Detection of profile inconsistencies—such as lack of published history, mismatched biographies, disproportionate follower-to-engagement ratios, or recycled images—should prompt skepticism.
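Two of the inconsistencies listed above, thin publishing history and a large following with little engagement, lend themselves to simple checks. This is a sketch under stated assumptions: the `Profile` fields, the function name, and every threshold are invented for illustration and do not correspond to any platform's API.

```python
from dataclasses import dataclass

@dataclass
class Profile:
    # Hypothetical fields; real platforms expose different data.
    followers: int
    posts: int
    avg_engagements_per_post: float

def profile_warning_signs(profile, min_posts=5, min_followers=1000,
                          min_engagement_rate=0.001):
    """Return a list of human-readable warning signs for a profile.

    All thresholds are illustrative assumptions, not established cutoffs.
    """
    signs = []
    if profile.posts < min_posts:
        signs.append("little published history")
    if profile.followers >= min_followers:
        rate = profile.avg_engagements_per_post / profile.followers
        if rate < min_engagement_rate:
            signs.append("large following with very low engagement")
    return signs

suspicious = Profile(followers=50_000, posts=2, avg_engagements_per_post=3.0)
ordinary = Profile(followers=800, posts=120, avg_engagements_per_post=25.0)
print(profile_warning_signs(suspicious))
print(profile_warning_signs(ordinary))
```

Returning a list of reasons rather than a single score keeps the output explainable, which matters when a human has to decide whether skepticism is warranted.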

Evasion of Detection

Every innovation in recognizing dangerous content is met with equal creativity in obfuscation. URL shorteners mask suspicious destinations, intentionally misspelled keywords evade moderation, and images carrying malicious links are passed off as memes or infographics. The consistent use of these tactics reveals not only intent to deceive but also adaptability, which is itself a key behavioral indicator.
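Two of these evasion tactics can be countered with basic normalization. The sketch below checks a URL's host against a short list of shortener domains and undoes common character substitutions before matching watched terms. The host list and substitution table are illustrative assumptions and far from complete.

```python
from urllib.parse import urlparse

# Illustrative, incomplete list of URL shortener hosts.
SHORTENER_HOSTS = {"bit.ly", "t.co", "tinyurl.com", "goo.gl"}

# Common character swaps used to dodge keyword filters (assumed mapping).
SUBSTITUTIONS = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "@": "a", "$": "s"}
)

def uses_shortener(url):
    """Return True if the URL's host is in the shortener list."""
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    return host in SHORTENER_HOSTS

def normalize(text):
    """Lowercase the text and reverse common character substitutions."""
    return text.lower().translate(SUBSTITUTIONS)

def contains_watched_term(text, watched_terms):
    """Return True if any watched term appears in the normalized text."""
    normalized = normalize(text)
    return any(term in normalized for term in watched_terms)

print(uses_shortener("https://bit.ly/3xYz"))  # True
print(normalize("Fr33 pr1z3"))                # free prize
print(contains_watched_term("Claim your FR33 PR1Z3 now", ["free prize"]))  # True
```

The substitution step also rewrites legitimate digits (a year such as 2025 would be altered), so normalized text should be used only for matching, never displayed or stored in place of the original.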

The Most Common Dissemination Tactics Used by Threat Actors

Knowing the specific methods used to spread malicious material makes collective vigilance possible.

Coordinated Sharing and Automated Bots

A staple among the behavioral patterns of malicious content is the use of automation—specifically social media bots, script-based interactions, and bulk-messaging services. Large volumes of content, posted with high frequency and identical language, reflect the handiwork of these programs and those who control them.
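Identical language posted by many accounts is one of the easier signals to surface. The following sketch groups posts by a normalized form of their text and reports any text used by several distinct accounts. The function names and the `min_accounts` threshold are assumptions; exact matching after normalization will also miss paraphrased copies, which need fuzzier similarity measures.

```python
import re
from collections import defaultdict

def normalize_post(text):
    """Lowercase, drop URLs and punctuation, and collapse whitespace."""
    text = re.sub(r"https?://\S+", "", text.lower())
    text = re.sub(r"[^a-z0-9\s]", "", text)
    return " ".join(text.split())

def find_copy_paste_clusters(posts, min_accounts=3):
    """Map each normalized text to the accounts that posted it.

    `posts` is a list of (account_id, text) pairs. Only texts posted by at
    least `min_accounts` distinct accounts are returned (assumed threshold).
    """
    accounts_by_text = defaultdict(set)
    for account, text in posts:
        accounts_by_text[normalize_post(text)].add(account)
    return {
        text: accounts
        for text, accounts in accounts_by_text.items()
        if text and len(accounts) >= min_accounts
    }

posts = [
    ("a1", "Shocking news! Read now: https://x.example/1"),
    ("a2", "shocking news read now https://x.example/2"),
    ("a3", "SHOCKING NEWS!!! Read now"),
    ("a4", "Lovely weather today"),
]
clusters = find_copy_paste_clusters(posts)
print(clusters)
```

Stripping URLs before comparison matters because automated campaigns often vary the link while leaving the surrounding text untouched.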

Hashtag Hijacking and Trend Manipulation

By coordinating posts around trending topics, malicious actors insert harmful content into viral conversations, maximizing reach while masking nefarious intent. Hijacked hashtags not only expose content to unsuspecting groups but also suppress genuine information by flooding digital space with calculated noise.

Shadow Distribution Channels

Not all dissemination unfolds openly. Encrypted messengers, private forums, “dark social” channels (such as direct messages and closed groups), and invite-only discussion platforms have become critical tools for the covert proliferation of dangerous messages. Such tactics complicate traditional detection efforts, which depend on publicly visible activity, and they demand vigilance in less visible digital spaces.

Techniques for Identifying and Counteracting Malicious Content

Recognizing malicious digital signals is crucial for individuals and institutions that want to navigate information safely. Combining careful observation with available technological aids greatly strengthens your defense against sophisticated digital threats.

Develop Critical Digital Literacy

Building personal expertise begins with pausing before resharing. Scrutinizing a message for logical inconsistencies, emotional manipulation, suspiciously fast-trending sources, or missing citations often exposes harmful intentions.

Analyze the Origin and Distribution

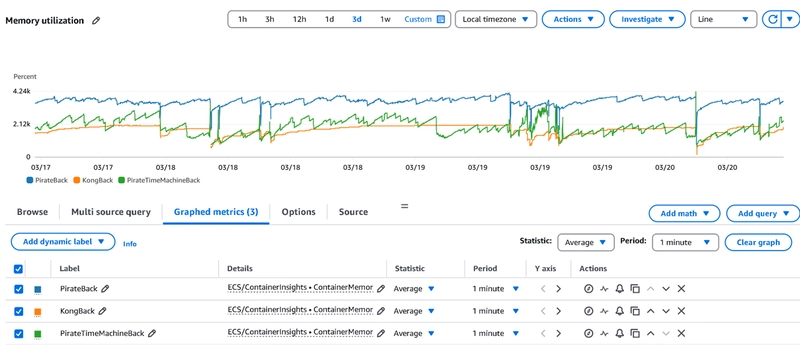

Tools exist that can map content origin, trace information flow, and highlight clusters of suspicious activity. Digital forensics and content analysis can pinpoint where, how, and why particular narratives emerge.
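To make the idea of tracing information flow concrete, the sketch below follows reshare links back to the original post and counts how many posts descend from each origin. The `parent_of` mapping is a hypothetical data structure; real platforms expose reshare relationships in different ways, if at all.

```python
from collections import Counter

def trace_origin(post_id, parent_of):
    """Follow reshare links upward until reaching a post with no parent.

    `parent_of` maps each post ID to the ID it reshared, or None for an
    original post (a hypothetical structure used for illustration).
    """
    seen = set()
    while parent_of.get(post_id) is not None:
        if post_id in seen:
            raise ValueError("cycle detected in reshare data")
        seen.add(post_id)
        post_id = parent_of[post_id]
    return post_id

def count_by_origin(parent_of):
    """Count how many posts (including the original) trace back to each origin."""
    return Counter(trace_origin(post_id, parent_of) for post_id in parent_of)

parent_of = {
    "p1": None, "p2": "p1", "p3": "p2", "p4": "p1",
    "q1": None, "q2": "q1",
}
print(trace_origin("p3", parent_of))  # p1
print(count_by_origin(parent_of))
```

Origins with unusually large or fast-growing counts are natural starting points for closer forensic review.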

Leverage Automated Detection Solutions

Given the complexity of online landscapes, reliable detection relies on technological reinforcement. The harmful content detector offers robust defenses tailored to uncover both overt and subtle forms of malicious behavior, automating much of the heavy lifting formerly left to manual review teams. Leveraging such a tool can help webmasters, IT teams, and communicators identify hazardous information and intercept threats before they spiral out of control.

Foster Organizational Vigilance

Training seminars, internal awareness campaigns, routine updates on digital threat trends, and guided simulation exercises prepare teams for the reality of coordinated manipulation campaigns.

Conclusion: Building Resilience Against Online Malicious Content

From municipal offices safeguarding vital public information to individuals managing personal accounts, everyone can benefit from distinguishing between harmless virality and manipulative digital threats. By mastering the behavioral patterns of malicious content, recognizing contemporary dissemination tactics, and using up-to-date detection technology, organizations and individuals can substantially increase the resilience of information ecosystems. Quiet vigilance backed by education and automation makes the internet a safer and more trustworthy place for all users.
