Google DeepMind reveals details about the future of Gemini & AI

The post Google DeepMind reveals details about the future of Gemini & AI appeared first on Android Headlines.

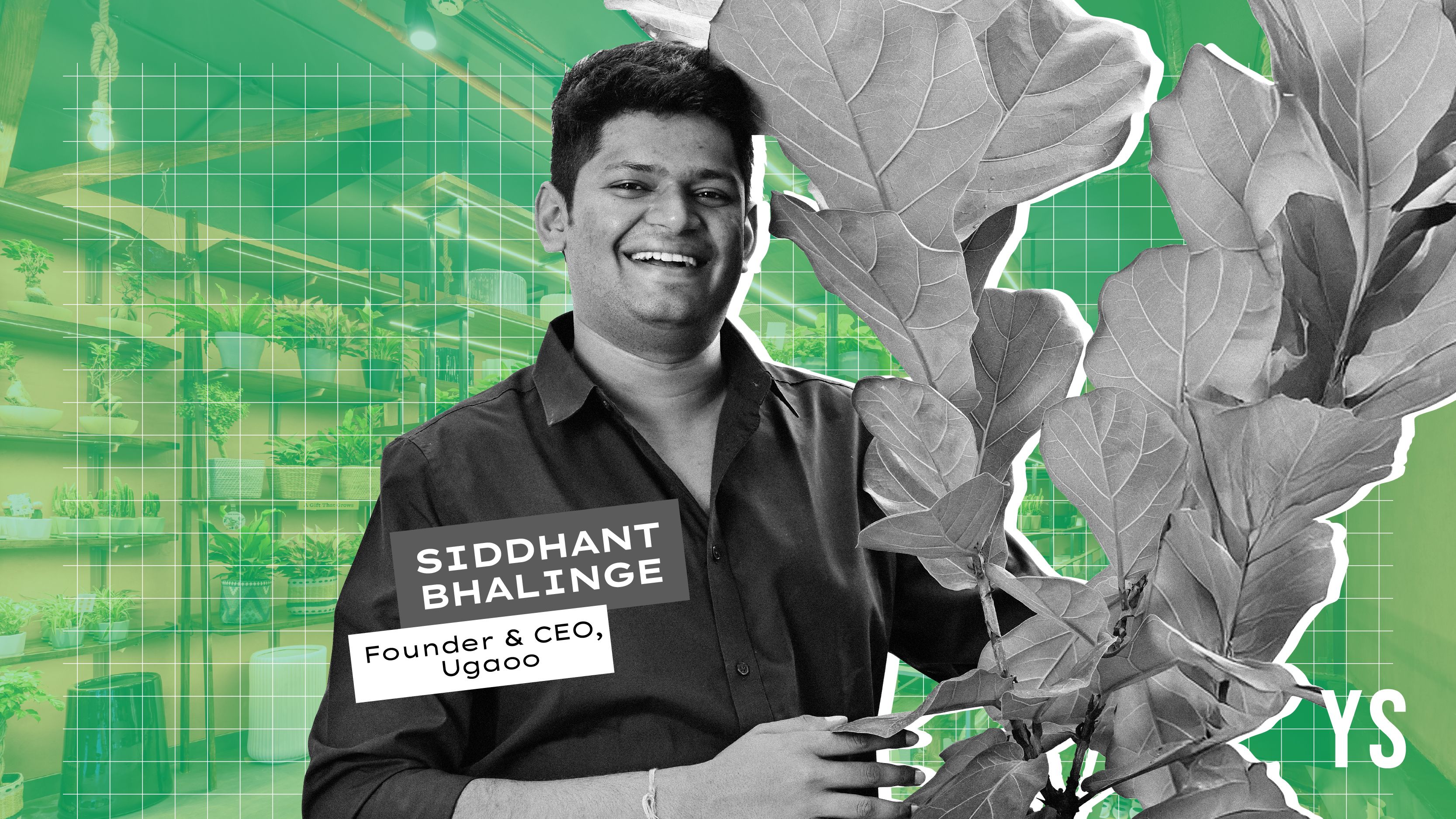

DeepMind, Google’s AI-focused division, is behind many of the company’s most advanced developments, and its artificial intelligence technologies have reached most Google products. However, some projects are still in development and awaiting widespread rollout. Gemini is one of the services that could receive major improvements soon. In an interview with 60 Minutes, Demis Hassabis, CEO of Google DeepMind, revealed more details about the direction the company is taking in the field of AI.

Gemini AI could get major memory improvements, Google DeepMind CEO teases

The interview began with a greeting from Project Astra. “Hi, Scott. Nice to see you again,” said the AI. If you’re not familiar, Project Astra is Google’s advanced, multimodal conversational experience. As the greeting suggests, the AI “remembered” the interviewer from a previous encounter.

Project Astra features have been arriving in Gemini Live. Recently, the chatbot received the long-awaited multimodal capabilities that allow the AI to “see” your surroundings or what’s on your screen. Thanks to this, you can ask Gemini questions about what it is “seeing” in real time. However, remembering previous conversations isn’t something Gemini can currently do. So, how did it remember the interviewer?

The interview revealed that Gemini’s developers have an internal version with a longer memory. It can remember previous conversations and their details, which lets it tailor follow-up questions. Hassabis also revealed that this internal version has a “10-minute memory” for ongoing conversations. These features will likely arrive in Gemini Live at some point, although there’s no ETA for them yet.

The Google DeepMind CEO also revealed that Astra’s AI-powered features can work with smart glasses. Recent reports claimed that Google will launch Samsung-made smart glasses in 2026. If so, those glasses could offer Astra’s advanced capabilities through Gemini.

Gemini to become more “agentic”

Hassabis also offered details about the future of Gemini, the company’s AI assistant. He said that DeepMind is “training its AI model called Gemini to not just reveal the world but to act in it, like booking tickets and shopping online.” So, you can expect “agentic” capabilities in Gemini going forward. Google CEO Sundar Pichai previously teased such capabilities under the name Project Mariner.

Even as Gemini gains agentic features, Hassabis maintains that artificial general intelligence (AGI) is still about 5 to 10 years away. The DeepMind CEO said that by 2030 there will be “a system that really understands everything around you in very nuanced and deep ways and is kind of embedded in your everyday life.”

Is an AI “self-aware” system in development?

60 Minutes’ Scott also asked about a possible “self-aware” system. He seems to be referring to so-called superintelligent AI, a concept that has been talked about a lot in recent months. Hassabis responded that current systems don’t have those capabilities but that their existence is “possible.” Still, he didn’t confirm that Google is working on a similar system.

“I don’t think any of today’s systems, to me, feel self-aware or, you know, conscious in any way. Obviously, everyone needs to make their own decisions by interacting with these chatbots. I think theoretically it’s possible,” he said.

That said, he added that DeepMind could end up with a self-aware AI system, but as a natural consequence of advances in artificial intelligence rather than as a primary goal. He stated that “it may happen implicitly. These systems might acquire some feeling of self-awareness. That’s possible. I think it’s important for these systems to understand you, yourself, and others. And that’s probably the beginning of something like self-awareness.”

DeepMind CEO’s vision of self-aware AI

Lastly, the CEO of Google DeepMind further explained his vision of a self-aware AI system:

“I think there are two reasons why we consider ourselves conscious. One is that you exhibit the behavior of a conscious being, very similar to mine. But the second is that we operate on the same substrate. We’re made of the same carbon matter with our soft brains. Now, obviously, machines run on silicon. So, even if they exhibit the same behaviors, and even if they say the same things, it doesn’t necessarily mean that this sense of consciousness that we have is the same one they will have.”

