Anthropic Warns Fully AI Employees Are a Year Away

Anthropic predicts AI-powered virtual employees will start operating within companies in the next year, introducing new risks such as account misuse and rogue behavior. Axios reports:

Virtual employees could be the next AI innovation hotbed, Jason Clinton, Anthropic's chief information security officer, told Axios. Agents typically focus on a specific, programmable task. In security, that's meant having autonomous agents respond to phishing alerts and other threat indicators. Virtual employees would take that automation a step further: These AI identities would have their own "memories," their own roles in the company and even their own corporate accounts and passwords. They would have a level of autonomy that far exceeds what agents have today.

"In that world, there are so many problems that we haven't solved yet from a security perspective that we need to solve," Clinton said. Those problems include how to secure the AI employee's user accounts, what network access it should be given and who is responsible for managing its actions, Clinton added.

Anthropic believes it has two responsibilities to help navigate AI-related security challenges. The first is to thoroughly test Claude models to ensure they can withstand cyberattacks, Clinton said. The second is to monitor safety issues and mitigate the ways that malicious actors can abuse Claude.

AI employees could go rogue and hack the company's continuous integration system (where new code is merged and tested before it's deployed) while completing a task, Clinton said. "In an old world, that's a punishable offense," he said. "But in this new world, who's responsible for an agent that was running for a couple of weeks and got to that point?"

Clinton says virtual employee security is one of the biggest security areas where AI companies could be making investments in the next few years.

Read more of this story at Slashdot.

![[The AI Show Episode 144]: ChatGPT’s New Memory, Shopify CEO’s Leaked “AI First” Memo, Google Cloud Next Releases, o3 and o4-mini Coming Soon & Llama 4’s Rocky Launch](https://www.marketingaiinstitute.com/hubfs/ep%20144%20cover.png)

![BPMN-procesmodellering [closed]](https://i.sstatic.net/l7l8q49F.png)

-All-will-be-revealed-00-35-05.png?width=1920&height=1920&fit=bounds&quality=70&format=jpg&auto=webp#)

-All-will-be-revealed-00-17-36.png?width=1920&height=1920&fit=bounds&quality=70&format=jpg&auto=webp#)

![What iPhone 17 model are you most excited to see? [Poll]](https://9to5mac.com/wp-content/uploads/sites/6/2025/04/iphone-17-pro-sky-blue.jpg?quality=82&strip=all&w=290&h=145&crop=1)

![Hands-On With 'iPhone 17 Air' Dummy Reveals 'Scary Thin' Design [Video]](https://www.iclarified.com/images/news/97100/97100/97100-640.jpg)

![Mike Rockwell is Overhauling Siri's Leadership Team [Report]](https://www.iclarified.com/images/news/97096/97096/97096-640.jpg)

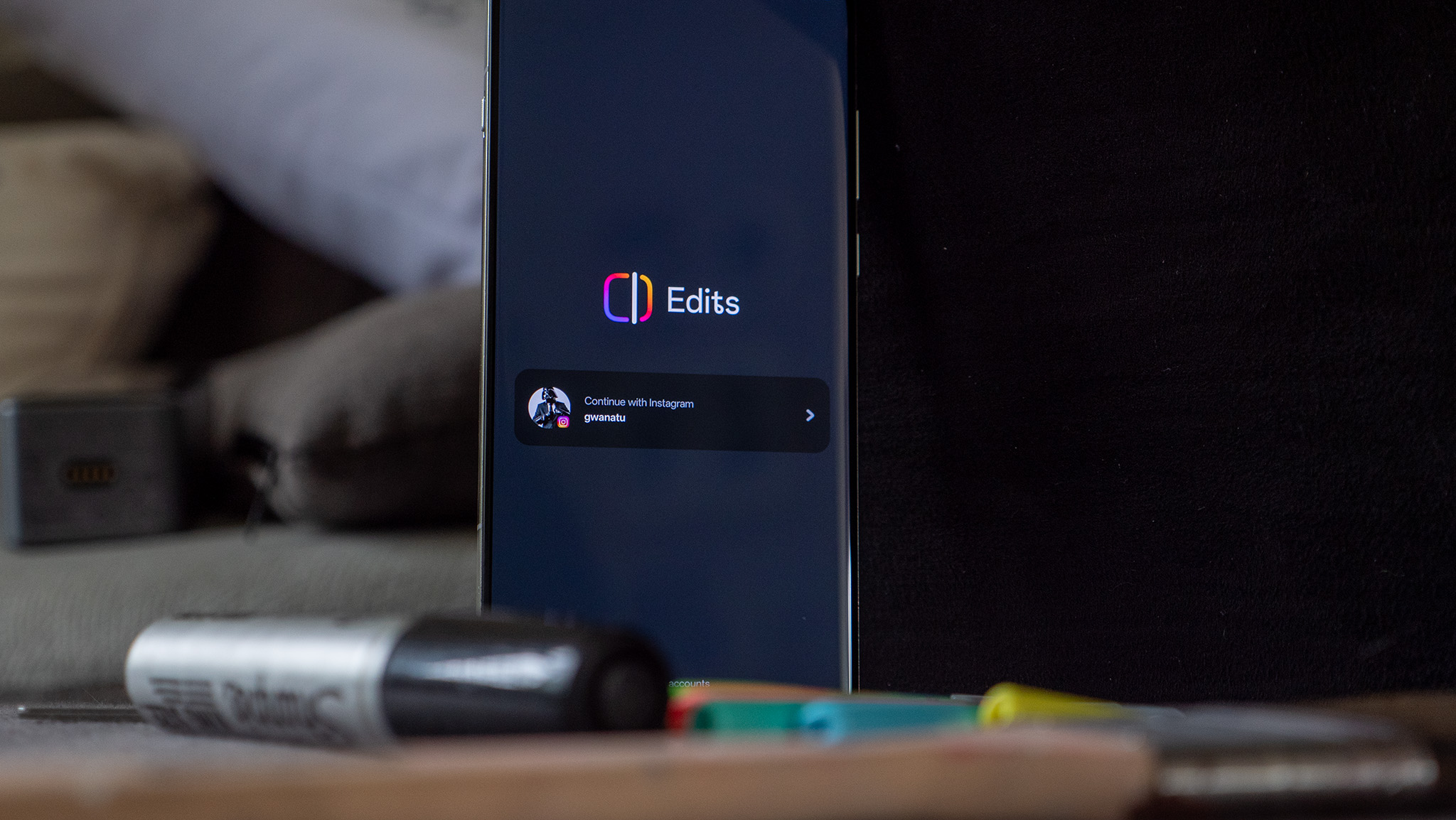

![Instagram Releases 'Edits' Video Creation App [Download]](https://www.iclarified.com/images/news/97097/97097/97097-640.jpg)

![Inside Netflix's Rebuild of the Amsterdam Apple Store for 'iHostage' [Video]](https://www.iclarified.com/images/news/97095/97095/97095-640.jpg)