GenAI-Enabled Glue Job

AWS Glue is now a widely used AWS-native ETL tool. It is a serverless data integration service that simplifies discovering, preparing, moving, and integrating data from multiple sources for analytics, machine learning, and application development. It offers a centralized data catalog, allowing users to manage their data efficiently. With AWS Glue, you can create, run, and monitor ETL (extract, transform, load) pipelines to load data into your data lakes. It supports various workloads, including ETL, ELT, and streaming, and integrates with AWS analytics services and Amazon S3 data lakes. AWS Glue also provides a graphical interface, AWS Glue Studio, for creating and managing data integration jobs visually.

GenAI-Enabled AWS Glue

Generative AI has significantly enhanced AWS Glue by streamlining data integration and boosting developer productivity. With generative AI capabilities integrated, AWS Glue now allows users to build data integration pipelines using natural language: you describe your intent through a chat interface, and AWS Glue generates a complete job for you. This feature, Amazon Q data integration in AWS Glue, is powered by Amazon Bedrock. It helps you create data integration jobs with minimal coding experience, so you can focus on analyzing data rather than on mundane tasks.

Additionally, generative AI helps modernize Spark jobs within AWS Glue and accelerates troubleshooting, reducing the time spent on undifferentiated tasks. This AI-powered assistance provides guidance throughout the data integration lifecycle, making jobs easier to maintain and troubleshoot.

Moreover, generative AI can automatically generate comprehensive metadata descriptions for data assets in the AWS Glue Data Catalog. This automation improves data discoverability, understanding, and overall data governance within the AWS Cloud environment.

These advancements have made AWS Glue more efficient and user-friendly, enabling users to integrate data faster and improve developer productivity.

Solution Overview

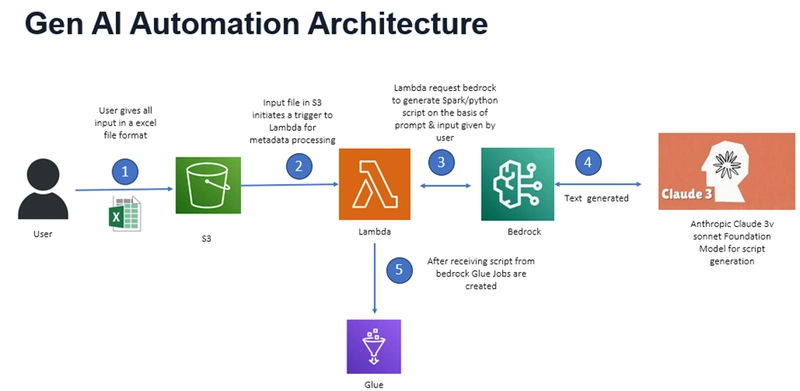

The solution we propose automates Glue job creation using the Anthropic Claude 3 Sonnet model, which generates the job scripts from a prompt provided by the user.

Why the Claude 3 Sonnet model:

It can perform nuanced content creation and accurate summarization, and it can handle complex scientific queries. The model also shows increased proficiency in non-English languages and in coding tasks, with more accurate responses, supporting a wider range of use cases on a global scale.

Prerequisites:

- An IAM role for Lambda that grants access to the services used in the automation, i.e. S3, Bedrock, CloudWatch, and Glue.

- An IAM Glue service role for the Glue job that is created through Lambda.

- An S3 bucket to store the files that Lambda reads.

- A Lambda function containing the automation script, with the necessary layers and configuration.

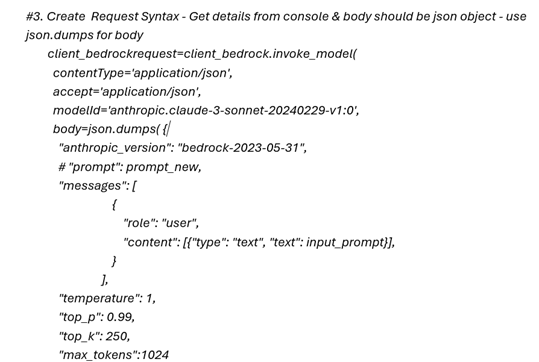

- Access enabled to the Anthropic Claude 3 Sonnet model in Amazon Bedrock.

Data Flow

1) The user fills in an Excel table with the inputs required to create the Glue job (e.g., job name, source data file details, target details, and the transformations to apply to the source data). This Excel file is then uploaded to the S3 bucket.

2) The S3 upload triggers an event that calls the corresponding Lambda function, which starts the Bedrock invocation process.

3) The Bedrock model is invoked using the boto3 invoke_model call, as shown below:

4) The response from Bedrock is used either to create a template for script deployment or directly as an executable script.

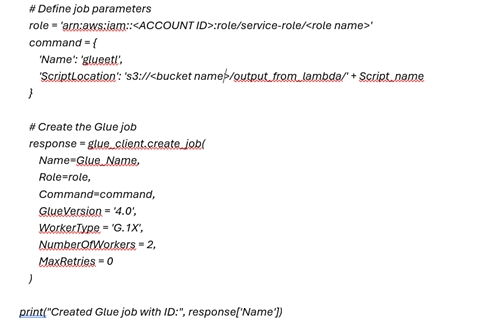

5) Lambda uses the generated template to create the Glue job, using the code snippet shown below:
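Steps 3 and 5 can be sketched roughly as follows. This is a minimal illustration, not the exact script used in the solution: the function names, the `max_tokens` value, the Glue version, and the worker settings are all assumptions, and the script is assumed to be written to S3 before the job is created. The boto3 calls (`invoke_model`, `put_object`, `create_job`) are standard, and the model ID is the Bedrock identifier for Claude 3 Sonnet.

```python
import json


def build_claude_request(prompt, max_tokens=4096):
    """Build the JSON body for a Claude 3 Messages API call on Bedrock."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,  # assumed limit, tune as needed
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]}
        ],
    })


def generate_script(prompt, model_id="anthropic.claude-3-sonnet-20240229-v1:0"):
    """Step 3: invoke the Bedrock model and return the generated text."""
    import boto3  # imported lazily so the pure helpers work without AWS access

    bedrock = boto3.client("bedrock-runtime")
    response = bedrock.invoke_model(
        modelId=model_id,
        body=build_claude_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    return payload["content"][0]["text"]


def build_job_definition(job_name, role_arn, script_s3_uri):
    """Assemble the keyword arguments for glue.create_job (values are assumptions)."""
    return {
        "Name": job_name,
        "Role": role_arn,
        "Command": {
            "Name": "glueetl",
            "ScriptLocation": script_s3_uri,
            "PythonVersion": "3",
        },
        "GlueVersion": "4.0",
        "WorkerType": "G.1X",
        "NumberOfWorkers": 2,
    }


def deploy_glue_job(script_text, bucket, key, job_name, role_arn):
    """Step 5: store the generated script in S3 and create the Glue job."""
    import boto3

    boto3.client("s3").put_object(
        Bucket=bucket, Key=key, Body=script_text.encode("utf-8")
    )
    glue = boto3.client("glue")
    response = glue.create_job(
        **build_job_definition(job_name, role_arn, f"s3://{bucket}/{key}")
    )
    return response["Name"]
```

Generated code should be reviewed, or at least validated, before it is deployed as a job. The model may also wrap its answer in explanatory text that needs to be stripped first.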

Benefits:

1) Cost optimization: 40-50% cost reduction in writing transformation scripts and creating Glue/Lambda jobs.

2) Metadata-driven processing: The user only needs to fill in the metadata document, which contains the input details (source, target, a text prompt describing the required transformation, etc.). Scripts do not need to be altered each time the user input changes.

3) Efficient code generation: We use the anthropic.claude-3-sonnet foundation model available in Amazon Bedrock, which produces efficient code that needs little further optimization.

4) Plug-and-play architecture: There are two main components (an Excel file for metadata input and a Python script executed in Lambda), which can be easily integrated across multi-cloud platforms and into existing delivery environments.

5) Low overhead: Because all components are serverless, maintenance overhead is close to zero.
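To illustrate the metadata-driven processing in benefit 2, the sketch below shows one way the Lambda function might locate the uploaded file from the S3 event and turn a metadata row into a prompt. The column names (`job_name`, `source_path`, and so on) and the prompt wording are hypothetical, and the row is assumed to have already been read from the Excel file into a dictionary (for example with a library such as openpyxl supplied through a Lambda layer).

```python
from urllib.parse import unquote_plus


def s3_object_from_event(event):
    """Return (bucket, key) for the first record of an S3 event notification."""
    record = event["Records"][0]["s3"]
    # Object keys arrive URL-encoded in S3 event notifications.
    return record["bucket"]["name"], unquote_plus(record["object"]["key"])


def build_prompt(row):
    """Turn one metadata row (hypothetical column names) into a model prompt."""
    return (
        "Write a complete AWS Glue PySpark script.\n"
        f"Job name: {row['job_name']}\n"
        f"Source: {row['source_path']} ({row['source_format']})\n"
        f"Target: {row['target_path']} ({row['target_format']})\n"
        f"Transformation: {row['transformation']}\n"
        "Return only Python code."
    )
```

Because the prompt is derived entirely from the metadata row, a new job only requires a new row in the Excel file and no change to the Lambda code.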
