Designing interpretable/maintainable Python code that uses pandas DataFrames?


I write a good amount of Python code that uses pandas DataFrames. One thing I'm really struggling with is how to enforce a "schema" of sorts, or otherwise make it apparent which data fields a DataFrame contains. For example:

Say I have a DataFrame df with the following columns:

customer_id | order_id | order_amount | order_date | order_time |

Now I have a function, get_average_order_amount_per_customer, which just takes the average of the order_amount column per customer, like a group by:

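    import pandas as pd

    def get_average_order_amount_per_customer(df):
        # Select order_amount before aggregating so the non-numeric date/time columns are never averaged
        return df.groupby('customer_id')['order_amount'].mean().to_frame()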

When I look at this function a few weeks from now, I have no idea what is inside this DataFrame other than customer_id and order_amount. I would need to look through the preprocessing steps that use the DataFrame and hope to find other functions that use order_id, order_date, and order_time. This sometimes requires tracing the processing and usage all the way back to the file or database schemas where the data originated. What I would really love is for the DataFrame to be strongly typed, or to have some schema that is visible in the code without printing it out and checking logs, so that I could see which columns it has, rename them if needed, or add a field with a default value, as I would with a class.
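To make the idea of a schema that is "visible in the code" concrete, here is a minimal sketch and not a recommendation of any particular pattern. The names ORDER_COLUMNS and require_columns are hypothetical and were chosen only for illustration; the sample rows are made up.

```python
import pandas as pd

# Hypothetical: the expected columns, declared once where a reader can find them.
ORDER_COLUMNS = (
    "customer_id",
    "order_id",
    "order_amount",
    "order_date",
    "order_time",
)


def require_columns(df, columns=ORDER_COLUMNS):
    """Return df unchanged, or raise ValueError naming any missing columns."""
    missing = [col for col in columns if col not in df.columns]
    if missing:
        raise ValueError(f"DataFrame is missing columns: {missing}")
    return df


# Made-up sample data matching the columns listed in the question.
orders = pd.DataFrame({
    "customer_id": [1, 1, 2],
    "order_id": [10, 11, 12],
    "order_amount": [20.0, 30.0, 5.0],
    "order_date": ["2020-01-01", "2020-01-02", "2020-01-02"],
    "order_time": ["09:00", "10:30", "12:15"],
})
checked = require_columns(orders)
```

This only checks column names, not dtypes, and it does nothing for editor autocompletion, so it covers just part of what I am after.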

With a class, I could just define an Order object with the fields I want, and then check the Order class file to see which fields are available for use.
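As a rough sketch of what I mean by an Order class: the field types and the currency field with its default value are assumptions added purely for illustration, since the real column types are not stated here.

```python
from dataclasses import dataclass, fields
from datetime import date, time


@dataclass
class Order:
    # Field types are assumed for illustration.
    customer_id: int
    order_id: int
    order_amount: float
    order_date: date
    order_time: time
    currency: str = "USD"  # hypothetical extra field with a default value


order = Order(1, 10, 20.0, date(2020, 1, 1), time(9, 0))

# The available fields can be read straight off the class definition.
field_names = [f.name for f in fields(Order)]
```

Everything a reader needs to know about an Order is in one place, which is exactly what I am missing with a DataFrame.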

I can't get rid of DataFrames altogether, because some of the code relies on DataFrames as inputs, e.g. machine learning libraries like scikit-learn, and I also use them for visualization.

Using Python's typing module, I don't think I can name the schema that is inside a DataFrame.

So is there any design pattern or technique I could follow that would let me overcome this ambiguity about a DataFrame's contents?

![[The AI Show Episode 142]: ChatGPT’s New Image Generator, Studio Ghibli Craze and Backlash, Gemini 2.5, OpenAI Academy, 4o Updates, Vibe Marketing & xAI Acquires X](https://www.marketingaiinstitute.com/hubfs/ep%20142%20cover.png)

.jpg?#)

![YouTube Announces New Creation Tools for Shorts [Video]](https://www.iclarified.com/images/news/96923/96923/96923-640.jpg)

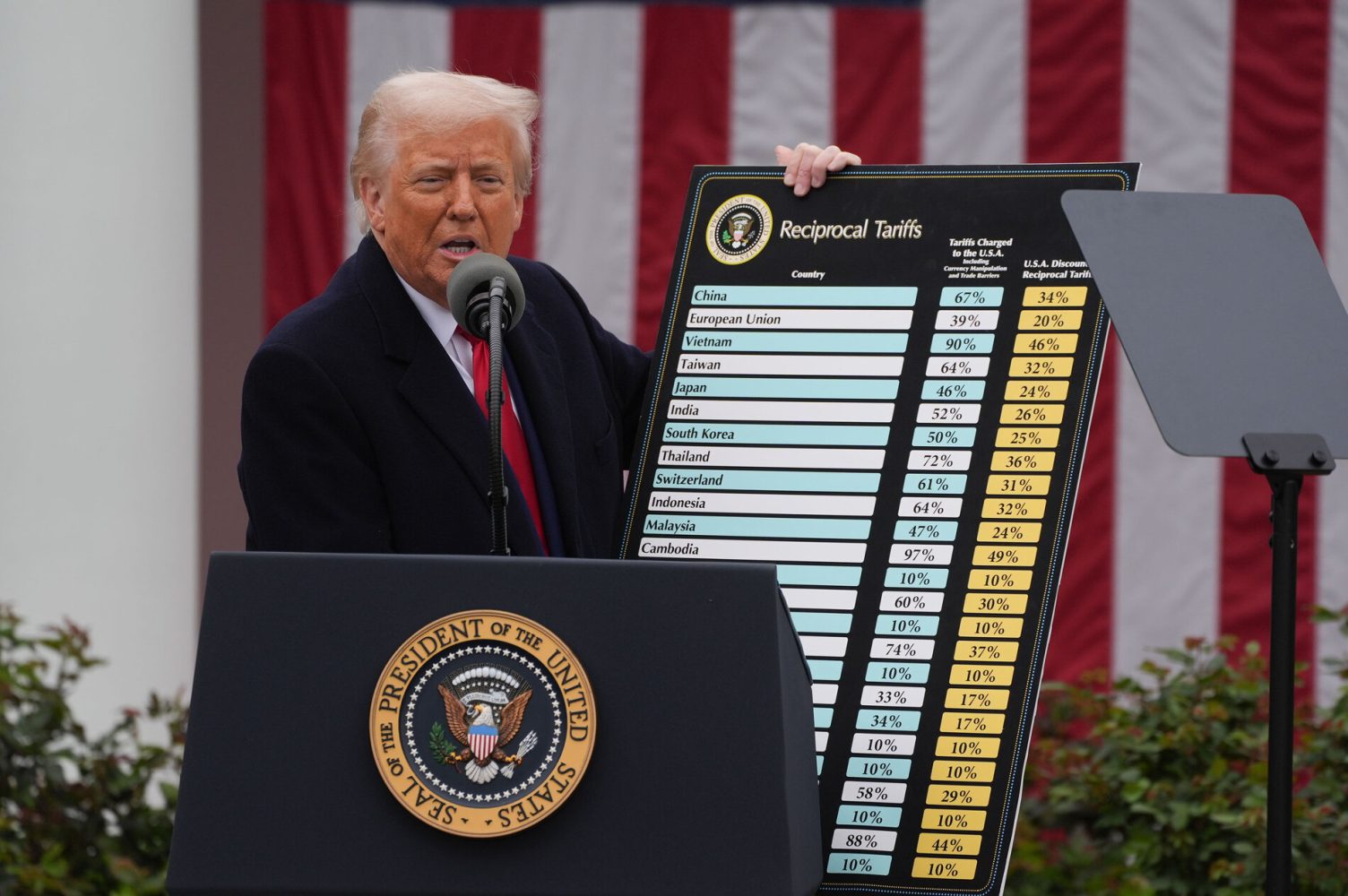

![Apple Faces New Tariffs but Has Options to Soften the Blow [Kuo]](https://www.iclarified.com/images/news/96921/96921/96921-640.jpg)