Deploying an R Model as a Containerised API with Docker, Plumber, and renv


In this post, I’ll walk you through the entire process of building an R model, containerising it as a RESTful API using the Plumber package, and adding detailed logging. We’ll cover every step, including troubleshooting common issues (like missing system libraries) and ensuring reproducibility with renv. By the end of this tutorial, you’ll have a Docker image that serves your model via an API endpoint—ready for local testing or deployment to cloud services like AWS.

You can find the entire code and Dockerfiles in the GitHub repo.

1. Overview & Prerequisites

In this guide, we build a predictive model using the classic iris dataset and train a random forest classifier. We then containerize our R code with Docker and expose a RESTful API via Plumber. We’ll add logging using the logger package and manage dependencies with renv for reproducibility.

Key components include:

- R Model Training: Using randomForest to train on the iris dataset.

- Containerisation: Creating a reproducible R environment using Docker.

- Dependency Management: Using renv to lock package versions.

- Plumber API: Exposing a /predict endpoint with Swagger documentation.

- Logging: Detailed logging of API requests and responses, with logs mounted on the host.

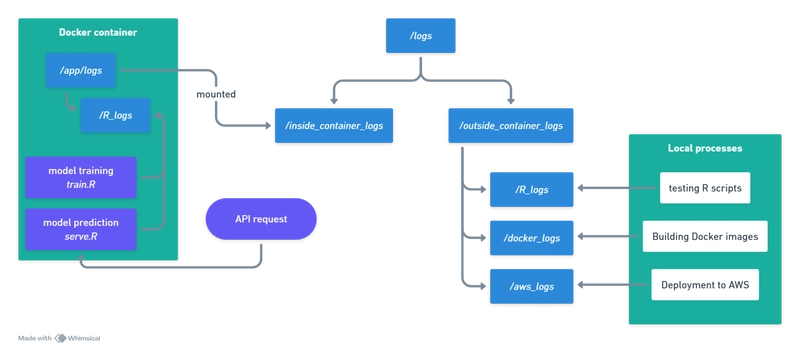

To monitor the deployment workflow, I use a dual logging setup. The logs/outside_container_logs directory captures logs generated during local development: running and testing individual R scripts, building the Docker image, and future deployments. Logs from processes running inside the container go to logs/inside_container_logs on the host, which is mounted at /app/logs inside the container. Keeping the two apart makes it easy to distinguish pre-deployment activity from runtime behavior inside the container, which speeds up troubleshooting in both environments.
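The directory rule described above (write under /app/logs when it exists, otherwise under the host-side directory) is implemented inline in both train.R and serve.R later in this post. If you prefer to keep it in one place, a small helper along these lines could be sourced by both scripts. This is a sketch rather than the code used in this post: log_file_path() and its arguments are hypothetical names, and the default roots simply mirror the paths used in the scripts.

```r
# Sketch only: log_file_path() is a hypothetical helper, not part of train.R or serve.R.
# Returns a timestamped log file path, choosing the container or host directory.
log_file_path <- function(suffix,
                          container_root = "/app/logs",
                          host_root = "logs/outside_container_logs") {
  # Inside the container /app/logs exists (created in the image, mounted from
  # the host); during local development it normally does not.
  root <- if (dir.exists(container_root)) container_root else host_root
  log_dir <- file.path(root, "R_logs")
  # recursive = TRUE also creates missing parent directories
  dir.create(log_dir, recursive = TRUE, showWarnings = FALSE)
  timestamp <- format(Sys.time(), "%Y%m%d_%H%M%S")
  file.path(log_dir, paste0(timestamp, "_", suffix, ".log"))
}

# Example: train.R would use log_file_path("training"),
# serve.R would use log_file_path("request").
```

Passing the roots as arguments keeps the helper testable on a machine that has no /app directory.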

2. Building & Saving an R Model

First, we create a training script (train.R) that trains a random forest model using the iris dataset. We also integrate logging so that we can track the training process.

train.R

# train.R
library(randomForest)
library(logger)

# Choose the log directory based on whether /app/logs exists (inside container) or not (outside)
log_dir <- if (dir.exists("/app/logs")) {
  "/app/logs/R_logs"
} else {
  "logs/outside_container_logs/R_logs"
}

# Ensure the directory exists; create it if necessary
if (!dir.exists(log_dir)) {
  dir.create(log_dir, recursive = TRUE)
}

# Create a timestamp for the log file name
timestamp <- format(Sys.time(), "%Y%m%d_%H%M%S")
log_file <- file.path(log_dir, paste0(timestamp, "_training.log"))

# Configure logger with a unique file name for each run
log_appender(appender_file(log_file))
log_info("Starting model training...")

# Train a random forest model to classify iris species.
# Fixing the seed makes the forest (and therefore the saved model) reproducible.
set.seed(42)
data(iris)
model <- randomForest(Species ~ ., data = iris, ntree = 100)
log_info("Model training complete.")

# Capture the output of print(model) and log it as one multi-line entry
model_output <- capture.output(print(model))
log_info("Model details:\n{paste(model_output, collapse = '\n')}")

# Save the trained model to disk; create data/ first so the script also works outside the container
dir.create("data", showWarnings = FALSE, recursive = TRUE)
saveRDS(model, file = "data/model.rds")
log_info("Model saved to data/model.rds.")

Run this script (e.g., as part of your Docker build) to generate your model and log its training progress.

3. Building the Base Image with System Dependencies and renv

To ensure that the heavy lifting of installing system dependencies and R packages is done only once, we created a base image. This image includes R 4.4.1, necessary system libraries (for packages such as textshaping and ragg), and restores R packages using an renv.lock file.

Dockerfile.base

# Use a specific R base image
FROM r-base:4.4.1

# Install system dependencies required by R packages
RUN apt-get update && apt-get install -y \
    libcurl4-openssl-dev \
    libssl-dev \
    libxml2 \
    libxml2-dev \
    libz-dev \
    libsodium-dev \
    libfontconfig1-dev \
    libfreetype6-dev \
    libharfbuzz-dev \
    libfribidi-dev \
    libpng-dev \
    libtiff5-dev \
    libjpeg-dev \
    build-essential \
    pkg-config \
    wget \
    apt-transport-https \
    ca-certificates \
    && rm -rf /var/lib/apt/lists/*

# Create a directory for your application
WORKDIR /app

# Copy your renv lock file to restore R packages
COPY renv.lock /app/renv.lock

# Install renv and restore packages using renv.lock
RUN R -e "install.packages('renv', repos='http://cran.rstudio.com/'); \
    renv::restore(repos = c(CRAN = 'http://cran.rstudio.com/'))"

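This image (tagged, for example, as r-base-mlops:4.4.1) serves as a stable foundation. Build it from the project root, where renv.lock lives:

docker build -f Dockerfile.base -t r-base-mlops:4.4.1 .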

4. Iterative Model Image for Rapid Development

Building on the base image, we created a lightweight model image that adds only our model code on top of it. This lets us iterate rapidly on the model (or API) without reinstalling the system dependencies and R packages every time.

We’ll create a Docker image that:

- Uses the prebuilt base image (r-base-mlops:4.4.1, which contains R 4.4.1 and the restored packages).

- Copies your scripts into the container and trains the model during the build.

- Exposes the Plumber API on port 8080.

Dockerfile.model

# Use the prebuilt base image that contains R 4.4.1 and all system dependencies & renv packages
FROM r-base-mlops:4.4.1

# Set the working directory
WORKDIR /app

# Create directories for logs and model data
RUN mkdir -p /app/logs/docker_logs /app/logs/R_logs /app/logs/aws_logs
RUN mkdir -p data

# Copy your model scripts into the container
COPY ./R/train.R /app/train.R
COPY ./R/serve.R /app/serve.R

# Copy the runner script to start the API
COPY ./R/run_api.R /app/run_api.R

# Run the training script to generate or update your model (e.g. data/model.rds)
RUN Rscript train.R

# Expose the port on which your Plumber API will run
EXPOSE 8080

# Start the Plumber API using the runner script
CMD ["Rscript", "run_api.R"]

The run_api.R script simply loads serve.R and starts the API:

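# run_api.R
# Build the router from the annotations in serve.R and listen on all
# interfaces (0.0.0.0) so the API is reachable from outside the container
pr <- plumber::plumb("serve.R")
pr$run(host = "0.0.0.0", port = 8080)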

This setup ensures that when you change your model code (in train.R or serve.R), you only need to rebuild the model image, not the base image, for example with:

docker build -f Dockerfile.model -t your_container_image .
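The port is hard-coded to 8080 in both run_api.R and Dockerfile.model. If you later deploy to a platform that tells the container which port to listen on through an environment variable, run_api.R could read it instead. A minimal sketch, assuming a variable named PORT (an assumption; check what your platform actually sets) and a hypothetical helper resolve_port():

```r
# Sketch only: resolve_port() is a hypothetical helper, and the PORT
# variable name is an assumption about the hosting platform.
resolve_port <- function(var = "PORT", default = 8080L) {
  value <- Sys.getenv(var, unset = "")
  # Unset or empty: keep the port used throughout this post
  if (!nzchar(value)) {
    return(default)
  }
  port <- suppressWarnings(as.integer(value))
  # Fail fast on values that cannot be a TCP port
  if (is.na(port) || port < 1L || port > 65535L) {
    stop("Invalid port in ", var, ": ", value)
  }
  port
}

# In run_api.R, the hard-coded port would then become:
# pr <- plumber::plumb("serve.R")
# pr$run(host = "0.0.0.0", port = resolve_port())
```

Note that EXPOSE 8080 in the Dockerfile only documents the port; the mapping that actually makes the API reachable is the one you publish with docker run -p.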

5. Managing R Dependencies with renv

Using renv ensures that your container installs the exact versions of R packages required by your project. renv::snapshot() writes the renv.lock file that Dockerfile.base copies into the image. To set this up locally:

# 1. Initialise renv:
renv::init()

# 2. Install any packages you use (e.g. plumber, randomForest, logger, tidyverse).

# 3. Snapshot the environment:
renv::snapshot()
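Because the base image contains only what renv.lock records, a package your scripts load but that was never snapshotted surfaces later, as a failing Rscript train.R step in the model build or as an error when the API starts. renv::status() is the proper tool for catching this. As a lightweight illustration of the idea, the hypothetical helpers below compare the library() calls in your scripts with the package names in renv.lock, assuming the pretty-printed JSON layout that renv writes (one "Package": "<name>" field per entry):

```r
# Sketch only: these helpers are hypothetical and not part of renv.
# Packages loaded via library(pkg) or library("pkg") in the given files
script_packages <- function(files) {
  code <- unlist(lapply(files, readLines, warn = FALSE))
  pattern <- "library\\([\"']?([A-Za-z0-9.]+)[\"']?\\)"
  hits <- regmatches(code, regexpr(pattern, code))
  sort(unique(sub(pattern, "\\1", hits)))
}

# Package names recorded in a lockfile, read with a simple regex
lockfile_packages <- function(lockfile = "renv.lock") {
  text <- readLines(lockfile, warn = FALSE)
  pattern <- "\"Package\"\\s*:\\s*\"([^\"]+)\""
  hits <- regmatches(text, regexpr(pattern, text))
  sort(unique(sub(pattern, "\\1", hits)))
}

# Packages the scripts load that the lockfile does not record
missing_from_lock <- function(files, lockfile = "renv.lock") {
  setdiff(script_packages(files), lockfile_packages(lockfile))
}
```

For example, missing_from_lock(c("R/train.R", "R/serve.R")) run before building the base image should return character(0); anything else means renv::snapshot() needs to be re-run.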

6. Creating a Plumber API Endpoint

Next, create an API using Plumber. We’ll define an endpoint /predict that:

- Loads the pre-trained model.

- Parses the incoming JSON request.

- Makes a prediction.

- Logs request and response details.

serve.R

# serve.R
library(plumber)
library(randomForest)
library(logger)
library(jsonlite)

# Set up logging
log_dir <- if (dir.exists("/app/logs")) {
  "/app/logs/R_logs"
} else {
  "logs/outside_container_logs/R_logs"
}
if (!dir.exists(log_dir)) {
  dir.create(log_dir, recursive = TRUE)
}

# Create a timestamp for the log file name
timestamp <- format(Sys.time(), "%Y%m%d_%H%M%S")
log_file <- file.path(log_dir, paste0(timestamp, "_request.log"))
log_appender(appender_file(log_file))

# Load the trained model
model <- readRDS("data/model.rds")

#* @filter log_requests
function(req, res) {
  log_info("Incoming request: {req$REQUEST_METHOD} {req$PATH_INFO}")
  log_info("Request body: {req$postBody}")
  plumber::forward()
}

#* @post /predict
#* @serializer json
function(req) {
  data <- fromJSON(req$postBody)
  input_data <- as.data.frame(data)
  pred <- predict(model, input_data)
  log_info("Prediction: {paste(pred, collapse=', ')}")
  list(prediction = as.character(pred))
}

Plumber automatically generates Swagger documentation (accessible at http://localhost:8080/__docs__/) from these annotations.
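As written, /predict passes whatever JSON it receives straight to predict(), so a request with a missing or misspelled field fails inside the model code and typically surfaces as a generic server error. A minimal sketch of up-front validation, assuming a hypothetical helper validate_input() that you would add to serve.R; the wiring into the endpoint is shown as comments:

```r
# Sketch only: validate_input() is a hypothetical helper, not part of serve.R.
# The four feature names are the iris predictors the model was trained on.
required_features <- c("Sepal.Length", "Sepal.Width", "Petal.Length", "Petal.Width")

# Returns NULL when the input is usable, otherwise a short error message
validate_input <- function(input_data) {
  missing_cols <- setdiff(required_features, names(input_data))
  if (length(missing_cols) > 0) {
    return(paste("Missing field(s):", paste(missing_cols, collapse = ", ")))
  }
  is_num <- vapply(input_data[required_features], is.numeric, logical(1))
  if (!all(is_num)) {
    return(paste("Non-numeric field(s):",
                 paste(required_features[!is_num], collapse = ", ")))
  }
  NULL
}

# Possible wiring inside the /predict endpoint (res$status sets the HTTP status):
# #* @post /predict
# #* @serializer json
# function(req, res) {
#   input_data <- as.data.frame(fromJSON(req$postBody))
#   problem <- validate_input(input_data)
#   if (!is.null(problem)) {
#     res$status <- 400
#     return(list(error = problem))
#   }
#   pred <- predict(model, input_data)
#   list(prediction = as.character(pred))
# }
```

Returning a 400 with a readable message tells the client what to fix, and the filter still logs the offending request body.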

7. Testing the API

Using curl

curl -X POST "http://localhost:8080/predict" \
  -H "Content-Type: application/json" \
  -d '{"Sepal.Length": 5.1, "Sepal.Width": 3.5, "Petal.Length": 1.4, "Petal.Width": 0.2}'

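The response body should look like this (Plumber's default JSON serializer returns vectors as arrays):

{"prediction":["setosa"]}

The request log written by serve.R should also contain the line Prediction: setosa.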

Using Postman

- Set the HTTP method to POST.

- Enter the URL: http://localhost:8080/predict.

- Under the Headers tab, add a header with Key Content-Type and Value application/json.

- In the Body tab, select raw and choose JSON from the dropdown.

- Paste the following JSON:

{
  "Sepal.Length": 5.1,
  "Sepal.Width": 3.5,
  "Petal.Length": 1.4,
  "Petal.Width": 0.2
}

- Click Send to view the response.

Using R’s httr Package

library(httr)
library(jsonlite)

url <- "http://localhost:8080/predict"
input <- list(
  Sepal.Length = 5.1,
  Sepal.Width = 3.5,
  Petal.Length = 1.4,
  Petal.Width = 0.2
)

# auto_unbox = TRUE sends scalars (5.1) rather than one-element arrays ([5.1])
response <- POST(
  url,
  body = toJSON(input, auto_unbox = TRUE),
  encode = "raw",
  add_headers("Content-Type" = "application/json")
)

# Parse the JSON response into an R list
result <- content(response, as = "parsed")
print(result)

Troubleshooting

1. Container fails to install a package

If a package fails to install (e.g., textshaping or ragg), check the error message for missing system libraries. Update your Dockerfile.base to install the required development packages (such as libharfbuzz-dev, libfribidi-dev, libpng-dev, libtiff5-dev, and libjpeg-dev).

2. Docker Volume Mount

When running your container, use Docker's -v (or --volume) flag to map a host directory to a container directory. For example, from the project root you could run:

docker run -p 8080:8080 -v "$(pwd)/logs/inside_container_logs:/app/logs" your_container_image

This command mounts your host's logs/inside_container_logs directory into the container at /app/logs. The -p 8080:8080 flag publishes the API port, so the requests from the testing section can reach http://localhost:8080.

After running the container, use docker container inspect to verify that the mount is correctly set, and check the local folder to ensure that logs are being written there.
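To check the local folder from R, a small helper can pick out the newest log file. This is a sketch: latest_log() is a hypothetical name, and its default directory assumes the volume mapping above, under which serve.R's /app/logs/R_logs appears on the host as logs/inside_container_logs/R_logs.

```r
# Sketch only: latest_log() is a hypothetical helper. The default directory
# assumes the -v mapping above (host logs/inside_container_logs -> /app/logs).
latest_log <- function(dir = "logs/inside_container_logs/R_logs") {
  files <- list.files(dir, pattern = "\\.log$", full.names = TRUE)
  # No log files yet (or the directory does not exist): nothing to show
  if (length(files) == 0) {
    return(NA_character_)
  }
  # Pick the file with the most recent modification time
  files[which.max(as.numeric(file.mtime(files)))]
}
```

After sending a test request, tail(readLines(latest_log())) should show the Incoming request, Request body, and Prediction entries. An NA result means nothing has reached the host, which usually points to a problem with the mount.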
