CACHE in NODE

In our previous posts, we explored the garbage collector in V8 and looked at how object sizes and where objects are allocated affect performance. In this article, we go one level deeper into the hardware: specifically, how CPU caches shape performance, and how object sizes affect the efficiency of memory access.

While memory access may seem fast, it is actually quite slow compared to the speed at which the CPU operates. The CPU cache mitigates this by keeping frequently used data close to the core, and understanding how the cache works helps when designing performance-oriented Node.js applications.

CPU Caches: A Brief Overview

Over the years, CPU speed has dramatically outpaced memory speed, which creates a gap when accessing data stored in memory. CPUs today can handle multiple operations simultaneously and even pre-fetch data from memory to stay ahead of execution. However, the real bottleneck occurs when the CPU has to fetch data directly from main memory (RAM), which is slow compared to reading from CPU caches.

Modern CPUs have multiple layers of cache, typically L1, L2, and L3. These caches are closer to the CPU than RAM, both physically and in terms of access speed, and each layer has its own scope and size:

L1 Cache: Fastest and smallest, scoped to a specific CPU core. It’s further divided into two parts: one for data and one for instructions.

L2 Cache: Larger but slower than L1, still scoped to the core.

L3 Cache: Shared among cores, with much larger capacity but slower access time than L1 and L2.

In general, as you move further from the CPU (L1 → L2 → L3 → RAM), the size increases, but the speed decreases.

Cache Latencies

Let’s compare the latency (or delay) involved in accessing different memory levels, from CPU caches to RAM:

L1 Cache: Typically 1 to 3 CPU cycles. Accessing data here is nearly instantaneous.

L2 Cache: Slightly slower, taking 4 to 10 cycles.

L3 Cache: Even slower, with latencies ranging from 10 to 40 cycles.

RAM: Accessing data from main memory takes 60 to 100 cycles or more, which is one to two orders of magnitude slower than accessing data from the L1 cache.

As you can see, accessing data from RAM is significantly slower than accessing it from the CPU cache. A more detailed article about the time it takes to access the data can be read here.
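To make the cycle counts above more tangible, here is a small sketch that converts them to nanoseconds. The 3 GHz clock is an assumption chosen for illustration, and `cyclesToNanoseconds` is a hypothetical helper; the cycle ranges are the approximate figures quoted in this article, not measurements.

```javascript
// Assumption: a 3 GHz core clock. Real clocks vary by CPU and change under load.
const CLOCK_GHZ = 3;

// Approximate cycle ranges quoted in this article (hardware-dependent).
const latencyCycles = {
  L1: [1, 3],
  L2: [4, 10],
  L3: [10, 40],
  RAM: [60, 100],
};

// At f GHz, one cycle lasts 1/f nanoseconds.
function cyclesToNanoseconds(cycles, clockGhz = CLOCK_GHZ) {
  return cycles / clockGhz;
}

for (const [level, [min, max]] of Object.entries(latencyCycles)) {
  const lo = cyclesToNanoseconds(min).toFixed(2);
  const hi = cyclesToNanoseconds(max).toFixed(2);
  console.log(`${level}: ${min}-${max} cycles ≈ ${lo}-${hi} ns`);
}
```

Under that assumed clock, an access at the top of the quoted RAM range costs as much time as 100 accesses at the bottom of the L1 range.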

How Does This Impact Node.js?

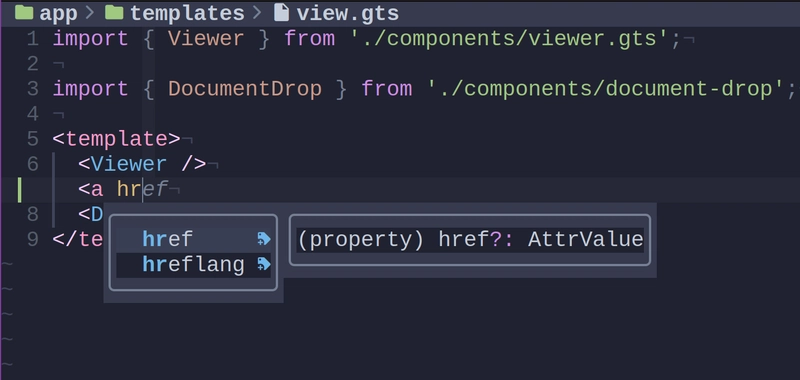

JavaScript objects in Node.js are not always stored in contiguous blocks of memory: an object typically holds references to other heap objects that may live elsewhere in memory. This means that JavaScript’s memory layout is not always cache-friendly, which makes it difficult to fully exploit cache locality. However, for certain large data processing tasks, understanding CPU cache behavior can help us design more efficient algorithms.
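As a rough illustration of the layout difference, the sketch below sums the same values stored two ways: as an array of small objects, where each element is a reference to a separate heap object, and as a `Float64Array`, where the values sit in one contiguous buffer. The names `points`, `xs`, `sumObjects`, `sumTyped`, and `timeIt` are made up for this example. Timings depend heavily on the machine and the V8 version, and freshly allocated objects often end up next to each other in memory, so the gap may be small in a toy benchmark like this one.

```javascript
const COUNT = 1_000_000;

// Layout A: one heap object per element; the array stores references to them.
const points = Array.from({ length: COUNT }, (_, i) => ({ x: i, y: i * 2 }));

// Layout B: the same x values packed into one contiguous buffer.
const xs = new Float64Array(COUNT);
for (let i = 0; i < COUNT; i++) {
  xs[i] = i;
}

// Reads the x field of every object, following one reference per element.
function sumObjects(arr) {
  let sum = 0;
  for (let i = 0; i < arr.length; i++) {
    sum += arr[i].x;
  }
  return sum;
}

// Reads consecutive 8-byte values from the typed array's buffer.
function sumTyped(arr) {
  let sum = 0;
  for (let i = 0; i < arr.length; i++) {
    sum += arr[i];
  }
  return sum;
}

// Times a single call; one run is noisy, so treat the numbers as indicative only.
function timeIt(label, fn) {
  const start = performance.now();
  const result = fn();
  const elapsedMs = performance.now() - start;
  console.log(`${label}: ${elapsedMs.toFixed(2)} ms (sum = ${result})`);
  return result;
}

timeIt("array of objects", () => sumObjects(points));
timeIt("Float64Array", () => sumTyped(xs));
```

Both loops compute the same sum; only the memory layout they walk over differs.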

Example: Iterating Over Large Data Sets

Let’s look at an example where we allocate increasingly larger arrays of integers and compute their sum. As the array grows in size, we can observe how performance is affected when we exceed the cache’s capacity and begin accessing RAM.

    for (let size = 10_000; size <= 1_000_000; size += 10_000) {
        // Allocate a zero-filled byte array of the current size.
        const data = new Uint8Array(size);
        const startTime = performance.now();
        let sum = 0;
        // Read every element sequentially.
        for (let i = 0; i < data.length; i++) {
            sum += data[i];
        }
        const endTime = performance.now();
        // store the size and time in a file
    }

As the size of the array increases, we will eventually see a jump in the time it takes to iterate over the array, beyond the growth expected from simply having more elements to read. This occurs when the data no longer fits in the CPU cache and the processor is forced to read from the much slower main memory.
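Because total time grows with the array size regardless of caching, the effect is easier to spot as time per element. The sketch below is one possible variant of the loop above, not the original measurement script: `nsPerElement` is a hypothetical helper, and the sizes and the pass count are assumptions picked to straddle typical cache capacities. Sequential reads are also helped by hardware prefetching, so the jump may be less pronounced than the raw latency figures suggest.

```javascript
// Hypothetical helper: average nanoseconds per element over several passes.
function nsPerElement(size, passes = 10) {
  const data = new Uint8Array(size).fill(1);
  let sum = 0;

  // Warm-up pass so JIT compilation and first-touch page faults are not timed.
  for (let i = 0; i < data.length; i++) {
    sum += data[i];
  }

  const start = performance.now();
  for (let p = 0; p < passes; p++) {
    for (let i = 0; i < data.length; i++) {
      sum += data[i];
    }
  }
  const elapsedMs = performance.now() - start;

  // 1 ms = 1e6 ns; divide by the total number of elements read while timing.
  return { sum, ns: (elapsedMs * 1e6) / (size * passes) };
}

// Assumed sizes: 16 KiB, 256 KiB, 4 MiB, 32 MiB.
const sizes = [16 * 1024, 256 * 1024, 4 * 1024 * 1024, 32 * 1024 * 1024];

// Print CSV rows that can be redirected to a file for plotting.
console.log("size_bytes,ns_per_element");
for (const size of sizes) {
  const { ns } = nsPerElement(size);
  console.log(`${size},${ns.toFixed(3)}`);
}
```

Returning `sum` alongside the timing keeps the loop's result in use, which reduces the chance that the optimizer treats the reads as dead code.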
