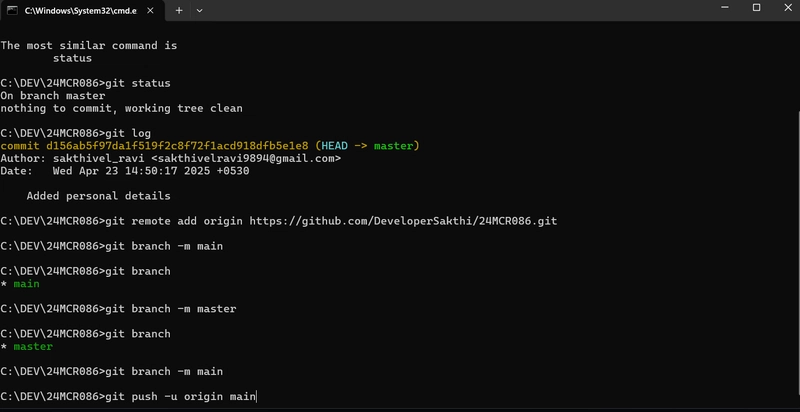

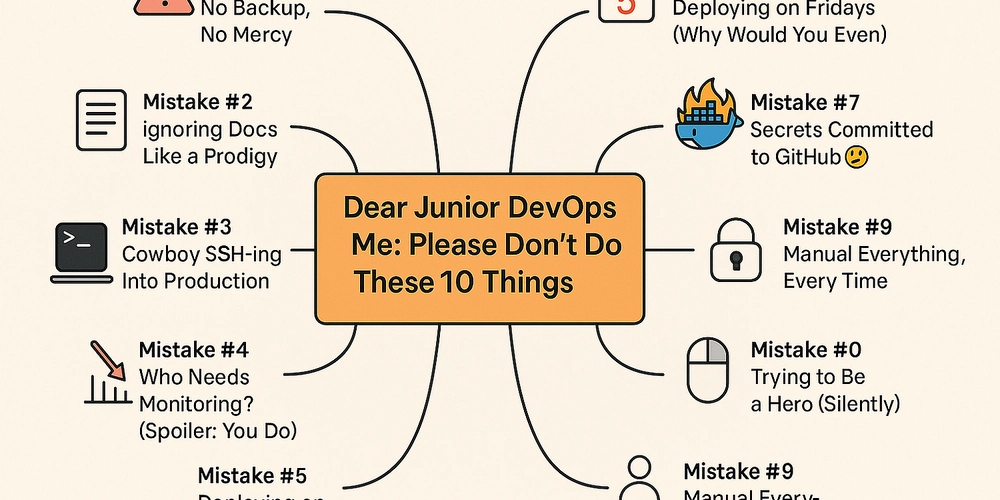

Dear Junior DevOps Me: Please Don’t Do These 10 Things


Introduction

Hey, junior me.

I know you’re excited. You’ve just landed that shiny new DevOps role, and your terminal has never looked sexier. You’ve got htop running in one tab, a Jenkins pipeline halfway through deployment in another, and you just discovered what kubectl does.

You feel unstoppable.

Until, of course, you push to prod on a Friday.

Or delete a critical config file without a backup.

Or hardcode secrets into your repo, thinking no one will notice (spoiler: they did, and so did GitHub’s automated scanners).

This isn’t a guide for perfect engineers. It’s a survival log: 10 mistakes I made that cost time, caused chaos, or just made me feel like I had no idea what I was doing (because I didn’t). Each one comes with the lesson I wish someone had told me back then. Not in a lecture. Just like a teammate pulling me aside and saying: “Hey, don’t do that. Trust me.”

So if you’re new to DevOps, still figuring out what half the logs mean, and learning why deploying on a Friday is basically signing a blood pact with Murphy’s Law, this one’s for you.

Mistake #1: No Backup, No Mercy

It started with a YAML file. Doesn’t it always?

I was tweaking a production Kubernetes config. You know, just a “small change” (red flag #1). I SSH’d into the cluster, opened the file in vim, did my thing, hit save... and something felt off.

Ten minutes later, the entire app was down.

Why? Because that “small change” nuked a critical volume mount path. And guess what?

There was no backup.

No previous version.

No rollback script.

Just me, my broken prod, and the crushing silence of Slack when no one has noticed yet.

At that moment, I learned two things:

- You can go from DevOps to OOPS real fast.

- “I thought someone else had backups” is not a valid disaster recovery strategy.

What I Learned

- Version Control Everything: If it’s not in Git, it doesn’t exist.

- Automate Backups: Set it, test it, forget it. Tools like Velero for Kubernetes or Restic for file-level backups are your best friends.

- Test Restores: A backup that you haven’t tested is just a hope file.

- Set alerts for failed backups: Because if it breaks silently, it might as well not be there.
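Here’s roughly what “back it up, then prove you can restore it” could look like for a single config file. This is a minimal sketch, not what Velero or Restic do internally; the function names `backup_file` and `restore_file` and the `deployment.yaml` path are made up for illustration.

```python
import hashlib
import shutil
import tempfile
import time
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def backup_file(source: Path, backup_dir: Path) -> Path:
    """Copy source into backup_dir under a timestamped name and verify the copy."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%dT%H%M%S")
    target = backup_dir / f"{source.name}.{stamp}.bak"
    shutil.copy2(source, target)
    # An unverified backup is just a hope file, so compare checksums right away.
    if sha256_of(source) != sha256_of(target):
        raise RuntimeError(f"backup of {source} does not match the original")
    return target


def restore_file(backup: Path, destination: Path) -> None:
    """Copy a backup over destination and confirm the bytes match."""
    shutil.copy2(backup, destination)
    if sha256_of(backup) != sha256_of(destination):
        raise RuntimeError(f"restore to {destination} failed verification")


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        config = Path(tmp) / "deployment.yaml"  # hypothetical file
        config.write_text("mountPath: /data\n")
        saved = backup_file(config, Path(tmp) / "backups")
        config.write_text("mountPath: /oops\n")  # the "small change"
        restore_file(saved, config)              # the undo button
        print(config.read_text())
```

The point is the shape, not the tool: take the copy before you touch anything, and actually run the restore path so you know it works.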

Tools You Can Use

- Velero: For Kubernetes cluster backups & restores.

- Restic: A fast, secure, and efficient backup tool.

- Duplicati: Great for encrypted cloud backups.

- GitHub Actions: Automate backup scripts on schedule.

TL;DR

Backups aren’t optional. They’re your undo button in production. Set them up, test them often, and make sure your future self doesn’t hate you.

Mistake #2: Ignoring Docs Like a Prodigy

You know that moment when you think, “Eh, I don’t need to read the docs — I got this.”

Yeah, I lived that moment. A lot.

I once tried to configure a CI/CD pipeline with zero experience in YAML, never having touched the platform before. Spent the entire day debugging a mysterious error that, it turns out, was literally explained in the first three lines of the documentation. I just… didn’t read it.

Why? Arrogance. Impatience. Or maybe just that classic junior dev belief that figuring things out on your own makes you smarter.

Spoiler: it doesn’t. It just makes you slower.

What I Learned

- Docs exist for a reason: They’re not optional. They’re cheat codes.

- The official docs should always be your first stop: Not Stack Overflow. Not some 3-year-old blog post. The actual docs.

- Learn to search docs efficiently: Ctrl + F is your best friend.

- Contribute to docs when you find gaps: It’s a power move and good karma.

Docs Worth Bookmarking

- Kubernetes Docs

- Docker Docs

- Terraform Docs

- GitHub Actions Docs

- AWS Documentation

Bonus: Tools like Dash (Mac) or DevDocs (browser-based) make browsing docs faster, and both work offline.

TL;DR

Being a DevOps engineer isn’t about memorizing everything; it’s about knowing where to look. RTFM isn’t just a meme. It’s survival.

Mistake #3: Cowboy SSH-ing Into Production

There was a time not so long ago when I thought I was a DevOps gunslinger.

Something broke in production? I’d whip open a terminal, SSH straight into the server, and start debugging like a hero in a hacker movie. Top, tail, vim, restart service. Boom. Fixed.

At least… until it wasn’t.

One day, I manually “fixed” a service by tweaking something in /etc directly on the prod box. It worked. But I forgot to replicate the change in code or config. A week later, another deploy wiped it out. Guess what broke again?

That’s when I learned: if it’s not reproducible, it’s a liability.

What I Learned

- Never treat production like your dev machine: It’s not a playground, it’s sacred ground.

- Infrastructure as Code (IaC) is your safety net: Your setup should live in Git, not in your memory.

- CI/CD should handle deployments, not your hands: The fewer buttons you press, the fewer chances you have to break something.

- Auditability matters: If you can’t trace a change, it doesn’t exist.

Tools to Be Less Cowboy, More Engineer

- Terraform: Define infra in code.

- Ansible: Automate configurations and setups.

- GitHub Actions / GitLab CI/CD: Set up safe, repeatable pipelines.

- Bastion Hosts: If you must SSH, make it secure and auditable.

TL;DR

Manual fixes in production might work once, but they’re time bombs waiting to explode later. Script it, pipeline it, version it, or expect to relive the same fire.

Mistake #4: “Who Needs Monitoring?” (Spoiler: You Do)

Early in my DevOps career, I was weirdly confident that if something broke, I’d just know.

Narrator: He would not just know.

There was this one time our staging environment silently died overnight. No alerts, no metrics, no logs being collected. I only found out because a product manager casually asked, “Hey, is staging supposed to be a blank page right now?”

Turns out, a pod had been OOMKilled for 6 hours. And we were flying blind because I hadn’t set up any monitoring or alerting.

It wasn’t that I didn’t want observability. I just thought it was something we’d “set up later.”

Spoiler: Later is always too late.

What I Learned

- If you can’t see it, you can’t fix it: Monitoring isn’t an add-on; it’s core infrastructure.

- Set up alerts before you need them: No one enjoys a 3 AM PagerDuty ping… but it’s better than a client call.

- Track metrics and logs: Metrics tell you what’s wrong. Logs tell you why.

- Dashboards aren’t just pretty charts: They’re your early warning system.
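To make “set up alerts before you need them” a bit more concrete, here’s a toy version of a threshold alert: fire only when a metric stays above a limit for several samples in a row, so one spike doesn’t page anyone. It’s a sketch of the idea, not the Prometheus API; `AlertRule`, `firing_alerts`, and the `memory_ratio` metric name are all invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertRule:
    name: str          # label shown when the alert fires
    metric: str        # which metric series to inspect
    threshold: float   # fire when samples are strictly above this value
    for_samples: int   # consecutive samples required (assumed >= 1)


def firing_alerts(rules: list[AlertRule], samples: dict[str, list[float]]) -> list[str]:
    """Return names of rules whose most recent samples all exceed the threshold.

    `samples` maps a metric name to its readings, oldest first.
    """
    fired = []
    for rule in rules:
        recent = samples.get(rule.metric, [])[-rule.for_samples:]
        enough_data = len(recent) == rule.for_samples
        if enough_data and all(value > rule.threshold for value in recent):
            fired.append(rule.name)
    return fired


if __name__ == "__main__":
    rules = [AlertRule("HighMemory", "memory_ratio", 0.9, 3)]
    readings = {"memory_ratio": [0.5, 0.95, 0.97, 0.99]}
    print(firing_alerts(rules, readings))
```

A rule like this would have flagged that staging pod creeping toward its memory limit well before a product manager found the blank page.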

Tools for Better Observability

- Prometheus: Powerful metrics and time-series alerting.

- Grafana: Dashboards that actually make sense.

- Loki: Lightweight log aggregation.

- ELK Stack: Logs from everywhere, searchable in one place.

- Datadog, New Relic, Sentry: Paid, but excellent full-stack solutions.

TL;DR

Monitoring is not optional. It’s your first line of defense. Set up metrics, logs, and alerts before things go wrong, not after.

Mistake #5: Deploying on Fridays (Why Would You Even?)

It was a beautiful Friday afternoon.

Pull request ✅

Tests ✅

Pipeline ✅

Me: “Looks good to me, let’s ship it before the weekend!”

Cue dramatic music.

That innocent-looking deployment spiraled into an emergency rollback, a broken dependency, and me in a hoodie staring at logs at 2 AM Saturday — with a pizza box and existential dread as company.

What caused it? One minor config issue.

Why was it a nightmare? Because nobody wants to debug prod at 10 PM on a Friday. Not you. Not your manager. Not even the cloud provider.

What I Learned

- Friday deploys are cursed: Murphy’s Law thrives in weekend push scenarios.

- Time matters: Deploy when the team is around to spot and fix things fast.

- Mitigation > Bravado: Use release strategies that let you fail safely.

Smart Alternatives to “YOLO Deploys”

- Feature Flags: Deploy code without activating it. Toggle it on when ready.

- Canary Releases: Roll out to a small % of users first. Watch for errors.

- Blue-Green Deployments: Switch traffic between two environments for zero-downtime rollouts.

- Rollback Scripts: If all else fails, get back to a safe state fast.
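Feature flags and canary releases often share one small trick: hash each user into a stable bucket, then turn the feature on for buckets below a percentage. Here’s a minimal sketch of that trick. It isn’t how LaunchDarkly or Argo Rollouts are implemented, and `in_rollout` and the `new-checkout` flag name are hypothetical.

```python
import hashlib


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """Decide whether a user is inside a percentage rollout for a feature.

    The same (feature, user_id) pair always lands in the same bucket, so a
    user does not flip in and out as the percentage is raised.
    """
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return bucket < percent


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(1000)]
    exposed = sum(in_rollout(u, "new-checkout", 5) for u in users)
    print(f"{exposed} of {len(users)} users see the canary")
```

Start at a small percentage, watch the error rate, and raise the number only when things look healthy. Setting it back to 0 is your rollback.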

Tools That Help You Not Suffer

- LaunchDarkly or Flagsmith: Feature flagging at scale.

- Argo Rollouts: Kubernetes-native progressive delivery.

- Spinnaker: Advanced release strategies.

- GitOps: Treat deploys like Git commits. Revert easily.

TL;DR

Deployments don’t care that it’s Friday. They’ll still break things. Unless you’re fully confident in your rollback, save it for Monday. Future You will thank you.

Mistake #6: My Dockerfile Was a Dumpster Fire

When I first learned Docker, I thought it was magic. And like every junior wizard with a new wand, I promptly abused it.

My first Dockerfile was… a mess.

I installed everything inside it — Python, Node, curl, unzip, random dependencies I “might need later,” even a GUI package (don’t ask).

The result? A 4.2GB container image that took ages to build, failed half the time, and needed a strong Wi-Fi signal just to push to the registry.

I even had COPY . . with no .dockerignore. Hello, node_modules and secret config files.

It worked, sure. But it was the DevOps version of duct-taping a server together.

What I Learned

- Your Dockerfile is your app’s blueprint: Keep it lean and readable.

- Use a `.dockerignore`: It’s the `.gitignore` of Docker. Keeps the junk out.

- Leverage caching layers: Order your commands right so you don’t break the cache with every change.

- Use multi-stage builds: Compile in one stage, run in another. Smaller, safer images.
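Before blaming Docker for a slow build, it helps to see what `COPY . .` would actually drag in. The sketch below walks a directory, totals its size, and flags a few entries that usually belong in `.dockerignore`. The `RISKY_NAMES` set and the `audit_build_context` helper are my own assumptions for illustration, and this does not parse or apply real `.dockerignore` rules.

```python
import os
import tempfile
from pathlib import Path

# Names that usually should not end up inside an image (an illustrative list).
RISKY_NAMES = {"node_modules", ".git", ".env"}


def audit_build_context(root: Path) -> dict:
    """Return the total size in bytes under root and any risky entries found."""
    total = 0
    risky = []
    for current, dirs, files in os.walk(root):
        for name in dirs + files:
            if name in RISKY_NAMES:
                risky.append(str((Path(current) / name).relative_to(root)))
        for name in files:
            total += (Path(current) / name).stat().st_size
    return {"total_bytes": total, "risky": sorted(risky)}


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "app.py").write_bytes(b"print('hi')")
        (root / ".env").write_bytes(b"API_KEY=not-a-real-key")
        (root / "node_modules").mkdir()
        (root / "node_modules" / "lib.js").write_bytes(b"module.exports = {};")
        print(audit_build_context(root))
```

If the risky list isn’t empty, that’s your cue to write the `.dockerignore` before the next build.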

Tools to Clean Up Your Containers

- Dive: Analyze and explore Docker image layers.

- Hadolint: Linter for Dockerfiles. It’ll yell at you (in a good way).

- DockerSlim: Minifies container sizes drastically.

- BuildKit: Faster and smarter builds.

TL;DR

Your container should not be heavier than your codebase. Clean your Dockerfiles. Use caching. And for the love of DevOps, stop copying the whole repo.

Mistake #7: Secrets Committed to GitHub

This one still haunts me.

I was working on a new feature and needed to test an API integration. I dropped the API key into a .env file.

Then I ran git add .

Then I committed.

Then I pushed to a public repo.

I didn’t notice. But GitHub did — and I got the dreaded email:

⚠️ We’ve detected a secret key in your public repository…

I panicked. I revoked the key. Rotated the secrets. Deleted the commit. Rewrote history with git filter-branch.

But it was too late — the key had been scraped within minutes.

It was the moment I realized: Git doesn’t forget, and the internet never sleeps.

What I Learned

- Secrets do not belong in code. Ever. Not in `.env`, not in `config.json`, not even by “accident.”

- Use environment variables and keep them out of version control.

- Use secret management tools that encrypt and rotate automatically.

- Scan your repos before pushing — always.
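Two of those lessons fit in a few lines of code: read secrets from the environment at runtime, and scan text for things that look like keys before it leaves your machine. This is a rough heuristic sketch, nowhere near what git-secrets or TruffleHog do. The `AKIA` prefix pattern is the commonly cited shape of an AWS access key ID, the generic pattern is my own guess and will produce false positives, and the function names are hypothetical.

```python
import os
import re

# Rough patterns; real scanners use many more rules plus entropy checks.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "generic_assignment": re.compile(
        r"\b(api[_-]?key|secret|token|password)\b\s*[:=]\s*['\"]?[^\s'\"]{8,}",
        re.IGNORECASE,
    ),
}


def find_suspected_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for every line that looks like a secret."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                hits.append((number, name))
    return hits


def require_env(name: str) -> str:
    """Read a secret from the environment instead of hardcoding it."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"missing required environment variable: {name}")
    return value


if __name__ == "__main__":
    # AKIAIOSFODNN7EXAMPLE is the placeholder key used in AWS documentation.
    staged = 'AWS_KEY = "AKIAIOSFODNN7EXAMPLE"\nname = "demo"\n'
    for line_number, kind in find_suspected_secrets(staged):
        print(f"line {line_number}: looks like {kind}")
```

Wire a check like this (or, better, one of the real tools below) into a pre-commit hook so the scan happens before `git push`, not after the email.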

Tools to Avoid Leaking Secrets

- git-secrets: Scans commits for keys.

- TruffleHog: Finds secrets across history.

- Talisman: Pre-commit hook to prevent sensitive file commits.

- Doppler, Vault, AWS Secrets Manager: Store, manage, and rotate secrets securely.

TL;DR

A leaked secret isn’t just a mistake; it’s a security incident. Treat your credentials like passwords: hidden, protected, and never versioned.

Mistake #8: Logs? What Logs?

In my early DevOps days, I treated logs like junk drawer contents. They were there, technically — scattered across different servers, written in different formats, and living in the dark like forgotten monsters.

Whenever something broke, I’d SSH into a server and run:

`tail -f /var/log/whatever.log`

…and hope.

If that file had nothing useful? On to the next one. Rinse and repeat until I found the issue — or gave up and restarted the service, hoping it would fix itself (it usually didn’t).

There was no log aggregation, no search, no alerts. Just vibes and grep.

What I Learned

- Centralized logging is not a “nice to have”: it’s a lifeline.

- Standardize your log formats: JSON, structured logging, whatever you choose, just be consistent.

- Logs should be searchable: scrolling endlessly is not troubleshooting.

- Set up log alerts: don’t wait for someone to tell you something’s broken.
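“Standardize your log formats” sounds abstract until you see how little code it takes. Here’s a minimal sketch using Python’s standard `logging` module to emit one JSON object per line, which is the kind of output log collectors can index without custom parsing. The field names (`ts`, `level`, `logger`, `message`) and the `build_logger` helper are my own choices, not a standard.

```python
import io
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "ts": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def build_logger(name: str, stream) -> logging.Logger:
    """Create a logger that writes JSON lines to the given stream."""
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger = logging.getLogger(name)
    logger.handlers.clear()   # avoid duplicate handlers on repeated calls
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep output out of the root logger
    return logger


if __name__ == "__main__":
    buffer = io.StringIO()
    log = build_logger("payments", buffer)
    log.error("charge failed for order %s", "A-17")
    print(buffer.getvalue(), end="")
```

Once every service logs in the same shape, searching by `level` or `logger` across all of them becomes a query instead of an SSH tour.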

Tools for Log Nirvana

- ELK Stack (Elasticsearch, Logstash, Kibana): The classic logging powerhouse.

- Loki + Grafana: Lightweight log aggregation with killer dashboards.

- Fluentd: Log collector that works across many systems.

- CloudWatch Logs: For AWS folks who love pain (but still useful).

- Logtail: Sleek cloud-based logging with instant setup.

TL;DR

If you’re still tailing individual files to debug production issues, you’re living in log darkness. Centralize, structure, and search your logs — because production doesn’t have time for grep -r doom.

Mistake #9: Manual Everything, Every Time

I used to do everything manually.

Spin up EC2 instance? Console.

Configure security groups? Click.

Set up a new service? Click… click… scroll… click.

Every task was a mini adventure through a UI maze. It felt fast at first. But here’s the thing about ClickOps:

It doesn’t scale.

It doesn’t document itself.

And it absolutely doesn’t forgive.

One day, I had to replicate our exact staging environment from scratch. I thought, “No problem, I’ll just do what I did before.”

Except I didn’t remember everything I did before.

And guess what? The new environment had a weird bug — all because of one misconfigured setting I couldn’t trace.

What I Learned

- Manual = Unreliable: If you can’t recreate it in code, you can’t trust it.

- Automation is your backup brain: Scripts never forget a step.

- Infrastructure as Code (IaC) saves time, prevents errors, and gives you version control over your setup.

- Documentation through code is documentation that actually stays updated.
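The core idea behind IaC tools is simple enough to sketch: describe the state you want, compare it with what exists, and let code work out the difference. The toy `plan` function below does only that comparison on plain dictionaries. It is an illustration of the concept, not how Terraform or Pulumi work internally, and the resource names are invented.

```python
def plan(desired: dict, actual: dict) -> dict:
    """Compare desired and actual resources and list what would change."""
    return {
        "create": sorted(name for name in desired if name not in actual),
        "update": sorted(
            name for name in desired
            if name in actual and desired[name] != actual[name]
        ),
        "delete": sorted(name for name in actual if name not in desired),
    }


if __name__ == "__main__":
    # What the code in Git says should exist (hypothetical resources).
    desired = {"web": {"replicas": 3}, "cache": {"size": "small"}}
    # What someone clicked together in the console last quarter.
    actual = {"web": {"replicas": 2}, "old-worker": {"replicas": 1}}
    print(plan(desired, actual))
```

That one misconfigured setting I couldn’t trace would have shown up in the `update` list instead of hiding in my memory.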

Tools to Break Up with ClickOps

- Terraform: Define, deploy, and version cloud infra.

- Ansible: Automate setup and config across servers.

- Pulumi: IaC using real programming languages.

- AWS CloudFormation: Great if you live entirely in AWS land.

- Bonus: Even `bash` scripts and Makefiles are better than clicking 30 times and hoping for the best.

TL;DR

Manual setups are fragile and unrepeatable. Automate everything you can, and let your code speak louder than your clicks.

Mistake #10: Trying to Be a Hero (Silently)

When I was new to DevOps, I had this idea in my head:

Real engineers figure things out on their own.

So when I got stuck, I didn’t ask for help. I buried myself in logs, docs, Stack Overflow threads from 2013, and spiraled deeper into confusion. Hours passed. Then days.

The worst part? Half the time, the solution was something simple: a flag I missed, a missing semicolon, a permissions issue.

Something a teammate could’ve pointed out in ten seconds.

But I was too worried about looking inexperienced to say:

“Hey, can someone take a look at this with me?”

What I Learned

- Not asking for help wastes time instead of saving face: There’s no prize for suffering in silence.

- Even seniors ask for help: The best engineers are the ones who debug as a team.

- Pair programming is a cheat code: Especially for complex systems or unknown territory.

- Asking early saves more time than googling forever: Don’t be your own blocker.

Culture Tools to Lean On

- Slack or Teams? Ask in public channels. Others learn from the answers too.

- Daily standups? Bring up blockers early.

- Use tools like Tuple or VS Code Live Share to pair on issues.

- Write up what you learned afterward. It helps others and builds your internal rep.

TL;DR

You don’t need to be a solo hero. DevOps is a team sport. Ask, collaborate, and learn faster, and you’ll get stronger way quicker than going solo in the logs.

Conclusion: From Chaos to Confidence

If there’s one thing I’ve learned from all these mistakes, it’s this: DevOps is less about being perfect, and more about building systems that forgive imperfection.

When I started out, I thought making mistakes meant I wasn’t good enough. But the truth is, every incident, every broken deploy, every sweaty-palmed rollback was part of leveling up.

You don’t become a senior by avoiding mistakes. You get there by surviving them, learning from them, and then helping the next person avoid them.

So to my younger self, and to anyone reading this who’s in those early days, here’s the real lesson:

- Break things (just not prod).

- Ask questions early.

- Automate everything.

- Read the docs.

- And for the love of uptime, never deploy on a Friday.

These 10 mistakes made me a better engineer. They gave me scar tissue that now reads like documentation. And if you can dodge even one of them because of this post?

That’s a win in my book.

Keep learning. Keep breaking (safely). And keep shipping.
