Codex CLI – Adding Azure OpenAI to Codex by using… Codex!


You might have missed it amid the launch of the new models o3 and o4‑mini, but OpenAI also announced Codex, a lightweight, open‑source coding agent that runs entirely in your terminal.

Because Codex is open source, it immediately caught my attention. Three questions sprang to mind:

- How well does it work with the latest frontier models?

- How does it work under the hood—how complex is an agent like this?

- How easy is it to adapt the code and make it enterprise‑ready by connecting it to an Azure OpenAI endpoint?

I’ll tackle each of these in the sections below.

What exactly is Codex?

Originally “Codex” referred to an early OpenAI code‑generation model, but the name has now been repurposed for an open‑source CLI companion for software engineers.

https://github.com/openai/codex

In practice, Codex is a coding assistant you run from your terminal. You can:

- Ask questions about your code base

- Request suggestions or refactors

- Let it automatically edit, test, and even run parts of your project—safely isolated inside a sandbox to prevent unwanted commands or outbound connections

If you’ve used tools like Cursor, Claude Code, or GitHub Copilot, you’ll feel right at home. What sets Codex apart is its fully open‑source nature and its simple CLI interface, which makes it easy to integrate into existing workflows.

Extending Codex with… Codex!

During the launch stream the presenters hinted that you could modify Codex using Codex itself. Challenge accepted! My goal: wire Codex to an enterprise‑ready Azure OpenAI deployment—using only Codex.

Setting up

- Clone the repo and install the latest release.

- Export your OpenAI API key as OPENAI_API_KEY.

- Run Codex in the directory you want to target.

At the time of writing, the CLI defaulted to the gpt‑4o model, which is brilliant for many tasks but can struggle with very large code bases. I switched to o4‑mini immediately (more recent versions default to o4‑mini). To set the model yourself, add this to ~/.codex/config.yaml:

model: o4-mini # default model

Or pass it ad hoc on the command line:

codex -m o4-mini

Finding the hook

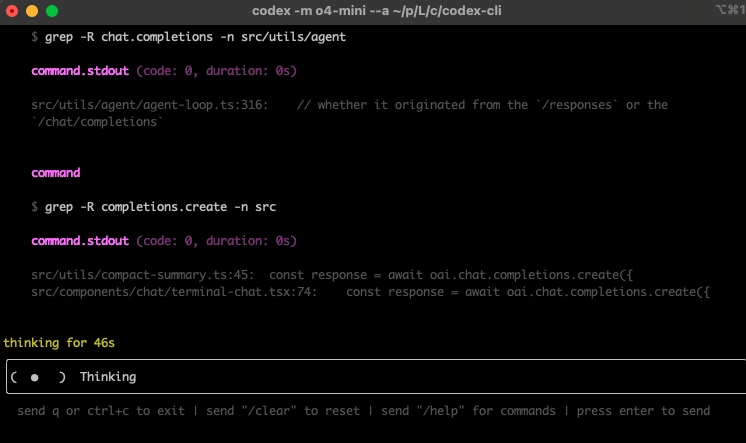

After a few exploratory questions I located the heart of the system: agent-loop.ts. That’s the place to add Azure OpenAI support. But manual edits were slow, so I relaunched Codex in full‑auto mode:

codex -m o4-mini --approval-mode full-auto

Then I asked it to update the code so that, when a specific environment variable is present, it calls my Azure OpenAI endpoint instead of api.openai.com.
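To make the idea concrete, here is a minimal sketch of such an environment-variable switch. It is not the code from the fork: the function name, the variable names AZURE_OPENAI_ENDPOINT, AZURE_OPENAI_API_KEY and AZURE_OPENAI_API_VERSION, and the exact Azure URL path are assumptions for illustration, so check them against your own deployment.

```typescript
// Sketch only: names and the Azure URL layout are assumptions, not the fork's code.
type Env = Record<string, string | undefined>;

interface EndpointConfig {
  provider: "openai" | "azure";
  url: string; // full URL for the Responses API call
  headers: Record<string, string>; // auth headers for the chosen provider
}

function resolveEndpoint(env: Env): EndpointConfig {
  const azureEndpoint = env["AZURE_OPENAI_ENDPOINT"];

  // If the Azure variable is present, route requests to the Azure deployment.
  if (azureEndpoint) {
    const azureKey = env["AZURE_OPENAI_API_KEY"];
    const apiVersion = env["AZURE_OPENAI_API_VERSION"];
    if (!azureKey || !apiVersion) {
      throw new Error(
        "AZURE_OPENAI_API_KEY and AZURE_OPENAI_API_VERSION must be set",
      );
    }
    const base = azureEndpoint.replace(/\/+$/, ""); // drop trailing slashes
    return {
      provider: "azure",
      url: `${base}/openai/responses?api-version=${encodeURIComponent(apiVersion)}`,
      headers: { "api-key": azureKey }, // Azure OpenAI key header
    };
  }

  // Otherwise fall back to the public OpenAI API.
  const openaiKey = env["OPENAI_API_KEY"];
  if (!openaiKey) {
    throw new Error("OPENAI_API_KEY must be set");
  }
  return {
    provider: "openai",
    url: "https://api.openai.com/v1/responses",
    headers: { Authorization: `Bearer ${openaiKey}` },
  };
}
```

In a Node process you would call it as `resolveEndpoint(process.env)`. Taking the environment as a parameter keeps the switch easy to test without touching real credentials.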

Watching it work

Codex began reading files, drafting patches, and applying them. Because I was still using my regular OpenAI account, the agent occasionally hit rate limits on o4‑mini and crashed, losing its context. Annoying, but expected in an early release: re‑running the last instruction gets it going again once Codex has rescanned the project.

I was impressed by how economically it sliced the repo into small context windows. That is one reason I burned only ~4.5 M tokens during the experiment.

The results

The generated code was surprisingly solid—minor syntax errors cropped up once or twice, usually after an interrupted run, but nothing serious. My only real blunder was pointing Codex to the wrong Azure endpoint (/chat/completions instead of /responses), but Codex even helped debug that.
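The endpoint mix-up matters because the two APIs expect different request bodies: Chat Completions takes the conversation under `messages`, while the Responses API takes it under `input`. The sketch below illustrates that difference with two hypothetical helpers (`endpointKind` and `buildBody` are made up for this post and are not part of Codex or the fork).

```typescript
// Sketch only: helper names are hypothetical. Payload shapes follow the public
// OpenAI API: Chat Completions uses `messages`, Responses uses `input`.
type Message = { role: "system" | "user" | "assistant"; content: string };
type EndpointKind = "responses" | "chat-completions" | "unknown";

// Classify a URL by its path, ignoring the query string and trailing slashes.
function endpointKind(url: string): EndpointKind {
  const path = url.replace(/\?.*$/, "").replace(/\/+$/, "");
  if (/\/chat\/completions$/.test(path)) return "chat-completions";
  if (/\/responses$/.test(path)) return "responses";
  return "unknown";
}

// Build the request body that matches the endpoint being called.
function buildBody(kind: EndpointKind, model: string, messages: Message[]) {
  switch (kind) {
    case "responses":
      return { model, input: messages };
    case "chat-completions":
      return { model, messages };
    default:
      throw new Error(
        "Unrecognised endpoint: expected /responses or /chat/completions",
      );
  }
}
```

A guard like this fails fast with a readable message instead of letting a mismatched payload reach the server.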

You can find the patched fork here on GitHub: https://github.com/Frankiey/codex/tree/feature/add_openai_service

Conclusion

Codex isn’t just another code‑completion toy; it’s a hackable, scriptable engineering agent small enough to grasp in an afternoon and powerful enough to refactor itself. With a few tweaks (and a lot of tokens) I had it talking to Azure OpenAI, proving that an enterprise‑ready workflow is within easy reach.

Next Steps

Here’s the personal checklist I’m working through to take Codex from “cool prototype” to production‑ready helper:

- Wire up Azure Entra ID so Codex authenticates through SSO without API keys scattered around.

- Add robust retries and back‑off: rate limits should become log lines, not crashes.

- Drop Codex into the CI pipeline so every pull request gets an automated review (and, where safe, an auto‑patch).

- Spin up companion agents for docs, security, and test generation, all sharing the same project context.

- Automate releases once the workflow feels stable, keeping a final human approval gate in place.
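For the retry item on that list, a minimal sketch of what "log lines, not crashes" could look like is shown below. It is an illustration, not Codex code: `withRetry`, `backoffDelayMs`, and the `HttpError` shape are hypothetical, and it assumes the API client surfaces the HTTP status code on the thrown error.

```typescript
// Sketch only: assumes thrown errors carry an HTTP `status` field (hypothetical shape).
interface HttpError {
  status?: number;
}

// Exponential back-off: base, 2x base, 4x base, ... capped at maxMs.
function backoffDelayMs(attempt: number, baseMs = 500, maxMs = 30000): number {
  return Math.min(maxMs, baseMs * Math.pow(2, attempt));
}

async function withRetry<T>(
  call: () => Promise<T>,
  maxAttempts = 5,
  // Injectable sleep so tests do not have to wait for real timers.
  sleep: (ms: number) => Promise<void> = (ms) =>
    new Promise<void>((resolve) => setTimeout(resolve, ms)),
): Promise<T> {
  for (let attempt = 0; ; attempt++) {
    try {
      return await call();
    } catch (err) {
      const status = (err as HttpError | undefined)?.status;
      // Retry only on rate limits (429) and server-side errors (5xx).
      const retryable =
        status === 429 || (status !== undefined && status >= 500);
      if (!retryable || attempt + 1 >= maxAttempts) throw err;

      const delay = backoffDelayMs(attempt);
      console.warn(
        `Request failed with status ${status}; retry ${attempt + 1} in ${delay} ms`,
      );
      await sleep(delay);
    }
  }
}
```

A production version would probably add random jitter to the delay and honour a `Retry-After` header when the server sends one. Both are left out here to keep the sketch short.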

I’ll report back on each milestone and any surprises in the next post!
