Building an Intelligent Document Analyzer: My Gen AI Capstone Project with RAG

We're living in an age of information overload. From research papers and technical manuals to financial reports and legal documents, we often face mountains of text that contain the specific answers we need, if only we could find them quickly. Manually searching through lengthy documents is time-consuming and inefficient. What if we could just ask the document our question?

For my capstone project in the Gen AI Intensive Course Capstone 2025Q1, I decided to tackle this exact problem by building an Intelligent Document Analyzer with Generative AI, specifically a technique called Retrieval Augmented Generation (RAG).

The Challenge: Drowning in Documents

Imagine needing to understand the key findings of a 96-page climate change report or find a specific clause in a dense contract. Reading everything takes hours. Keyword searches might miss relevant sections if the phrasing doesn't match exactly. This is where modern AI techniques can offer a powerful solution.

The Solution: An AI Assistant for Your Documents

My project aimed to create a system where a user could provide a PDF document and ask questions in plain English, receiving concise, relevant answers based only on the information contained within that document.

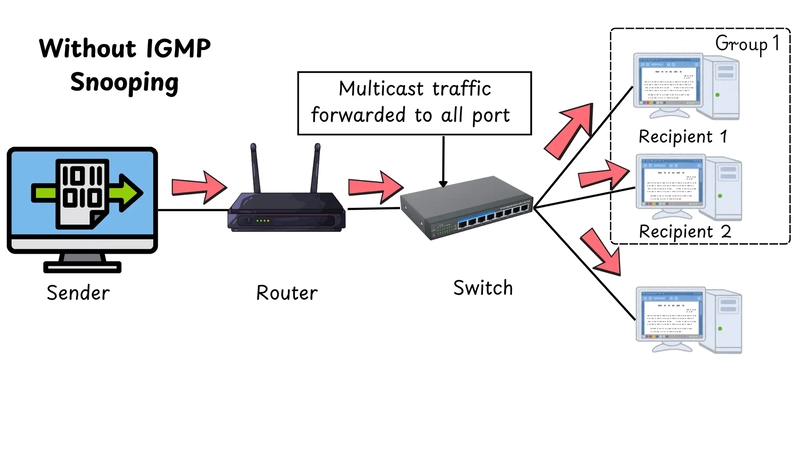

The core idea is Retrieval Augmented Generation (RAG). Instead of feeding the entire (potentially massive) document directly to a Large Language Model (LLM) – which often exceeds context limits and can lead to generic answers – RAG works smarter:

- Retrieve: First, find the specific snippets of text within the document that are most relevant to the user's question.

- Augment: Provide only these relevant snippets as context alongside the original question to the LLM.

- Generate: Ask the LLM to generate an answer based strictly on the provided context snippets.

This approach keeps the LLM focused, grounds its answers in the actual document content, and significantly reduces the chance of irrelevant information or "hallucinations."
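
To make the three steps concrete, here is a minimal sketch in plain Python. It is not the notebook's code: it swaps real embeddings for a simple word-count cosine similarity so it runs without any API key, and the function names (`retrieve`, `build_prompt`), the prompt wording, and the toy snippets (paraphrased from the example answers later in this post) are all assumptions made for illustration.

```python
import re
from collections import Counter
from math import sqrt


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, snippets, k=2):
    """Retrieve: return the k snippets most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(
        snippets,
        key=lambda s: cosine(q, Counter(tokenize(s))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question, context_snippets):
    """Augment: combine the retrieved snippets and the question into one prompt."""
    context = "\n\n".join(f"[{i + 1}] {s}" for i, s in enumerate(context_snippets))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Toy snippets standing in for chunks of a real document (hypothetical data).
snippets = [
    "River flooding has generally increased in the Northeast and Midwest.",
    "The report includes seven new indicators.",
    "Stream temperature changes affect salmon.",
]
question = "Where has river flooding increased?"

top = retrieve(question, snippets, k=1)
prompt = build_prompt(question, top)

# Generate: `prompt` would now be sent to the LLM of your choice.
print(prompt)
```

Only the river-flooding snippet ends up in the prompt, which is the point of the pattern: the model sees a small, relevant slice of the document rather than the whole thing.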

How It Works: The Tech Behind the Scenes

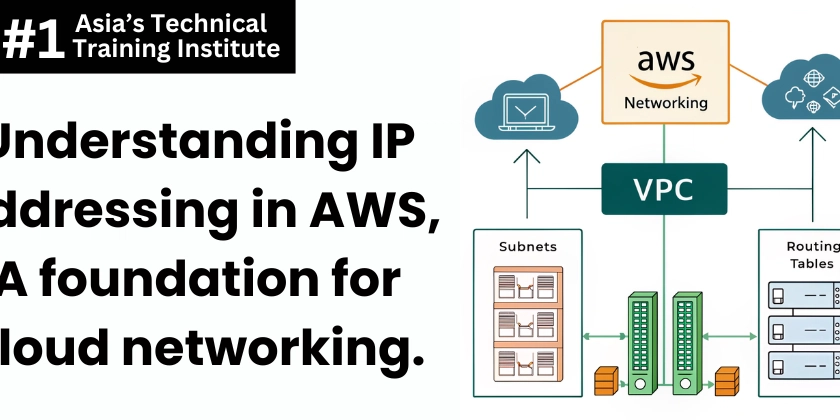

I built this system within a Kaggle Notebook, leveraging several key tools and techniques:

- Document Loading & Understanding (pypdf): The first step was to load the PDF document and extract its raw text content, page by page. This involves understanding the PDF structure.

- Text Chunking (langchain.text_splitter): The extracted text was then broken down into smaller, manageable, overlapping chunks. This helps ensure that relevant information isn't cut off arbitrarily and prepares the text for embedding.

- Embedding Generation (Google's text-embedding-004): Each text chunk was converted into a numerical vector (an "embedding") using Google's powerful embedding model. These vectors capture the semantic meaning of the text.

- Vector Store & Search (faiss-cpu): The generated embeddings were stored in a FAISS index. FAISS allows for incredibly fast searching to find the chunk embeddings that are most semantically similar (closest in vector space) to the embedding of the user's query.

- Retrieval & Generation (Google's gemini-1.5-flash-latest): When a query comes in, its embedding is generated, FAISS finds the most relevant text chunks, and these chunks are combined with the query into a carefully crafted prompt. This prompt is then sent to Google's Gemini model, instructing it to generate the answer based only on the provided context.
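
Two of these steps, overlapping chunking and nearest-neighbour search, are easy to illustrate without any external libraries. The sketch below is a simplified stand-in, not the notebook's implementation: `chunk_text` is a hypothetical fixed-size character splitter (the real LangChain splitter is more sophisticated), `search` is a brute-force comparison by Euclidean distance, and the tiny two-dimensional vectors are made up where real embeddings would come from the embedding model. Conceptually, an exact (flat) FAISS index answers the same "which stored vectors are closest to the query?" question, only far more efficiently.

```python
from math import sqrt


def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into character windows; neighbouring windows share `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


def l2_distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def search(query_vec, chunk_vecs, k=3):
    """Exhaustive nearest-neighbour search: indices of the k closest vectors."""
    order = sorted(
        range(len(chunk_vecs)),
        key=lambda i: l2_distance(query_vec, chunk_vecs[i]),
    )
    return order[:k]


# Overlap in action: each chunk repeats the last two characters of the previous one.
print(chunk_text("abcdefghij", chunk_size=4, overlap=2))
# ['abcd', 'cdef', 'efgh', 'ghij']

# Made-up 2-D "embeddings"; index 2 is identical to the query, index 1 is close.
chunk_vecs = [[0.0, 1.0], [0.9, 0.1], [1.0, 0.0]]
print(search([1.0, 0.0], chunk_vecs, k=2))
# [2, 1]
```

The overlap is what keeps a sentence that straddles a chunk boundary from being lost: it appears whole in at least one of the two neighbouring chunks, as long as it is shorter than the overlap.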

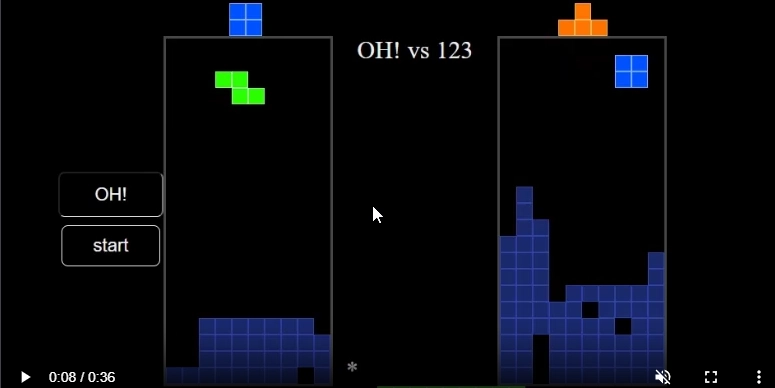

Seeing It in Action: Example Q&A

Here are some examples of the system querying the "Climate Change Indicators in the United States, 2016" report:

Query: What is the main topic of this document?

Answer:

Based on the provided text, the main topic of the document is climate change indicators in the United States. The document details its purpose of informing readers about climate change, its structure (organized into chapters focusing on different aspects of climate change), and the type of information included within each indicator.

Query: Summarize the key findings mentioned.

Answer:

The provided text summarizes key findings related to climate change indicators. Seven new indicators were added (River Flooding, Coastal Flooding, Antarctic Sea Ice, Heat-Related Illnesses, West Nile Virus, Stream Temperature, and Marine Species Distribution), and three existing indicators (Arctic Sea Ice, Snow Cover, and Heat-Related Deaths) were expanded. Nearly all indicators were updated with newer data. River flooding has generally increased in the Northeast and Midwest, but decreased in the West, southern Appalachia, and northern Michigan. The report also highlights the impact of stream temperature changes on salmon, a tribally important resource. Finally, the report anticipates even more climate change indicators in the future.

Query: What is the suggested citation for the report?

Answer:

U.S. Environmental Protection Agency. 2016. Climate change indicators in the United States, 2016. Fourth edition. EPA 430-R-16-004. www.epa.gov/climate-indicators.

As you can see, the system successfully retrieves relevant information and synthesizes concise answers directly from the document content.

Key Gen AI Capabilities Showcased

This project demonstrated several core Generative AI capabilities:

- Document Understanding: Processing and structuring information from a PDF.

- Embeddings: Creating meaningful numerical representations of text.

- Vector Search/Store: Enabling efficient semantic similarity search.

- Retrieval Augmented Generation (RAG): Implementing the powerful pattern of combining retrieval with generation for grounded, relevant answers.

Challenges and Future Ideas

While the system works well on the examples above, there's always room for improvement. Choosing the right chunking strategy (chunk size and overlap) can be tricky, and extremely complex PDF layouts might require more advanced parsing. Future enhancements could include evaluating answer quality automatically, implementing more sophisticated retrieval methods, or adding a user interface.

Conclusion

Building this RAG system was a fantastic learning experience. It highlights how Generative AI, when combined with smart retrieval techniques, can create genuinely useful tools for navigating complex information. Instead of being overwhelmed by documents, we can start having conversations with them!

Want to see the code and try it yourself? Check out the full implementation in my Kaggle Notebook:

➡️ https://www.kaggle.com/code/pragnyanitr22cs024/intelligent-document-analyzer-query-system

Thanks for reading! Let me know your thoughts or questions in the comments below.
