Building a Scalable Multi-Tenant Generative AI Platform with Amazon Bedrock : Part 1


Streamlining Bedrock Application Inference Profile Creation with Service Catalog

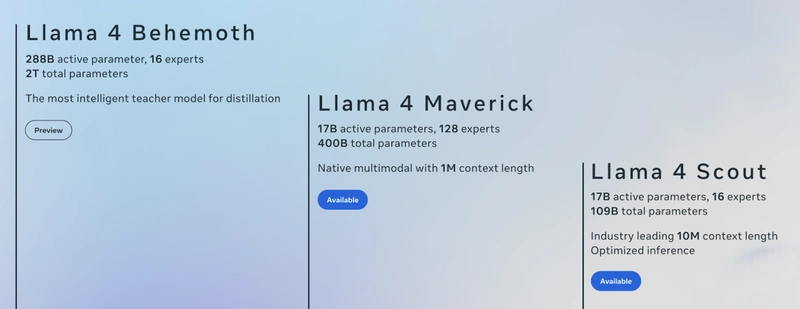

In today’s dynamic cloud environments, managing resources in a multi-tenant scenario requires a standardized approach, backed by governance and cost controls, that still lets project teams work autonomously. In this series, we explore how to build a cost-effective, manageable multi-tenant Generative AI platform using Amazon Bedrock. In Part 1, we focus on the challenges of managing Bedrock application inference profiles and show how AWS Service Catalog can provision these profiles in a controlled, standardized way.

Introduction

Managing application inference profiles for Amazon Bedrock in a multi-tenant environment can be challenging. Inconsistent resource provisioning, hard-to-attribute costs, and the need to ensure that only approved configurations are used across departments can quickly lead to operational overhead. AWS Service Catalog addresses these challenges by letting you offer pre-approved CloudFormation templates that encapsulate your organizational best practices. With Service Catalog, you can:

- Standardize Provisioning: Enforce common configurations across all teams.

- Enhance Governance: Track resource deployments and apply organizational policies consistently.

- Improve Cost Visibility: Integrate with CloudWatch dashboards to monitor resource usage and spending.

Solution Overview

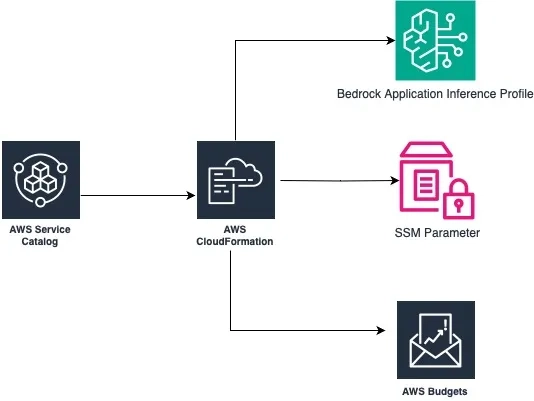

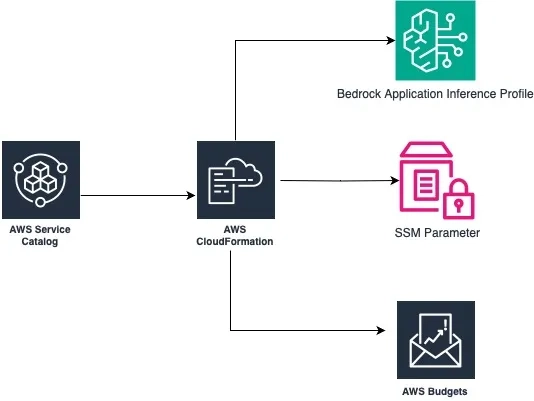

The solution architecture leverages several AWS services to create an automated, secure, and scalable provisioning process for Bedrock Application Inference Profiles:

- AWS Service Catalog: Acts as the central provisioning mechanism, a "vending machine" that uses a CloudFormation template to create Bedrock application inference profiles.

- AWS Systems Manager (SSM) Parameter Store: Stores the ARNs of the created inference profiles, which can be securely retrieved by applications during runtime.

- Amazon CloudWatch: Provides dashboards for monitoring cost and usage metrics, ensuring that resource consumption remains transparent and accountable.
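At runtime, an application only needs its department and project names to locate its profile ARN in Parameter Store. The following Python sketch assumes the parameter path layout used by the sample template later in this post (/bedrock/<Department>/<ProjectName>/profile-arn). ProfileArnResolver and ssm_fetcher are hypothetical names. The fetch function is injected so that the caching logic can run without AWS credentials.

```python
from typing import Any, Callable, Dict, Tuple


def ssm_fetcher(ssm_client: Any) -> Callable[[str], str]:
    """Adapt a boto3 SSM client (boto3.client("ssm")) into a name -> value function."""
    # get_parameter returns {"Parameter": {"Value": ...}, ...}
    return lambda name: ssm_client.get_parameter(Name=name)["Parameter"]["Value"]


class ProfileArnResolver:
    """Hypothetical helper: resolves and caches profile ARNs per tenant."""

    def __init__(self, fetch: Callable[[str], str]) -> None:
        self._fetch = fetch
        self._cache: Dict[Tuple[str, str], str] = {}

    @staticmethod
    def parameter_name(department: str, project: str) -> str:
        # Same path layout as the template's ProfileArnParameter resource.
        return f"/bedrock/{department}/{project}/profile-arn"

    def resolve(self, department: str, project: str) -> str:
        key = (department, project)
        if key not in self._cache:
            # Only the first lookup per (department, project) reaches Parameter Store.
            self._cache[key] = self._fetch(self.parameter_name(department, project))
        return self._cache[key]
```

With credentials configured, you could construct the resolver as ProfileArnResolver(ssm_fetcher(boto3.client("ssm"))). Caching per process keeps Parameter Store calls low. The trade-off is that a re-provisioned profile goes unnoticed until the process restarts.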

The following diagram illustrates the high-level architecture:

Bedrock Application Inference Profile Provisioning Product

Step-by-Step Implementation

1. Creating the Bedrock Application Inference Profile Template (CloudFormation)

Before creating any Service Catalog product, you need to have a robust CloudFormation template. This template should include all the resources required to deploy a Bedrock Application Inference Profile along with supporting resources for governance and cost tracking. For example:

- Application Inference Profile Resource: Creates the Bedrock Application Inference Profile. Parameters (e.g., ProjectName, Department, ModelArns) customize the profile details: they select the underlying Bedrock foundation model (FM) and set the tags.

- SSM Parameter Resource: Stores the ARN of the Application Inference Profile so downstream applications can securely retrieve it.

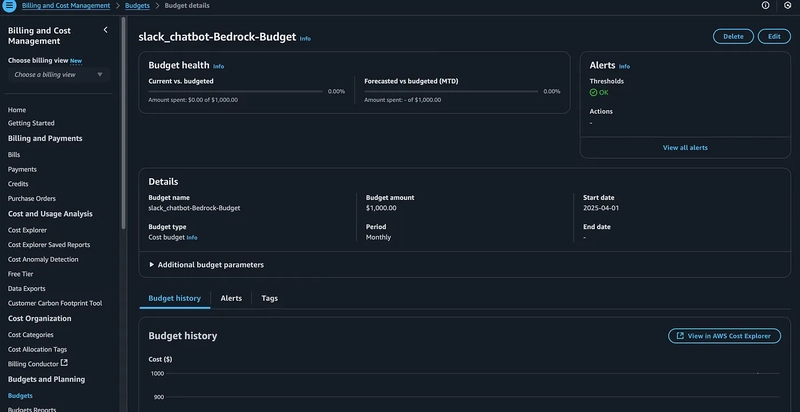

- Budget Alert Resource (Optional): Configures AWS Budgets or CloudWatch alarms to track spending and notify when cost thresholds are reached.
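The profile and the budget derive their identifiers from the same inputs. As an illustration only, the following Python sketch mirrors the naming rules and the budget defaults from the sample template below: a 1,000 USD monthly limit, with an alert at 80%. plan_tenant_resources and TenantResources are hypothetical names and are not part of any AWS SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TenantResources:
    profile_name: str    # InferenceProfileName
    budget_name: str     # BudgetName
    alert_at_usd: float  # actual spend that triggers the budget e-mail


def plan_tenant_resources(project: str, department: str,
                          budget_limit_usd: float = 1000.0,
                          alert_percent: float = 80.0) -> TenantResources:
    """Mirror the template's !Sub naming rules for a single tenant."""
    return TenantResources(
        profile_name=f"{department}-{project}-Profile",
        budget_name=f"{project}-Bedrock-Budget",
        # A PERCENTAGE threshold is measured against the budget limit.
        alert_at_usd=budget_limit_usd * alert_percent / 100.0,
    )
```

One consequence is visible here. Budget names must be unique within an account, and the budget name uses only ProjectName. Two departments provisioning the same project name would therefore likely collide. Including Department in BudgetName is one way to avoid this.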

CloudFormation Template YAML:

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Transform: AWS::LanguageExtensions

Parameters:
  ProjectName:
    Type: String
    Description: "Name of the project"
  Department:
    Type: String
    AllowedValues: [marketing, sales, engineering]
    Description: "Department name"
  ModelArns:
    Type: String
    Description: "Select a model ARN from the allowed list"
    AllowedValues:
      - arn:aws:bedrock:us-east-1::foundation-model/amazon.nova-pro-v1:0
      - arn:aws:bedrock:us-east-1::foundation-model/ai21.j2-mid-v1
      - arn:aws:bedrock:us-east-1::foundation-model/ai21.j2-ultra-v1
      - arn:aws:bedrock:us-east-1::foundation-model/amazon.titan-text-premier-v1
    Default: arn:aws:bedrock:us-east-1::foundation-model/amazon.nova-pro-v1:0

Resources:
  InferenceProfile:
    Type: AWS::Bedrock::ApplicationInferenceProfile
    Properties:
      InferenceProfileName: !Sub "${Department}-${ProjectName}-Profile"
      ModelSource:
        CopyFrom: !Ref ModelArns # Use the selected model ARN
      Tags:
        - Key: CostCenter
          Value: !Ref Department
        - Key: Project
          Value: !Ref ProjectName

  ProfileArnParameter:
    Type: AWS::SSM::Parameter
    Properties:
      Name: !Sub "/bedrock/${Department}/${ProjectName}/profile-arn"
      Type: String
      Value: !GetAtt InferenceProfile.InferenceProfileArn
      Tags:
        Project: !Ref ProjectName
        CostCenter: !Ref Department

  BudgetAlert:
    Type: AWS::Budgets::Budget
    Properties:
      Budget:
        BudgetName: !Sub "${ProjectName}-Bedrock-Budget"
        BudgetLimit:
          Amount: 1000
          Unit: USD
        BudgetType: COST
        TimeUnit: MONTHLY
        CostFilters:
          # Use TagKeyValue for tag-based filtering. User-defined cost allocation
          # tags are referenced as "user:<TagKey>$<TagValue>", and the Project tag
          # must be activated as a cost allocation tag in the Billing console.
          # In !Sub, the first "$" is literal because it is not followed by "{".
          TagKeyValue:
            - !Sub "user:Project$${ProjectName}"
      NotificationsWithSubscribers:
        - Notification:
            NotificationType: ACTUAL
            ComparisonOperator: GREATER_THAN
            Threshold: 80
            ThresholdType: PERCENTAGE
          Subscribers:
            - SubscriptionType: EMAIL
              Address: !Sub "${ProjectName}-admin@example.com"
```

This template is the foundation of your Service Catalog product. It enables standardized resource creation and enforces governance by embedding project-specific parameters.
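Department and ModelArns use AllowedValues, so CloudFormation rejects out-of-list values before it creates anything. You may want the same check earlier, for example in a CI pipeline that prepares provisioning requests. A Python sketch like the following could mirror it. The constants are copied from the sample template, and validate_parameters and model_id are hypothetical helpers.

```python
from typing import Dict, Optional

# Copied from the sample template's AllowedValues and Default.
ALLOWED_DEPARTMENTS = {"marketing", "sales", "engineering"}
ALLOWED_MODEL_ARNS = {
    "arn:aws:bedrock:us-east-1::foundation-model/amazon.nova-pro-v1:0",
    "arn:aws:bedrock:us-east-1::foundation-model/ai21.j2-mid-v1",
    "arn:aws:bedrock:us-east-1::foundation-model/ai21.j2-ultra-v1",
    "arn:aws:bedrock:us-east-1::foundation-model/amazon.titan-text-premier-v1",
}
DEFAULT_MODEL_ARN = "arn:aws:bedrock:us-east-1::foundation-model/amazon.nova-pro-v1:0"


def validate_parameters(project: str, department: str,
                        model_arn: Optional[str] = None) -> Dict[str, str]:
    """Return template parameters, or raise ValueError listing every violation."""
    model_arn = model_arn or DEFAULT_MODEL_ARN  # mirrors the template Default
    errors = []
    if department not in ALLOWED_DEPARTMENTS:
        errors.append(f"Department {department!r} is not an allowed value")
    if model_arn not in ALLOWED_MODEL_ARNS:
        errors.append(f"ModelArns {model_arn!r} is not an allowed value")
    if errors:
        raise ValueError("; ".join(errors))
    return {"ProjectName": project, "Department": department, "ModelArns": model_arn}


def model_id(model_arn: str) -> str:
    """Extract the model ID from '...:foundation-model/<model-id>'."""
    return model_arn.split("/", 1)[1]
```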

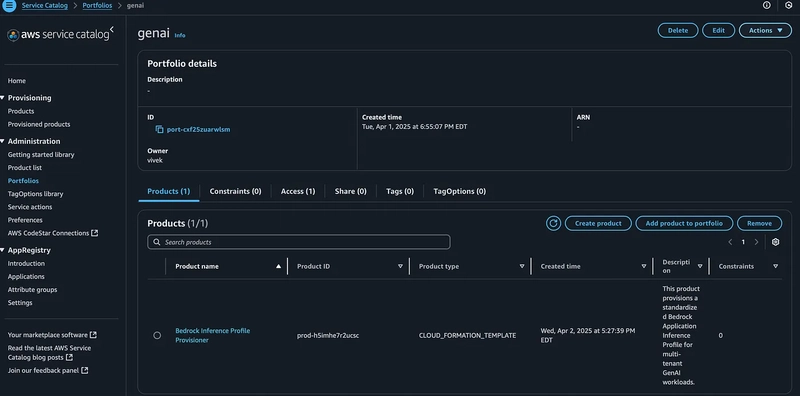

2. Creating the Service Catalog Product

With the CloudFormation template in hand, the next step is to package it as a Service Catalog product. This product encapsulates the logic defined in your template and makes it available for self-service provisioning.

- Navigate to the AWS Service Catalog Console: Open the AWS Service Catalog console at https://console.aws.amazon.com/servicecatalog/ .

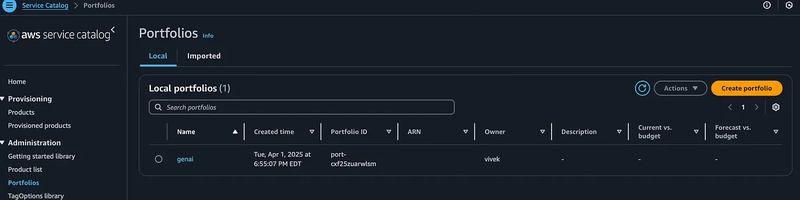

- Create a portfolio (e.g., genai).

- Create a New Product:

  - Product Details:
    - Product Name: e.g., Bedrock Inference Profile Provisioner
    - Product Description: State that this product provisions a standardized Bedrock Application Inference Profile for multi-tenant GenAI workloads.
    - Owner: e.g., Cloud Center of Excellence (CCoE) or IT
  - Version Details:
    - Choose Use a CloudFormation template.
    - Either upload the local CloudFormation template file (sample above) or provide an Amazon S3 URL.
    - Enter a Version Name (e.g., v1.0) and a short description.
  - Support Details: Optionally, add an email contact and a support URL for troubleshooting.
  - Provisioning Parameters: The product exposes the template's parameters (ProjectName, ModelArns, and Department), which users fill in during provisioning.

- Finalize the Product: Click Create product to save your product. It now appears in the product list.
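You can also script this step through the Service Catalog API. The following Python sketch only builds the keyword arguments for boto3's create_product call, and those arguments mirror the console fields above. The S3 URL, the version description, and the helper name are placeholders. LoadTemplateFromURL corresponds to the "provide an Amazon S3 URL" option.

```python
from typing import Any, Dict, Optional


def create_product_request(template_url: str,
                           version_name: str = "v1.0",
                           owner: str = "Cloud Center of Excellence (CCoE)",
                           support_email: Optional[str] = None) -> Dict[str, Any]:
    """Build keyword arguments for servicecatalog.create_product (hypothetical helper)."""
    request: Dict[str, Any] = {
        "Name": "Bedrock Inference Profile Provisioner",
        "Owner": owner,
        "Description": ("Provisions a standardized Bedrock Application Inference "
                        "Profile for multi-tenant GenAI workloads."),
        "ProductType": "CLOUD_FORMATION_TEMPLATE",
        "ProvisioningArtifactParameters": {
            "Name": version_name,
            "Description": "Initial version",
            "Info": {"LoadTemplateFromURL": template_url},  # template stored in S3
            "Type": "CLOUD_FORMATION_TEMPLATE",
        },
    }
    if support_email:
        # Optional support details, as in the console form.
        request["SupportEmail"] = support_email
    return request
```

With credentials configured, pass the result to boto3.client("servicecatalog").create_product(**request). Then call associate_product_with_portfolio with the returned product ID and your portfolio ID. boto3 fills in the required IdempotencyToken automatically when you omit it.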

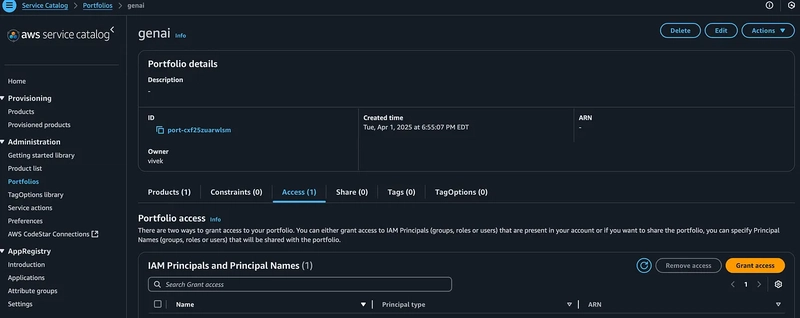

Click Grant access. There are two ways to grant access to your portfolio. You can grant access to IAM principals (groups, roles, or users) that exist in your account. Alternatively, if you want to share the portfolio, you can specify principal names (groups, roles, or users) to associate with the shared portfolio.

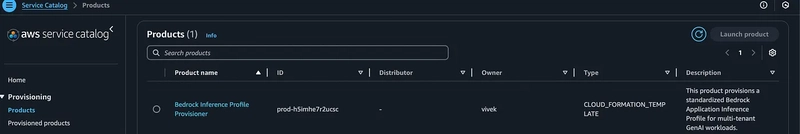

Now the Bedrock Inference Profile Provisioner should be available under Products to the granted IAM principals (groups, roles or users).

This product is now reusable and allows end users to self-service their provisioning needs without deviating from organizational standards.
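The Grant access step corresponds to the Service Catalog AssociatePrincipalWithPortfolio API. This is a minimal Python sketch that assumes a boto3 servicecatalog client is passed in. PrincipalType IAM covers principals in your own account. As we understand it, IAM_PATTERN is the API counterpart of the principal-name option used with shared portfolios. The portfolio ID and role ARN you pass are placeholders.

```python
from typing import Any, Iterable, List

VALID_PRINCIPAL_TYPES = ("IAM", "IAM_PATTERN")


def grant_portfolio_access(sc_client: Any, portfolio_id: str,
                           principal_arns: Iterable[str],
                           principal_type: str = "IAM") -> List[str]:
    """Associate each principal with the portfolio; return the ARNs granted."""
    if principal_type not in VALID_PRINCIPAL_TYPES:
        raise ValueError(f"principal_type must be one of {VALID_PRINCIPAL_TYPES}")
    granted: List[str] = []
    for arn in principal_arns:
        sc_client.associate_principal_with_portfolio(
            PortfolioId=portfolio_id,
            PrincipalARN=arn,
            PrincipalType=principal_type,
        )
        granted.append(arn)
    return granted
```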

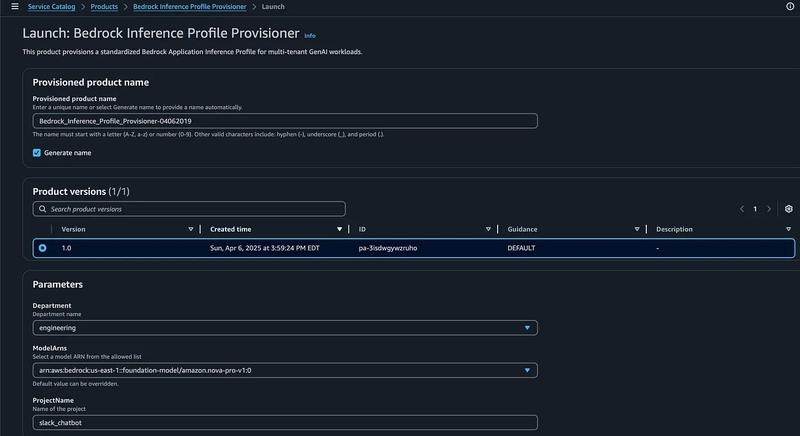

3. Provisioning the Product

Next, project teams can provision their own Bedrock Inference Profiles by:

- Navigating to the AWS Service Catalog console.

- Selecting the “Bedrock Inference Profile Provisioner” product.

- Filling in the required parameters: ProjectName, ModelArns (select from the drop-down), and Department (drop-down).

- Launching the product, which triggers CloudFormation stack creation and provisions all associated resources.
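Teams that automate onboarding could launch the same product through the ProvisionProduct API instead of the console. This Python sketch assumes an injected boto3 servicecatalog client and assumes that the product and version names match the ones chosen earlier. The provisioned-product naming scheme is a hypothetical convention.

```python
from typing import Any, Dict, List


def provisioning_parameters(project: str, department: str,
                            model_arn: str) -> List[Dict[str, str]]:
    """Template parameters in the Key/Value shape ProvisionProduct expects."""
    return [
        {"Key": "ProjectName", "Value": project},
        {"Key": "Department", "Value": department},
        {"Key": "ModelArns", "Value": model_arn},
    ]


def provision_profile(sc_client: Any, project: str, department: str, model_arn: str,
                      product_name: str = "Bedrock Inference Profile Provisioner",
                      version_name: str = "v1.0") -> Dict[str, Any]:
    """Launch the product; returns the raw ProvisionProduct response."""
    return sc_client.provision_product(
        ProductName=product_name,
        ProvisioningArtifactName=version_name,
        # Hypothetical naming convention; must be unique among provisioned products.
        ProvisionedProductName=f"{department}-{project}-profile",
        ProvisioningParameters=provisioning_parameters(project, department, model_arn),
    )
```

The response contains a RecordDetail. You can poll its record ID with describe_record until the underlying stack finishes.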

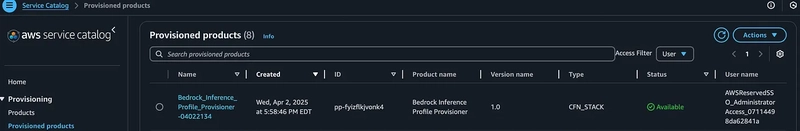

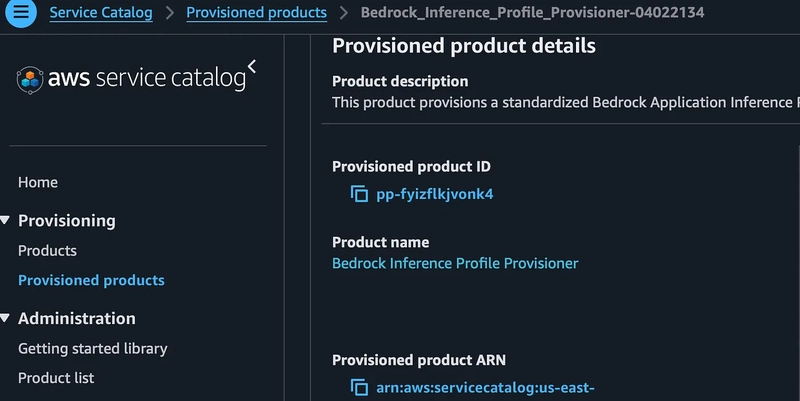

Verify the provisioned product details, including the resources created by CloudFormation, and check the AWS resource links under Physical ID and CloudFormation Stack ARN.

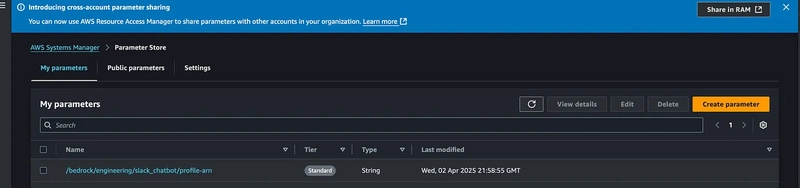

SSM parameter containing the Bedrock inference profile ARN

Note: You can’t view application inference profiles in the Amazon Bedrock console; the console only shows system-defined inference profiles. You can, however, view an application inference profile through the GetInferenceProfile API. Run the following AWS CLI command to see its details, replacing the placeholder with your profile's ARN (for example, the value stored in the SSM parameter):

```bash
aws bedrock get-inference-profile --inference-profile-identifier <inference-profile-arn>
```

You should see output similar to the following:

```json
{
    "inferenceProfileName": "engineering-slack_chatbot-Profile",
    "createdAt": "2025-04-02T21:58:52.644591+00:00",
    "updatedAt": "2025-04-02T21:58:52.644591+00:00",
    "inferenceProfileArn": "arn:aws:bedrock:us-east-1:123456789012:application-inference-profile/8gjoyf1x2bn9",
    "models": [
        {
            "modelArn": "arn:aws:bedrock:us-east-1::foundation-model/amazon.nova-pro-v1:0"
        }
    ],
    "inferenceProfileId": "8gjoyf1x2bn9",
    "status": "ACTIVE",
    "type": "APPLICATION"
}
```
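To check a profile from a script instead of reading the JSON by eye, a small Python sketch can summarize the response. It assumes the dictionary shape shown above. That is the shape the CLI prints and, to our understanding, also what boto3's bedrock client returns from get_inference_profile(inferenceProfileIdentifier=...). summarize_profile and summarize_cli_output are hypothetical helpers.

```python
import json
from typing import Any, Dict


def summarize_profile(profile: Dict[str, Any]) -> Dict[str, Any]:
    """Reduce a get-inference-profile response to the fields a health check needs."""
    if profile.get("type") != "APPLICATION":
        raise ValueError("expected an application inference profile")
    return {
        "name": profile["inferenceProfileName"],
        "ready": profile.get("status") == "ACTIVE",
        # Keep only the model ID after ".../foundation-model/".
        "models": [m["modelArn"].split("/", 1)[1] for m in profile.get("models", [])],
    }


def summarize_cli_output(raw_json: str) -> Dict[str, Any]:
    """Same as summarize_profile, for the JSON text printed by the AWS CLI."""
    return summarize_profile(json.loads(raw_json))
```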

Conclusion

In Part 1 of our series, we introduced a robust solution for standardizing the provisioning of Amazon Bedrock Inference Profiles using AWS Service Catalog. By encapsulating best practices into a reusable CloudFormation template and leveraging Service Catalog’s governance features, organizations can streamline resource management, enforce policies, and achieve better cost control in multi-tenant environments.

Stay tuned for Part 2, where we dive deeper into runtime security and monitoring for your multi-tenant GenAI platform. We will securely retrieve Application Inference Profile ARNs from SSM Parameter Store and use them to invoke Bedrock models, ensuring proper cost allocation and access control.
