Breakthrough: Parallel Processing Makes AI Language Models 3x Faster Without Accuracy Loss

This is a Plain English Papers summary of a research paper called Breakthrough: Parallel Processing Makes AI Language Models 3x Faster Without Accuracy Loss. If you like this kind of analysis, you should join AImodels.fyi or follow us on Twitter.

Overview

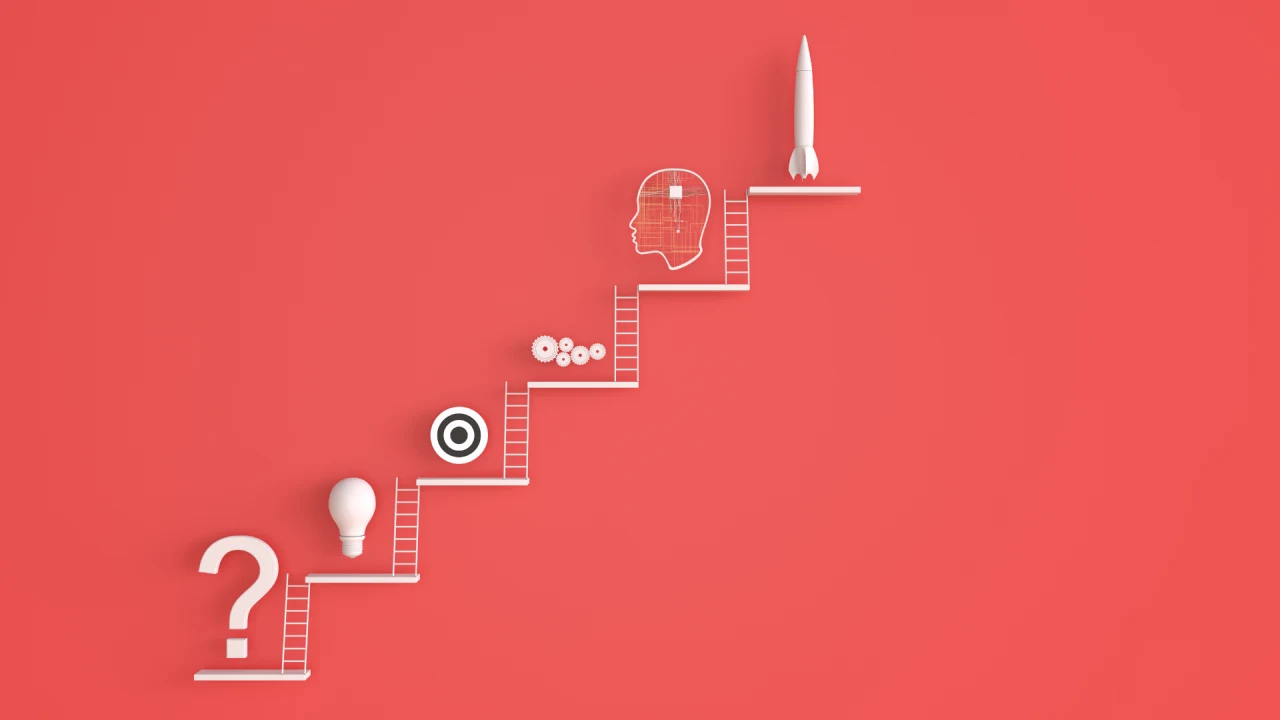

- FFN Fusion technique accelerates Large Language Models (LLMs) by parallel processing

- Reduces sequential dependencies in Feed-Forward Networks (FFNs)

- 2-3× throughput improvement with minimal accuracy loss

- Hardware-friendly approach requiring no additional parameters or retraining

- Compatible with existing optimization methods like quantization
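The fusion idea in the bullets above can be illustrated with a small numerical sketch. It assumes each FFN has the form `W2 · relu(W1 · x)` with a residual connection (ReLU is chosen for brevity; many production LLMs use gated activations instead), and every function name here (`ffn`, `sequential`, `parallel`, `fuse`, `n_params`) is hypothetical, not the authors' code. Running several FFNs on one shared input and summing their outputs is equivalent to a single wider FFN whose weight matrices are the originals concatenated, so the fused layer has exactly as many parameters as the layers it replaces. How closely that parallel form matches the original sequential stack depends on how weakly the layers depend on each other; the sketch only prints the gap rather than claiming a size for it.

```python
import random

def rand_matrix(rows, cols, scale=0.1):
    # Small random weights stand in for a trained layer (illustrative only).
    return [[random.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    # m is a list of rows; returns the matrix-vector product m @ v.
    return [sum(w * a for w, a in zip(row, v)) for row in m]

def relu(v):
    return [max(0.0, a) for a in v]

def add(a, b):
    return [p + q for p, q in zip(a, b)]

def ffn(x, w1, w2):
    # Assumed FFN form: W2 · relu(W1 · x); w1 is (hidden x d), w2 is (d x hidden).
    return matvec(w2, relu(matvec(w1, x)))

def sequential(x, blocks):
    # Standard residual stack: each FFN reads the previous block's output.
    for w1, w2 in blocks:
        x = add(x, ffn(x, w1, w2))
    return x

def parallel(x, blocks):
    # Parallel form: every FFN reads the same input x and the outputs are summed.
    out = x
    for w1, w2 in blocks:
        out = add(out, ffn(x, w1, w2))
    return out

def fuse(blocks):
    # Stack the W1 rows (hidden units) and join the W2 rows so that a single,
    # wider FFN computes the same sum as parallel() in one pass.
    w1 = [row for b_w1, _ in blocks for row in b_w1]
    d = len(blocks[0][1])
    w2 = [[w for _, b_w2 in blocks for w in b_w2[i]] for i in range(d)]
    return w1, w2

def n_params(*matrices):
    # Count weights (this toy model has no biases).
    return sum(len(row) for m in matrices for row in m)

random.seed(0)
d, h, n_blocks = 4, 8, 3
blocks = [(rand_matrix(h, d), rand_matrix(d, h)) for _ in range(n_blocks)]
x = [1.0, -0.5, 0.25, 2.0]

fw1, fw2 = fuse(blocks)
fused = add(x, ffn(x, fw1, fw2))
par = parallel(x, blocks)
seq = sequential(x, blocks)

# The fused layer matches the parallel form up to floating-point rounding...
assert all(abs(a - b) < 1e-9 for a, b in zip(fused, par))
# ...and uses exactly as many weights as the original blocks combined.
assert n_params(fw1, fw2) == sum(n_params(w1, w2) for w1, w2 in blocks)

gap = max(abs(a - b) for a, b in zip(seq, par))
print(f"max |sequential - parallel| = {gap:.2e}")
```

Because the activation acts on each hidden unit independently, concatenating blocks never mixes their hidden units, which is why the fused and parallel outputs agree. The remaining question, how small the sequential-versus-parallel gap is in a real model, is what the summary's "minimal accuracy loss" claim is about.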

Plain English Explanation

Large Language Models power today's AI applications but face a major bottleneck: they process text one token (word piece) at a time. This sequential processing creates delays that limit how fast these models can generate text.
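The token-by-token bottleneck described above shows up clearly in a minimal decoding loop. This is a toy sketch, not any model's real API: `generate` and `toy_model` are hypothetical names, and `toy_model` replaces the neural network with a trivial rule so that the control flow is easy to follow.

```python
from typing import Callable, List

def generate(next_token: Callable[[List[int]], int], prompt: List[int], n_new: int) -> List[int]:
    # Greedy autoregressive decoding: token t is computed from tokens 0..t-1,
    # so the n_new model calls cannot overlap; each waits for the previous one.
    tokens = list(prompt)
    for _ in range(n_new):
        tokens.append(next_token(tokens))
    return tokens

# Hypothetical stand-in for a full LLM forward pass (one trip through every layer).
calls = 0

def toy_model(prefix: List[int]) -> int:
    global calls
    calls += 1
    return sum(prefix) % 10  # "predict" the next token id from the whole prefix

out = generate(toy_model, [1, 2, 3], n_new=4)
print(out, calls)  # [1, 2, 3, 6, 2, 4, 8] 4
```

Each `next_token` call stands for one pass through all of the model's layers in order. Per the overview, FFN Fusion targets the sequential dependencies among FFN layers inside that pass, so it would shorten each call rather than remove the loop itself.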

The researchers found an unexpected insight: cert...