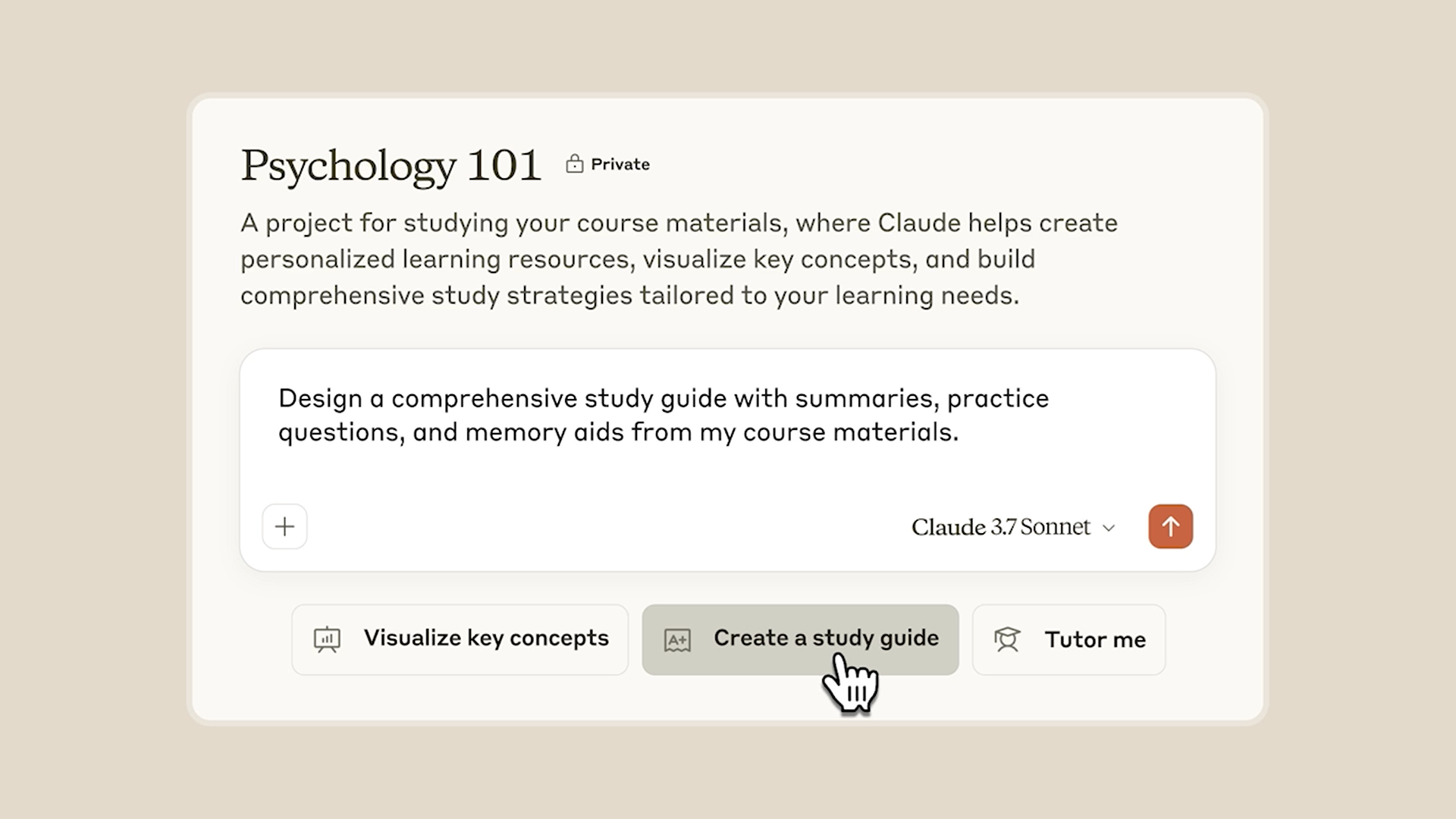

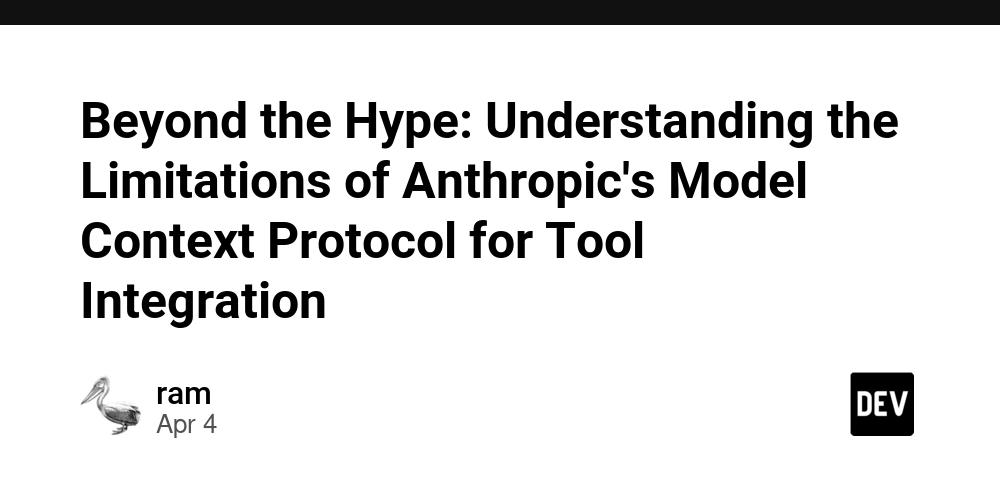

Beyond the Hype: Understanding the Limitations of Anthropic's Model Context Protocol for Tool Integration

I. Introduction: Anthropic's Model Context Protocol (MCP) Anthropic's Model Context Protocol (MCP), introduced in late 2024, aims to standardize how AI applications interact with external tools and data, similar to USB for device connectivity. MCP provides a unified API to solve the complex "M×N problem" of AI-tool integration, transforming it into a more manageable "M+N" scenario. This involves tool creators building MCP servers and application developers creating MCP clients. MCP defines key components like Tools (model-controlled functions), Resources (static data), and Prompts (pre-defined templates) to facilitate this interaction. Built upon established standards like the Language Server Protocol (LSP) and using JSON-RPC 2.0 for messaging, MCP represents a significant move towards interoperable AI agents. This report will examine the current limitations of MCP, including its stateful communication requirements, potential context window overload, and the indirect nature of LLM-tool interaction. While MCP offers a promising avenue for tool calling, its current form presents certain challenges. II. Stateful Communication and Server-Sent Events MCP establishes stateful sessions between clients and servers, with each client managing a one-to-one connection initiated on behalf of the host application. This requires MCP servers to maintain context for each client session, potentially impacting resource management and scalability. Communication primarily utilizes HTTP via Server-Sent Events (SSE), a protocol for one-way server-to-client communication over a persistent connection. While SSE is suitable for real-time updates, its unidirectional nature might limit certain interaction types, with client requests often handled via separate HTTP POST calls. Future iterations may see a transition to a more flexible Streamable HTTP transport. III. Compatibility with Stateless REST APIs A key challenge for MCP is the prevalence of stateless REST APIs in existing tools. RESTful APIs require each request to be self-contained, with no server-side session state maintained between requests. This contrasts with MCP's stateful SSE communication, potentially creating integration hurdles. Bridging this gap often necessitates intermediary layers or wrapper services acting as MCP servers for stateless REST APIs, managing session state and translating communication styles. MCP server implementations serve as these intermediaries, requiring developers to potentially implement state management for inherently stateless tools. IV. Context Window Limitations and Potential Overload LLMs have finite context windows, limiting the amount of text they can process. Each active MCP connection and its associated metadata consume tokens within this window. Integrating numerous tools via separate MCP connections can lead to a substantial increase in the context size, potentially exceeding the LLM's capacity. When this limit is reached, information may be truncated, negatively impacting the LLM's ability to reason and make accurate tool recommendations. Managing long-range dependencies and overall performance can also be affected by excessively large context windows. V. Impact on Accurate Tool Recommendations Context overload from multiple MCP connections can hinder the LLM's ability to accurately recommend tools. An abundance of tool information can confuse the LLM, making it difficult to identify the most relevant tool for a specific request. Factors beyond tool descriptions, such as tool titles and parameter specifications, also influence selection accuracy. Issues like under-selection or over-selection of tools can arise. The user's suggestion to build a Retrieval-Augmented Generation (RAG) system for tool selection indicates that simply providing all tool information might not be optimal. Dynamically adjusting the available tools based on the user's query can improve accuracy and alleviate context window pressure. VI. Indirect LLM-Tool Interaction LLMs do not directly interact with tools. Instead, they generate structured outputs specifying the tool and its parameters, which are then executed by an intermediary, the MCP client. The results are then passed back to the LLM to generate a final response. MCP acts as the communication layer for this indirect interaction, standardizing the exchange between LLM applications and external tools. This separation of concerns promotes modularity and facilitates the integration of new tools. VII. Potential Alternatives and Future Directions While MCP offers a structured approach to tool calling, other emerging standards aim to address some of its limitations. Agents.json (https://github.com/wild-card-ai/agents-json) is an open specification built on top of the OpenAPI standard, designed to formalize interactions between APIs and AI agents. Unlike MCP's stateful nature, Agents.json is stateless, with the agent managing all context. It focuses on enabling LLMs to execute complex API calls efficient

I. Introduction: Anthropic's Model Context Protocol (MCP)

Anthropic's Model Context Protocol (MCP), introduced in late 2024, aims to standardize how AI applications interact with external tools and data, similar to USB for device connectivity. MCP provides a unified API to solve the complex "M×N problem" of AI-tool integration, transforming it into a more manageable "M+N" scenario. This involves tool creators building MCP servers and application developers creating MCP clients. MCP defines key components like Tools (model-controlled functions), Resources (static data), and Prompts (pre-defined templates) to facilitate this interaction. Built upon established standards like the Language Server Protocol (LSP) and using JSON-RPC 2.0 for messaging, MCP represents a significant move towards interoperable AI agents. This report will examine the current limitations of MCP, including its stateful communication requirements, potential context window overload, and the indirect nature of LLM-tool interaction. While MCP offers a promising avenue for tool calling, its current form presents certain challenges.

II. Stateful Communication and Server-Sent Events

MCP establishes stateful sessions between clients and servers, with each client managing a one-to-one connection initiated on behalf of the host application. This requires MCP servers to maintain context for each client session, potentially impacting resource management and scalability. Communication primarily utilizes HTTP via Server-Sent Events (SSE), a protocol for one-way server-to-client communication over a persistent connection. While SSE is suitable for real-time updates, its unidirectional nature might limit certain interaction types, with client requests often handled via separate HTTP POST calls. Future iterations may see a transition to a more flexible Streamable HTTP transport.

III. Compatibility with Stateless REST APIs

A key challenge for MCP is the prevalence of stateless REST APIs in existing tools. RESTful APIs require each request to be self-contained, with no server-side session state maintained between requests. This contrasts with MCP's stateful SSE communication, potentially creating integration hurdles. Bridging this gap often necessitates intermediary layers or wrapper services acting as MCP servers for stateless REST APIs, managing session state and translating communication styles. MCP server implementations serve as these intermediaries, requiring developers to potentially implement state management for inherently stateless tools.

IV. Context Window Limitations and Potential Overload

LLMs have finite context windows, limiting the amount of text they can process. Each active MCP connection and its associated metadata consume tokens within this window. Integrating numerous tools via separate MCP connections can lead to a substantial increase in the context size, potentially exceeding the LLM's capacity. When this limit is reached, information may be truncated, negatively impacting the LLM's ability to reason and make accurate tool recommendations. Managing long-range dependencies and overall performance can also be affected by excessively large context windows.

V. Impact on Accurate Tool Recommendations

Context overload from multiple MCP connections can hinder the LLM's ability to accurately recommend tools. An abundance of tool information can confuse the LLM, making it difficult to identify the most relevant tool for a specific request. Factors beyond tool descriptions, such as tool titles and parameter specifications, also influence selection accuracy. Issues like under-selection or over-selection of tools can arise. The user's suggestion to build a Retrieval-Augmented Generation (RAG) system for tool selection indicates that simply providing all tool information might not be optimal. Dynamically adjusting the available tools based on the user's query can improve accuracy and alleviate context window pressure.

VI. Indirect LLM-Tool Interaction

LLMs do not directly interact with tools. Instead, they generate structured outputs specifying the tool and its parameters, which are then executed by an intermediary, the MCP client. The results are then passed back to the LLM to generate a final response. MCP acts as the communication layer for this indirect interaction, standardizing the exchange between LLM applications and external tools. This separation of concerns promotes modularity and facilitates the integration of new tools.

VII. Potential Alternatives and Future Directions

While MCP offers a structured approach to tool calling, other emerging standards aim to address some of its limitations.

Agents.json (https://github.com/wild-card-ai/agents-json) is an open specification built on top of the OpenAPI standard, designed to formalize interactions between APIs and AI agents. Unlike MCP's stateful nature, Agents.json is stateless, with the agent managing all context. It focuses on enabling LLMs to execute complex API calls efficiently through defined 'flows' and 'links'. This approach allows for leveraging existing agent architectures and RAG systems for state management and can be deployed on existing infrastructure.

Another relevant initiative is llms.txt (https://llmstxt.org/), a proposed standard to help AI models better understand and interact with website content. While Agents.json focuses on API interactions, llms.txt provides a structured overview of website content, making it easier for LLMs to retrieve relevant information and improve contextual understanding. It defines two main files: /llms.txt for a streamlined site structure and /llms-full.txt containing comprehensive content. While llms.txt aids in information retrieval, Agents.json is designed for executing multi-step workflows.

Addressing MCP's limitations might also involve strategies like intelligent connection management, dynamic tool activation, and summarization of tool capabilities to mitigate context overload. Exploring more flexible communication transports beyond SSE and refining RAG-based tool selection mechanisms are also crucial future directions.

VIII. Conclusion: Navigating MCP's Limitations

Anthropic's MCP is a valuable step towards standardizing AI-tool integration and provides a robust framework for tool calling. However, its current reliance on stateful SSE communication poses compatibility challenges with stateless REST APIs. The potential for context window overload from numerous connections can also impact LLM performance and tool recommendation accuracy. While MCP facilitates indirect LLM-tool interaction, developers must be mindful of this architecture. Emerging standards like Agents.json and llms.txt offer alternative or complementary approaches to consider. Continued development in connection management, communication protocols, and tool selection will be vital for the evolution of MCP and the broader landscape of AI agent-tool interaction.

.jpg)

%20Abstract%20Background%20112024%20SOURCE%20Amazon.jpg)

![[The AI Show Episode 142]: ChatGPT’s New Image Generator, Studio Ghibli Craze and Backlash, Gemini 2.5, OpenAI Academy, 4o Updates, Vibe Marketing & xAI Acquires X](https://www.marketingaiinstitute.com/hubfs/ep%20142%20cover.png)

![From drop-out to software architect with Jason Lengstorf [Podcast #167]](https://cdn.hashnode.com/res/hashnode/image/upload/v1743796461357/f3d19cd7-e6f5-4d7c-8bfc-eb974bc8da68.png?#)

.png?#)

.jpg?#)

_Christophe_Coat_Alamy.jpg?#)

![Rapidus in Talks With Apple as It Accelerates Toward 2nm Chip Production [Report]](https://www.iclarified.com/images/news/96937/96937/96937-640.jpg)