Basic Computer Science


1. Introduction: Demystifying the Magic

Computers often appear magical: throw some metal and silicon in a box, and suddenly complex tasks are performed. But beneath the surface, everything is grounded in physics, logic, and mathematics [1][5].

2. The Heart of the Computer: The CPU and Transistors

At the core of every computer is the Central Processing Unit (CPU), a tiny chip made from silicon. This chip contains billions of transistors, microscopic switches that can be turned on or off to represent the binary states 1 and 0 [2][6]. These binary states form the fundamental language of computers, known as the binary system [1][2].

- Transistors: Act as switches or amplifiers, controlling the flow of electrical signals. Modern CPUs pack billions of these into a single chip, enabling rapid, complex computations [2].

- Binary System: Each transistor's state (on/off) encodes a single bit of information [1][2].

3. From Bits to Bytes: Storing and Representing Data

- Bit: The smallest unit of data, representing a 0 or 1.

- Byte: A group of 8 bits, allowing for 256 unique combinations (2^8) [1].

- Binary Numbers: Each bit in a binary number represents a power of 2. For example, 1000101 equals 69 in decimal (64 + 4 + 1) [3].

- Hexadecimal: Because binary is hard for humans to read, values are often written in hexadecimal (base 16), where each digit represents four bits, making large binary numbers easier to interpret [3].
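The positional rule above can be checked with a short Python sketch. The helper name `binary_to_decimal` is made up for illustration; the built-ins `int`, `hex`, and `format` do the same work in practice.

```python
def binary_to_decimal(bits: str) -> int:
    """Add up the power of 2 for every position that holds a 1."""
    total = 0
    # Walk from the rightmost bit (2**0) to the leftmost.
    for position, bit in enumerate(reversed(bits)):
        if bit == "1":
            total += 2 ** position
    return total


value = binary_to_decimal("1000101")
print(value)                 # 69  (64 + 4 + 1)
print(int("1000101", 2))     # 69, using the built-in parser
print(hex(value))            # 0x45: one hex digit per group of four bits
print(format(value, "08b"))  # 01000101, padded to a full byte
```

Reading the hex result digit by digit, 4 is 0100 and 5 is 0101, which together give the padded byte 01000101.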

4. Logic Gates and Boolean Logic

- Logic Gates: Built from transistors, these basic circuits perform operations based on Boolean logic (AND, OR, NOT, etc.), enabling computers to make decisions and perform calculations [4].

- Boolean Logic: Uses true/false (1/0) values to process logical statements and control data flow in circuits [4].
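As a rough software model of these gates, the sketch below represents each gate as a small Python function on the values 0 and 1, then combines them into a half adder, a standard circuit that adds two bits. The function names are illustrative, and real gates are of course hardware rather than function calls.

```python
def and_gate(a: int, b: int) -> int:
    return a & b


def or_gate(a: int, b: int) -> int:
    return a | b


def not_gate(a: int) -> int:
    return 1 - a


def xor_gate(a: int, b: int) -> int:
    # XOR built only from AND, OR, and NOT: (a OR b) AND NOT (a AND b).
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two bits and return (sum_bit, carry_bit)."""
    return xor_gate(a, b), and_gate(a, b)


# Print the truth table for the half adder.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, half_adder(a, b))
```

The last row, 1 + 1, yields a sum bit of 0 and a carry bit of 1, which is binary 10 (decimal 2). This is how simple gates compose into arithmetic.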

5. Representing Characters: Character Encodings

Computers must also handle text, not just numbers. Character encodings like ASCII assign binary values to characters (e.g., "A" = 65 in ASCII), allowing computers to store and display text [1].
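The mapping between characters and numbers can be inspected directly in Python. This is a minimal sketch using the built-ins `ord`, `chr`, and `str.encode`; the sample string "Hi" is an arbitrary choice.

```python
# Character -> number -> bits
code = ord("A")
print(code)                 # 65
print(format(code, "08b"))  # 01000001
print(chr(65))              # A

# A whole string becomes a sequence of byte values.
text = "Hi"
codes = [ord(ch) for ch in text]
print(codes)                # [72, 105]

encoded = text.encode("ascii")          # bytes as they would be stored
decoded = bytes(codes).decode("ascii")  # and back to text
print(encoded, decoded)
```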

6. Operating System and Machine Code

- Operating System Kernel: The core of an operating system (such as Windows, Linux, or macOS) that manages hardware and software interactions, using device drivers to communicate with hardware components [1].

- Machine Code: The lowest-level instructions, written in binary, that the CPU executes directly [1].

7. Memory and the Fetch-Decode-Execute Cycle

- RAM (Random Access Memory): Temporary storage for data and instructions, organized as a grid of addresses, each holding one byte [1].

- Instruction Cycle: The CPU repeatedly fetches an instruction from memory, decodes it, executes it, and stores the result. This process is called the fetch-decode-execute cycle [6].
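The cycle can be illustrated with a toy simulator. Everything here is an assumption made for the sketch: the four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`), the single accumulator register, and the use of Python tuples instead of binary machine code. Real CPUs are far more elaborate.

```python
def run(program: list[tuple], memory: list[int]) -> list[int]:
    """Run a toy program against a small memory and return the memory."""
    accumulator = 0       # the only register in this hypothetical CPU
    program_counter = 0   # index of the next instruction

    while True:
        # Fetch: read the instruction the program counter points at.
        instruction = program[program_counter]
        program_counter += 1

        # Decode: split the instruction into an opcode and its operands.
        opcode, *operands = instruction

        # Execute (and store the result where the instruction says).
        if opcode == "LOAD":
            accumulator = memory[operands[0]]
        elif opcode == "ADD":
            accumulator += memory[operands[0]]
        elif opcode == "STORE":
            memory[operands[0]] = accumulator
        elif opcode == "HALT":
            break
        else:
            raise ValueError(f"unknown opcode: {opcode}")

    return memory


memory = [2, 3, 0]  # addresses 0, 1, 2
program = [("LOAD", 0), ("ADD", 1), ("STORE", 2), ("HALT",)]
run(program, memory)
print(memory)  # [2, 3, 5]
```

The program loads the value at address 0, adds the value at address 1, and writes the sum to address 2, with one pass through the loop per instruction.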

8. CPU Speed, Cores, and Threads

- Clock Speed: Modern CPUs perform billions of cycles per second, synchronized by a clock generator [6].

- Cores and Threads: CPUs often have multiple cores, each capable of handling a separate task in parallel. Threads let each core switch between multiple tasks quickly [1][6].

9. Programming Languages and Abstractions

- High-Level Languages: Programming in raw machine code is impractical, so we use languages like Python or C, which are either compiled into machine code or executed by an interpreter [1].

- Shells and Interfaces: The kernel is accessed via shells (command-line or graphical interfaces), making it easier for users and programs to interact with the system [1].

10. Variables, Data Types, and Memory Usage

- Variables: Named storage locations for values.

- Data Types: Include integers, floating-point numbers (for decimals), characters, and strings. Different types use different amounts of memory (e.g., in many languages an integer typically uses 4 bytes) [1].

- Floating-Point Numbers: Allow for a wide range of decimal values, but can introduce rounding errors because many decimal fractions have no exact binary representation [1].
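Both points can be observed from Python. The sketch below shows the classic rounding surprise with 0.1 + 0.2 and uses the standard `struct` module to ask how many bytes the platform's C `int` and `double` occupy. Those sizes depend on the platform, so no specific output is claimed for them.

```python
import math
import struct

total = 0.1 + 0.2
print(total)                     # 0.30000000000000004
print(total == 0.3)              # False: neither value is stored exactly
print(math.isclose(total, 0.3))  # True: compare with a tolerance instead

# Sizes of native C types as seen by this Python build.
# Commonly 4 and 8 bytes, but this is platform-dependent.
print(struct.calcsize("i"), "bytes for a C int")
print(struct.calcsize("d"), "bytes for a C double")
```

Comparing floats with `math.isclose` rather than `==` is the usual way to sidestep these small representation errors.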

11. Data Structures: Organizing Information

- Arrays: Fixed-size collections of elements, efficient for indexing.

- Linked Lists: Flexible, dynamic structures where each node points to the next, but slower for random access.

- Stacks (LIFO) and Queues (FIFO): Specialized structures for managing ordered data.

- Hash Maps/Dictionaries: Store key-value pairs for fast lookups, using hashing to determine the storage location; collisions are handled with techniques such as chaining entries in linked lists.

- Graphs and Trees: Graphs model networks; trees provide hierarchical organization (e.g., binary search trees for fast searching) [1].
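Several of these structures are available out of the box in Python. The following sketch shows a stack built on `list`, a queue built on `collections.deque`, and a hash map via `dict`; the sample values and the `stock` inventory are arbitrary placeholders.

```python
from collections import deque

# Stack (LIFO): the last item pushed is the first one popped.
stack = []
for item in ("a", "b", "c"):
    stack.append(item)
top = stack.pop()
print(top)    # c

# Queue (FIFO): the first item enqueued is the first one dequeued.
queue = deque(["a", "b", "c"])
first = queue.popleft()
print(first)  # a

# Hash map: keys are hashed to find their storage slot quickly.
stock = {"apples": 3, "pears": 5}
stock["plums"] = 7
print(stock["pears"])      # 5
print("kiwis" in stock)    # False
```

A `deque` is used for the queue because removing from the front of a plain list requires shifting every remaining element.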

12. Algorithms and Optimization

- Algorithms: Step-by-step procedures for solving problems.

- Functions and Recursion: Functions encapsulate algorithms; recursion allows a function to call itself, and requires a base case to prevent infinite recursion.

- Memoization: Caching results to avoid redundant computation.

- Big O Notation: Describes how algorithm performance scales with input size (e.g., O(n) for linear time, O(n!) for factorial time) [1].
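Recursion, memoization, and scaling can be seen together in one small example. The sketch below uses the Fibonacci sequence, chosen here only as a convenient illustration, and compares a naive recursive version with one cached by the standard `functools.lru_cache`. The `counter` list is a helper added purely to count calls.

```python
from functools import lru_cache


def fib_naive(n: int, counter: list[int]) -> int:
    """Plain recursion: the same subproblems are recomputed many times."""
    counter[0] += 1
    if n < 2:  # base case stops the recursion
        return n
    return fib_naive(n - 1, counter) + fib_naive(n - 2, counter)


@lru_cache(maxsize=None)
def fib_memo(n: int) -> int:
    """Memoized recursion: each n is computed once, then served from cache."""
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)


counter = [0]
print(fib_naive(20, counter))  # 6765
print(counter[0])              # 21891 calls: exponential growth

print(fib_memo(20))                  # 6765
print(fib_memo.cache_info().misses)  # 21 computations: linear growth
```

Both versions return the same answer, but caching cuts the work from roughly exponential in n to linear in n, which is the kind of difference Big O notation is meant to capture.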

13. Why This Knowledge Matters

Understanding the layers, from transistors and binary logic up to programming languages and algorithms, gives you a solid foundation for software engineering, system design, and troubleshooting [1][5]. Whether you are coding, designing, or debugging, this knowledge is invaluable.

References

- Computer Science in 17 mins

- https://www.explainthatstuff.com/howcomputerswork.html

- https://sage-advices.com/what-does-a-transistor-do-in-a-cpu/

- https://dev.to/m_mdy_m/understanding-number-systems-binary-decimal-hexadecimal-and-beyond-55i4

- https://www.savemyexams.com/igcse/computer-science/cie/23/revision-notes/10-boolean-logic/boolean-logic/logic-gates/

- https://artsandculture.google.com/story/how-computers-work/DgWBfSPf_sFSow

- https://www.youtube.com/watch?v=fOcoLKHeOTI

- https://www.techtarget.com/whatis/definition/ASCII-American-Standard-Code-for-Information-Interchange

- https://www.techtarget.com/searchdatacenter/definition/kernel

- https://softwareg.com.au/blogs/computer-hardware/how-does-ram-and-cpu-work-together

- https://www.fortra.com/resources/articles/modeling-multi-threaded-processors

- https://www.linkedin.com/pulse/how-do-computers-work-sam-frymer

- https://newsroom.intel.com/wp-content/uploads/2024/05/tech101_transistors_vis3_v01-1024x779.gif?sa=X&ved=2ahUKEwiZufukq_2MAxUva_UHHXUXAh4Q_B16BAgEEAI

- https://informatecdigital.com/en/Number-systems-in-computing-binary-decimal-and-hexa/

- https://byjus.com/jee/basic-logic-gates/

- https://cs50.harvard.edu/ap/2020/assets/pdfs/ascii.pdf

- https://www.scaler.com/topics/kernel-in-os/

- https://www.livewiredev.com/the-relationship-between-ram-and-cpu-performance/

- https://shardeum.org/blog/cpu-cores-and-threads/

- https://www.reddit.com/r/computerscience/comments/ljfcm3/how_computers_work_the_basics/

- Exploring How Computers Work

![[The AI Show Episode 146]: Rise of “AI-First” Companies, AI Job Disruption, GPT-4o Update Gets Rolled Back, How Big Consulting Firms Use AI, and Meta AI App](https://www.marketingaiinstitute.com/hubfs/ep%20146%20cover.png)

![|ー ▶︎ [ Wouldn't it be easier if you could trace your data structure with lines? ] ー,ー,ー;](https://media2.dev.to/dynamic/image/width%3D1000,height%3D500,fit%3Dcover,gravity%3Dauto,format%3Dauto/https:%2F%2Fdev-to-uploads.s3.amazonaws.com%2Fuploads%2Farticles%2F2k02ska8tyhhnijercgq.png)

_Brian_Jackson_Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

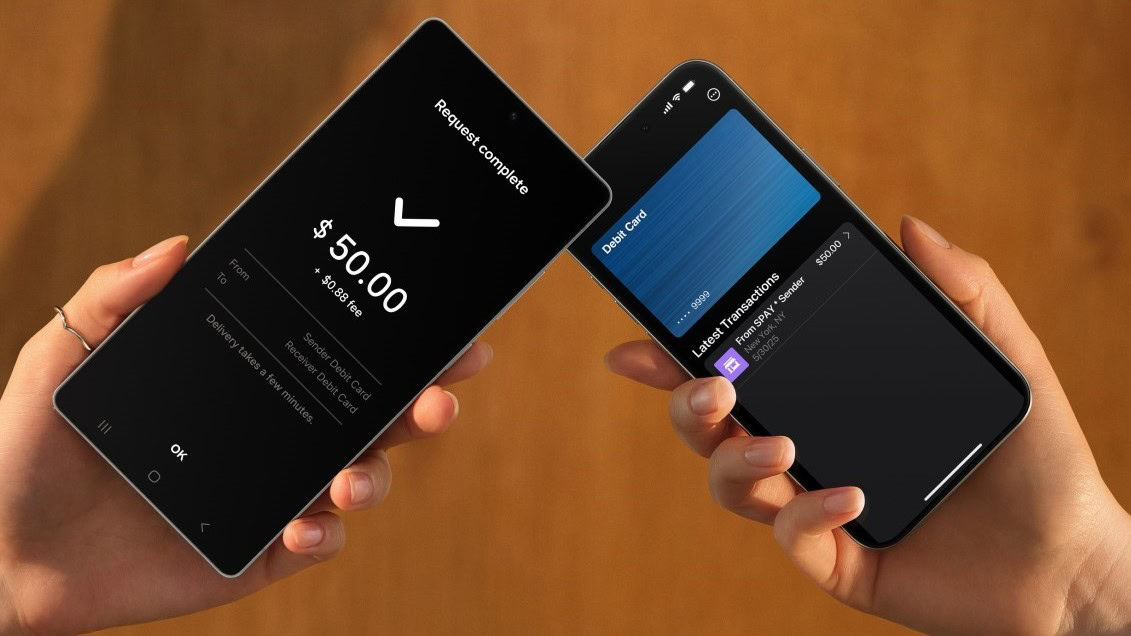

Stolen 884,000 Credit Card Details on 13 Million Clicks from Users Worldwide.webp?#)

![Apple Shares Official Teaser for 'Highest 2 Lowest' Starring Denzel Washington [Video]](https://www.iclarified.com/images/news/97221/97221/97221-640.jpg)

![Under-Display Face ID Coming to iPhone 18 Pro and Pro Max [Rumor]](https://www.iclarified.com/images/news/97215/97215/97215-640.jpg)