AWS Data migration using DMS full load configurations for efficient data loading

Relational databases migration to AWS environment into S3 data lake using AWS DMS Part V: This is the fifth blog in a multi-part series on considerations and observations during the migration of relational databases to an AWS S3 data lake using the AWS DMS service. In the previous blogs, the following considerations were discussed: Part I – Oracle DB CDC processing and network bandwidth Part II – MS SQL Server LOB and Datetime columns Part III – MongoDB load balancing and deleted records Part IV – Network bandwidth issue in details during the migrations This blog will focus mainly on full load data migration settings, configurations from RDBMS source Oracle, and it could be applicable for other RDBMS sources too. Problem statement: With major RDBMS databases in scope for the data migration to AWS, actual data migration was the critical task of this requirement. For small and medium sized tables in all the databases, full load data migration was happening very quickly. Regarding the Oracle data migration considering large Oracle tables in scope and heavy transaction rate on the source, full load tasks were taking quite a long time, more than 1 day for 12-15 large tables in Oracle and with ETL transformations, data validations it uses to take 2-3 days for the complete process. Source tables in scope for this Oracle tables data loading were as follows: Total tables : 120 Large to very large tables : 15 Rows in large tables : 100M – 500M Full load tasks for the tables in DMS was configured as shown below for one of the tables – { { "rule-type": "selection", "rule-id": "1", "rule-name": "1", "object-locator": { "schema-name": "fin", "table-name": "charge_dtls" }, "rule-action": "include", "filters": [] } } Also, these complete data processing was performed for 3-4 times during the development phase due to various reasons as follows– DMS tasks failed as source was not available for few hours over the weekend for maintenance activities Modified timestamp to process changed records not populated correctly in source tables Business requirements and reload due to data issues etc. These data reload adds overall delay for 2-3 days for complete data processing for the large tables and additional cost incurred. Solution overview: Upon further reviewing the full load tasks configured and time required for the full load completion, it was decided to use parallel load options with full load for improving the full load task completion. Parallel load helps in speeding the migration process and make it more efficient. With the parallel options, AWS DMS splits single segmented table into different threads with each thread can run in parallel. Parallel load comes in different configurations for loading data parallelly using separate threads and here parallel load range for column value boundaries used with specific column as follows { "rule-type": "table-settings", "rule-id": "1", "rule-name": "1", "object-locator": { "schema-name": "fin", "table-name": "charge_dtls" }, "parallel-load": { "type": "ranges", "columns": [ "charge_Id" ], "boundaries": [ [ "1000000" ], [ "2000000" ], [ "3000000" ], [ "4000000" ], [ "5000000" ], [ "6000000" ], [ "7000000" ], [ "8000000" ], [ "9000000" ], [ "10000000" ] ] } } Here charge_dtls table is loaded using parallel load with ranges on the Id column. First one need to identify a column to create boundaries and a column with primary, unique or index should be an good choice. This parallel range helps in a scenario for table without data partitions as in this case here. It helped to reduce overall full load time for tables by 50-60% and overall reduction in the data loading, ETL jobs and data validation activity to complete within 1.5 days against 2-3 days before. Full load task in DMS can be configured with other parallel load configurations such as – Partitions-auto: for the tables with partitions already defined while table creations. E.g. suppose above table is d

Relational databases migration to AWS environment into S3 data lake using AWS DMS Part V:

This is the fifth blog in a multi-part series on considerations and observations during the migration of relational databases to an AWS S3 data lake using the AWS DMS service.

In the previous blogs, the following considerations were discussed:

- Part I – Oracle DB CDC processing and network bandwidth

- Part II – MS SQL Server LOB and Datetime columns

- Part III – MongoDB load balancing and deleted records

- Part IV – Network bandwidth issue in details during the migrations

This blog will focus mainly on full load data migration settings, configurations from RDBMS source Oracle, and it could be applicable for other RDBMS sources too.

Problem statement:

With major RDBMS databases in scope for the data migration to AWS, actual data migration was the critical task of this requirement. For small and medium sized tables in all the databases, full load data migration was happening very quickly.

Regarding the Oracle data migration considering large Oracle tables in scope and heavy transaction rate on the source, full load tasks were taking quite a long time, more than 1 day for 12-15 large tables in Oracle and with ETL transformations, data validations it uses to take 2-3 days for the complete process.

Source tables in scope for this Oracle tables data loading were as follows:

Total tables : 120

Large to very large tables : 15

Rows in large tables : 100M – 500M

Full load tasks for the tables in DMS was configured as shown below for one of the tables –

{

{

"rule-type": "selection",

"rule-id": "1",

"rule-name": "1",

"object-locator": {

"schema-name": "fin",

"table-name": "charge_dtls"

},

"rule-action": "include",

"filters": []

}

}

Also, these complete data processing was performed for 3-4 times during the development phase due to various reasons as follows–

DMS tasks failed as source was not available for few hours over the weekend for maintenance activities

Modified timestamp to process changed records not populated correctly in source tables

Business requirements and reload due to data issues etc.

These data reload adds overall delay for 2-3 days for complete data processing for the large tables and additional cost incurred.

Solution overview:

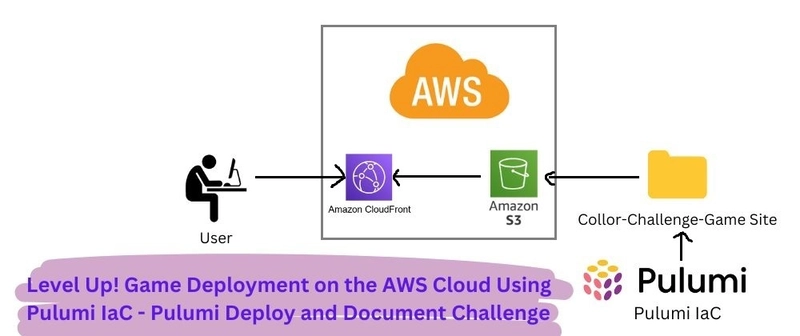

Upon further reviewing the full load tasks configured and time required for the full load completion, it was decided to use parallel load options with full load for improving the full load task completion.

Parallel load helps in speeding the migration process and make it more efficient. With the parallel options, AWS DMS splits single segmented table into different threads with each thread can run in parallel.

Parallel load comes in different configurations for loading data parallelly using separate threads and here parallel load range for column value boundaries used with specific column as follows

{

"rule-type": "table-settings",

"rule-id": "1",

"rule-name": "1",

"object-locator": {

"schema-name": "fin",

"table-name": "charge_dtls"

},

"parallel-load": {

"type": "ranges",

"columns": [

"charge_Id"

],

"boundaries": [

[

"1000000"

],

[

"2000000"

],

[

"3000000"

],

[

"4000000"

],

[

"5000000"

],

[

"6000000"

],

[

"7000000"

],

[

"8000000"

],

[

"9000000"

],

[

"10000000"

]

]

}

}

Here charge_dtls table is loaded using parallel load with ranges on the Id column.

First one need to identify a column to create boundaries and a column with primary, unique or index should be an good choice.

This parallel range helps in a scenario for table without data partitions as in this case here.

It helped to reduce overall full load time for tables by 50-60% and overall reduction in the data loading, ETL jobs and data validation activity to complete within 1.5 days against 2-3 days before.

Full load task in DMS can be configured with other parallel load configurations such as –

- Partitions-auto: for the tables with partitions already defined while table creations. E.g. suppose above table is defined with partition on charge_year while creation would be configured as follows –

{

"rule-type": "table-settings",

"rule-id": "1",

"rule-name": "1",

"object-locator": {

"schema-name": "fin",

"table-name": "charge_dtls"

},

"parallel-load": {

"type": "partitions-auto"

}

}

- Partitions-list: Above table can be loaded using partitions list on the same partition column charge_year to filter and fetch data from specific partition values, as shown below to fetch data only for partition values 2024 and 2025 for charge_year column

{

"rule-type": "table-settings",

"rule-id": "1",

"rule-name": "1",

"object-locator": {

"schema-name": "fin",

"table-name": "charge_dtls"

},

"parallel-load": {

"type": "partitions-list",

“partitions”: [“2024”, “2025”]

}

}

MaxFullLoadSubTasks is settings for the number of tables or table segments to run in parallel with max value as 49 and default value as 8.

It needs to be configured accordingly for running full load using parallel configurations or multiple small tables to run in parallel.

Conclusion:

In this blog, it was discussed about the parallel load options available during full load data migration for improving full load performance and reducing the required data loading time. Most of the times these options were not considered, and data loading is completed using default configurations.

![[The AI Show Episode 142]: ChatGPT’s New Image Generator, Studio Ghibli Craze and Backlash, Gemini 2.5, OpenAI Academy, 4o Updates, Vibe Marketing & xAI Acquires X](https://www.marketingaiinstitute.com/hubfs/ep%20142%20cover.png)

![[DEALS] The Premium Learn to Code Certification Bundle (97% off) & Other Deals Up To 98% Off – Offers End Soon!](https://www.javacodegeeks.com/wp-content/uploads/2012/12/jcg-logo.jpg)

![From drop-out to software architect with Jason Lengstorf [Podcast #167]](https://cdn.hashnode.com/res/hashnode/image/upload/v1743796461357/f3d19cd7-e6f5-4d7c-8bfc-eb974bc8da68.png?#)

.png?#)

.webp?#)

_Christophe_Coat_Alamy.jpg?#)

(1).webp?#)

![Apple Considers Delaying Smart Home Hub Until 2026 [Gurman]](https://www.iclarified.com/images/news/96946/96946/96946-640.jpg)

![iPhone 17 Pro Won't Feature Two-Toned Back [Gurman]](https://www.iclarified.com/images/news/96944/96944/96944-640.jpg)

![Tariffs Threaten Apple's $999 iPhone Price Point in the U.S. [Gurman]](https://www.iclarified.com/images/news/96943/96943/96943-640.jpg)