Automating Tests for Multiple Generative AI APIs Using Postman (Newman) and Exporting Responses to CSV

When integrating generative AI into a chatbot, I wanted to compare the responses of various AI APIs (OpenAI, Gemini, Bedrock (Claude)) and analyze the differences in output. To achieve this, I used Postman’s Newman to batch execute API requests and save the results in a CSV file.

This article explains how to set up each API and use Postman (Newman) for response comparison.

API Configuration

First, set up the generative AI APIs (OpenAI, Gemini, Bedrock) in Postman.

1. OpenAI API

Creating an API Key

Log in to OpenAI’s API platform and create an API key.

Additionally, you must add at least $5 USD in prepaid credits to your account before you can use the API.

Postman Configuration

Send a request to OpenAI’s /chat/completions endpoint.

- Endpoint:

POST https://api.openai.com/v1/chat/completions

- Headers:

Content-Type: application/json

Authorization: Bearer <YOUR_OPENAI_API_KEY>

- Body:

{
  "model": "gpt-4o",
  "messages": [
    {
      "role": "system",
      "content": "{{system_prompt}}"
    },
    {
      "role": "user",
      "content": "{{input_prompt}}"
    }
  ],
  "max_tokens": 200,
  "temperature": 0.7
}

Notes

- {{system_prompt}} and {{input_prompt}} will be populated with values from prompts.json used by Newman (explained later).

- max_tokens and temperature are adjustable parameters.
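One thing to watch for: the {{...}} placeholders are replaced as raw text before the body is sent, so a prompt that contains a double quote or a line break can produce invalid JSON. The sketch below imitates that substitution in Python to show the effect. The helpers render and escape_for_body are hypothetical names used only for illustration, not part of Postman or Newman, and pre-escaping values in the data file is just one possible workaround.

```python
import json
import re

PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")

# A trimmed-down request body with one placeholder, as it would appear in Postman.
TEMPLATE = '{"messages": [{"role": "user", "content": "{{input_prompt}}"}]}'


def render(template: str, row: dict) -> str:
    """Replace each {{name}} with row[name] as raw text, with no escaping."""
    return PLACEHOLDER.sub(lambda m: str(row[m.group(1)]), template)


def escape_for_body(value: str) -> str:
    """JSON-escape a value so it stays valid inside a quoted JSON string."""
    return json.dumps(value)[1:-1]  # drop the surrounding quotes


def is_valid_json(text: str) -> bool:
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


if __name__ == "__main__":
    risky = {"input_prompt": 'Explain the word "API" in one line.'}
    print(is_valid_json(render(TEMPLATE, risky)))  # raw substitution breaks the body

    safe = {key: escape_for_body(value) for key, value in risky.items()}
    print(is_valid_json(render(TEMPLATE, safe)))   # escaped value keeps it valid
```

If your prompts may contain quotes or newlines, storing the escaped form in prompts.json keeps the request body parseable.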

2. Gemini API

Creating an API Key

Create a Google Cloud project and enable the Gemini API.

Then, generate an API key from "APIs & Services > Credentials".

Postman Configuration

In this setup, the Gemini API key is passed as the key query parameter. (It can also be sent in the x-goog-api-key header.)

- Endpoint:

POST https://generativelanguage.googleapis.com/v1/models/gemini-1.5-pro:generateContent

- Params:

key: <YOUR_GEMINI_API_KEY>

- Headers:

Content-Type: application/json

- Body:

{
  "contents": [
    {
      "role": "user",
      "parts": [
        { "text": "{{system_prompt}}" }
      ]
    },
    {
      "role": "user",
      "parts": [
        { "text": "{{input_prompt}}" }
      ]
    }
  ],
  "generationConfig": {
    "temperature": 0.7,
    "maxOutputTokens": 200
  }
}

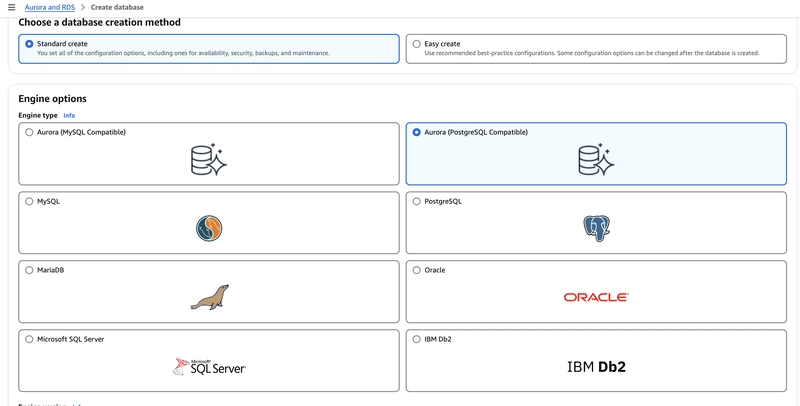
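Because the key travels in the URL, it can end up wherever request URLs are recorded, such as logs or exported reports. As a minimal sketch, the hypothetical helper redact_key below masks the key parameter before a URL is stored or shared. It is not part of Postman or Newman.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def redact_key(url: str, param: str = "key") -> str:
    """Return the URL with the value of the given query parameter masked."""
    parts = urlsplit(url)
    query = [
        (name, "REDACTED" if name == param else value)
        for name, value in parse_qsl(parts.query, keep_blank_values=True)
    ]
    return urlunsplit(parts._replace(query=urlencode(query)))


if __name__ == "__main__":
    url = (
        "https://generativelanguage.googleapis.com/v1/models/"
        "gemini-1.5-pro:generateContent?key=abc123"
    )
    print(redact_key(url))
```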

3. Bedrock (Claude API)

Creating an API Key

In the AWS Console, navigate to "Amazon Bedrock > Model Access" and request access to the desired model.

Create an IAM user and grant it the necessary Bedrock permissions. To minimize security risks, use a custom policy (for example, one that allows only bedrock:InvokeModel) rather than the AmazonBedrockFullAccess managed policy.

Then, generate an access key (and secret access key) in the IAM console.

Postman Configuration

Bedrock API uses AWS Signature authentication, which should be configured in Postman’s Auth tab.

- Endpoint:

POST https://bedrock-runtime.ap-northeast-1.amazonaws.com/model/anthropic.claude-3-5-sonnet-20240620-v1:0/invoke

- Auth:

Auth Type: AWS Signature

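AccessKey: <YOUR_AWS_ACCESS_KEY_ID>

SecretKey: <YOUR_AWS_SECRET_ACCESS_KEY>

AWS Region: ap-northeast-1 (must match the region in the endpoint)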

Service Name: bedrock

- Headers:

Content-Type: application/json

Accept: application/json

- Body:

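{
  "anthropic_version": "bedrock-2023-05-31",
  "max_tokens": 200,
  "system": "{{system_prompt}}",
  "messages": [
    {
      "role": "user",
      "content": "{{input_prompt}}"
    }
  ]
}

Note that, unlike OpenAI, the Anthropic Messages format accepts only user and assistant roles inside messages. The system prompt goes in the top-level system field.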
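Postman computes the AWS signature automatically once the Auth tab is filled in. For readers curious about what that setting does, the sketch below shows the signing-key derivation step of AWS Signature Version 4 using only the Python standard library. It covers key derivation only, not the canonical request or the final Authorization header, and the credential values are placeholders.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, message: str) -> bytes:
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def derive_signing_key(secret_key: str, date_stamp: str, region: str, service: str) -> bytes:
    """Derive the SigV4 signing key from the secret key, date (YYYYMMDD), region, and service."""
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


if __name__ == "__main__":
    # Placeholder secret; the region and service mirror the Postman settings above.
    key = derive_signing_key("EXAMPLE_SECRET", "20250101", "ap-northeast-1", "bedrock")
    print(key.hex())
```

The region and service name feed directly into the key, which is why a mismatch in either Postman field leads to a signature error.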

Running Tests with Newman

Newman is a CLI tool for running Postman collections. It allows sending the same prompt to different AI models and exporting responses to a CSV file for comparison.

1. Install Newman

$ npm install newman newman-reporter-csv

2. Export Postman Collection

In Postman, export the collection that contains the three API requests and save it as collection.json.

3. Create prompts.json

[
  { "system_prompt": "You are a travel planner.", "input_prompt": "Suggest three travel destinations." },
  { "system_prompt": "You are an IT engineer and a programming instructor.", "input_prompt": "Recommend three programming languages for beginners." }
]
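Newman runs one iteration per object in the data file, and each key becomes a variable available to the {{...}} placeholders. As a minimal sketch, a small script can generate the file and check that every entry defines both variables, which avoids unresolved placeholders at run time. The function names below are hypothetical.

```python
import json
from pathlib import Path

REQUIRED_KEYS = ("system_prompt", "input_prompt")


def validate_prompts(rows: list) -> list:
    """Return a list of problems; an empty list means the data is usable."""
    problems = []
    for index, row in enumerate(rows):
        for key in REQUIRED_KEYS:
            value = row.get(key) if isinstance(row, dict) else None
            if not isinstance(value, str) or not value.strip():
                problems.append(f"row {index}: missing or empty '{key}'")
    return problems


def write_prompts(rows: list, path: str = "prompts.json") -> None:
    """Validate the rows, then write them as a JSON array for Newman's -d option."""
    problems = validate_prompts(rows)
    if problems:
        raise ValueError("; ".join(problems))
    Path(path).write_text(json.dumps(rows, ensure_ascii=False, indent=2), encoding="utf-8")


if __name__ == "__main__":
    write_prompts([
        {"system_prompt": "You are a travel planner.",
         "input_prompt": "Suggest three travel destinations."},
    ])
```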

4. Execute Newman

$ npx newman run collection.json -d prompts.json -r cli,csv \
    --reporter-csv-export responses.csv --reporter-csv-includeBody

This generates a CSV with response data, enabling easy comparison of different AI model outputs.
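Each provider wraps the generated text in a different JSON structure, so the raw response bodies are hard to compare side by side. The sketch below reads the report and pulls out the generated text by inspecting the shape of each body. It assumes the CSV has iteration, requestName, and body columns; check the header row of your own responses.csv and adjust the names if they differ.

```python
import csv
import json


def extract_text(body: str) -> str:
    """Return the generated text from an OpenAI, Gemini, or Claude response body."""
    try:
        data = json.loads(body)
    except (json.JSONDecodeError, TypeError):
        return ""
    if not isinstance(data, dict):
        return ""
    try:
        if "choices" in data:  # OpenAI chat completions
            return data["choices"][0]["message"]["content"] or ""
        if "candidates" in data:  # Gemini generateContent
            return data["candidates"][0]["content"]["parts"][0]["text"]
        if isinstance(data.get("content"), list):  # Claude on Bedrock
            return data["content"][0]["text"]
    except (KeyError, IndexError, TypeError):
        return ""
    return ""


def summarize(csv_file) -> list:
    """Map each CSV row to its iteration, request name, and extracted text."""
    return [
        {
            "iteration": row.get("iteration", ""),
            "request": row.get("requestName", ""),
            "text": extract_text(row.get("body", "")),
        }
        for row in csv.DictReader(csv_file)
    ]


if __name__ == "__main__":
    with open("responses.csv", newline="", encoding="utf-8") as f:
        for item in summarize(f):
            print(item["iteration"], item["request"], item["text"][:80])
```

Rows whose body is an error payload or not JSON at all come back with an empty text, which makes failed requests easy to spot.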

Conclusion

By leveraging Postman and Newman, we can automate the testing of multiple generative AI APIs, ensuring a standardized method for evaluating different AI responses. The ability to store results in CSV format enables easy comparison and analysis, making it a valuable approach for chatbot development and AI benchmarking. Future enhancements could include integrating automated validation checks, improving logging mechanisms, and exploring additional AI models for broader comparisons.
