AI Agents with Fetch.ai: Tips for Smarter Automation ⚡

Fetch.ai’s uAgents framework allows developers to build intelligent, autonomous AI agents that can automate tasks, communicate, and learn from data. Whether you're new to AI agents or looking to optimize your workflow, these tips will help you make the most of Fetch.ai’s uAgents.

Use Async for Faster Communication

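uAgents handlers are coroutines running on an asyncio event loop, so a single blocking call (a time.sleep, a synchronous HTTP request) stalls every other message the agent is handling. Use async and await throughout your agent logic. Messages are typed: define them as Model subclasses and send instances rather than bare strings.

    from uagents import Context, Model

    class Message(Model):
        text: str

    # Register with @agent.on_message(model=Message) on your Agent instance
    async def handle_message(ctx: Context, sender: str, msg: Message):
        await ctx.send(sender, Message(text=f"Received your message: {msg.text}"))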
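To see why non-blocking handlers matter, here is a minimal sketch that uses only the standard library's asyncio, not uAgents itself. The name `slow_handler` and the delays are made up for illustration. Three handlers that each wait 0.2 s finish in roughly 0.2 s when awaited together, instead of 0.6 s one after another.

```python
import asyncio
import time
from typing import List, Tuple


async def slow_handler(name: str, delay: float) -> str:
    # Stand-in for network I/O; awaiting yields control to other tasks.
    await asyncio.sleep(delay)
    return f"{name} done"


async def run_all() -> List[str]:
    # gather() runs the coroutines concurrently and keeps argument order.
    return await asyncio.gather(
        slow_handler("a", 0.2),
        slow_handler("b", 0.2),
        slow_handler("c", 0.2),
    )


def timed_run() -> Tuple[List[str], float]:
    start = time.perf_counter()
    results = asyncio.run(run_all())
    return results, time.perf_counter() - start


if __name__ == "__main__":
    results, elapsed = timed_run()
    print(results, f"{elapsed:.2f}s")
```

Swapping `asyncio.sleep` for `time.sleep` in `slow_handler` would serialize the three calls, which is the behavior to avoid inside an agent.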

Persist Agent State with Storage

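Instead of losing data when your agent restarts, use ctx.storage, a key-value store that survives restarts. Write with set() and read with get().

    # Message: a uagents Model subclass with one `text: str` field
    async def store_data(ctx: Context, sender: str, msg: Message):
        ctx.storage.set("last_message", msg.text)
        await ctx.send(sender, Message(text="Message stored!"))

Retrieve the stored value later; get() returns None for a missing key, so supply the fallback yourself:

    last_message = ctx.storage.get("last_message") or "No message found"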
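What persistence buys you is easiest to see with a toy store. The sketch below is not uAgents' storage implementation; `JsonStore`, `demo`, and the file name `agent_state.json` are hypothetical names chosen for illustration. It writes every change to a JSON file and reloads that file on start, which is the general idea behind a restart-safe key-value store.

```python
import json
import os
import tempfile


class JsonStore:
    """Toy key-value store that writes every change to a JSON file."""

    def __init__(self, path: str):
        self.path = path
        self.data = {}
        if os.path.exists(path):
            with open(path, "r", encoding="utf-8") as f:
                self.data = json.load(f)

    def get(self, key: str):
        # Returns None when the key is missing.
        return self.data.get(key)

    def set(self, key: str, value) -> None:
        self.data[key] = value
        with open(self.path, "w", encoding="utf-8") as f:
            json.dump(self.data, f)


def demo() -> str:
    path = os.path.join(tempfile.mkdtemp(), "agent_state.json")
    JsonStore(path).set("last_message", "hello")
    # A fresh instance simulates the agent restarting.
    restarted = JsonStore(path)
    return restarted.get("last_message") or "No message found"


if __name__ == "__main__":
    print(demo())
```

Only JSON-serializable values survive the round trip, so keep stored state to strings, numbers, lists, and dicts.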

Secure Agent Communication

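Protect sensitive data in transit between agents. uAgents wraps each message in an envelope signed with the sender's key, which lets the recipient verify who sent it; signing is not encryption, so sensitive payloads still need to be encrypted. Before relying on the call below, confirm that the encrypt keyword is accepted by Context.send in the uAgents release you have installed.

    await ctx.send(receiver_address, encrypted_data, encrypt=True)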

Use Multiple Slave Agents for Load Balancing

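Instead of processing everything in a single agent, distribute tasks across multiple slave agents. The simplest master picks a slave at random, as below; once slaves report their load, the master can assign tasks based on availability instead.

    import random

    # Placeholder addresses. Message is a uagents Model subclass with a `text: str` field.
    slave_agents = ["slave-1-address", "slave-2-address"]
    selected_agent = random.choice(slave_agents)
    await ctx.send(selected_agent, Message(text="Process this task!"))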
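Assigning by availability needs some record of how busy each agent is. A minimal sketch, assuming the master keeps a count of unfinished tasks per address; the `in_flight` dictionary and both function names are hypothetical and not part of uAgents.

```python
from typing import Dict


def pick_least_busy(in_flight: Dict[str, int]) -> str:
    """Return the address with the fewest unfinished tasks."""
    if not in_flight:
        raise ValueError("no agents registered")
    # min() returns the first minimal key, so ties go to the earliest entry.
    return min(in_flight, key=in_flight.get)


def assign(in_flight: Dict[str, int]) -> str:
    """Pick an agent and count the new task against it."""
    address = pick_least_busy(in_flight)
    in_flight[address] += 1  # decrement when the agent reports completion
    return address


if __name__ == "__main__":
    load = {"slave-1-address": 2, "slave-2-address": 0}
    print([assign(load) for _ in range(3)], load)
```

The master would call `assign` before each `ctx.send` and decrement the counter when a completion message comes back.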

Enable Auto-Restart for Reliability

If your agent crashes, you don’t want to restart it manually. Use a process manager like systemd or pm2 to keep it running.

    pm2 start agent.py --name my-fetch-agent

Log Everything for Easier Debugging

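Log through the context's logger, ctx.logger, rather than print, so every entry carries a level and can be filtered when you debug. Include the sender and the payload in each entry.

    ctx.logger.info(f"Received message from {sender}: {message}")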
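If you want log entries that a machine can parse, one option is a JSON formatter from the standard logging module. This is a sketch under the assumption that your agent's logger behaves like a standard `logging.Logger`; `JsonFormatter`, `make_logger`, and the `sender` and `agent` field names are illustrative, not uAgents features.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Fields passed via `extra=` become attributes on the record.
        for key in ("sender", "agent"):
            if hasattr(record, key):
                entry[key] = getattr(record, key)
        return json.dumps(entry)


def make_logger(name: str) -> logging.Logger:
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger


if __name__ == "__main__":
    log = make_logger("my-fetch-agent")
    log.info("Received message", extra={"sender": "slave-1-address"})
```

Each line can then be loaded with `json.loads` and filtered by sender or level.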

Simulate Agent Interactions Locally

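Before deploying agents, test their interactions locally using pytest or simple Python scripts. uAgents provides a Bureau for running several agents in one process, so the master can message a slave without any network setup.

    # Inside one of master_agent's handlers; Message is a uagents Model with a `text: str` field
    await ctx.send(slave_agent.address, Message(text="Test message"))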
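Handler logic can also be tested without starting any agent at all. The sketch below assumes you keep the handler body free of framework setup and pass in a fake context; `FakeContext` is a hypothetical stand-in that only records what was sent, and plain strings take the place of typed message models to keep the example dependency-free.

```python
import asyncio
from typing import List, Tuple


class FakeContext:
    """Hypothetical stand-in for the framework context; records sends."""

    def __init__(self) -> None:
        self.sent: List[Tuple[str, str]] = []

    async def send(self, destination: str, message: str) -> None:
        self.sent.append((destination, message))


async def handle_message(ctx, sender: str, message: str) -> None:
    # Echo logic under test, with no framework imports.
    await ctx.send(sender, f"Received your message: {message}")


def test_handler_replies_to_sender() -> None:
    ctx = FakeContext()
    asyncio.run(handle_message(ctx, "master-address", "Test message"))
    assert ctx.sent == [
        ("master-address", "Received your message: Test message")
    ]


if __name__ == "__main__":
    test_handler_replies_to_sender()
    print("ok")
```

Because the function name starts with `test_`, pytest picks it up when the file name also starts with `test_`.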

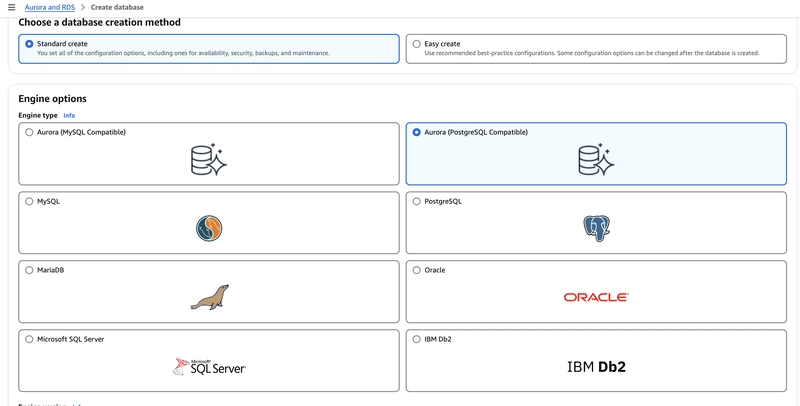

Deploy Agents in the Cloud for Scalability

To ensure high availability, deploy your Fetch.ai agents on cloud platforms like AWS, GCP, or DigitalOcean. Use containerization with Docker:

    docker build -t fetch-agent .
    docker run -d --name fetch-agent fetch-agent

Set Up Webhooks for Real-Time Events

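If your agent needs to notify external systems, post to their webhooks when an event occurs. The helper below may not exist on the Context of the uAgents release you have installed; if it doesn't, send the payload with any HTTP client from inside a handler, taking care not to block the event loop.

    ctx.send_webhook("https://myapi.com/webhook", payload)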
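A webhook call is an HTTP POST with a JSON body, so it can be sketched with the standard library alone. This is an assumption-laden example rather than a uAgents API: `build_webhook_request`, `post_blocking`, and `post_webhook` are hypothetical helpers. The blocking request runs in a worker thread so an async handler can await it without stalling other messages.

```python
import asyncio
import json
import urllib.request


def build_webhook_request(url: str, payload: dict) -> urllib.request.Request:
    """Prepare a JSON POST without sending it."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def post_blocking(req: urllib.request.Request, timeout: float = 10.0) -> int:
    # urlopen blocks, so it is only called from a worker thread below.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status


async def post_webhook(url: str, payload: dict) -> int:
    req = build_webhook_request(url, payload)
    # to_thread (Python 3.9+) keeps the event loop free during the request.
    return await asyncio.to_thread(post_blocking, req)
```

Inside a handler this would be used as `status = await post_webhook("https://myapi.com/webhook", payload)`, with error handling around it for timeouts and non-2xx responses.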

Integrate AI/ML for Smarter Agents

Enhance your agents by integrating AI models for decision-making, natural language processing, or fraud detection.

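    from transformers import pipeline

    # Loads a default sentiment model; the weights are downloaded on first use
    nlp_model = pipeline("sentiment-analysis")

    def analyze_text(text):
        # Returns a list like [{"label": "POSITIVE", "score": 0.99}]
        return nlp_model(text)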
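A model's output only makes an agent smarter once it drives a decision. The sketch below assumes the classifier returns a list of dicts with `label` and `score` keys, as the sentiment pipeline does; the action names, the 0.8 threshold, and `fake_classifier` are hypothetical and exist only so the example runs without downloading a model.

```python
from typing import Callable, Dict, List

Classifier = Callable[[str], List[Dict[str, object]]]


def decide(text: str, classify: Classifier, threshold: float = 0.8) -> str:
    """Map a sentiment result to a hypothetical agent action."""
    result = classify(text)[0]
    label, score = result["label"], float(result["score"])
    if score < threshold:
        return "ask_human"  # not confident enough to act automatically
    return "escalate" if label == "NEGATIVE" else "acknowledge"


def fake_classifier(text: str) -> List[Dict[str, object]]:
    # Stub with the same output shape as the sentiment pipeline.
    if "broken" in text:
        return [{"label": "NEGATIVE", "score": 0.97}]
    return [{"label": "POSITIVE", "score": 0.6}]


if __name__ == "__main__":
    print(decide("the device is broken", fake_classifier))
    print(decide("all good", fake_classifier))
```

In a real agent you would pass `analyze_text` in place of `fake_classifier`; keeping the classifier as a parameter also makes the decision logic easy to test.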

Final Thoughts

AI agents powered by Fetch.ai open up exciting possibilities for automation, optimization, and decentralized intelligence. Whether you’re building smart contracts, autonomous trading bots, or intelligent supply chain solutions, Fetch.ai provides a robust foundation to get started.

If you’re new to Fetch.ai, here are some useful resources to dive deeper:

- Fetch.ai Documentation – Learn the fundamentals and advanced capabilities of the Fetch ecosystem.

- Fetch.ai GitHub – Explore open-source projects and real-world implementations.

- Fetch.ai Developer Portal – Find tools, SDKs, and tutorials to build your own AI-powered agents.

- Fetch.ai Discord – Connect with the community and get support from experts.

With the right approach, you can use Fetch.ai to create intelligent, autonomous systems that improve efficiency and decision-making across industries. What are you building with Fetch.ai? Drop a comment below. I’d love to hear your thoughts!
