Advanced Data Analytics Techniques for the Experienced Analyst

Experienced analysts who want to deepen their skills need more than descriptive reporting. This guide surveys the advanced techniques that matter most in practice: predictive modeling, machine learning, data pipelines, feature engineering, natural language processing, real-time analytics, visualization, causal inference, and responsible data use.

Predictive Analytics and Forecasting

Predictive analytics uses historical data and statistical algorithms to anticipate future trends and behaviors. One of the most widely used techniques is regression analysis, which quantifies relationships between variables, such as how advertising spend affects sales. Time series forecasting, using models like ARIMA and exponential smoothing, lets businesses project seasonal patterns and future demand. Machine learning methods like Random Forest and Gradient Boosting can improve accuracy further because they capture nonlinear relationships and interactions between variables without the analyst specifying them by hand, although they still need careful validation and tuning. These techniques are crucial in areas like risk management, customer retention, and financial planning.
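To make the two classical techniques concrete, here is a minimal sketch using only the Python standard library. It fits a one-variable least-squares line and applies simple exponential smoothing. The data, the function names `fit_line` and `exponential_smoothing`, and the choice of `alpha=0.5` are illustrative assumptions, not part of any particular library; a real project would more likely use statsmodels or scikit-learn.

```python
from typing import List, Tuple


def fit_line(x: List[float], y: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def exponential_smoothing(series: List[float], alpha: float) -> List[float]:
    """Simple exponential smoothing.

    The last smoothed level serves as the one-step-ahead forecast.
    """
    smoothed = [series[0]]
    for value in series[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed


# Hypothetical data: advertising spend and sales, both in thousands.
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [12.0, 14.0, 16.0, 18.0, 20.0]
intercept, slope = fit_line(ad_spend, sales)
print(f"sales ~ {intercept:.2f} + {slope:.2f} * ad_spend")

# Hypothetical monthly demand figures.
monthly_demand = [100.0, 110.0, 105.0, 120.0]
forecast = exponential_smoothing(monthly_demand, alpha=0.5)[-1]
print(f"next-period forecast: {forecast:.2f}")
```

A larger `alpha` makes the forecast react faster to recent values, while a smaller one smooths out noise; simple exponential smoothing does not model trend or seasonality, which is where Holt-Winters or ARIMA variants come in.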

Machine Learning and Artificial Intelligence

Integrating machine learning into analytics extends what analysts can learn from their data. Supervised learning techniques like decision trees, support vector machines, and neural networks are used for classification and regression. Unsupervised learning techniques, by contrast, uncover structure in unlabeled data: k-means clustering suits customer segmentation, principal component analysis reduces dimensionality, and association rule mining underpins market basket analysis. Reinforcement learning is also gaining traction in areas like dynamic pricing and recommendation engines. Fluency with machine learning tools such as scikit-learn, TensorFlow, or PyTorch lets analysts automate and scale their work across massive datasets.
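As an illustration of the clustering idea, the following is a minimal sketch of k-means (Lloyd's algorithm) in plain Python for two-dimensional points. The customer data, the starting centroids, and the helper names are assumptions made up for this example; in practice a library implementation such as scikit-learn's `KMeans` handles initialization and scaling far more robustly.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def squared_distance(a: Point, b: Point) -> float:
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def k_means(
    points: List[Point], centroids: List[Point], max_iter: int = 100
) -> Tuple[List[int], List[Point]]:
    """Alternate assignment and centroid updates until the centroids stop moving."""
    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda k: squared_distance(p, centroids[k]))
            for p in points
        ]
        # Update step: each centroid moves to the mean of its members.
        new_centroids: List[Point] = []
        for k in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                new_centroids.append(
                    (
                        sum(m[0] for m in members) / len(members),
                        sum(m[1] for m in members) / len(members),
                    )
                )
            else:
                # Keep an empty cluster's centroid where it was.
                new_centroids.append(centroids[k])
        if new_centroids == centroids:
            break
        centroids = new_centroids
    return labels, centroids


# Hypothetical customers: (monthly spend in hundreds, visits per month).
customers = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 9.0), (8.5, 9.5)]
labels, centroids = k_means(customers, centroids=[(0.0, 0.0), (10.0, 10.0)])
print(labels)  # [0, 0, 0, 1, 1, 1]
```

The fixed starting centroids keep the example deterministic. Real data usually needs feature scaling first, because k-means relies on Euclidean distance and a feature with a large range would otherwise dominate.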

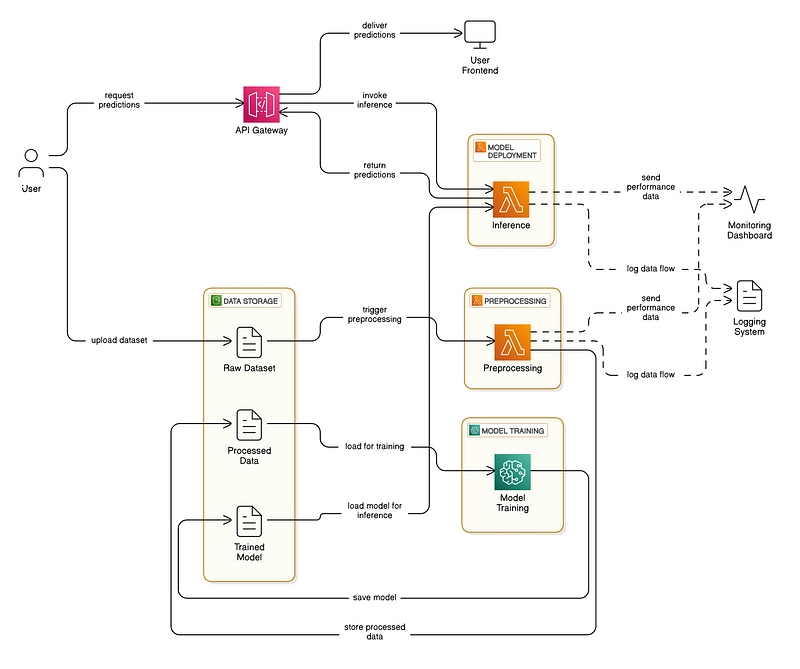

Building Robust Data Pipelines

As data sources multiply, analysts need to understand how data flows through systems, which makes data engineering skills increasingly important. Knowing how to design and manage ETL (Extract, Transform, Load) pipelines ensures that the data reaching your analysis is clean, structured, and up to date. Tools like Apache Airflow for scheduling and dbt for transformation let teams automate and monitor complex workflows. Understanding storage options, from data lakes to warehouses like Snowflake or BigQuery, also helps in optimizing query performance and controlling costs. Solid pipeline design is the foundation of reliable analytics.
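The extract, transform, and load stages can be sketched end to end with the standard library alone. This example is an assumption-laden toy: the inline CSV, the `orders` table, and the cleaning rules (drop rows with no amount, drop duplicate order IDs, normalize customer names) are invented for illustration, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import sqlite3
from typing import Dict, Iterable, Iterator, List, Tuple

# Hypothetical raw export with messy whitespace, a missing amount, and a duplicate.
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,
3,carol,42.50
3,carol,42.50
"""


def extract(raw: str) -> Iterator[Dict[str, str]]:
    """Read rows from the source as dictionaries keyed by column name."""
    yield from csv.DictReader(io.StringIO(raw))


def transform(rows: Iterable[Dict[str, str]]) -> List[Tuple[int, str, float]]:
    """Drop incomplete and duplicate rows, then normalize types and casing."""
    seen = set()
    clean = []
    for row in rows:
        order_id = int(row["order_id"])
        if not row["amount"].strip() or order_id in seen:
            continue
        seen.add(order_id)
        clean.append((order_id, row["customer"].strip().lower(), float(row["amount"])))
    return clean


def load(rows: List[Tuple[int, str, float]], conn: sqlite3.Connection) -> None:
    """Write the cleaned rows to the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)  # [(1, 'alice', 19.99), (3, 'carol', 42.5)]
```

Keeping each stage as a separate function makes it straightforward to wrap the stages as individual tasks in a scheduler later, so a failure in one stage can be retried without rerunning the others.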

Feature Engineering and Model Optimization

Feature engineering is the art of transforming raw data into meaningful inputs for machine learning models. An experienced analyst knows that the success of a model often depends more on the quality of features than the complexity of the algorithm. Creating interaction features, handling categorical variables with techniques like target encoding, and normalizing or scaling numerical data are all part of this process. Moreover, feature selection techniques like Recursive Feature Elimination (RFE) or LASSO regression help reduce model complexity and improve interpretability. These steps not only enhance accuracy but also reduce the risk of overfitting.
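Two of the steps mentioned above, target encoding and scaling, can be sketched in a few lines. The smoothing formula used here (blending each category's mean with the global mean, weighted by a `smoothing` pseudo-count) is one common variant rather than the only definition, and the city and churn data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


def smoothed_target_encoding(
    categories: List[str], targets: List[float], smoothing: float = 1.0
) -> Dict[str, float]:
    """Encode each category as a blend of its own target mean and the global mean.

    encoded = (category_sum + smoothing * global_mean) / (category_count + smoothing)
    """
    global_mean = sum(targets) / len(targets)
    sums: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for category, target in zip(categories, targets):
        sums[category] += target
        counts[category] += 1
    return {
        category: (sums[category] + smoothing * global_mean) / (counts[category] + smoothing)
        for category in counts
    }


def min_max_scale(values: List[float]) -> List[float]:
    """Rescale values linearly to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical data: customer city and whether the customer churned (1) or not (0).
city = ["A", "A", "B", "B", "B", "C"]
churned = [1.0, 0.0, 1.0, 1.0, 1.0, 0.0]
encoding = smoothed_target_encoding(city, churned, smoothing=1.0)
print(encoding)

scaled = min_max_scale([10.0, 20.0, 30.0])
print(scaled)  # [0.0, 0.5, 1.0]
```

Smoothing pulls rare categories such as "C" toward the global mean, so a single observation cannot produce an extreme encoding. To avoid target leakage, the encoding should be computed on training data only (ideally per cross-validation fold) and then applied to validation and test rows.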

Natural Language Processing (NLP)

Much of today's data is text rather than numbers, so the ability to analyze it is invaluable. Natural Language Processing (NLP) lets analysts extract insights from customer reviews, emails, social media posts, and more. With sentiment analysis, businesses can gauge public opinion about their products or services. Topic modeling techniques like Latent Dirichlet Allocation (LDA) surface the main themes in large document collections. More advanced NLP tasks, such as named entity recognition (NER) and text summarization, are used in legal tech, healthcare, and finance. Tools like spaCy, NLTK, and Hugging Face’s transformers library provide powerful capabilities for text analytics.
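The simplest form of sentiment analysis is lexicon-based scoring, sketched below with the standard library. The word lists, the `sentiment_score` function, and the sample reviews are all invented for illustration; this is not how spaCy, NLTK, or transformer models work internally, and it ignores negation, sarcasm, and context.

```python
import re
from typing import List

# Toy lexicons, assumed for this example only.
POSITIVE = {"great", "love", "excellent", "fast"}
NEGATIVE = {"bad", "slow", "broken", "terrible"}


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z']+", text.lower())


def sentiment_score(text: str) -> float:
    """Return a score in [-1, 1]: (positive hits - negative hits) / total hits."""
    tokens = tokenize(text)
    positive_hits = sum(token in POSITIVE for token in tokens)
    negative_hits = sum(token in NEGATIVE for token in tokens)
    total = positive_hits + negative_hits
    if total == 0:
        return 0.0  # No opinion words found, so treat the text as neutral.
    return (positive_hits - negative_hits) / total


reviews = [
    "Great product, fast delivery!",
    "Terrible support and a broken screen.",
    "It arrived on Tuesday.",
]
scores = [sentiment_score(review) for review in reviews]
print(scores)  # [1.0, -1.0, 0.0]
```

A baseline like this is useful mainly as a sanity check: if a trained model cannot beat it on labeled reviews, the problem usually lies in the data or the evaluation rather than the model.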

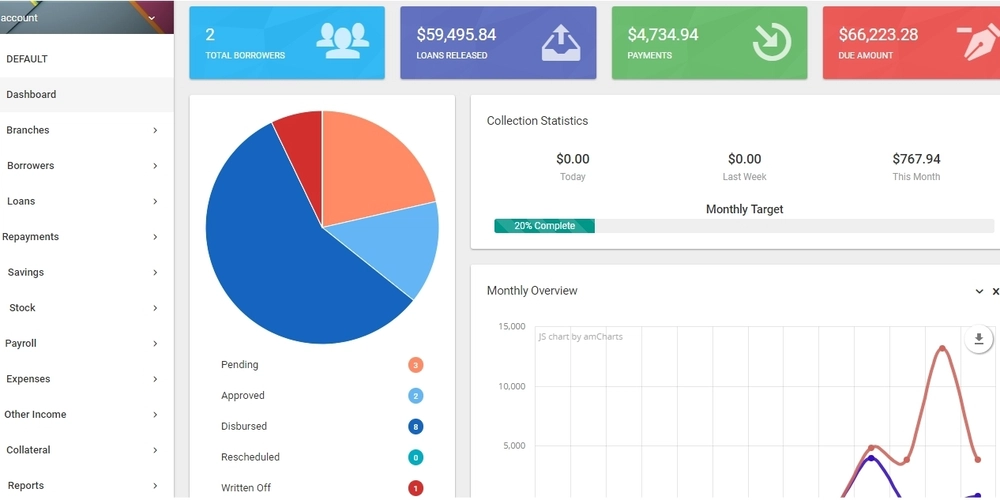

Real-Time Analytics

As industries demand faster decisions, real-time analytics has moved from a nice-to-have to a core capability. Batch processing is often too slow when organizations need to react to changes as they happen. Real-time analytics lets analysts monitor operations with very low latency, whether that means detecting fraud in banking, adjusting inventory in e-commerce, or tracking system health in IT operations. Technologies like Apache Kafka, Spark Streaming, and Flink process events as they arrive. Combined with live dashboards built in Power BI, Tableau, or Grafana, these systems deliver insights while there is still time to act on them.
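The core pattern behind many streaming checks is a sliding time window per key. The sketch below flags a card that makes too many transactions within a trailing window. The event format, the thresholds, and the function name `flag_bursts` are assumptions for illustration, and the code assumes events arrive in timestamp order; engines like Flink or Kafka Streams add the out-of-order handling, state management, and fault tolerance that this toy omits.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Iterator, Tuple

Event = Tuple[float, str]  # (timestamp in seconds, card_id)


def flag_bursts(
    events: Iterable[Event], window_seconds: float = 60.0, max_events: int = 3
) -> Iterator[Event]:
    """Yield each event that pushes a card above max_events in the trailing window."""
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    for timestamp, card_id in events:
        window = recent[card_id]
        window.append(timestamp)
        # Evict timestamps that have fallen out of the trailing window.
        while window and window[0] <= timestamp - window_seconds:
            window.popleft()
        if len(window) > max_events:
            yield timestamp, card_id


# Hypothetical transaction stream, already ordered by time.
stream = [
    (0.0, "card-1"),
    (10.0, "card-1"),
    (15.0, "card-2"),
    (20.0, "card-1"),
    (30.0, "card-1"),
    (200.0, "card-1"),
]
alerts = list(flag_bursts(stream))
print(alerts)  # [(30.0, 'card-1')]
```

Because the function is a generator, it processes one event at a time and keeps only the timestamps inside each card's window in memory, which is the property that lets this pattern scale to unbounded streams.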

Advanced Visualization and Storytelling

Experienced analysts know that the true power of data lies in how it’s communicated. Advanced data visualization goes beyond static charts to include interactive dashboards and dynamic visual storytelling. Tools like Tableau, Power BI, and Plotly allow users to explore data through filters, sliders, and drill-down options. Analysts can also use geospatial tools like GeoPandas or Mapbox to create location-based insights. In some cases, network graphs and tree maps help visualize relationships that are not easily seen in tables. Effective visualization guides decision-makers through the data, focusing on clarity, context, and actionable outcomes.

Causal Inference and Experimental Design

While correlation is useful, understanding causation is critical for making reliable business decisions. Causal inference techniques help determine whether a specific action actually causes a desired outcome. A/B testing, commonly used in marketing and product design, is the most straightforward form of experimentation because random assignment balances confounding factors across groups on average. When randomization is not feasible, techniques like Propensity Score Matching or Difference-in-Differences (DiD) approximate experimental conditions using observational data, provided their assumptions hold (for DiD, that the treated and control groups would have followed parallel trends without the intervention). These methods are especially valuable when evaluating campaign effectiveness, policy changes, or pricing strategies.
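Both ideas reduce to short calculations. The sketch below runs a pooled two-proportion z-test for an A/B test and computes a basic difference-in-differences estimate from four group means. The conversion counts and revenue figures are hypothetical, and the function names are assumptions; a production analysis would also check sample size, multiple testing, and the parallel-trends assumption.

```python
import math
from typing import Tuple


def two_proportion_z_test(
    conversions_a: int, n_a: int, conversions_b: int, n_b: int
) -> Tuple[float, float]:
    """Pooled two-proportion z-test; returns (z, two-sided p-value)."""
    p_a = conversions_a / n_a
    p_b = conversions_b / n_b
    pooled = (conversions_a + conversions_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / standard_error
    # Two-sided tail probability of the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


def difference_in_differences(
    treated_pre: float, treated_post: float, control_pre: float, control_post: float
) -> float:
    """Change in the treated group minus change in the control group."""
    return (treated_post - treated_pre) - (control_post - control_pre)


# Hypothetical A/B test: 100 of 1000 convert on variant A, 130 of 1000 on variant B.
z, p_value = two_proportion_z_test(100, 1000, 130, 1000)
print(f"z = {z:.3f}, p = {p_value:.4f}")

# Hypothetical average weekly revenue before and after a pricing change.
did = difference_in_differences(
    treated_pre=50.0, treated_post=65.0, control_pre=48.0, control_post=53.0
)
print(f"DiD estimate: {did}")  # DiD estimate: 10.0
```

In the DiD example the treated stores rose by 15 and the control stores by 5, so the estimate attributes a lift of 10 to the pricing change, on the assumption that both groups would otherwise have moved in parallel.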

Ethics and Responsible Data Use

As analytics becomes more powerful, so does the responsibility to use it ethically. Experienced analysts must be vigilant about bias in their models, especially when dealing with sensitive attributes like race, gender, or age. Fairness audits check whether outcomes differ across groups, while interpretability techniques such as SHAP values or LIME explain which features drive individual predictions; together they help maintain transparency. Adherence to data privacy regulations like GDPR or CCPA is not just legal compliance, but a matter of trust. Embedding ethical considerations into the analytical process is essential for long-term success and integrity.
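One basic fairness audit compares how often a model gives a positive outcome to each group. The sketch below computes per-group selection rates and their ratio, sometimes called the disparate impact ratio. The groups, predictions, and function names are hypothetical, and this demographic-parity check is a different technique from SHAP or LIME, which explain predictions rather than compare group outcomes.

```python
from collections import defaultdict
from typing import Dict, List


def selection_rates(groups: List[str], predictions: List[int]) -> Dict[str, float]:
    """Share of positive predictions (1) within each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, prediction in zip(groups, predictions):
        totals[group] += 1
        positives[group] += prediction
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest selection rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # No group receives positive outcomes, so rates are equal.
    return min(rates.values()) / highest


# Hypothetical loan approvals (1 = approved) for two applicant groups.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
rates = selection_rates(groups, predictions)
ratio = disparate_impact_ratio(rates)
print(rates)  # {'a': 0.75, 'b': 0.25}
print(f"disparate impact ratio: {ratio:.2f}")
```

A ratio well below 1.0, as here, is a signal to investigate rather than a verdict. A commonly cited heuristic treats ratios under 0.8 as a warning sign, but the appropriate metric and threshold depend on the use case and the applicable regulations.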

Continuous Learning and Adaptation

Data analytics evolves constantly. To stay competitive, analysts must embrace lifelong learning. Reading blogs, joining online communities, and taking professional courses, such as a data analytics course in Noida, Delhi, Mumbai, or other parts of India, help you stay current with the latest tools and trends. Attending webinars, conferences, or local meetups introduces fresh perspectives and emerging practices. The more you explore, adapt, and grow, the more valuable you become in your field.

In The End

Advanced data analytics is more than a collection of tools—it’s a mindset. It’s about approaching problems with curiosity, precision, and a readiness to dig deeper than surface-level insights. As you continue your journey, remember that mastering analytics is not just about models and metrics, but about driving real, measurable impact.
