What Happens When AI Becomes the Client?

Most APIs were built for humans. We wrote docs assuming someone would read them. We wrapped our endpoints in auth flows and user interfaces. We expected a person, or maybe a service, on the other side. But that’s already changing. AI models are now consuming APIs directly. Not as passive data readers, but as active participants. They parse user intent, synthesize context, and trigger backend actions. They're not just clients. They're intermediaries. And that changes everything. From Prompts to Protocols When models call APIs, natural language stops being enough. We like to imagine that models “understand” prompts, but in practice, they just compute probabilities over sequences of text. They don’t grasp nuance the way humans do. A tiny shift in phrasing can derail the whole interaction. That makes coordination between models brittle. And the more we ask them to collaborate, especially across tools, teams, or tasks, the worse that brittleness becomes. What’s the fix? Not better prompts. Structure. We need something like a communication protocol. A way for models to reason about roles, goals, and permissions—not just the text of a request. Cosmo + MCP: Building Toward Structured AI Interactions At WunderGraph, we’ve been building toward that future with Cosmo, our GraphQL federation platform. We recently added support for Anthropic’s Model Context Protocol, which gives models a schema-aware interface for accessing APIs. Instead of tossing raw prompts over HTTP, agents can interact through structured definitions, like a strongly typed contract between backend services and autonomous clients. It’s still early, but it’s a step toward something more repeatable. More predictable. More machine-native. It’s Not Just About APIs Anymore Think about how humans collaborate. A product designer hands off work to a frontend engineer. A nurse calls in a specialist. A marketing team reviews a PR draft. Each role has context. Structure. A shared understanding of who does what. Right now, most AI agents operate in isolation. You send a prompt. They respond. Maybe they call a tool. But there's no persistent memory. No shared assumptions. No reliable handoff between agents. That’s the gap that protocols aim to close. Oxford’s Agora Protocol is one example. It is designed to let agents coordinate by dynamically negotiating shared protocol docs. Google’s A2A protocol takes a more prescriptive route with capability graphs and structured formats. Other proposals like agents.json and llms.txt are about making websites and APIs more discoverable to agents, like a robots.txt for LLMs. These are early signals of a larger shift: a move toward a protocol-native internet where the primary consumers aren’t humans but systems. A Safer Path Forward There’s also a safety angle here. Anthropic’s AI Harms framework suggests that predictable behavior under stress requires more than model alignment. It requires structured, interpretable systems. The Partnership on AI has called for machine-readable usage policies and verifiable outputs. Structure isn’t just about coordination. It’s about control. A well-designed protocol can carry trust signals, permissions, and provenance across interactions. It makes reasoning traceable. And it gives teams confidence that agentic behavior won’t spiral out of scope. So… What Now? This ecosystem is still early. Most agent frameworks are duct-taped together with plugins and retry loops. But the direction is clear: if AI is going to scale as a first-class client, we need shared expectations, machine-readable interfaces, and secure boundaries. At WunderGraph, we’re treating that as a core infrastructure problem. Cosmo is becoming a way to expose APIs to agents through structured federation, not just public endpoints. Because if models are going to collaborate, they need something better than prompts. They need a protocol. Original blog: Why AI Needs a Common Language

Most APIs were built for humans.

We wrote docs assuming someone would read them. We wrapped our endpoints in auth flows and user interfaces. We expected a person, or maybe a service, on the other side.

But that’s already changing.

AI models are now consuming APIs directly. Not as passive data readers, but as active participants. They parse user intent, synthesize context, and trigger backend actions. They're not just clients. They're intermediaries.

And that changes everything.

From Prompts to Protocols

When models call APIs, natural language stops being enough.

We like to imagine that models “understand” prompts, but in practice, they just compute probabilities over sequences of text. They don’t grasp nuance the way humans do. A tiny shift in phrasing can derail the whole interaction.

That makes coordination between models brittle. And the more we ask them to collaborate, especially across tools, teams, or tasks, the worse that brittleness becomes.

What’s the fix? Not better prompts. Structure.

We need something like a communication protocol. A way for models to reason about roles, goals, and permissions—not just the text of a request.

Cosmo + MCP: Building Toward Structured AI Interactions

At WunderGraph, we’ve been building toward that future with Cosmo, our GraphQL federation platform.

We recently added support for Anthropic’s Model Context Protocol, which gives models a schema-aware interface for accessing APIs. Instead of tossing raw prompts over HTTP, agents can interact through structured definitions, like a strongly typed contract between backend services and autonomous clients.

It’s still early, but it’s a step toward something more repeatable. More predictable. More machine-native.

It’s Not Just About APIs Anymore

Think about how humans collaborate.

A product designer hands off work to a frontend engineer. A nurse calls in a specialist. A marketing team reviews a PR draft. Each role has context. Structure. A shared understanding of who does what.

Right now, most AI agents operate in isolation. You send a prompt. They respond. Maybe they call a tool. But there's no persistent memory. No shared assumptions. No reliable handoff between agents.

That’s the gap that protocols aim to close.

Oxford’s Agora Protocol is one example. It is designed to let agents coordinate by dynamically negotiating shared protocol docs. Google’s A2A protocol takes a more prescriptive route with capability graphs and structured formats. Other proposals like agents.json and llms.txt are about making websites and APIs more discoverable to agents, like a robots.txt for LLMs.

These are early signals of a larger shift: a move toward a protocol-native internet where the primary consumers aren’t humans but systems.

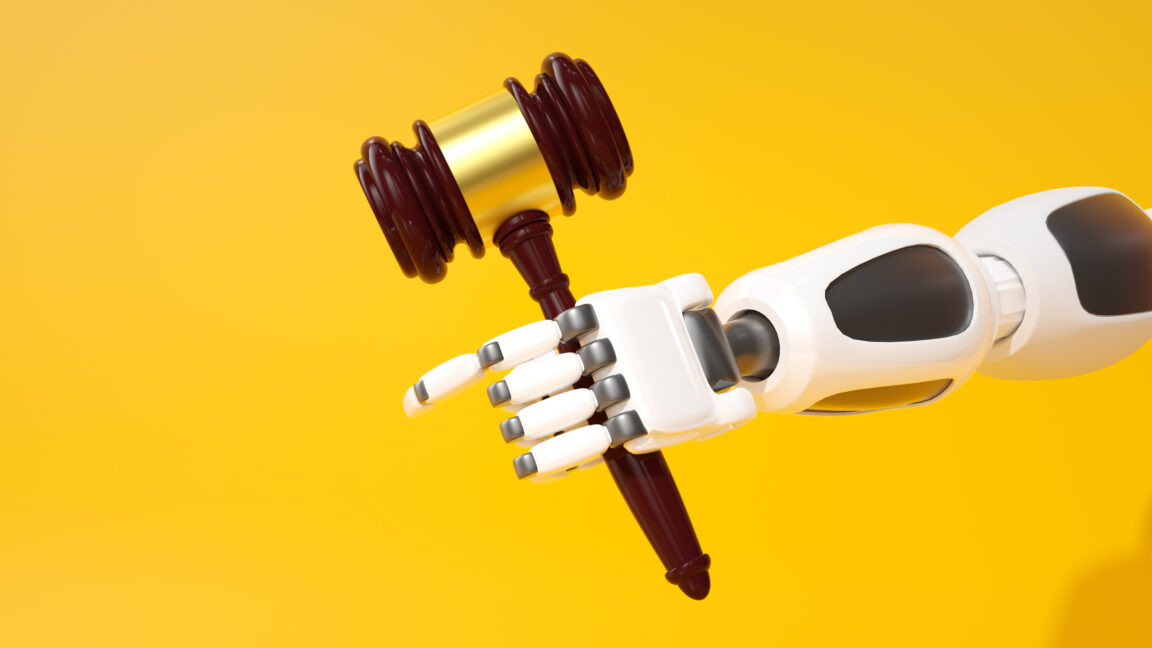

A Safer Path Forward

There’s also a safety angle here.

Anthropic’s AI Harms framework suggests that predictable behavior under stress requires more than model alignment. It requires structured, interpretable systems. The Partnership on AI has called for machine-readable usage policies and verifiable outputs.

Structure isn’t just about coordination. It’s about control.

A well-designed protocol can carry trust signals, permissions, and provenance across interactions. It makes reasoning traceable. And it gives teams confidence that agentic behavior won’t spiral out of scope.

So… What Now?

This ecosystem is still early. Most agent frameworks are duct-taped together with plugins and retry loops. But the direction is clear: if AI is going to scale as a first-class client, we need shared expectations, machine-readable interfaces, and secure boundaries.

At WunderGraph, we’re treating that as a core infrastructure problem. Cosmo is becoming a way to expose APIs to agents through structured federation, not just public endpoints.

Because if models are going to collaborate, they need something better than prompts.

They need a protocol.

Original blog: Why AI Needs a Common Language

![[The AI Show Episode 145]: OpenAI Releases o3 and o4-mini, AI Is Causing “Quiet Layoffs,” Executive Order on Youth AI Education & GPT-4o’s Controversial Update](https://www.marketingaiinstitute.com/hubfs/ep%20145%20cover.png)

![From Art School Drop-out to Microsoft Engineer with Shashi Lo [Podcast #170]](https://cdn.hashnode.com/res/hashnode/image/upload/v1746203291209/439bf16b-c820-4fe8-b69e-94d80533b2df.png?#)

(1).jpg?#)

_Inge_Johnsson-Alamy.jpg?width=1280&auto=webp&quality=80&disable=upscale#)

![Apple Developing AI 'Vibe-Coding' Assistant for Xcode With Anthropic [Report]](https://www.iclarified.com/images/news/97200/97200/97200-640.jpg)

![Apple's New Ads Spotlight Apple Watch for Kids [Video]](https://www.iclarified.com/images/news/97197/97197/97197-640.jpg)

![[Weekly funding roundup April 26-May 2] VC inflow continues to remain downcast](https://images.yourstory.com/cs/2/220356402d6d11e9aa979329348d4c3e/WeeklyFundingRoundupNewLogo1-1739546168054.jpg)