VL-Rethinker: RL Drives Self-Reflection in Vision-Language Models for Smarter Reasoning

This is a Plain English Papers summary of a research paper called VL-Rethinker: RL Drives Self-Reflection in Vision-Language Models for Smarter Reasoning.

Introduction: The Emergence of Slow-Thinking in AI Systems

Recent advances in AI have revealed a critical distinction between "fast-thinking" and "slow-thinking" systems. While models like GPT-4o excel at quick responses, systems like OpenAI-o1 and DeepSeek-R1 demonstrate superior problem-solving through explicit reflection. These slow-thinking systems significantly outperform fast-thinking counterparts on complex math and science benchmarks.

However, this advantage hasn't fully translated to multimodal reasoning tasks. Current multimodal models struggle with visual mathematical reasoning, where problems require both image understanding and complex reasoning. This gap presents a significant opportunity to enhance vision-language models (VLMs) with deliberate reasoning capabilities.

Enter VL-Rethinker, a new approach that uses reinforcement learning to cultivate slow-thinking capabilities in VLMs without relying on distillation from existing systems. The research addresses fundamental challenges in applying reinforcement learning to VLMs and introduces novel techniques to encourage self-reflection and verification.

Method Overview showing the two-stage approach of VL-Rethinker: enhancing reasoning through SSR and promoting deliberate thinking through forced rethinking.

Background: The Limitations of Current VLMs in Complex Reasoning

Vision-language models have made remarkable progress in understanding images and responding to visual queries. However, they struggle with complex visual reasoning tasks, particularly those requiring mathematical problem-solving or multi-step logical deduction.

Unlike text-only models where "slow-thinking" capabilities have emerged through techniques like chain-of-thought prompting and tree-of-thought reasoning, VLMs haven't fully developed these deliberative processes. When faced with complex visual math problems, current VLMs often make reasoning errors despite correctly perceiving the visual elements.

Reinforcement learning offers a promising approach to improve VLM reasoning. Rather than imitating existing models, RL allows VLMs to discover effective reasoning strategies through trial and error. However, applying RL to large vision-language models introduces unique challenges that require innovative solutions.

The Vanishing Advantages Challenge: Why Standard RL Methods Struggle with VLMs

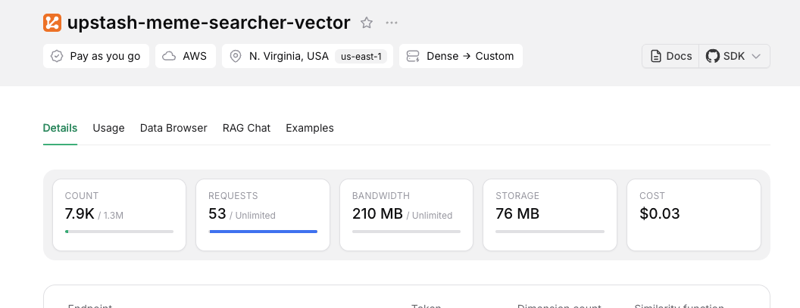

A significant obstacle in applying reinforcement learning to vision-language models is the "Vanishing Advantages" problem. This phenomenon occurs when the advantages (signals indicating which actions are better than expected) rapidly converge to zero during training.

Visualization of the Vanishing Advantages problem showing how model training rapidly saturates, with effective queries dropping to just 20% within 256 steps.

In practical terms, after a short period of training most samples in each batch provide little or no learning signal. GRPO (Group Relative Policy Optimization, a PPO variant that scores each response relative to the other responses sampled for the same query) assigns zero advantage to every response whenever all responses to a query receive the same reward. With standard GRPO, the proportion of training samples with non-zero advantages drops from 100% to roughly 20% within 256 training steps. About 80% of the samples then contribute nothing to the gradient, which wastes most of the compute spent generating them and limits the model's ability to learn complex reasoning patterns.

This problem is particularly severe for large 72B parameter models, where deep exploration of the solution space is necessary for discovering effective reasoning strategies. The rapid vanishing of advantages prevents the model from properly exploring different reasoning approaches, resulting in suboptimal performance on complex tasks.
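The effect can be illustrated with a minimal sketch, assuming the commonly used GRPO formulation in which each reward is normalized by the mean and standard deviation of its group. The reward values, the group size of 4, and the function names are hypothetical and chosen only for illustration; they are not taken from the paper.

```python
from statistics import mean, pstdev

def group_advantages(rewards, eps=1e-6):
    """Group-normalized advantages: (r - group mean) / (group std + eps)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]

def effective_fraction(batch):
    """Fraction of queries whose group contains at least one non-zero advantage."""
    effective = sum(
        any(abs(a) > 1e-8 for a in group_advantages(rewards))
        for rewards in batch
    )
    return effective / len(batch)

# Hypothetical binary correctness rewards, 4 sampled responses per query.
# Early in training the responses to each query disagree with each other.
early_batch = [[1, 0, 0, 1], [0, 1, 0, 0], [1, 1, 0, 1], [0, 0, 1, 0], [1, 0, 1, 1]]
# Later, most queries are answered uniformly right (or uniformly wrong).
late_batch = [[1, 1, 1, 1], [1, 1, 1, 1], [0, 0, 0, 0], [1, 1, 1, 1], [1, 0, 1, 1]]

print(effective_fraction(early_batch))
print(effective_fraction(late_batch))
```

In this toy batch only one of the five late-training queries still has responses that disagree, so it is the only one that yields a gradient signal.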

Selective Sample Replay: Maintaining Learning Efficiency

To address the Vanishing Advantages problem, the researchers introduce Selective Sample Replay (SSR), a technique that maintains learning efficiency by selectively replaying informative samples from previous training iterations.

SSR works by maintaining a replay buffer that stores only samples with non-zero advantages, meaning they still provide useful learning signals. From this buffer, samples are selected with a probability proportional to their absolute advantage values:

$$P(\text{select } j) = \frac{|\hat{A}_j|^{\alpha}}{\sum_{k \in \mathcal{B}_{\text{replay}}} |\hat{A}_k|^{\alpha}}$$

Here α is a hyperparameter (set to 1 in experiments) controlling prioritization intensity. This prioritization ensures that samples with stronger learning signals are more likely to be selected for training.

By focusing computational resources on the most informative examples, SSR counteracts the reward uniformity problem that leads to vanishing advantages. This approach ensures a richer diversity of advantages within each training batch, providing more consistent gradient signals. The result is more stable training that prevents premature stagnation and allows for deeper exploration of reasoning strategies.
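The buffer-and-sample logic described above can be sketched as follows. This is an illustrative sketch only: the function names, the `(sample, advantage)` tuple layout, and sampling with replacement are assumptions, and the paper's actual batching details may differ.

```python
import random

def update_buffer(buffer, new_samples):
    """Append only samples whose advantage is non-zero (assumed buffer rule)."""
    buffer.extend((s, a) for s, a in new_samples if a != 0.0)

def selection_probs(buffer, alpha=1.0):
    """P(select j) = |A_j|^alpha / sum_k |A_k|^alpha over the replay buffer."""
    weights = [abs(adv) ** alpha for _, adv in buffer]
    total = sum(weights)
    return [w / total for w in weights]

def ssr_sample(buffer, k, alpha=1.0, rng=random):
    """Draw k samples (with replacement) with probability proportional to |A|^alpha."""
    weights = [abs(adv) ** alpha for _, adv in buffer]
    samples = [s for s, _ in buffer]
    return rng.choices(samples, weights=weights, k=k)

# Hypothetical rollouts: (sample id, advantage estimate).
buffer = []
update_buffer(buffer, [("a", 0.0), ("b", 1.5), ("c", -0.5)])
print(selection_probs(buffer))               # "a" was never stored
print(ssr_sample(buffer, k=4, rng=random.Random(0)))
```

With α = 1, a sample whose absolute advantage is three times larger is three times more likely to be replayed; larger values of α would sharpen that preference further.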

Forced Rethinking: Teaching VLMs to Self-Reflect

While SSR improves optimization stability, the researchers observed that complex deliberative thinking patterns didn't naturally emerge from standard RL training. To explicitly cultivate deeper reasoning processes, they introduced a technique called Forced Rethinking.

This method proactively encourages the model to engage in internal deliberation by appending a specific "rethinking trigger" to the model's initial responses during training. After the VLM generates an initial response y₁ to input x, a textual trigger is appended, prompting the model to generate a subsequent response segment y₂. The complete sequence becomes y = y₁ ⊕ trigger ⊕ y₂.

The rethinking triggers fall into three categories:

- Self-verification: "Let me double-check my answer..."

- Self-correction: "On second thought, I made a mistake..."

- Self-questioning: "Wait, let me reconsider this problem..."

To avoid disrupting the learned policy, this strategy is applied to only a fraction of generated responses during training. The training objective is augmented with an additional negative log-likelihood loss specifically applied to the y₂ segments that lead to correct final answers, directly incentivizing the model to generate effective rethinking patterns.
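A rough sketch of the rollout construction and of which tokens the extra loss would cover, under stated assumptions: `generate` is a hypothetical stand-in for the VLM's text generation, `rethink_fraction` is a made-up value (the source only says "a fraction"), and the mask layout is one plausible way to restrict the negative log-likelihood term to y₂.

```python
import random

# Trigger phrases from the three categories listed above.
TRIGGERS = {
    "self_verification": "Let me double-check my answer...",
    "self_correction": "On second thought, I made a mistake...",
    "self_questioning": "Wait, let me reconsider this problem...",
}

def forced_rethink(prompt, generate, rethink_fraction=0.5, rng=random):
    """Return (y1, trigger, y2); trigger and y2 are empty if rethinking is not forced."""
    y1 = generate(prompt)
    if rng.random() >= rethink_fraction:
        return y1, "", ""
    trigger = rng.choice(list(TRIGGERS.values()))
    # The model continues from its own first answer plus the appended trigger.
    y2 = generate(prompt + y1 + " " + trigger)
    return y1, trigger, y2

def nll_mask(y1_tokens, trigger_tokens, y2_tokens, final_answer_correct):
    """1 marks tokens that receive the extra NLL loss: y2 tokens of correct rollouts only."""
    keep = 1 if final_answer_correct else 0
    return [0] * len(y1_tokens) + [0] * len(trigger_tokens) + [keep] * len(y2_tokens)

# Toy usage with a dummy generator standing in for the model.
dummy_generate = lambda text: " The answer is 12."
y1, trigger, y2 = forced_rethink("Q: 3 * 4 = ?", dummy_generate, rethink_fraction=1.0)
print(y1 + " " + trigger + y2)
```

Masking out y₁ and the trigger means the extra term rewards only the rethinking segment itself, and only when the final answer turns out to be correct.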

This approach differs from methods that rely on supervised fine-tuning distillation from existing slow-thinking systems. VL-Rethinker doesn't require a rethinking step for every query but learns to strategically engage in this process when necessary, leading to more efficient inference. The model even demonstrates emergent metacognitive abilities, such as identifying flaws in problem statements during its reflection process.

Experimental Setup: Training VL-Rethinker

The researchers built VL-Rethinker on the Qwen2.5-VL-Instruct foundation model, which provides strong baseline capabilities for vision-language tasks. They developed two variants: a 72B parameter model and a more efficient 7B parameter version.

The models were trained on a diverse dataset of 16K samples, including:

- Mathematical reasoning problems with images

- Scientific reasoning problems with images

- Text-only mathematical and logical reasoning problems

The reinforcement learning training followed a two-stage process:

- Stage 1: GRPO with Selective Sample Replay to enhance general reasoning capabilities

- Stage 2: Forced Rethinking to promote deliberate reasoning and self-reflection

For evaluation, the researchers used seven challenging benchmarks that test different aspects of visual reasoning:

- Math-focused: MathVista, MathVerse, MathVision

- Multi-disciplinary: MMMU-Pro, MMMU, EMMA

- Real-world: MEGA-Bench

Results: VL-Rethinker's Performance Across Benchmarks

VL-Rethinker achieves state-of-the-art results across multiple challenging vision-language reasoning benchmarks, particularly excelling at mathematical visual reasoning tasks.

| Model | MathVista testmini | MathVerse testmini | MathVision test | MMMU-Pro overall | MMMU val | EMMA full | MEGA core |
|---|---|---|---|---|---|---|---|
| Category | Math-Related | Math-Related | Math-Related | Multi-Discipline | Multi-Discipline | Multi-Discipline | Real-World |
| Proprietary Models | | | | | | | |
| OpenAI-o1 | 73.9 | 57.0 | 42.2 | 62.4 | 78.2 | 45.7 | 56.2 |
| OpenAI-GPT-4o | 60.0 | 41.2 | 30.6 | 51.9 | 69.1 | 32.7 | 52.7 |
| Claude-3.5-Sonnet | 67.7 | 47.8 | 33.5 | 51.5 | 68.3 | 35.1 | 52.3 |
| Gemini-2.0-Flash | 73.4 | 54.6 | 41.3 | 51.7 | 70.7 | 33.6 | 54.1 |
| Open-Source Models | | | | | | | |
| Llama4-Scout-109B | 70.7 | - | - | 52.2 | 69.4 | - | 47.4 |
| InternVL-2.5-78B | 72.3 | 51.7 | 34.9 | 48.6 | 61.8 | 27.1 | 44.1 |
| QvQ-72B | 71.4 | 48.6 | 35.9 | 51.5 | 70.3 | 32.0 | 8.8 |
| LLaVA-OV-72B | 67.5 | 39.1 | 30.1 | 31.0 | 56.8 | 23.8 | 29.7 |
| Qwen-2.5-VL-32B | 74.7 | 48.5 | 38.4 | 49.5 | 70.0 | 31.1 | 13.3 |
| Qwen-2.5-VL-72B | 74.8 | 57.2 | 38.1 | 51.6 | 70.2 | 34.1 | 49.0 |
| VL-Rethinker-72B | 80.3 | 61.7 | 43.9 | 53.9 | 68.8 | 38.9 | 51.3 |
| $\Delta$ (Ours - Open SoTA) | $+5.5$ | $+4.5$ | $+5.5$ | $+1.7$ | $-1.4$ | $+5.8$ | $+2.3$ |

Table 1: Comparison between VL-Rethinker-72B and other state-of-the-art models across multiple benchmarks.

The 72B parameter version of VL-Rethinker achieves remarkable improvements on math-related benchmarks:

- 80.3% on MathVista (+5.5 points over the previous open-source state of the art)

- 61.7% on MathVerse (+4.5 points)

- 43.9% on MathVision (+5.5 points)

These results demonstrate that the reinforcement learning techniques effectively enhance the model's mathematical reasoning abilities. Notably, VL-Rethinker-72B outperforms the proprietary OpenAI-o1 on all three math benchmarks in Table 1 (80.3 vs. 73.9 on MathVista, 61.7 vs. 57.0 on MathVerse, 43.9 vs. 42.2 on MathVision), while still trailing it on the multi-discipline and real-world benchmarks.

The smaller 7B parameter version also shows impressive results, particularly compared to other models of similar size:

| Model | MathVista testmini | MathVerse testmini | MathVision test | MMMU-Pro overall | MMMU val | EMMA full | MEGA core |
|---|---|---|---|---|---|---|---|
| Category | Math-Related | Math-Related | Math-Related | Multi-Discipline | Multi-Discipline | Multi-Discipline | Real-World |
| General Vision-Language Models | | | | | | | |
| InternVL2-8B | 58.3 | - | 17.4 | 29.0 | 51.2 | 19.8 | 26.0 |
| InternVL2.5-8B | 64.4 | 39.5 | 19.7 | 34.3 | 56.0 | - | 30.4 |
| QwenVL2-7B | 58.2 | - | 16.3 | 30.5 | 54.1 | 20.2 | 34.8 |
| QwenVL2.5-7B | 68.2 | 46.3 | 25.1 | 36.9 | $^{1}$54.3 | 21.5 | 35.0 |
| Llava-OV-7B | 63.2 | 26.2 | - | 24.1 | 48.8 | 18.3 | 22.9 |
| Kimi-VL-16B | 68.7 | 44.9 | 21.4 | - | $^{1}$55.7 | - | - |
| Vision-Language Reasoning Models | | | | | | | |
| MM-Eureka-8B | 67.1 | 40.4 | 22.2 | 27.8 | 49.2 | - | - |
| R1-VL-7B | 63.5 | 40.0 | 24.7 | 7.8 | 44.5 | 8.3 | 29.9 |
| R1-Onevision-7B | 64.1 | 46.4 | 29.9 | 21.6 | - | 20.8 | 27.1 |
| OpenVLThinker-7B | 70.2 | 47.9 | 25.3 | 37.3 | 52.5 | 26.6 | 12.0 |
| VL-Rethinker-7B | 74.9 | 54.2 | 32.3 | 41.7 | 56.7 | 29.7 | 37.2 |
| $\Delta$ (Ours - Prev SoTA) | $+4.7$ | $+6.3$ | $+2.4$ | $+4.4$ | $+0.7$ | $+3.1$ | $+2.2$ |

Table 2: Comparison of VL-Rethinker-7B with other vision-language reasoning models of similar size.
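The Δ row of Table 2 can be reproduced from the other rows with a few lines of arithmetic. The sketch below copies the scores from the table; the variable and function names are illustrative, and missing entries ("-") are represented as `None` and skipped.

```python
BENCHMARKS = ["MathVista", "MathVerse", "MathVision", "MMMU-Pro", "MMMU", "EMMA", "MEGA"]

# Scores of the comparison models from Table 2 (None marks a missing entry).
PRIOR = {
    "InternVL2-8B":     [58.3, None, 17.4, 29.0, 51.2, 19.8, 26.0],
    "InternVL2.5-8B":   [64.4, 39.5, 19.7, 34.3, 56.0, None, 30.4],
    "QwenVL2-7B":       [58.2, None, 16.3, 30.5, 54.1, 20.2, 34.8],
    "QwenVL2.5-7B":     [68.2, 46.3, 25.1, 36.9, 54.3, 21.5, 35.0],
    "Llava-OV-7B":      [63.2, 26.2, None, 24.1, 48.8, 18.3, 22.9],
    "Kimi-VL-16B":      [68.7, 44.9, 21.4, None, 55.7, None, None],
    "MM-Eureka-8B":     [67.1, 40.4, 22.2, 27.8, 49.2, None, None],
    "R1-VL-7B":         [63.5, 40.0, 24.7, 7.8, 44.5, 8.3, 29.9],
    "R1-Onevision-7B":  [64.1, 46.4, 29.9, 21.6, None, 20.8, 27.1],
    "OpenVLThinker-7B": [70.2, 47.9, 25.3, 37.3, 52.5, 26.6, 12.0],
}
OURS = [74.9, 54.2, 32.3, 41.7, 56.7, 29.7, 37.2]  # VL-Rethinker-7B

def delta_vs_best(ours, prior):
    """Per-benchmark margin over the best prior score, rounded to one decimal."""
    deltas = []
    for i, score in enumerate(ours):
        best = max(row[i] for row in prior.values() if row[i] is not None)
        deltas.append(round(score - best, 1))
    return deltas

for name, d in zip(BENCHMARKS, delta_vs_best(OURS, PRIOR)):
    print(f"{name}: {d:+.1f}")
```

The smallest margin is on MMMU, where InternVL2.5-8B (56.0) is the strongest comparison model.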

VL-Rethinker-7B outperforms all other small-scale models across the board, with particularly strong gains on math-related benchmarks. This demonstrates that the techniques are effective even with more resource-efficient models.

Ablation Studies: The Impact of Each Component

To understand the contribution of each component, the researchers conducted comprehensive ablation studies:

| Model | RL-Algo | Data | MathVision | MathVista | MathVerse | MMMU-Pro | EMMA |
|---|---|---|---|---|---|---|---|
| VL-Rethinker-7B | SSR | 16K | 32.3 | 74.9 | 54.2 | 41.7 | 29.7 |
| w/o 'Forced-Rethinking' | SSR | 16K | 29.8 | 72.4 | 53.2 | 40.9 | 29.5 |
| - no SSR | Filter | 16K | 28.5 | 72.0 | 50.0 | 40.0 | 26.9 |
| - no SSR & Filter | GRPO | 16K | 26.0 | 70.9 | 51.4 | 38.8 | 26.2 |
| - no Text | SSR | 13K | 29.1 | 73.5 | 53.5 | 41.1 | 28.7 |
| - no Science & Text | SSR | 11K | 28.0 | 71.6 | 50.3 | 39.7 | 28.0 |

Table 3: Ablation study results showing the impact of each component in VL-Rethinker.

The ablation studies reveal several key insights:

Forced Rethinking improves every benchmark in Table 3, with the largest gains on MathVision and MathVista (+2.5 points each) and smaller gains on MathVerse (+1.0), MMMU-Pro (+0.8), and EMMA (+0.2). This indicates that explicitly encouraging self-reflection helps most on math-heavy visual reasoning.

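Selective Sample Replay substantially improves performance compared to standard GRPO. With Forced Rethinking disabled in both cases, SSR gains +3.8 points on MathVision, +3.3 on EMMA, and +1.8 on MathVerse over the GRPO row of Table 3; against the filter-only baseline, its largest gain is on MathVerse (+3.2 points). This supports the claim that addressing the vanishing advantages problem is crucial for effective learning.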

Text-Only Data contributes meaningfully to overall performance, especially on science-related tasks. This suggests that general reasoning capabilities transfer well to visual reasoning tasks.

Science Data plays an important role in mathematical visual reasoning, indicating that diverse training domains help build more robust reasoning abilities.

The combination of both SSR and Forced Rethinking yields the best overall performance, demonstrating their complementary nature in improving VLM reasoning capabilities.
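The per-component gains can be read directly off Table 3. The sketch below does the subtraction, assuming the indented ablation rows are cumulative removals relative to the "w/o 'Forced-Rethinking'" row; that reading, and all names in the code, are assumptions rather than something the source states explicitly.

```python
BENCHMARKS = ["MathVision", "MathVista", "MathVerse", "MMMU-Pro", "EMMA"]

# Rows copied from Table 3.
FULL = [32.3, 74.9, 54.2, 41.7, 29.7]           # SSR + Forced Rethinking
NO_RETHINK = [29.8, 72.4, 53.2, 40.9, 29.5]     # SSR only
FILTER_ONLY = [28.5, 72.0, 50.0, 40.0, 26.9]    # filtering, no SSR
PLAIN_GRPO = [26.0, 70.9, 51.4, 38.8, 26.2]     # neither SSR nor filtering

def gain(with_component, without_component):
    """Per-benchmark difference in points, rounded to one decimal."""
    return [round(a - b, 1) for a, b in zip(with_component, without_component)]

rethink_gain = gain(FULL, NO_RETHINK)
ssr_vs_grpo = gain(NO_RETHINK, PLAIN_GRPO)
ssr_vs_filter = gain(NO_RETHINK, FILTER_ONLY)

for name, r, g, f in zip(BENCHMARKS, rethink_gain, ssr_vs_grpo, ssr_vs_filter):
    print(f"{name}: rethinking {r:+.1f}, SSR vs GRPO {g:+.1f}, SSR vs filter {f:+.1f}")
```

Under that reading, SSR accounts for a larger share of the improvement over plain GRPO than Forced Rethinking does on MathVerse, MMMU-Pro, and EMMA, while the two contribute equally on MathVista.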

Conclusion: Advancing Visual Reasoning Through Self-Reflection

VL-Rethinker represents a significant advance in vision-language reasoning capabilities. By addressing the fundamental challenges of applying reinforcement learning to VLMs, the researchers have developed a system that demonstrates superior performance on complex visual reasoning tasks.

The key innovations—Selective Sample Replay and Forced Rethinking—offer complementary benefits:

- SSR addresses the vanishing advantages problem, ensuring efficient learning throughout training

- Forced Rethinking explicitly cultivates the slow-thinking capabilities needed for complex reasoning

Together, these techniques enable VL-Rethinker to achieve state-of-the-art results across multiple challenging benchmarks, particularly in mathematical visual reasoning tasks.

The success of VL-Rethinker suggests that reinforcement learning, when carefully adapted to the unique challenges of VLMs, can effectively develop deliberative reasoning capabilities without relying on distillation from existing systems. This creates a path forward for building more capable AI systems that can tackle increasingly complex visual reasoning tasks.

Most importantly, the research demonstrates that teaching AI systems to reflect on their own reasoning—to think more deeply before reaching conclusions—represents a promising direction for advancing artificial intelligence. As VLMs continue to improve, those equipped with these slow-thinking capabilities will likely demonstrate increasingly sophisticated reasoning on par with the best text-only systems.
