Vibe Coding: The Prelude to System Failure

Back to the internet –

Software engineering, and coding in particular, has seen a lot of advances, and AI-assisted coding has sent shock waves through the entire ecosystem. In fact, many CEOs predict that by 2026, 90% of code will be AI-generated. I doubt this.

Let's go back to the basic principle of LLMs (Large Language Models): data. Large language models spool out output based on the data they were trained on, largely data crawled from the internet. Computer programming is not a straightforward field, and when it comes to turning a customer's idea into working software, things can get tricky.

A lot has happened since AI tools like ChatGPT, Claude and Cursor began spooling out code for developers. Even project managers can now claim to have written an entire application without learning to code. I guess you should be happy as a developer.

I recently installed the latest version of Visual Studio and was impressed with its code completion feature. It didn't just do exactly what I wanted; it did it in my own coding style. It had learnt from my coding patterns, and I just went along with the flow.

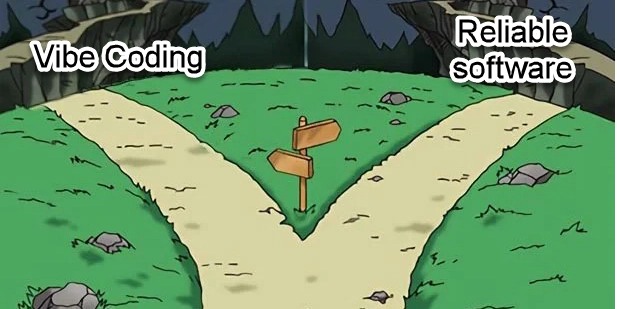

It is a great sham that beginners are being sold the lie that they no longer need to learn to code in order to write software. The term for this is 'vibe coding': a way of writing unreliable software.

One thing LLMs haven't gotten very good at is 'comprehension with intent and context', which is a tricky problem even for human beings. Interpreting intent in context can be a daunting task, especially if you do not have the full scope of the subject, and humans are still bad at it, particularly when it comes to transferring that context to machines.

Understanding the fundamentals of programming, and of the language and framework being used, is essential to writing any sustainable and scalable computer program. This is non-negotiable.

Can AI refactor the spaghetti code it generated into an optimal solution? Can you fix legacy code without understanding the code base? Several reports have emerged of Cursor deleting random files, or even an entire code base, while trying to fix an issue it created, or failing to work out where the problem was at all, something you could often do just by setting breakpoints and walking the call stack.

Spooling out code is no substitute for understanding the problem and the tools required to solve it. How would you know you have to secure your API keys before shipping? How would you recognize an SQL injection vulnerability if the AI writes bad code? How would you know to implement rate limiting per API key before you go live? I guess @leojr94_ on X can tell you more about this experience.

According to an article on MSN, a C++ professional digging through the code of Strobelight, Meta's profiling service that collects observability data from several of its services, discovered a performance debt. How did he fix it? Just by adding an ampersand (&). In a C++ declaration, & makes a variable a reference, so the program works with the actual data instead of making a copy of it every time it needs it. This single-character commit produced a 20% reduction in the computing power required to perform the same operation, which equated to an estimated 15,000 servers' worth of capacity savings per year. This is the beauty of software engineering.

You can vibe code your way to a prototype, but you can't vibe code your way to a production-ready system, or you'll vibe code your company into bankruptcy.
