Unpacking Containers on AWS: A Practical Guide to ECS and EC2


Remember the "it works on my machine" problem? Or the sheer pain of setting up a new server, installing dependencies, and ensuring your application runs exactly the same way it did in development?

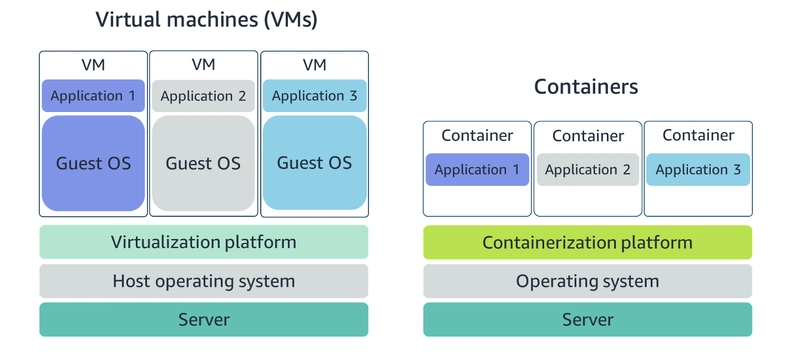

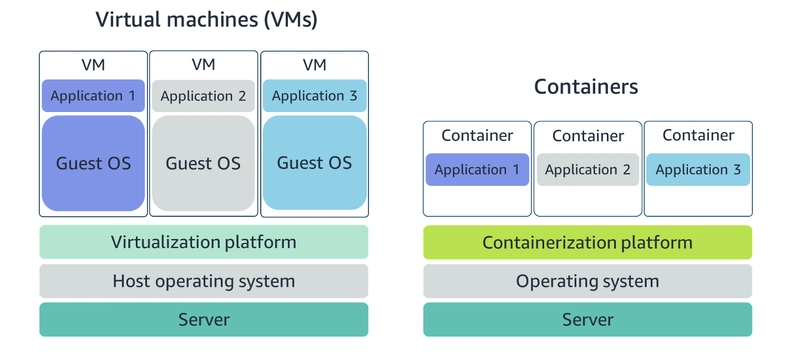

For years, virtual machines (VMs) were our go-to solution for isolating applications. But they came with baggage – each VM needed its own full operating system, consuming significant resources and adding overhead for patching and management.

Then came containers. Lightweight, portable, and designed for consistency. They packaged your application and everything it needs to run – libraries, dependencies, configuration – into a neat little box. But running lots of containers across multiple servers? That introduces a new challenge: orchestration. How do you manage their lifecycle, scale them, and ensure they can talk to each other?

This is where Amazon Elastic Container Service (ECS) steps in. And while Fargate often steals the spotlight for its "serverless" container experience, understanding the classic ECS with EC2 launch type is fundamental. It gives you control and flexibility, and it can be the most cost-effective option when you keep your instances well utilized.

Let's dive in and demystify running containers on AWS using ECS and EC2.

Why It Matters:

In today's cloud-native world, containers are table stakes. They enable faster deployments, greater portability, improved resource utilization, and lay the groundwork for microservices architectures.

Running containers at scale requires a robust orchestration platform. AWS provides ECS (and EKS, its managed Kubernetes service). ECS is a highly opinionated, deeply integrated AWS service built specifically for the AWS ecosystem. It simplifies the complexity of managing containerized applications, allowing you to focus on building, not infrastructure.

Choosing the ECS/EC2 launch type means you retain control over the underlying EC2 instances that host your containers. That balance of management and flexibility suits many scenarios, especially when you need specific instance types (such as GPU or high-memory instances) or want to make use of existing EC2 investments.

The Concept in Simple Terms: Your Apartment Building Analogy

Let's use an analogy to make sense of this.

Imagine you're a property manager responsible for housing many different tenants (your applications).

- Virtual Machines (VMs): Think of a VM like a standalone house you build for each tenant. Each house has its own foundation, walls, roof, plumbing, and electrical system (the Guest OS and its overhead). It offers great isolation, but building and maintaining each house is expensive and time-consuming. (See the 'VMs vs. Containers' diagram.)

- Containers: Now, think of containers like standardized apartments within a large, efficiently built apartment building. Each apartment (container) is a self-contained living space (your application and its dependencies) but shares the building's core infrastructure – the foundation, walls, roof, and utilities come from the building itself (the Host OS kernel and server hardware). This is much more resource-efficient. (See the 'VMs vs. Containers' diagram.)

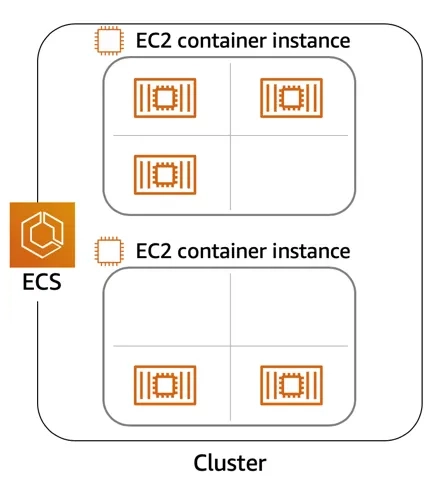

- EC2 Instances (in this context): These are the actual apartment buildings that you own and manage. You are responsible for the building's structure, maintenance, and making sure it's ready for tenants. (See the 'ECS Cluster' diagram, showing EC2 instances within the cluster).

- ECS (The Service): ECS is like your property management company. It doesn't build the buildings (you provide the EC2 instances), but it handles all the tenant management:

  - Finding available units in your buildings.

  - Assigning tenants (containers) to those units.

  - Making sure the right number of tenants are in each building.

  - Handling new tenants arriving or old ones leaving (scaling).

  - Connecting tenants to shared services like mailboxes (Load Balancers) or ensuring they follow building rules (networking, security).

With ECS (EC2 launch type), you provide the buildings (EC2s), and ECS manages the tenants (containers) inside them.

Deeper Dive: How ECS (EC2 Launch Type) Works

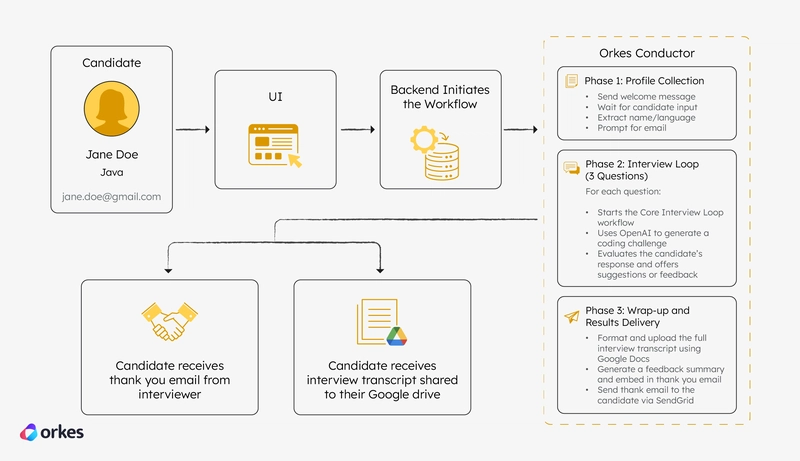

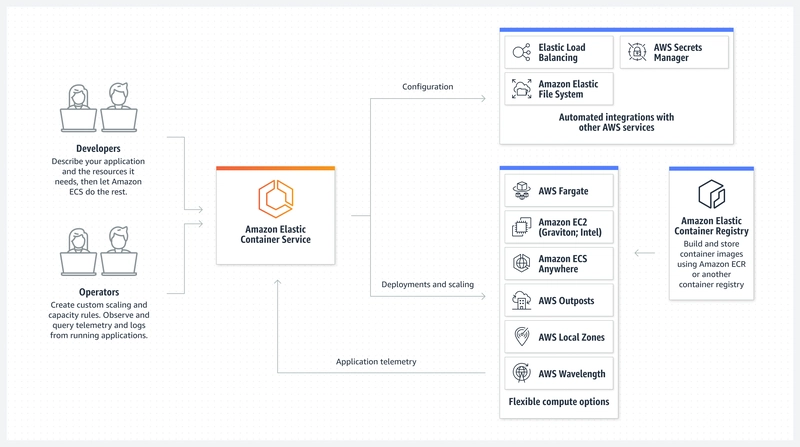

Okay, let's switch back to the technical terms. Based on the diagrams above (Image 1 & 2), here's a breakdown of the core components when using ECS with the EC2 launch type:

- ECS Cluster: A logical grouping of resources. Primarily, this is where your EC2 instances register themselves to become part of the cluster capacity. (Image 1 clearly shows EC2 instances belonging to a Cluster).

- EC2 Container Instance: These are standard Amazon EC2 instances that you launch and manage. They need to have the ECS Agent installed and running. The agent registers the instance with the specified ECS cluster and allows ECS to deploy tasks onto it. You are responsible for patching, scaling, and managing these EC2 instances (e.g., using Auto Scaling Groups). (Image 1 & 2 show EC2 as a compute option).

- ECS Agent: Software that runs on each EC2 container instance. It communicates with the ECS control plane to report instance status, resource utilization, and to accept and execute task placement requests.

- Task Definition: This is the blueprint for your application, describing one or more containers that work together. It specifies things like:

  - Which Docker image to use (often from Amazon ECR - Image 2).

  - CPU and memory requirements for each container.

  - Networking ports.

  - Mount points for data volumes (e.g., with Amazon EFS - Image 2).

  - Environment variables and secrets (retrieved securely from AWS Secrets Manager - Image 2).

- Task: An instance of a Task Definition running on an EC2 container instance. A single EC2 instance can run multiple tasks and multiple containers. (Image 1 shows multiple container icons within each EC2 instance box).

- Service: Defines how to run and maintain a desired number of copies (tasks) of a specific Task Definition simultaneously in an ECS cluster. ECS Services handle:

  - Maintaining the desired count of tasks.

  - Replacing unhealthy tasks.

  - Rolling deployments for updates.

  - Integration with Elastic Load Balancing (ELB) to distribute traffic across tasks (Image 2).

  - Optional integration with Service Discovery.
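To make the Task Definition concrete, here is a minimal Python sketch that builds the JSON body you could pass to `aws ecs register-task-definition --cli-input-json`. Everything specific in it is a placeholder assumption: the account ID, region, family name `my-web-app`, container name `web`, EFS file system ID, secret ARN, and the `ecsTaskExecutionRole` role name. The `bridge` network mode with `hostPort: 0` asks ECS to pick a free host port for each task.

```python
import json


def build_task_definition(account_id: str, region: str) -> dict:
    """Return a register-task-definition request body (all names/IDs are placeholders)."""
    image = f"{account_id}.dkr.ecr.{region}.amazonaws.com/my_web_app:latest"
    return {
        "family": "my-web-app",  # hypothetical family name
        "requiresCompatibilities": ["EC2"],
        "networkMode": "bridge",  # with hostPort 0, ECS assigns a dynamic host port
        # Role used to fetch secrets for the task (hypothetical role name)
        "executionRoleArn": f"arn:aws:iam::{account_id}:role/ecsTaskExecutionRole",
        "containerDefinitions": [
            {
                "name": "web",
                "image": image,  # the image pushed to ECR
                "essential": True,
                "cpu": 256,  # CPU units (1024 units = 1 vCPU)
                "memory": 512,  # hard memory limit in MiB
                "portMappings": [
                    {"containerPort": 8080, "hostPort": 0, "protocol": "tcp"}
                ],
                "environment": [{"name": "NODE_ENV", "value": "production"}],
                "secrets": [
                    {
                        "name": "DB_PASSWORD",
                        # Placeholder Secrets Manager ARN
                        "valueFrom": (
                            f"arn:aws:secretsmanager:{region}:{account_id}:"
                            "secret:my-web-app/db-password-AbCdEf"
                        ),
                    }
                ],
                "mountPoints": [
                    {"sourceVolume": "shared-data", "containerPath": "/usr/src/app/data"}
                ],
            }
        ],
        "volumes": [
            {
                "name": "shared-data",
                # Placeholder EFS file system ID
                "efsVolumeConfiguration": {"fileSystemId": "fs-0123456789abcdef0"},
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder account ID and region
    print(json.dumps(build_task_definition("123456789012", "us-east-1"), indent=2))
```

Saved as, say, `taskdef.py`, you could run `python taskdef.py > taskdef.json` and then `aws ecs register-task-definition --cli-input-json file://taskdef.json`. Generating the body in code (or in CloudFormation/CDK) keeps it reviewable and versioned, rather than living only in console clicks.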

In essence, you prepare your application as a container image, create a Task Definition describing it, tell an ECS Service how many copies you want running, and ensure you have EC2 instances in your ECS cluster with enough resources. ECS then takes care of placing and managing the tasks on your EC2 instances.
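The placement and desired-state loop described above can be illustrated with a deliberately simplified Python model. This is not how the ECS scheduler is implemented. It only shows the idea of reconciling a desired count against running tasks and choosing an instance with enough free CPU and memory. The `Instance` and `TaskDef` types and the `reconcile` function are hypothetical, and the instance choice loosely mimics a memory `binpack` placement strategy.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """Hypothetical container instance with remaining CPU units and memory (MiB)."""
    instance_id: str
    free_cpu: int
    free_mem: int
    tasks: list = field(default_factory=list)


@dataclass(frozen=True)
class TaskDef:
    """Hypothetical, stripped-down task definition: a name plus resource needs."""
    family: str
    cpu: int
    mem: int


def reconcile(task_def: TaskDef, desired: int, instances: list) -> tuple:
    """Start tasks until `desired` copies run.

    Returns (IDs of instances that received a new task, number of tasks left unplaced).
    """
    running = sum(t == task_def.family for inst in instances for t in inst.tasks)
    placed = []
    while running < desired:
        fits = [
            i for i in instances
            if i.free_cpu >= task_def.cpu and i.free_mem >= task_def.mem
        ]
        if not fits:
            # Real ECS reports that it cannot place the task; this toy model just stops.
            return placed, desired - running
        # Binpack-like choice: the fitting instance with the least free memory.
        target = min(fits, key=lambda i: i.free_mem)
        target.free_cpu -= task_def.cpu
        target.free_mem -= task_def.mem
        target.tasks.append(task_def.family)
        placed.append(target.instance_id)
        running += 1
    return placed, 0


if __name__ == "__main__":
    fleet = [
        Instance("i-a", free_cpu=1024, free_mem=2048),
        Instance("i-b", free_cpu=2048, free_mem=4096),
    ]
    web = TaskDef("my-web-app", cpu=256, mem=512)
    print(reconcile(web, 3, fleet))  # (['i-a', 'i-a', 'i-a'], 0)
```

Calling `reconcile` again with the same desired count places nothing, which is the point of a desired-state model: you declare the target, and the loop only acts on the difference.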

Practical Example or Use Case: Deploying a Simple Web App

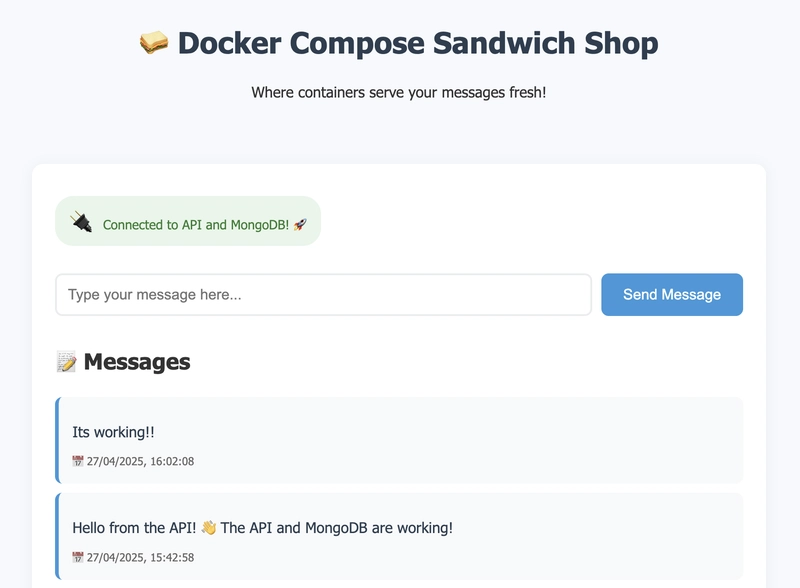

Let's walk through a simplified flow for deploying a containerized Node.js web application using ECS (EC2 launch type):

- Containerize: You write your Node.js application and create a `Dockerfile` to package it.

```dockerfile
# Use an official Node.js runtime as the base image
FROM node:18

# Set the working directory in the container
WORKDIR /usr/src/app

# Copy package.json and package-lock.json first so the dependency layer is cached
COPY package*.json ./

# Install dependencies exactly as locked in package-lock.json, skipping devDependencies
RUN npm ci --omit=dev

# Copy the rest of the application code
COPY . .

# Document the port the app listens on (the ECS port mapping actually publishes it)
EXPOSE 8080

# Run the application
CMD [ "node", "server.js" ]
```

- Build & Push: You build the Docker image locally and push it to Amazon Elastic Container Registry (ECR), a secure, managed Docker registry. (See Image 2.)

```bash
# Assumes the AWS CLI is configured and Docker is installed.
# Replace the placeholders with your AWS account ID and region.
AWS_ACCOUNT_ID="<aws_account_id>"
AWS_REGION="<region>"
REGISTRY="${AWS_ACCOUNT_ID}.dkr.ecr.${AWS_REGION}.amazonaws.com"

aws ecr create-repository --repository-name my_web_app --region "$AWS_REGION"
docker build -t my_web_app .

# Authenticate Docker to your ECR registry, then tag and push the image
aws ecr get-login-password --region "$AWS_REGION" | docker login --username AWS --password-stdin "$REGISTRY"
docker tag my_web_app:latest "$REGISTRY/my_web_app:latest"
docker push "$REGISTRY/my_web_app:latest"
```

- Create Task Definition: In the AWS Management Console or using the AWS CLI/IaC (CloudFormation, CDK), you define an ECS Task Definition. You specify the ECR image URI, required CPU/memory, port mappings (e.g., container port 8080 to host port 0), and potentially other settings. A host port of 0 (with the `bridge` network mode) tells ECS to assign a free host port dynamically, so several copies of the task can run on the same instance.

- Create ECS Cluster: If you don't have one, create an ECS cluster. It starts out with no instances; capacity is added when container instances register with it.

- Launch EC2 Instances: Launch EC2 instances, ideally from an ECS-optimized AMI, which comes with the ECS Agent preinstalled. Use the instances' User Data script to point the agent at your cluster (by setting `ECS_CLUSTER` in `/etc/ecs/ecs.config`), and give the instances an IAM instance profile that allows the agent to register with ECS. Using an Auto Scaling Group here is best practice for resilience and scaling.

- Create ECS Service: Create an ECS Service for your web app, referencing the Task Definition. You specify the number of desired tasks (e.g., 3 copies of your app), select the ECS cluster, choose the EC2 launch type, and optionally integrate it with an Application Load Balancer (ALB) to distribute incoming web traffic across your running tasks. (See Image 2.)

- ECS Does the Rest: ECS compares your desired task count with the available resources on the EC2 instances registered in the cluster. It instructs the ECS Agents on suitable instances to pull your image from ECR and start the containers, and the ALB routes traffic to the running tasks. If a task fails, ECS starts a new one. If traffic increases and you've configured service auto scaling (for example, target tracking on CPU utilization), the desired count is raised automatically, and your EC2 Auto Scaling Group can launch more instances if needed.
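For the Launch EC2 Instances step, the ECS-optimized AMI already includes the agent, so User Data usually only needs to name the cluster. Here is a minimal Python sketch that renders that script and base64-encodes it, since EC2 launch templates expect User Data as base64 text. The cluster name `my-cluster` is a placeholder. The SSM parameter path is AWS's public parameter for the recommended Amazon Linux 2 ECS-optimized AMI ID. The instances also need an instance profile that lets the agent talk to ECS; AWS's managed policy for this is `AmazonEC2ContainerServiceforEC2Role`.

```python
import base64
import re

# Public SSM parameter with the recommended ECS-optimized Amazon Linux 2 AMI ID.
# Look it up with: aws ssm get-parameter --name <path> --query Parameter.Value --output text
ECS_AMI_PARAMETER = "/aws/service/ecs/optimized-ami/amazon-linux-2/recommended/image_id"

# ECS cluster names: up to 255 letters, digits, hyphens, and underscores.
CLUSTER_NAME = re.compile(r"[A-Za-z0-9_-]{1,255}")


def render_user_data(cluster_name: str) -> str:
    """Build a User Data script telling the preinstalled ECS agent which cluster to join."""
    if not CLUSTER_NAME.fullmatch(cluster_name):
        # Rejecting invalid names also keeps arbitrary text out of the shell script.
        raise ValueError(f"invalid ECS cluster name: {cluster_name!r}")
    return (
        "#!/bin/bash\n"
        f"echo ECS_CLUSTER={cluster_name} >> /etc/ecs/ecs.config\n"
    )


def encode_for_launch_template(user_data: str) -> str:
    """EC2 launch templates expect UserData as base64-encoded text."""
    return base64.b64encode(user_data.encode("utf-8")).decode("ascii")


if __name__ == "__main__":
    script = render_user_data("my-cluster")  # placeholder cluster name
    print(script)
    print(encode_for_launch_template(script))
```

Putting this in a launch template used by your Auto Scaling Group means every replacement instance joins the right cluster without anyone logging in.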

This flow allows you to deploy and scale your containerized application efficiently, with ECS managing the complexities of placement and desired state on the EC2 fleet you control.
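The Create ECS Service and auto-scaling steps can be sketched the same way. The Python below builds the request body for `aws ecs create-service --cli-input-json`, plus a target tracking configuration for Application Auto Scaling, the service that adjusts an ECS service's desired count. The cluster, service, and container names and the target group ARN are placeholders. With `bridge` mode and dynamic host ports, the ALB target group needs the `instance` target type.

```python
import json


def build_create_service_input(target_group_arn: str) -> dict:
    """Request body for `aws ecs create-service --cli-input-json` (placeholder names)."""
    return {
        "cluster": "my-cluster",
        "serviceName": "my-web-app",
        "taskDefinition": "my-web-app",  # family only: uses the latest ACTIVE revision
        "desiredCount": 3,
        "launchType": "EC2",
        "loadBalancers": [
            {"targetGroupArn": target_group_arn, "containerName": "web", "containerPort": 8080}
        ],
        "healthCheckGracePeriodSeconds": 60,
        # Rolling updates: keep at least half the tasks running, allow up to double during deploys
        "deploymentConfiguration": {"minimumHealthyPercent": 50, "maximumPercent": 200},
    }


def scalable_target_resource_id(cluster: str, service: str) -> str:
    """Resource ID for `aws application-autoscaling register-scalable-target`
    (used with --service-namespace ecs --scalable-dimension ecs:service:DesiredCount)."""
    return f"service/{cluster}/{service}"


def build_cpu_target_tracking(target_percent: float = 60.0) -> dict:
    """Body for `put-scaling-policy --policy-type TargetTrackingScaling
    --target-tracking-scaling-policy-configuration file://policy.json`."""
    return {
        "TargetValue": target_percent,
        "PredefinedMetricSpecification": {
            "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
        },
    }


if __name__ == "__main__":
    # Placeholder target group ARN
    tg = "arn:aws:elasticloadbalancing:us-east-1:123456789012:targetgroup/my-web-app/0123456789abcdef"
    print(json.dumps(build_create_service_input(tg), indent=2))
    print(scalable_target_resource_id("my-cluster", "my-web-app"))
    print(json.dumps(build_cpu_target_tracking(), indent=2))
```

The scalable target has to be registered before the scaling policy can be attached. The 60% CPU target is only an illustrative starting point, not a recommendation.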

Common Mistakes or Misunderstandings:

- Treating Container Instances like Pets: With the EC2 launch type, it's easy to fall back into managing the EC2 instances manually (SSHing in, installing software). Resist this! Treat them as Phoenix servers – if one has an issue, terminate it and let the Auto Scaling Group replace it. Your focus should be on the containers and ECS, not the individual VMs.

- Not Setting Resource Limits: If you don't define CPU and memory values in your Task Definitions (in particular a hard `memory` limit, since `memoryReservation` on its own is only a soft limit), one runaway container can starve others on the same EC2 instance, leading to instability for unrelated applications.

- Storing Sensitive Data Inside Images: Never hardcode secrets (database passwords, API keys) directly in your container images or Task Definitions. Store them in AWS Secrets Manager or AWS Systems Manager Parameter Store, and reference them from the `secrets` section of the Task Definition. (Image 2 shows integration with Secrets Manager).

- Ignoring Logging and Monitoring: Ensure your containers log to standard output (stdout) and standard error (stderr). Configure a log driver (like `awslogs`) in each container's `logConfiguration` in the Task Definition to send these logs to Amazon CloudWatch Logs for centralized monitoring and troubleshooting.
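The three Task Definition mistakes above are easy to check mechanically. Here is a small, hypothetical Python linter (not an AWS tool) that flags containers with no hard memory limit, plaintext environment variables whose names look like secrets, and missing log configuration. The name pattern it uses to guess at secrets is an assumption and will miss things, so treat it as a starting point for a CI check.

```python
import re

# Assumed naming heuristic for values that belong in `secrets`, not `environment`.
SECRET_NAME = re.compile(r"PASSWORD|SECRET|TOKEN|API_?KEY", re.IGNORECASE)


def lint_task_definition(task_def: dict) -> list:
    """Return human-readable warnings for a register-task-definition style dict."""
    warnings = []
    task_has_memory = "memory" in task_def  # task-level memory also caps containers
    for c in task_def.get("containerDefinitions", []):
        name = c.get("name", "<unnamed>")
        # `memory` is a hard limit; `memoryReservation` alone is only a soft limit.
        if "memory" not in c and not task_has_memory:
            warnings.append(f"{name}: no hard memory limit (container or task level)")
        for env in c.get("environment", []):
            if SECRET_NAME.search(env.get("name", "")):
                warnings.append(
                    f"{name}: environment variable {env['name']} looks like a secret; "
                    "use `secrets` with `valueFrom` instead"
                )
        if "logConfiguration" not in c:
            warnings.append(f"{name}: no logConfiguration (e.g. awslogs to CloudWatch Logs)")
    return warnings


if __name__ == "__main__":
    risky = {
        "family": "my-web-app",
        "containerDefinitions": [
            {
                "name": "web",
                "image": "my_web_app:latest",
                "memoryReservation": 256,
                "environment": [{"name": "DB_PASSWORD", "value": "hunter2"}],
            }
        ],
    }
    for warning in lint_task_definition(risky):
        print(warning)
```

A fixed container would set `memory`, move `DB_PASSWORD` into `secrets`, and add something like `{"logDriver": "awslogs", "options": {"awslogs-group": "/ecs/my-web-app", "awslogs-region": "us-east-1", "awslogs-stream-prefix": "web"}}`, where the group, region, and prefix are placeholders.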

Pro Tips & Hidden Features:

- ECS Exec: Need to debug a running container? Instead of SSHing into the EC2 instance and finding the container ID, use `aws ecs execute-command` to get a shell directly inside a running container for inspection. ECS Exec must be enabled on the service or task first (for example with `--enable-execute-command` when creating or updating the service), and the task role needs the Systems Manager permissions that ECS Exec relies on.

- Placement Constraints and Strategies: You can tell ECS how to place tasks on your EC2 instances (e.g., spread them across Availability Zones, binpack them to utilize instances more fully, or require specific instance attributes). This is configured in the Service definition.

- Task Placement and Cluster Management with Capacity Providers: Use ECS Capacity Providers to manage the relationship between ECS and your EC2 Auto Scaling Groups. This allows ECS to scale the underlying EC2 capacity based on the task demands of your services, making the scaling experience more seamless.

- Service Connect: A feature for simplifying service-to-service communication within your ECS applications, providing resilient, built-in service discovery and traffic routing.
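Placement strategies, placement constraints, and a capacity provider strategy are all fields on the service request. Here is a hedged Python sketch of those fields. The capacity provider name `my-asg-capacity-provider` and the `t3.*` instance-type constraint are illustrative assumptions. The capacity provider must already be attached to the cluster (`aws ecs put-cluster-capacity-providers`) with managed scaling enabled for ECS to grow the Auto Scaling Group. Because a service uses either a `launchType` or a `capacityProviderStrategy`, not both, the helper drops `launchType` when merging.

```python
import copy


def placement_fields(capacity_provider: str) -> dict:
    """Service fields that control where tasks land (illustrative values)."""
    return {
        # Let ECS drive the Auto Scaling Group behind this capacity provider
        "capacityProviderStrategy": [
            {"capacityProvider": capacity_provider, "base": 0, "weight": 1}
        ],
        # Applied in order: spread across AZs first, then binpack on memory
        "placementStrategy": [
            {"type": "spread", "field": "attribute:ecs.availability-zone"},
            {"type": "binpack", "field": "memory"},
        ],
        # Only place tasks on t3 instances (cluster query language expression)
        "placementConstraints": [
            {"type": "memberOf", "expression": "attribute:ecs.instance-type =~ t3.*"}
        ],
    }


def with_placement(service_input: dict, capacity_provider: str) -> dict:
    """Return a copy of a create-service body that uses a capacity provider instead of launchType."""
    merged = copy.deepcopy(service_input)
    merged.pop("launchType", None)  # launchType and capacityProviderStrategy are mutually exclusive
    merged.update(placement_fields(capacity_provider))
    return merged


if __name__ == "__main__":
    service = {
        "cluster": "my-cluster",
        "serviceName": "my-web-app",
        "taskDefinition": "my-web-app",
        "desiredCount": 3,
        "launchType": "EC2",
    }
    print(with_placement(service, "my-asg-capacity-provider"))
```

Spreading across Availability Zones first protects availability, and binpacking within each zone keeps instances full enough that the Auto Scaling Group can scale in idle ones.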

Final Thoughts:

ECS with the EC2 launch type provides a powerful, flexible, and cost-effective way to run and orchestrate your containerized applications on AWS. While it requires managing the underlying EC2 instances, it offers fine-grained control and is deeply integrated with other AWS services you already use.

It's a fantastic stepping stone into the world of containers on AWS and is suitable for a wide range of workloads. Don't shy away from understanding this core pattern!

Ready to try it out? Head over to the AWS Management Console, navigate to ECS, and launch your first cluster and service using the EC2 launch type. ECS adds no extra charge for the EC2 launch type (you pay for the EC2 instances and other resources you use), and the AWS Free Tier covers limited usage of eligible EC2 instance types.

What are your thoughts? Have you used ECS with EC2? What are your favorite tips? Let me know in the comments!
