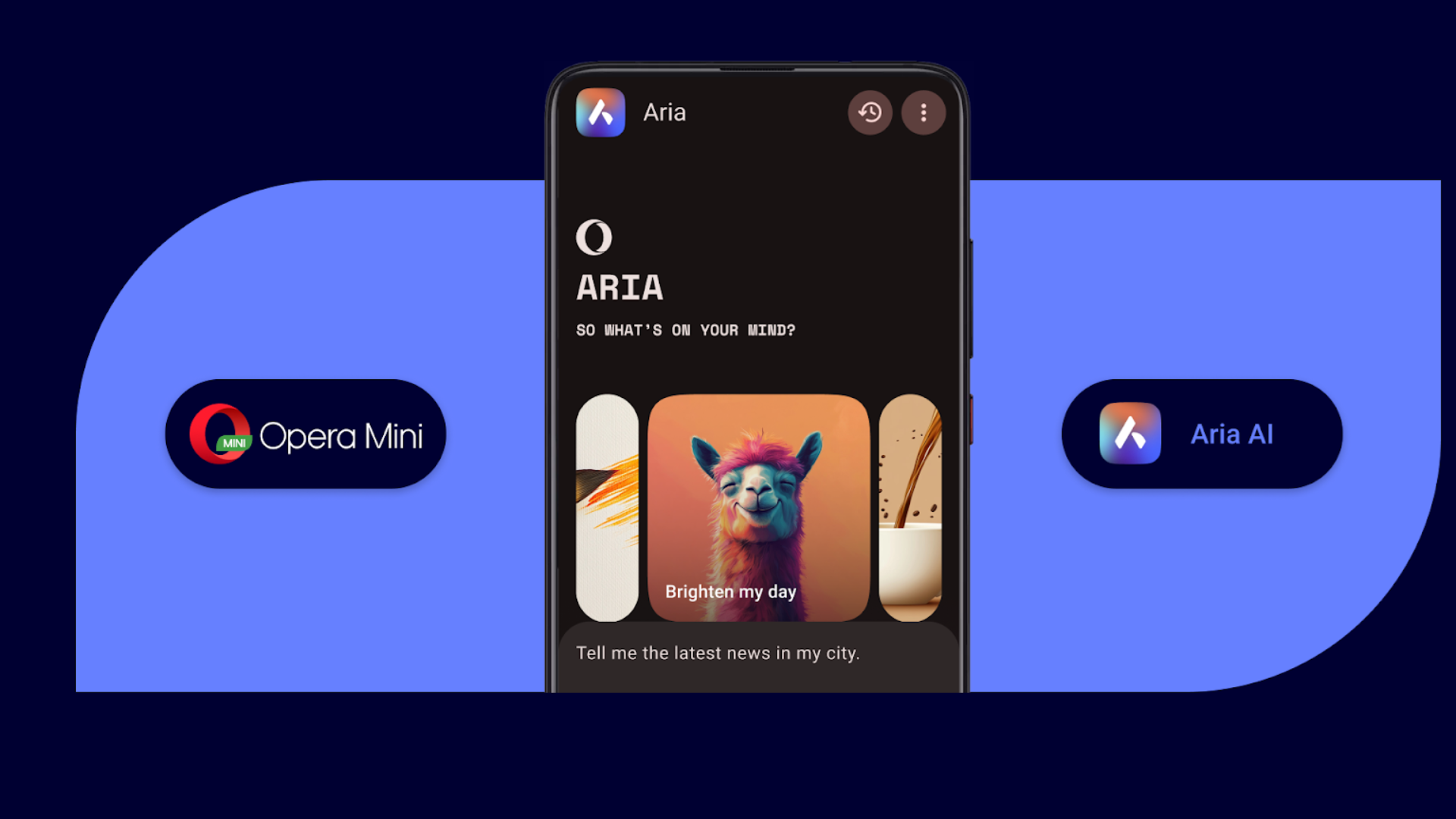

Understanding Few-Shot, Zero-Shot, and One-Shot Learning in AI

In recent years, natural language processing (NLP) and machine learning (ML) have gained significant traction, particularly with the advances in large language models such as GPT-3 and its successors. A notable property of these models is that they can adapt to a new task from only a handful of examples, or none at all, often supplied directly in the prompt rather than through additional training. This brings us to the concepts of few-shot, zero-shot, and one-shot learning.

What is Few-Shot Learning?

Few-shot learning refers to a model's ability to learn a task and make predictions from a very small number of labeled examples. With large language models, this usually means including a few worked examples in the prompt so the model can infer what is being asked. For instance, if you show a model a few sentences labeled as positive or negative reviews, it can often infer the pattern and classify new sentences as positive or negative.
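
To make this concrete, here is a minimal Python sketch of how few-shot examples are commonly supplied to a large language model: as labeled demonstrations placed directly in the prompt, with no retraining. The helper `build_few_shot_prompt`, the "Review:/Sentiment:" layout, and the sample reviews are all hypothetical; the resulting string would be sent to whichever model API you use.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Hypothetical helper: instruction, then labeled demonstrations, then the unlabeled query."""
    lines = ["Classify each review as positive or negative.", ""]
    for text, label in examples:
        # Each demonstration pairs an input with its label so the model can infer the pattern.
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The query is left unlabeled; the model is expected to complete the final line.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


# Illustrative demonstrations covering both labels.
demos = [
    ("The battery lasts all day and the screen is gorgeous.", "positive"),
    ("It stopped working after a week.", "negative"),
    ("Setup took two minutes and it just works.", "positive"),
]
prompt = build_few_shot_prompt(demos, "The hinge feels flimsy and creaks.")
print(prompt)
```

Including both positive and negative demonstrations matters: with only one label shown, the model has little evidence about what the other class looks like.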

Zero-Shot Learning Explained

Zero-shot learning takes this a step further: the model performs a task without being given any examples of that specific task. Instead, it relies on general knowledge acquired during training on other tasks and data. For example, if you ask a model to translate a sentence into another language without supplying any example translations, it can still draw on linguistic patterns learned during pre-training to produce a reasonable translation.
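
A zero-shot prompt, by contrast, contains only an instruction and the input. The Python sketch below assumes the same prompt-based setting; the helper name `build_zero_shot_prompt` and the "Sentence:/Translation:" layout are hypothetical.

```python
def build_zero_shot_prompt(text: str, target_language: str) -> str:
    """Hypothetical helper: an instruction-only prompt with no example translations."""
    return (
        f"Translate the following sentence into {target_language}.\n"
        f"Sentence: {text}\n"
        # The model is expected to complete this final line using pre-trained knowledge alone.
        f"Translation:"
    )


prompt = build_zero_shot_prompt("Where is the train station?", "French")
print(prompt)
```

Because no demonstrations are given, the quality of the output depends entirely on what the model learned during pre-training and on how clearly the instruction states the task.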

The Role of One-Shot Learning

One-shot learning sits between zero-shot and few-shot learning: the model is given exactly one example of the task to learn from. This setting is particularly relevant to applications like face recognition, where a system may need to identify a person from a single reference photograph.
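
One-shot recognition systems commonly work by comparing embeddings rather than by training a separate classifier per person: each enrolled identity contributes a single reference vector, and a new image is matched to its most similar reference. The Python sketch below illustrates only that matching step, under stated assumptions: the 4-dimensional vectors are made-up stand-ins for the output of a pretrained face-embedding network, and the cosine-similarity threshold of 0.8 is arbitrary.

```python
import math
from typing import Dict, List, Optional

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors; returns 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify(query: Vector, gallery: Dict[str, Vector], threshold: float = 0.8) -> Optional[str]:
    """Return the identity whose single reference embedding is most similar
    to the query, or None if the best match falls below the threshold."""
    best_name, best_score = None, -1.0
    for name, reference in gallery.items():
        score = cosine_similarity(query, reference)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


# Exactly one reference embedding per person (toy values, assumed for illustration).
gallery = {
    "alice": [0.9, 0.1, 0.3, 0.2],
    "bob": [0.1, 0.8, 0.2, 0.5],
}

print(identify([0.85, 0.15, 0.28, 0.22], gallery))  # prints alice
print(identify([0.0, 0.0, 1.0, 0.0], gallery))      # prints None (no match clears 0.8)
```

The threshold lets the system reject faces that resemble no enrolled person instead of forcing a match; in practice its value would be tuned on validation data for the specific embedding model.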

Practical Applications

- Few-Shot Learning: Well suited to scenarios with limited labeled data, such as medical diagnosis, where labeled examples are hard to come by.

- Zero-Shot Learning: Useful in real-time applications such as customer support chatbots, which may encounter questions they were never explicitly trained on.

- One-Shot Learning: Well suited to image recognition tasks such as face verification, where a single example per person or object may be all that is available for the model to make a prediction.

Conclusion

Understanding the nuances of few-shot, zero-shot, and one-shot learning can significantly impact how developers create AI applications. As the technology continues to evolve, mastering these concepts will empower us to build more robust and intelligent systems capable of handling diverse tasks with minimal data requirements.
