Think Fast, Code Faster: Local AI Development with Docker Model Runner


Docker has taken a bold step in the AI space with its new experimental feature, Docker Model Runner, introduced in Docker Desktop for Mac 4.40+. This feature brings Large Language Model (LLM) inference directly to your machine, integrating seamlessly into Docker’s familiar ecosystem. With native GPU acceleration and the flexibility of running AI models locally, Docker Model Runner is a game-changer for developers, data scientists, and AI enthusiasts.

Let’s dive into what Docker Model Runner is, how it works, and how you can get started.

What is Docker Model Runner?

Docker Model Runner provides a Docker-native experience for running LLMs locally on Apple Silicon Macs, leveraging GPU acceleration. Unlike traditional containerized AI models, Model Runner uses a host-installed inference server (currently powered by llama.cpp) to maximize performance. For those using Windows with NVIDIA GPUs, support is expected in April 2025.

This feature allows developers to build, test, and iterate on AI applications without relying on cloud-based APIs. Whether you’re developing AI assistants or integrating AI into existing apps, Docker Model Runner has you covered.

Key Benefits of Docker Model Runner

Local AI Inference: Perform inference tasks without external API calls, ensuring data privacy.

GPU Acceleration: Harness the full power of Apple Silicon GPUs for lightning-fast model execution.

Seamless Docker Integration: Manage AI models using familiar Docker CLI commands.

Faster Iterations: Test and iterate AI applications locally with minimal latency.

Native Docker Integration with docker model CLI

Docker Desktop 4.40+ introduces the docker model CLI, making AI models first-class citizens within Docker. You can now pull, run, inspect, and manage models with commands similar to managing containers, images, and volumes.

Here are a few useful commands:

docker model --help # List available commands

docker model list # List the available models that can be run with the Docker Model Runner

docker model status # Check if the Docker Model Runner is running

docker model ls # Alias for docker model list; shows the models pulled to your machine

docker model pull # Download a model

docker model run # Run a model with the Docker Model Runner

docker model rm # Remove a model downloaded from Docker Hub

docker model version # Show the Docker Model Runner version

How Does Docker Model Runner Work?

Unlike traditional containers, Docker Model Runner runs AI models directly on your machine. It uses llama.cpp as the inference server, bypassing the containerization layer to minimize overhead and maximize GPU utilization.

Key Technical Insights

Host-level Process: Models run directly on the host system using llama.cpp.

GPU Acceleration: Apple Silicon’s Metal API is used for GPU acceleration.

Model Loading: Models are pulled from Docker Hub and cached locally. They are dynamically loaded into memory for efficient inference.

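Containers on macOS run inside a Linux virtual machine that typically cannot reach the Apple GPU, so running the inference server on the host is what lets models use Metal acceleration. In practice this means faster model execution than containerized AI solutions on the same Mac, which shortens development time and improves the developer experience.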

Who Should Use Docker Model Runner?

Docker Model Runner is designed for:

AI Developers: Build and test GenAI-powered apps locally.

Data Scientists: Efficiently run and evaluate models using local GPU resources.

Application Developers: Integrate AI capabilities into applications without leaving the Docker ecosystem.

Teams Developing AI Prototypes: Rapidly iterate on AI applications without cloud dependency.

Getting Started with Docker Model Runner

Ready to experience Docker Model Runner? Follow these steps to get started:

Step 1: Install Docker Desktop 4.40+

Ensure you have Docker Desktop 4.40 or later installed on your Apple Silicon Mac. You can download the latest version from the Docker website.

At the time of writing, I am running Docker Desktop 4.40.0 (186969), which Docker Desktop reports as the newest version available.

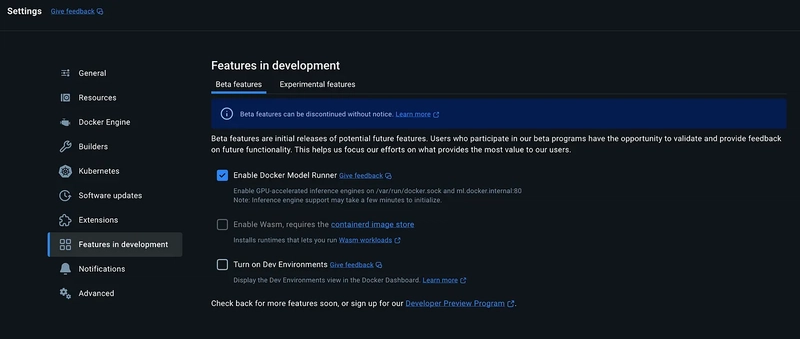

Step 2: Enable Model Runner

Open Docker Desktop.

Navigate to Settings > Features in Development.

Ensure Docker Model Runner is enabled.

For TCP support, also enable “Enable host-side TCP support”. This exposes the Model Runner API to processes on the host over TCP, on port 12434 by default.
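If you enabled host-side TCP support, a quick way to confirm that something is listening on the port is a plain TCP connection attempt. The following is a minimal sketch, not part of Docker's tooling: the function name is hypothetical, and it assumes the default port 12434 on localhost. It only checks that the port accepts connections, not that a model is loaded.

```python
import socket


def is_port_open(host: str = "localhost", port: int = 12434, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        # create_connection resolves the host and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and name resolution failures.
        return False


if __name__ == "__main__":
    state = "reachable" if is_port_open() else "not reachable"
    print(f"Model Runner TCP port 12434 is {state}")
```

If the port is not reachable, re-check the setting above before debugging your application code.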

Step 3: Verify Installation

Run the following command to check if Model Runner is active:

command: docker model status

# Output: Docker Model Runner is running

~/Documents/docker docker model status

Docker Model Runner is running
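The same check can be scripted, for example as a precondition in a setup script. This is a rough sketch under two assumptions: the docker CLI is on your PATH, and the status text contains "is running" as in the output shown above. The function name and the injectable run parameter are illustrative, not part of any Docker API.

```python
import subprocess
from typing import Callable


def model_runner_is_running(
    run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> bool:
    """Run `docker model status` and report whether it says the runner is running."""
    try:
        result = run(
            ["docker", "model", "status"],
            capture_output=True,
            text=True,
            check=False,
        )
    except FileNotFoundError:
        # The docker CLI is not installed or not on PATH.
        return False
    # Assumption: a healthy runner prints "Docker Model Runner is running".
    return result.returncode == 0 and "is running" in result.stdout


if __name__ == "__main__":
    print("active" if model_runner_is_running() else "inactive or unavailable")
```

Passing run as a parameter keeps the function easy to test without Docker installed.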

Step 4: Download a Model

Download a model from Docker Hub using the following command:

command: docker model pull ai/llama3.2:1B-Q8_0

~/Documents/docker docker model pull ai/llama3.2:1B-Q8_0

Downloaded: 1259.13 MB

Model ai/llama3.2:1B-Q8_0 pulled successfully

You can choose from a range of models, including:

ai/llama3.2

ai/gemma3

ai/qwen2.5

ai/mistral

Refer here for more models: https://hub.docker.com/u/ai
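Model names follow the familiar namespace/name:tag shape, as in ai/llama3.2:1B-Q8_0. If you handle model names in your own scripts, a small parser like the sketch below can help validate them. The type and function names are hypothetical, and the sketch deliberately does not guess which tag Docker uses when none is given.

```python
from typing import NamedTuple, Optional


class ModelRef(NamedTuple):
    namespace: str
    name: str
    tag: Optional[str]  # None when the reference has no explicit tag


def parse_model_ref(ref: str) -> ModelRef:
    """Split 'namespace/name[:tag]' into its parts."""
    repo, _, tag = ref.partition(":")
    namespace, slash, name = repo.partition("/")
    if not slash or not namespace or not name:
        raise ValueError(f"expected 'namespace/name[:tag]', got {ref!r}")
    return ModelRef(namespace, name, tag or None)


if __name__ == "__main__":
    print(parse_model_ref("ai/llama3.2:1B-Q8_0"))
    print(parse_model_ref("ai/gemma3"))
```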

Step 5: Run a Model

Send a simple input to your model using:

command: docker model run ai/llama3.2:1B-Q8_0 "Hi, how are you?"

# example Output: Hello! How can I help you today?

~/Documents/docker docker model run ai/llama3.2:1B-Q8_0 "Hi, how are you?"

I'm just a computer program, so I don't have emotions or feelings like humans do, but thanks for asking! How can I assist you today?

You can also run models in interactive mode:

command: docker model run ai/llama3.2:1B-Q8_0

> Why is the sky blue?

~/Documents/docker docker model run ai/llama3.2:1B-Q8_0

Interactive chat mode started. Type '/bye' to exit.

> Why is the sky blue?

The sky appears blue because of a phenomenon called Rayleigh scattering, named after the British physicist Lord Rayleigh, who first described it in the late 19th century.

Here's what happens:

**The Short Answer:** The shorter (blue) wavelengths of light are scattered more than the longer (red) wavelengths, giving the sky its blue appearance.

**The Longer Answer:**

When sunlight enters Earth's atmosphere, it encounters tiny molecules of gases such as nitrogen (N2) and oxygen (O2). These molecules are much smaller than the wavelength of light, and they scatter the light in all directions. This scattering effect is more pronounced for shorter wavelengths, like blue and violet, due to the smaller particle sizes.

There are two types of scattering that occur in the atmosphere:

1. **Rayleigh scattering**: This type of scattering occurs when light is scattered by small particles, like the molecules of gases in the atmosphere. The shorter wavelengths (like blue and violet) are scattered more than the longer wavelengths (like red and orange). This is because the smaller particles are more effective at scattering the shorter wavelengths.

2. **Mie scattering**: This type of scattering occurs when light is scattered by larger particles, like dust and pollen. The longer wavelengths (like red and orange) are scattered less than the shorter wavelengths.

**Why the blue color dominates:**

As sunlight enters the atmosphere, it encounters more Rayleigh scattering than Mie scattering. The scattered blue light is then reflected back into our eyes, creating the illusion of a blue sky. The longer wavelengths, like red and orange, are scattered in all directions and are not reflected back, making them appear more red.

**Additional factors:**

Other factors can also influence the color of the sky, such as:

* **Dust and pollution**: Tiny particles in the atmosphere can scatter light in different ways, changing the color of the sky.

* **Clouds**: Clouds can reflect or absorb light, changing the color of the sky.

* **Time of day and year**: The angle of the sun and the position of the Earth in its orbit can also affect the color of the sky.

Now, the next time you gaze up at the sky, remember that the blue color is a result of a fascinating combination of Rayleigh and Mie scattering!

> /bye

Chat session ended.

Step 6: Remove a Model

When you no longer need a model, remove it using:

command: docker model rm ai/llama3.2:1B-Q8_0

~/Doc/docker docker model rm ai/llama3.2:1B-Q8_0

Model ai/llama3.2:1B-Q8_0 removed successfully

Using Model Runner in Your Application

You can integrate Model Runner into your AI applications using its OpenAI-compatible endpoints. Follow these steps to build a GenAI application:

Step 1: Download the Model

docker model pull ai/llama3.2:1B-Q8_0

Step 2: Clone the Repository from the official Docker GitHub

https://github.com/docker/hello-genai

git clone https://github.com/docker/hello-genai

cd hello-genai

~/Documents/docker git clone https://github.com/docker/hello-genai

cd hello-genai

Cloning into 'hello-genai'...

remote: Enumerating objects: 31, done.

remote: Counting objects: 100% (31/31), done.

remote: Compressing objects: 100% (26/26), done.

remote: Total 31 (delta 4), reused 27 (delta 2), pack-reused 0 (from 0)

Receiving objects: 100% (31/31), 15.49 KiB | 7.75 MiB/s, done.

Resolving deltas: 100% (4/4), done.

~/Documents/docker/hello-genai main ll

total 56

-rw-r--r-- 1 macpro staff 413B 29 Mar 18:15 Dockerfile

-rw-r--r-- 1 macpro staff 11K 29 Mar 18:15 LICENSE

-rw-r--r-- 1 macpro staff 1.1K 29 Mar 18:15 README.md

-rw-r--r-- 1 macpro staff 800B 29 Mar 18:15 docker-compose.yml

drwxr-xr-x 7 macpro staff 224B 29 Mar 18:15 go-genai

drwxr-xr-x 6 macpro staff 192B 29 Mar 18:15 node-genai

drwxr-xr-x 7 macpro staff 224B 29 Mar 18:15 py-genai

-rwxr-xr-x 1 macpro staff 517B 29 Mar 18:15 run.sh

Step 3: Configure the Backend: Set the environment variables

BASE_URL=http://model-runner.docker.internal/engines/llama.cpp/v1/

MODEL=ai/llama3.2:1B-Q8_0

~/Documents/docker/hello-genai BASE_URL=http://model-runner.docker.internal/engines/llama.cpp/v1/

MODEL=ai/llama3.2:1B-Q8_0
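To illustrate how a backend might consume these two variables, here is a minimal sketch. It is not the code from the hello-genai repository: the BackendConfig type and both helper functions are hypothetical, and the defaults simply mirror the values shown above.

```python
import os
from typing import Mapping, NamedTuple


class BackendConfig(NamedTuple):
    base_url: str
    model: str


def load_backend_config(env: Mapping[str, str] = os.environ) -> BackendConfig:
    """Read BASE_URL and MODEL, falling back to the values used in this article."""
    base_url = env.get(
        "BASE_URL", "http://model-runner.docker.internal/engines/llama.cpp/v1/"
    )
    model = env.get("MODEL", "ai/llama3.2:1B-Q8_0")
    # Normalize to exactly one trailing slash so paths can be appended safely.
    return BackendConfig(base_url.rstrip("/") + "/", model)


def endpoint(config: BackendConfig, path: str) -> str:
    """Join the base URL with an API path without doubling slashes."""
    return config.base_url + path.lstrip("/")


if __name__ == "__main__":
    cfg = load_backend_config()
    print(cfg.model, endpoint(cfg, "chat/completions"))
```

Note that model-runner.docker.internal is the hostname given above for use from inside containers; a process running directly on the host would instead use the TCP port enabled earlier.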

Step 4: Start the Application

command: docker compose up -d

~/Documents/docker/hello-genai main docker compose up -d

Compose now can delegate build to bake for better performances

Just set COMPOSE_BAKE=true

[+] Building 12.1s (47/47) FINISHED docker:desktop-linux

=> [node-genai internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 329B 0.0s

=> [go-genai internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 706B 0.0s

=> [python-genai internal] load build definition from Dockerfile 0.0s

=> => transferring dockerfile: 421B 0.0s

=> [go-genai internal] load metadata for docker.io/library/alpine:latest 1.3s

=> [go-genai internal] load metadata for docker.io/library/golang:1.21-alpine 1.3s

=> [python-genai internal] load metadata for docker.io/library/python:3.11-slim 1.3s

=> [node-genai internal] load metadata for docker.io/library/node:20-alpine 1.3s

=> [go-genai auth] library/alpine:pull token for registry-1.docker.io 0.0s

=> [go-genai auth] library/golang:pull token for registry-1.docker.io 0.0s

=> [python-genai auth] library/python:pull token for registry-1.docker.io 0.0s

=> [node-genai auth] library/node:pull token for registry-1.docker.io 0.0s

=> [python-genai internal] load .dockerignore 0.0s

=> => transferring context: 271B 0.0s

=> [go-genai internal] load .dockerignore 0.0s

=> => transferring context: 239B 0.0s

=> [node-genai internal] load .dockerignore 0.0s

=> => transferring context: 2B 0.0s

=> [python-genai 1/7] FROM docker.io/library/python:3.11-slim@sha256:7029b00486ac40bed03e36775b864d3f3d39dcbdf19cd45 3.5s

=> => resolve docker.io/library/python:3.11-slim@sha256:7029b00486ac40bed03e36775b864d3f3d39dcbdf19cd45e6a52d541e6c1 0.0s

=> => sha256:7029b00486ac40bed03e36775b864d3f3d39dcbdf19cd45e6a52d541e6c178f0 9.13kB / 9.13kB 0.0s

=> => sha256:b302c93452310b37ffcd9436bbfdb0a3d5b60b19dd3c86badb83b66d20c38ca4 1.75kB / 1.75kB 0.0s

=> => sha256:f4c484c0338e730d3e53480fb48088dc3b3a016858b94559ce7087577ae3b204 5.31kB / 5.31kB 0.0s

=> => sha256:d9b6365477446a79987b20560ae52637be6f54d6d2f801e16aaa0ca25dd0964b 28.04MB / 28.04MB 1.3s

=> => sha256:3f529d1f5c642f942a2f18a72c6b14f378767cdf3f66131f04497701e8361712 3.33MB / 3.33MB 1.0s

=> => sha256:18513d00e8c20c79d50d6646c0f48fe4349ead2882c93b54d94bb54917adbd90 16.13MB / 16.13MB 1.4s

=> => sha256:82a6dca8a532d0999dead89c78b567015ba2ee32175dea32fefbecf004cad9d6 249B / 249B 1.2s

=> => extracting sha256:d9b6365477446a79987b20560ae52637be6f54d6d2f801e16aaa0ca25dd0964b 1.3s

=> => extracting sha256:3f529d1f5c642f942a2f18a72c6b14f378767cdf3f66131f04497701e8361712 0.1s

=> => extracting sha256:18513d00e8c20c79d50d6646c0f48fe4349ead2882c93b54d94bb54917adbd90 0.6s

=> => extracting sha256:82a6dca8a532d0999dead89c78b567015ba2ee32175dea32fefbecf004cad9d6 0.0s

=> [python-genai internal] load build context 0.0s

=> => transferring context: 9.84kB 0.0s

=> [go-genai builder 1/7] FROM docker.io/library/golang:1.21-alpine@sha256:2414035b086e3c42b99654c8b26e6f5b1b1598080 4.7s

=> => resolve docker.io/library/golang:1.21-alpine@sha256:2414035b086e3c42b99654c8b26e6f5b1b1598080d65fd03c7f499552f 0.0s

=> => sha256:2414035b086e3c42b99654c8b26e6f5b1b1598080d65fd03c7f499552ff4dc94 10.30kB / 10.30kB 0.0s

=> => sha256:5bc6d0431a4fdc57fb24d437c177c5e02fde1d0585eeb4c5d483c1b65aebfb00 1.92kB / 1.92kB 0.0s

=> => sha256:2bbe4e7e4d4e0f6f1b6c7192f01b9c7099e921b9fe8eae0c5c939a1d257f7e81 2.10kB / 2.10kB 0.0s

=> => sha256:690e87867337b8441990047e169b892933e9006bdbcbed52ab7a356945477a4d 4.09MB / 4.09MB 0.2s

=> => sha256:171883aaf475f5dea5723bb43248d9cf3f3c3a7cf5927947a8bed4836bbccb62 293.51kB / 293.51kB 0.2s

=> => sha256:2a6022646f09ee78a83ef4abd0f5af04071b6563cf16a18e00fb2dcfe63ca0a3 64.11MB / 64.11MB 1.0s

=> => extracting sha256:690e87867337b8441990047e169b892933e9006bdbcbed52ab7a356945477a4d 0.1s

=> => sha256:e495e1face5cc12777f452389e1da15202c37ec00ba024f12f841b5c90a47057 127B / 127B 0.5s

=> => sha256:4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1 32B / 32B 0.5s

=> => extracting sha256:171883aaf475f5dea5723bb43248d9cf3f3c3a7cf5927947a8bed4836bbccb62 0.0s

=> => extracting sha256:2a6022646f09ee78a83ef4abd0f5af04071b6563cf16a18e00fb2dcfe63ca0a3 3.6s

=> => extracting sha256:e495e1face5cc12777f452389e1da15202c37ec00ba024f12f841b5c90a47057 0.0s

=> => extracting sha256:4f4fb700ef54461cfa02571ae0db9a0dc1e0cdb5577484a6d75e68dc38e8acc1 0.0s

=> [go-genai stage-1 1/4] FROM docker.io/library/alpine:latest@sha256:a8560b36e8b8210634f77d9f7f9efd7ffa463e380b75e2 1.7s

=> => resolve docker.io/library/alpine:latest@sha256:a8560b36e8b8210634f77d9f7f9efd7ffa463e380b75e2e74aff4511df3ef88 0.0s

=> => sha256:a8560b36e8b8210634f77d9f7f9efd7ffa463e380b75e2e74aff4511df3ef88c 9.22kB / 9.22kB 0.0s

=> => sha256:757d680068d77be46fd1ea20fb21db16f150468c5e7079a08a2e4705aec096ac 1.02kB / 1.02kB 0.0s

=> => sha256:8d591b0b7dea080ea3be9e12ae563eebf9869168ffced1cb25b2470a3d9fe15e 597B / 597B 0.0s

=> => sha256:6e771e15690e2fabf2332d3a3b744495411d6e0b00b2aea64419b58b0066cf81 3.99MB / 3.99MB 1.5s

=> => extracting sha256:6e771e15690e2fabf2332d3a3b744495411d6e0b00b2aea64419b58b0066cf81 0.1s

=> [go-genai internal] load build context 0.0s

=> => transferring context: 12.11kB 0.0s

=> [node-genai 1/6] FROM docker.io/library/node:20-alpine@sha256:8bda036ddd59ea51a23bc1a1035d3b5c614e72c01366d989f41 3.3s

=> => resolve docker.io/library/node:20-alpine@sha256:8bda036ddd59ea51a23bc1a1035d3b5c614e72c01366d989f4120e8adca196 0.0s

=> => sha256:8bda036ddd59ea51a23bc1a1035d3b5c614e72c01366d989f4120e8adca196d4 7.67kB / 7.67kB 0.0s

=> => sha256:646bc11400802534d70bc576fc9704bb6409e0ed802658a8e785472d160c38d3 1.72kB / 1.72kB 0.0s

=> => sha256:f06038a15a690bf7aa19f84b6d7bf2646640654b47fd5d2dd8fca56ff81f0789 6.20kB / 6.20kB 0.0s

=> => sha256:6e771e15690e2fabf2332d3a3b744495411d6e0b00b2aea64419b58b0066cf81 3.99MB / 3.99MB 1.5s

=> => sha256:8bfaf621f0ce4e4ddf5efac2af68a506ee03c6cb9151dd7f9b2b8937b3cfdfb5 1.26MB / 1.26MB 1.7s

=> => sha256:0e3e948a6346a46c6ad228463eb896bd63a271b9b122fc90d39a8702b2349746 42.63MB / 42.63MB 2.0s

=> => extracting sha256:6e771e15690e2fabf2332d3a3b744495411d6e0b00b2aea64419b58b0066cf81 0.1s

=> => sha256:8d73ebbcecf2389cf3461a46c9a11972293f334e5093233adb4f1c65ef91e4e0 444B / 444B 1.7s

=> => extracting sha256:0e3e948a6346a46c6ad228463eb896bd63a271b9b122fc90d39a8702b2349746 1.1s

=> => extracting sha256:8bfaf621f0ce4e4ddf5efac2af68a506ee03c6cb9151dd7f9b2b8937b3cfdfb5 0.0s

=> => extracting sha256:8d73ebbcecf2389cf3461a46c9a11972293f334e5093233adb4f1c65ef91e4e0 0.0s

=> [node-genai internal] load build context 0.0s

=> => transferring context: 10.20kB 0.0s

=> [go-genai stage-1 2/4] RUN apk --no-cache add ca-certificates 0.8s

=> [go-genai stage-1 3/4] WORKDIR /root/ 0.1s

=> [node-genai 2/6] WORKDIR /app 0.1s

=> [node-genai 3/6] COPY package*.json ./ 0.0s

=> [node-genai 4/6] RUN npm install 2.4s

=> [python-genai 2/7] WORKDIR /app 0.0s

=> [python-genai 3/7] COPY requirements.txt . 0.0s

=> [python-genai 4/7] RUN pip install --no-cache-dir -r requirements.txt 2.9s

=> [go-genai builder 2/7] RUN apk add --no-cache git 0.8s

=> [go-genai builder 3/7] WORKDIR /app 0.0s

=> [go-genai builder 4/7] COPY go.mod ./ 0.0s

=> [go-genai builder 5/7] RUN go mod download 0.1s

=> [go-genai builder 6/7] COPY . . 0.0s

=> [go-genai builder 7/7] RUN CGO_ENABLED=0 GOOS=linux go build -o /go-genai 4.7s

=> [node-genai 5/6] COPY . . 0.0s

=> [node-genai 6/6] RUN mkdir -p views 0.2s

=> [node-genai] exporting to image 0.1s

=> => exporting layers 0.1s

=> => writing image sha256:05338f427d2bc2449ac87eea0888961c68a93df74ca11b535b1f292a7179b098 0.0s

=> => naming to docker.io/library/hello-genai-node-genai 0.0s

=> [node-genai] resolving provenance for metadata file 0.0s

=> [python-genai 5/7] COPY . . 0.0s

=> [python-genai 6/7] RUN mkdir -p templates 0.1s

=> [python-genai 7/7] COPY templates/index.html templates/ 0.0s

=> [python-genai] exporting to image 0.1s

=> => exporting layers 0.1s

=> => writing image sha256:ee3a3acc92d2574fd1ce81ad8e167e2bedb32202b5cbf3cd0e80927797a37893 0.0s

=> => naming to docker.io/library/hello-genai-python-genai 0.0s

=> [python-genai] resolving provenance for metadata file 0.0s

=> [go-genai stage-1 4/4] COPY --from=builder /go-genai . 0.0s

=> [go-genai] exporting to image 0.1s

=> => exporting layers 0.1s

=> => writing image sha256:7ce7c4d915612db46ca4ee65dc53499a69509a80d8ecbb372366c58a1f6be533 0.0s

=> => naming to docker.io/library/hello-genai-go-genai 0.0s

=> [go-genai] resolving provenance for metadata file 0.0s

[+] Running 7/7

✔ go-genai Built 0.0s

✔ node-genai Built 0.0s

✔ python-genai Built 0.0s

✔ Network hello-genai_default Created 0.0s

✔ Container hello-genai-python-genai-1 Started 0.4s

✔ Container hello-genai-node-genai-1 Started 0.4s

✔ Container hello-genai-go-genai-1 Started

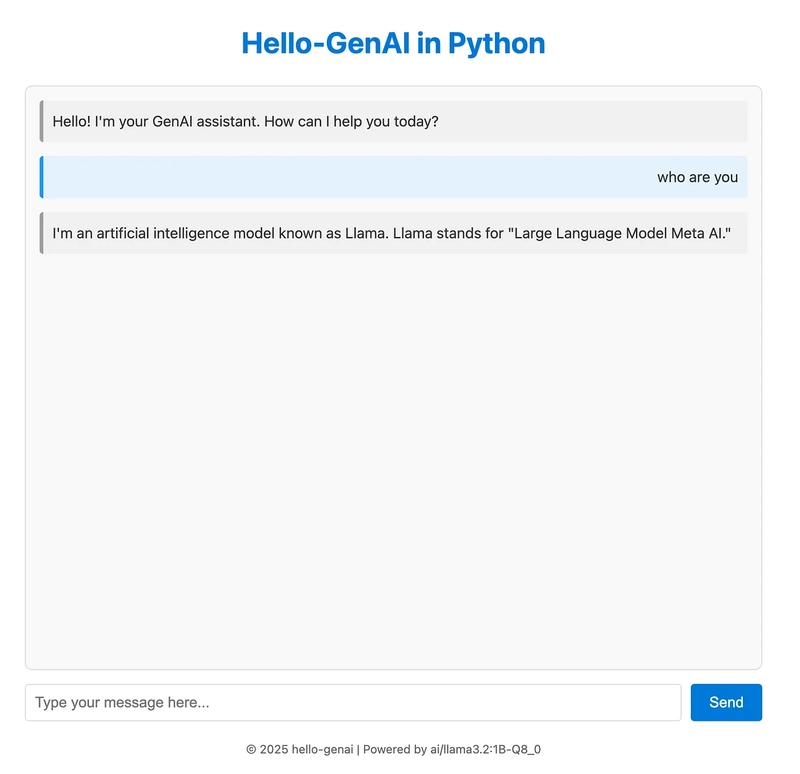

Access the App: Visit http://localhost:8081 to interact with your AI-powered application.

Step 5: Chat with the Application

Step 6: Stop the Application

Stop and remove the containers when you are finished:

~/Documents/docker/hello-genai main docker-compose down

[+] Running 4/4

✔ Container hello-genai-go-genai-1 Removed 0.2s

✔ Container hello-genai-python-genai-1 Removed 10.3s

✔ Container hello-genai-node-genai-1 Removed 10.3s

✔ Network hello-genai_default Removed
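The OpenAI-compatible endpoints can also be called directly from your own code, without the sample app. The sketch below uses only the Python standard library and rests on several assumptions: host-side TCP support is enabled on port 12434, the model has been pulled, the base path matches the BASE_URL shown earlier, and the server follows the usual OpenAI chat completions request and response shape. The function names are illustrative.

```python
import json
import urllib.request

# Assumption: host-side TCP support is enabled, so the API is on localhost:12434.
BASE_URL = "http://localhost:12434/engines/llama.cpp/v1/"
MODEL = "ai/llama3.2:1B-Q8_0"


def build_chat_request(
    prompt: str, base_url: str = BASE_URL, model: str = MODEL
) -> urllib.request.Request:
    """Build a POST request in the OpenAI chat completions format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, timeout: float = 60.0) -> str:
    """Send the prompt and return the text of the first choice."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(ask("Hi, how are you?"))
```

Because the request format is the OpenAI one, an existing OpenAI client library pointed at the same base URL should work in a similar way.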

Conclusion

Docker Model Runner makes AI development faster, simpler, and more efficient by bringing AI inference to your local machine. With powerful GPU acceleration and seamless Docker integration, you can build and test GenAI applications with minimal friction.

Whether you’re an AI researcher, developer, or enthusiast, Docker Model Runner is an invaluable addition to your AI toolkit. Give it a try and experience the future of local AI development.

Stay tuned for upcoming updates, including Windows support and additional model integrations!

Happy coding!
