The guide to MCP I never had.


AI agents are finally stepping beyond chat. They are solving multi-step problems, coordinating workflows and operating autonomously. And behind many of these breakthroughs is MCP.

MCP is going viral. But if you are overwhelmed by the jargon, you’re not alone.

Today, we will explore why existing AI tools fall short and how MCP solves that problem. We will cover the core components, why they matter, the 3-layer architecture and its limitations. You will also find practical examples with use cases.

Just the guide I wish I had when I started.

What is covered?

In a nutshell, we are covering these topics in detail.

- The problem of existing AI tools.

- Introduction to MCP and its core components.

- How does MCP work under the hood?

- The problem MCP solves and why it even matters.

- The 3 Layers of MCP (and how I finally understood them).

- The easiest way to connect 100+ managed MCP servers with built-in Auth.

- Six practical examples with demos.

- Some limitations of MCP.

We will be covering a lot, so let's get started.

1. The problem of existing AI tools.

If you've ever tried building an AI agent that actually does things, like checking emails or sending Slack messages as part of your workflow, you know the pain: the process is messy, and most of the time the output isn't worth it.

Yes, we have amazing APIs. Yes, tools exist.

But in practice, their usability and reliability fall short.

Even tools like Cursor (which got ultra-hyped on Twitter) have recently drawn complaints about poor performance.

1. Too many APIs, not nearly enough context

Every tool you want the AI to use is basically a mini API integration. So imagine a user says: "Did Anmol email me about yesterday's meet report?"

For an LLM to answer, it has to:

- Realize this is an email search task, not a Slack or Notion query.

- Pick the correct endpoint, let's say `search_email_messages`.

- Parse and summarize the results in natural language.

All while staying within the context window. That’s a lot. Models often forget, guess or hallucinate their way through it.

And if you cannot verify the accuracy, you don't even realize the problem.

2. APIs are step-based but LLMs aren’t good at remembering steps.

Let's take a basic CRM example.

- First, you get the contact ID → `get_contact_id`

- Then, fetch their current data → `read_contact`

- Finally, patch the update → `patch_contact`

In traditional code, you can abstract this into a function and be done. But with LLMs? Each step is a chance to mess up due to a wrong parameter, missed field or broken chain. And suddenly your “AI assistant” is just apologizing in natural language instead of updating anything.
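To make the contrast concrete, here is a minimal Python sketch of that abstraction. The in-memory `FakeCRM` class and the `update_contact_phone` wrapper are hypothetical stand-ins for a real CRM SDK; only the three step names come from the example above.

```python
from typing import Any


class FakeCRM:
    """Hypothetical in-memory CRM exposing the three step-based endpoints."""

    def __init__(self) -> None:
        self._ids = {"anmol@example.com": "c-001"}
        self._records: dict[str, dict[str, Any]] = {
            "c-001": {"name": "Anmol", "email": "anmol@example.com", "phone": "555-0100"}
        }

    def get_contact_id(self, email: str) -> str:
        # Step 1: resolve a lookup key to the CRM's internal ID.
        return self._ids[email]

    def read_contact(self, contact_id: str) -> dict[str, Any]:
        # Step 2: fetch the current record (a copy, so callers can't mutate state).
        return dict(self._records[contact_id])

    def patch_contact(self, contact_id: str, changes: dict[str, Any]) -> dict[str, Any]:
        # Step 3: apply a partial update and return the new record.
        self._records[contact_id].update(changes)
        return dict(self._records[contact_id])


def update_contact_phone(crm: FakeCRM, email: str, new_phone: str) -> dict[str, Any]:
    """One function hides the whole chain, so no step can be skipped or reordered."""
    contact_id = crm.get_contact_id(email)
    current = crm.read_contact(contact_id)
    if current.get("phone") == new_phone:
        return current  # nothing to patch
    return crm.patch_contact(contact_id, {"phone": new_phone})
```

An LLM calling these as three separate tools has to carry `contact_id` from step 1 into steps 2 and 3 on its own; the wrapper makes that hand-off impossible to get wrong.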

3. Fragile tower of prompt engineering

APIs evolve. Docs change. Auth flows get updated. You might wake up one morning to find that your perfectly working agent now breaks due to third-party changes.

And unlike traditional apps, there's no shared framework or abstraction to fall back on. Every AI tool integration is a fragile tower of prompt engineering and hand-crafted JSON, and every upstream change risks breaking your AI agent's "muscle memory."

4. Vendor lock-in.

Built your tools for GPT-4? Cool. But you will need to rewrite all your function descriptions and system prompts from scratch if you ever switch to another model like Claude or Gemini.

It's not a huge issue on its own, but there is no universal solution either.
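Here is what that duplication looks like in practice. The sketch below takes one tool definition and emits it in the two shapes expected by OpenAI's Chat Completions `tools` parameter and Anthropic's Messages API `tools` parameter, which differ in nesting and key names (`parameters` vs `input_schema`). The `search_email_messages` tool and its fields are hypothetical.

```python
import copy
from typing import Any

# One provider-neutral description of a hypothetical email-search tool.
SEARCH_EMAILS: dict[str, Any] = {
    "name": "search_email_messages",
    "description": "Search the user's inbox by sender and keyword.",
    "schema": {
        "type": "object",
        "properties": {
            "sender": {"type": "string", "description": "Sender name or address"},
            "query": {"type": "string", "description": "Keywords to match"},
        },
        "required": ["query"],
    },
}


def to_openai(tool: dict[str, Any]) -> dict[str, Any]:
    # OpenAI Chat Completions nests the definition under "function"
    # and calls the JSON Schema "parameters".
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": copy.deepcopy(tool["schema"]),
        },
    }


def to_anthropic(tool: dict[str, Any]) -> dict[str, Any]:
    # Anthropic's Messages API keeps it flat and calls the schema "input_schema".
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": copy.deepcopy(tool["schema"]),
    }
```

Every adapter like this is glue code you own and must update whenever a vendor changes its format. The idea behind MCP is that a server describes each tool once, and the client handles presenting it to whichever model it uses.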

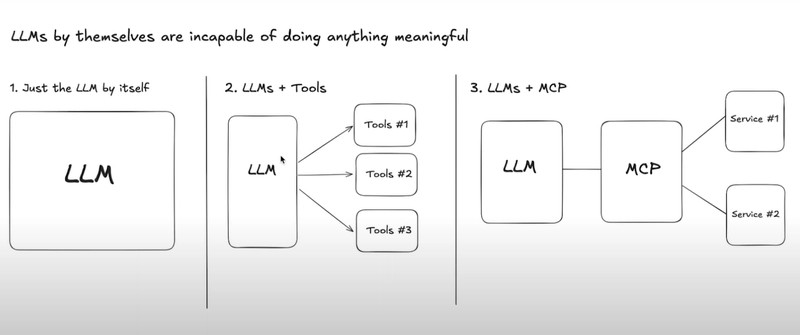

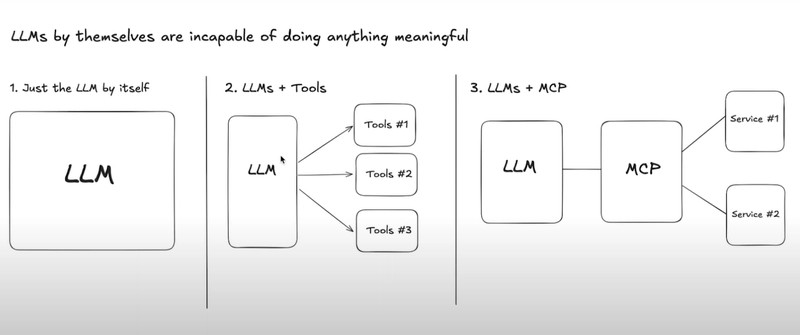

There has to be a way for tools and models to communicate cleanly, without stuffing all the logic into bloated prompts. That's where MCP comes in.

2. Introduction to MCP and its core components.

Model Context Protocol (MCP) is a new open protocol that standardizes how applications provide context and tools to LLMs.

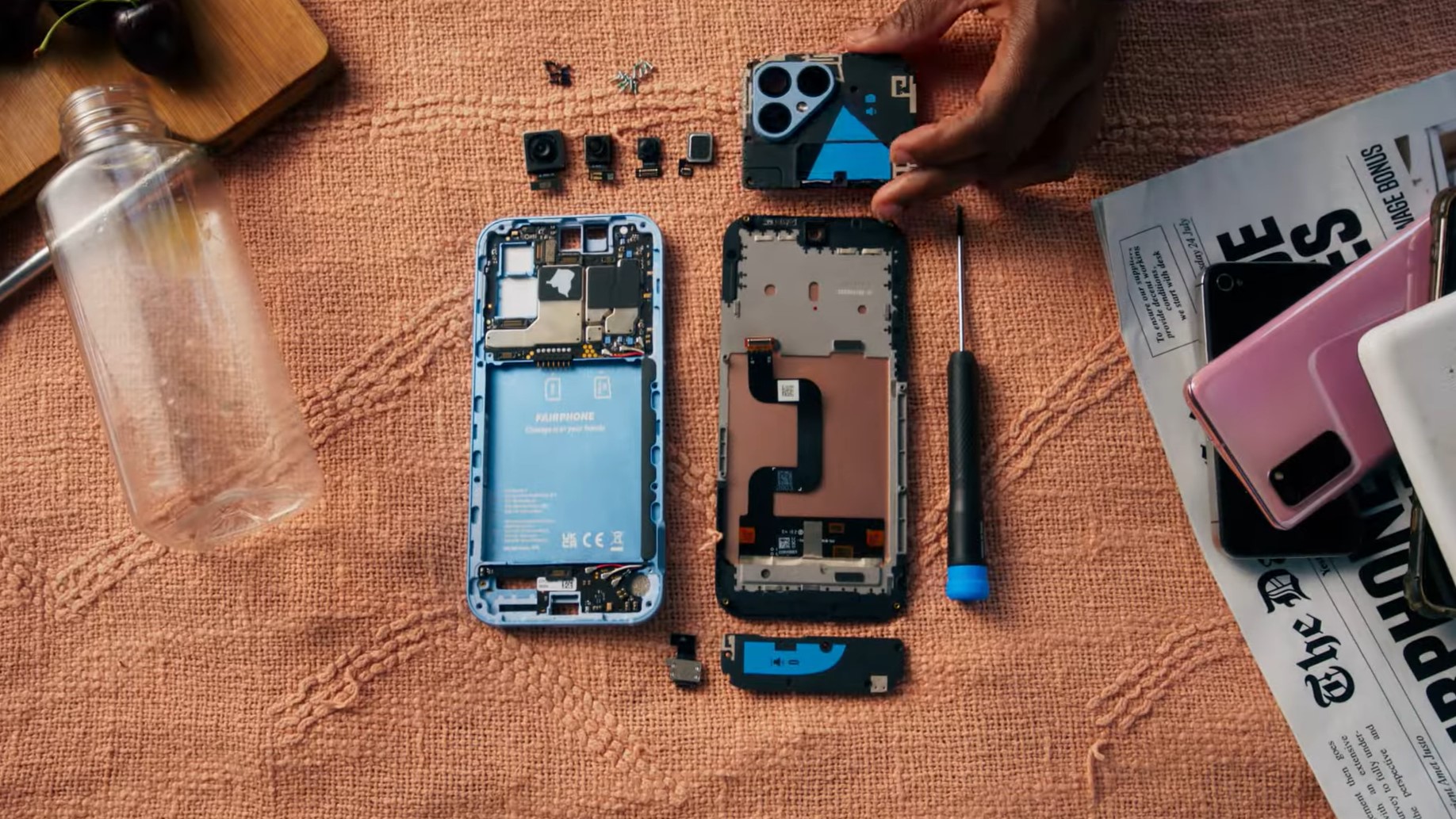

Think of it as a universal connector for AI. In Cursor, for example, MCP works as a plugin system that lets you extend the Agent's capabilities by connecting it to various data sources and tools.

Credit goes to Greg Isenberg on YouTube


MCP helps you build agents and complex workflows on top of LLMs.

For example, an MCP server for Obsidian helps AI assistants search and read notes from your Obsidian vault.

Your AI agent can now:

→ Send emails through Gmail

→ Create tasks in Linear

→ Search documents in Notion

→ Post messages in Slack

→ Update records in Salesforce

All by sending natural-language instructions through a standardized interface.

Think about what this means for productivity. Tasks that once required switching between 5+ apps can now happen in a single conversation with your agent.

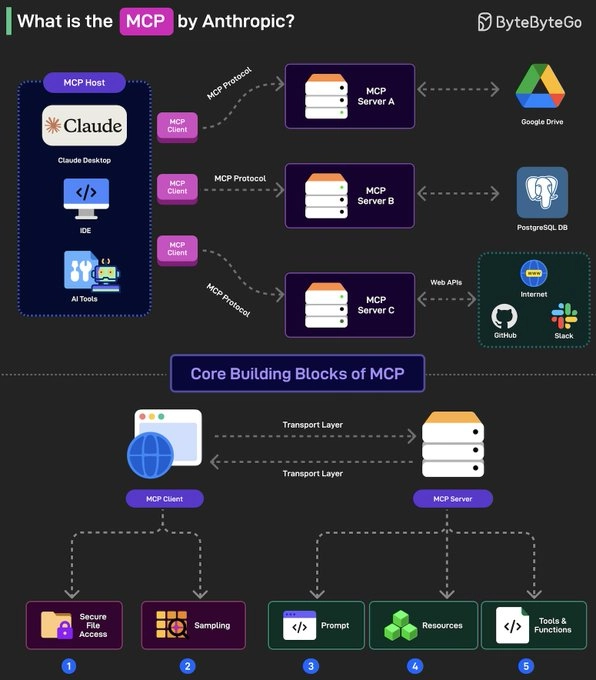

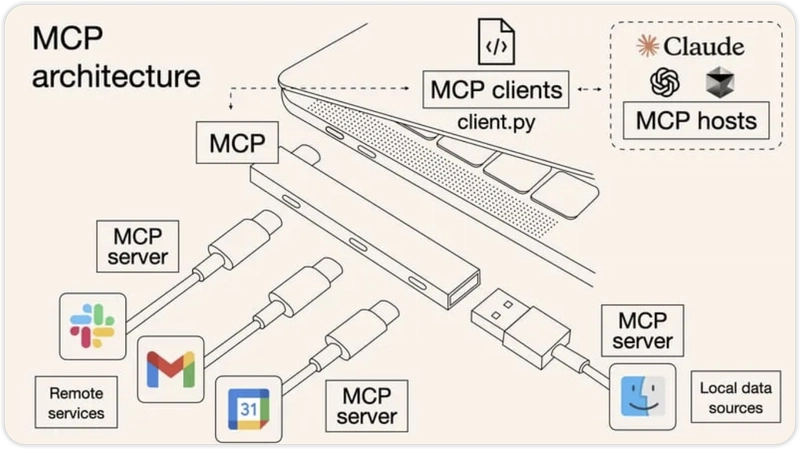

At its core, MCP follows a client-server architecture where a host application can connect to multiple servers.

Credit goes to ByteByteGo

Core Components.

Here are the core components of a general MCP setup.

- MCP Hosts: apps like Claude Desktop, Cursor, Windsurf or other AI tools that want to access data via MCP.

- MCP Clients: protocol clients that maintain 1:1 connections with MCP servers, acting as the communication bridge.

- MCP Servers: lightweight programs that each expose specific capabilities (like reading files or querying databases) through the standardized Model Context Protocol.

- Local Data Sources: files, databases and services on your computer that MCP servers can securely access. For instance, a browser automation MCP server needs access to your browser to work.

- Remote Services: external APIs and cloud-based systems that MCP servers can connect to.
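The host/client/server relationship can be sketched in a few lines of Python. This is a toy model under simplifying assumptions: servers are plain in-process objects rather than separate processes talking over stdio or HTTP, and all class names are made up for illustration. What it does capture is the shape: one host, one client per server, and the host routing each tool call to whichever client owns that tool.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToyServer:
    """Stands in for an MCP server: a named collection of tools."""
    name: str
    tools: dict[str, Callable[..., Any]]


@dataclass
class ToyClient:
    """Holds exactly one server connection (the 1:1 relationship)."""
    server: ToyServer

    def list_tools(self) -> list[str]:
        return sorted(self.server.tools)

    def call_tool(self, tool: str, **kwargs: Any) -> Any:
        return self.server.tools[tool](**kwargs)


@dataclass
class ToyHost:
    """The app (IDE, desktop chat app, ...) that owns many clients."""
    clients: dict[str, ToyClient] = field(default_factory=dict)

    def connect(self, server: ToyServer) -> None:
        # A new client is created for every server the host connects to.
        self.clients[server.name] = ToyClient(server)

    def call(self, tool: str, **kwargs: Any) -> Any:
        # Route the call to whichever client's server exposes this tool.
        for client in self.clients.values():
            if tool in client.server.tools:
                return client.call_tool(tool, **kwargs)
        raise KeyError(f"no connected server exposes {tool!r}")


host = ToyHost()
host.connect(ToyServer("files", {"read_file": lambda path: f"contents of {path}"}))
host.connect(ToyServer("slack", {"post_message": lambda channel, text: f"#{channel}: {text}"}))
print(host.call("post_message", channel="general", text="hi"))
```

Connecting a files server and a Slack server gives the host two independent clients; a failure or restart of one connection does not touch the other.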

If you want to dig deeper into the architecture, check out the official docs. They cover protocol layers, the connection lifecycle and error handling, along with the overall implementation.

We will cover the essentials here, but if you want to read more about MCP, check out these two blog posts:

- What is the Model Context Protocol (MCP)? by the Builder.io team

- MCP: What It Is and Why It Matters by Addy Osmani

3. How does MCP work under the hood?

The MCP ecosystem comes down to a few key players that work together. Let's look at each of them briefly.

⚡ Clients.

Clients run inside the apps you actually use, like Cursor, Claude Desktop and others. Their job is to:

- Request available capabilities from an MCP server

- Present those capabilities (tools, resources, prompts) to the AI model

- Relay the AI's tool usage requests back to the server and return the results

- Provide models with the basic MCP protocol overview for consistent interaction

They handle communication between the parts of the system: the user, the AI model and the MCP server.
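Under the hood, those client jobs are JSON-RPC 2.0 messages. Below is a minimal sketch of the two requests a client sends most often: `tools/list` to discover capabilities and `tools/call` to relay a tool call the model asked for. The method names and the `name`/`arguments` params follow the MCP specification; the `ToyRpcClient` class and the `search_emails` tool are hypothetical, and a real client would also perform the `initialize` handshake and actually send these messages over a transport rather than just building strings.

```python
import itertools
import json
from typing import Any, Optional


class ToyRpcClient:
    """Builds MCP-style JSON-RPC 2.0 request strings; sending them is out of scope."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)  # each JSON-RPC request needs a unique id

    def _request(self, method: str, params: Optional[dict[str, Any]] = None) -> str:
        msg: dict[str, Any] = {"jsonrpc": "2.0", "id": next(self._ids), "method": method}
        if params is not None:
            msg["params"] = params
        return json.dumps(msg)

    def list_tools(self) -> str:
        # Discovery: ask the server which tools it exposes.
        return self._request("tools/list")

    def call_tool(self, name: str, arguments: dict[str, Any]) -> str:
        # Relay: forward the tool name and arguments the model chose.
        return self._request("tools/call", {"name": name, "arguments": arguments})


rpc = ToyRpcClient()
print(rpc.list_tools())
print(rpc.call_tool("search_emails", {"sender": "Anmol"}))
```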

⚡ Servers.

MCP servers act as intermediaries between the user (or AI) and external services. They:

- Offer a standardized JSON-RPC interface for tool and resource access

- Convert existing APIs into MCP-compatible capabilities without requiring API changes

- Handle authentication, capability definitions and communication standards

They provide context, tools and prompts to clients.
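On the other side, a server is essentially a dispatcher. This sketch wraps an existing, unmodified Python function (a hypothetical `lookup_weather` standing in for a third-party API) as an MCP-style tool and answers `tools/list` and `tools/call` requests. The result shapes (a `tools` list with `inputSchema`; `content` blocks of `type: "text"`) mirror the MCP specification, but everything else is simplified: no initialization, no transport, no auth.

```python
import json
from typing import Any, Callable


def lookup_weather(city: str) -> str:
    """Pretend third-party API; it knows nothing about MCP."""
    return f"Sunny in {city}"


# Tool registry: name -> (definition advertised to clients, function to run).
TOOLS: dict[str, tuple[dict[str, Any], Callable[..., str]]] = {
    "get_weather": (
        {
            "name": "get_weather",
            "description": "Get the current weather for a city.",
            "inputSchema": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
        lookup_weather,
    ),
}


def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request string with one response string."""
    req = json.loads(raw)
    resp: dict[str, Any] = {"jsonrpc": "2.0", "id": req.get("id")}
    if req["method"] == "tools/list":
        resp["result"] = {"tools": [definition for definition, _ in TOOLS.values()]}
    elif req["method"] == "tools/call":
        name = req["params"]["name"]
        if name not in TOOLS:
            # This sketch reports an unknown tool as "invalid params" (-32602).
            resp["error"] = {"code": -32602, "message": f"Unknown tool: {name}"}
        else:
            _, fn = TOOLS[name]
            text = fn(**req["params"].get("arguments", {}))
            resp["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        # Standard JSON-RPC "method not found" error code.
        resp["error"] = {"code": -32601, "message": f"Method not found: {req['method']}"}
    return json.dumps(resp)
```

Note that `lookup_weather` itself never changed. All the MCP-specific code lives in the registry and in `handle`, which is what "convert existing APIs without requiring API changes" means in practice.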

⚡ Service providers.

These are external systems or platforms (like Discord, Notion, Figma) that perform actual tasks. They don’t change their APIs for MCP.

This whole setup allows developers to plug any compatible API into any MCP-aware client, avoiding dependence on centralized integrations by large AI providers.

MCP Building Blocks: Tools, Resources and Prompts

⚡ Tools.

Tools represent actions an AI can perform such as search_emails or create_issue_linear. They form the foundation of how models execute real-world functions through MCP.
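A tool is advertised as a name, a human-readable description and a JSON Schema for its inputs (`inputSchema` in the MCP specification). That schema is what lets a client catch the "wrong parameter, missed field" mistakes described earlier before anything runs. The sketch below checks only required and unknown keys for a hypothetical `create_issue_linear` definition; note that it rejects fields not listed in `properties`, which is stricter than JSON Schema's default, and a real implementation would use a full JSON Schema validator.

```python
from typing import Any

# Hypothetical tool definition in the MCP shape: name, description, inputSchema.
CREATE_ISSUE: dict[str, Any] = {
    "name": "create_issue_linear",
    "description": "Create an issue in a Linear team.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "team": {"type": "string"},
            "title": {"type": "string"},
            "priority": {"type": "integer"},
        },
        "required": ["team", "title"],
    },
}


def check_arguments(tool: dict[str, Any], args: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the call looks valid."""
    schema = tool["inputSchema"]
    known = set(schema.get("properties", {}))
    problems = [f"missing required field: {f}" for f in schema.get("required", []) if f not in args]
    problems += [f"unknown field: {f}" for f in sorted(set(args) - known)]
    return problems
```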

⚡ Resources.

Resources represent any kind of data that an MCP server wants to make available to clients. This can include:

- File contents

- Database records

- API responses

- Live system data

- Screenshots and images

- Log files

- And more

Each resource is identified by a unique URI (like file://user/prefs.json) and can hold project notes, coding preferences or anything else specific to you. It contains either text or binary data.

These URIs follow this format:

`[protocol]://[host]/[path]`

For example:

- `file:///home/user/documents/report.pdf`

- `postgres://database/customers/schema`

- `screen://localhost/display1`

Servers can also define their own custom URI schemes. You can read more in the official docs.
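Because resource URIs follow the usual `[protocol]://[host]/[path]` shape, standard URL tooling can take them apart. Here Python's `urllib.parse.urlsplit` splits the three example URIs; note that `file:///...` has an empty host, which is why it starts with three slashes. `urlsplit` does not check schemes against any registry, so custom schemes like `screen://` split the same way.

```python
from urllib.parse import urlsplit

EXAMPLES = [
    "file:///home/user/documents/report.pdf",
    "postgres://database/customers/schema",
    "screen://localhost/display1",
]


def describe(uri: str) -> tuple[str, str, str]:
    """Split a resource URI into (protocol, host, path)."""
    parts = urlsplit(uri)
    return parts.scheme, parts.netloc, parts.path


for uri in EXAMPLES:
    print(describe(uri))
```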

⚡ Prompts.

Tools let the AI do stuff, but prompts guide the AI on how to behave while doing it.

They act like instructions to the model during tool usage: operational guides that help the AI follow specific styles, workflows or safety protocols, such as running through a safety checklist before hitting that delete_everything button.
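In the MCP specification, a prompt is a named template with optional arguments; a `prompts/get` request fills it in and returns ready-to-send messages. This sketch models that flow for a hypothetical `destructive_action_checklist` prompt in the spirit of the delete_everything example. The result shape (`messages` with a `role` and a text `content` block) follows the spec; the prompt text and the `get_prompt` helper are made up for illustration.

```python
from typing import Any

# Hypothetical prompt registry: name -> description, declared arguments, template.
PROMPTS: dict[str, dict[str, Any]] = {
    "destructive_action_checklist": {
        "description": "Safety checklist before running a destructive tool.",
        "arguments": [{"name": "action", "required": True}],
        "template": (
            "Before running {action}: 1) restate exactly what will be deleted, "
            "2) confirm a backup exists, 3) ask the user for explicit approval."
        ),
    }
}


def get_prompt(name: str, arguments: dict[str, str]) -> dict[str, Any]:
    """Build a prompts/get-style result from a stored template."""
    prompt = PROMPTS[name]
    for arg in prompt["arguments"]:
        if arg.get("required") and arg["name"] not in arguments:
            raise ValueError(f"missing required argument: {arg['name']}")
    text = prompt["template"].format(**arguments)
    return {
        "description": prompt["description"],
        "messages": [{"role": "user", "content": {"type": "text", "text": text}}],
    }
```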


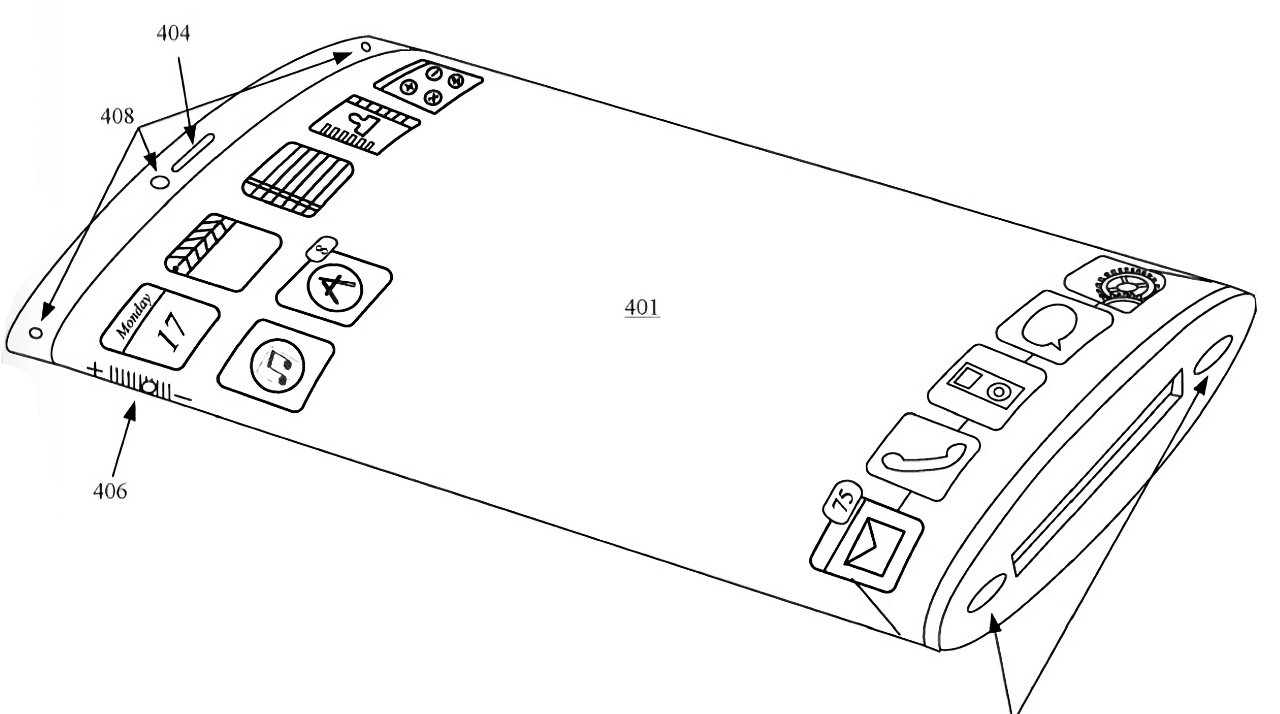
